Transformers have revolutionized the field of natural language processing (NLP) and have become a fundamental architecture for tasks such as translation, classification, and text generation. In this article, we'll delve into the intricacies of the Transformer architecture, aiming to provide a detailed understanding that will help in technical interviews and practical applications.

Introduction to Transformer Architecture

The Transformer architecture, introduced in the paper “Attention Is All You Need” by Vaswani et al. (2017), has redefined how we approach sequence-to-sequence tasks. Unlike traditional RNNs and LSTMs, Transformers rely entirely on self-attention mechanisms to model relationships between elements in a sequence, enabling efficient parallelization and improved performance.

Key Components of Transformer Architecture

1. Tokenization and Token Embeddings

Before any processing begins, the input text is tokenized. Tokenization splits the text into smaller units called tokens. For example, the WordPiece algorithm splits a sentence into words or subwords (tokens), each mapped to a unique integer ID from a predefined vocabulary.

The following image illustrates this process:

In this example, each word in the sentence “The Quick Brown Fox Jumps High” is tokenized, and each resulting token ID is mapped to its corresponding embedding vector.
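To make the two steps concrete, here is a minimal Python/NumPy sketch of WordPiece-style greedy longest-match-first tokenization followed by an embedding lookup. The tiny vocabulary, the whitespace-only word splitting, and the random embedding table are illustrative assumptions. A real WordPiece vocabulary is learned from data, real tokenizers also split punctuation, and the embedding table is learned during training. The `##` prefix for word-internal pieces follows the convention used by BERT's WordPiece.

```python
import numpy as np

# Hypothetical toy vocabulary. "##" marks a piece that continues a word.
VOCAB = {"[UNK]": 0, "the": 1, "quick": 2, "brown": 3, "fox": 4,
         "jump": 5, "##s": 6, "high": 7}

def wordpiece_tokenize(word, vocab):
    """Greedy longest-match-first split of one word into subword tokens."""
    tokens, start = [], 0
    while start < len(word):
        end, piece = len(word), None
        # Shrink the candidate from the right until it is in the vocabulary.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return ["[UNK]"]  # no valid split: the whole word becomes unknown
        tokens.append(piece)
        start = end
    return tokens

def tokenize(text, vocab):
    # Lowercase and split on whitespace only (a simplification).
    tokens = []
    for word in text.lower().split():
        tokens.extend(wordpiece_tokenize(word, vocab))
    return tokens

tokens = tokenize("The Quick Brown Fox Jumps High", VOCAB)
ids = [VOCAB[t] for t in tokens]

# Embedding lookup: one row per vocabulary entry (random here, learned in practice).
d_model = 8
rng = np.random.default_rng(0)
embedding_table = rng.normal(size=(len(VOCAB), d_model))
token_embeddings = embedding_table[ids]  # shape (num_tokens, d_model)
```

With this toy vocabulary, "Jumps" is split into `jump` and `##s`. This shows how a word that is not in the vocabulary can still be represented by subword pieces.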

2. Positional Encoding

Since Transformers have no inherent recurrence or convolution, they use positional encodings to inject information about each token's position in the sequence. This lets the model take token order into account, which is essential for processing language.

Characteristics of Positional Embeddings

Unlike RNNs, which process tokens sequentially, Transformers process all tokens in parallel, and positional embeddings preserve the order information that this parallel processing would otherwise lose. This parallelism leads to significant speedups, especially in training. In the original Transformer architecture, positional encodings are fixed and computed using sine and cosine functions, although some Transformer variants learn positional embeddings during training instead. Because sine and cosine are periodic, Vaswani et al. hypothesized that the sinusoidal encodings would let the model extrapolate to sequences longer than those seen during training. The encodings also vary smoothly with position: for any fixed offset k, the encoding of position pos + k is a linear function of the encoding of position pos. This helps the model capture the relative positions of tokens.

The token embeddings and positional encodings have the same dimensionality and are combined by element-wise addition to form the final input embeddings. These vectors are then fed into the subsequent layers of the Transformer.
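Below is a minimal NumPy sketch of the fixed sinusoidal encodings from the original paper, followed by the element-wise addition described above. The encodings are PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The random `token_embeddings` array is a stand-in for real looked-up embeddings, and the function assumes an even `d_model`.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len, d_model):
    """Fixed sine/cosine encodings; assumes d_model is even."""
    positions = np.arange(seq_len)[:, None]       # (seq_len, 1)
    two_i = np.arange(0, d_model, 2)[None, :]     # (1, d_model/2), the values 2i
    angles = positions / np.power(10000.0, two_i / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions
    pe[:, 1::2] = np.cos(angles)  # odd dimensions
    return pe

seq_len, d_model = 6, 8
rng = np.random.default_rng(0)
token_embeddings = rng.normal(size=(seq_len, d_model))  # stand-in for real embeddings
pe = sinusoidal_positional_encoding(seq_len, d_model)
input_embeddings = token_embeddings + pe  # element-wise addition, same shape
```

The two arrays share the shape (seq_len, d_model), so the sinusoidal version adds no parameters. A learned variant would replace `pe` with a trainable table of the same shape.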

The Self-Attention Mechanism

A key innovation of Transformers is the self-attention mechanism, which allows the model to weigh the importance of different tokens in a sequence. This mechanism is crucial for capturing dependencies regardless of the distance between tokens.

1. Multi-Head Attention

Transformers use multiple heads in the attention mechanism to capture different aspects of the relationships between tokens. Each head performs its attention operation independently and can focus on different parts of the sentence (e.g., relationships between nouns and verbs). The head outputs are then concatenated and linearly transformed to form the final output.

For example, in the key (K) and query (Q) attention visualization below, the relationship between “making” and “difficult” is captured by some heads (shown in different colors). The relationship between “making” and “2009” is captured by entirely different heads.

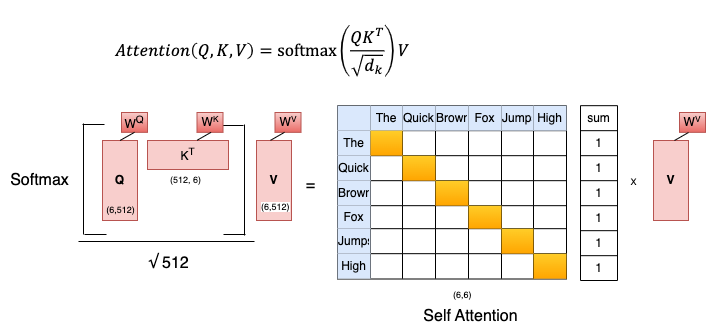
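The split, attend, concatenate, and project flow can be sketched in NumPy as follows. This is an illustrative implementation under simplifying assumptions: random weights, a single unbatched sequence, and no masking or dropout. It uses one d_model × d_model matrix per projection and slices it into heads. That is equivalent to giving each head its own smaller projection matrix, as in the paper.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, W_q, W_k, W_v, W_o, num_heads):
    """x: (seq_len, d_model); every weight matrix: (d_model, d_model)."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(m):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return m.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ W_q), split_heads(x @ W_k), split_heads(x @ W_v)
    # Each head runs scaled dot-product attention independently.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, seq, seq)
    weights = softmax(scores, axis=-1)
    heads = weights @ v                                   # (heads, seq, d_head)
    # Concatenate heads back to (seq_len, d_model), then apply the output projection.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ W_o, weights

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 5, 8, 2
x = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))
out, weights = multi_head_attention(x, W_q, W_k, W_v, W_o, num_heads)
```

Each slice `weights[h]` is a separate attention pattern. This is what visualizations like the one above color by head.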

2. Attention Calculation

The attention mechanism involves three matrices: Query (Q), Key (K), and Value (V), all derived from the input embeddings through learned linear projections. The attention scores are computed as the dot product of the queries and keys, scaled by the square root of the key dimensionality d_k, and passed through a softmax function. The resulting weights are then used to take a weighted sum of the values: Attention(Q, K, V) = softmax(QKᵀ / √d_k) V.
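Here is a minimal NumPy sketch of the formula Attention(Q, K, V) = softmax(QKᵀ / √d_k) V for a single head. The random projection matrices `W_Q`, `W_K`, and `W_V` stand in for learned weights, and the shapes are arbitrary illustrative choices.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v) -> output (n_q, d_v)."""
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # similarity of every query with every key
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights         # weighted sum of the values

# Q, K, V are projections of the input embeddings X (random stand-ins here).
rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))  # 4 tokens, d_model = 8
W_Q, W_K, W_V = (rng.normal(size=(8, 8)) for _ in range(3))
output, weights = scaled_dot_product_attention(X @ W_Q, X @ W_K, X @ W_V)
```

The √d_k scaling keeps dot products from growing with dimensionality. Without it, large scores would push the softmax into regions with very small gradients.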

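Characteristics of Self-Attention

- Permutation Equivariant: Self-attention by itself has no notion of token order. Permuting the input tokens simply permutes the outputs in the same way, so the same set of tokens produces the same set of outputs regardless of their order. This is why positional encodings are needed.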

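- Parameter-Free (in its simplest form): When the embeddings themselves serve as queries, keys, and values, self-attention introduces no new parameters. The interaction between words is then driven entirely by their embeddings and positional encodings. The standard Transformer does add learned projection matrices for Q, K, and V.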

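- Diagonal Dominance: When no separate projections are used, each token's query and key are the same vector. The values along the diagonal of the attention matrix therefore tend to be the highest, meaning each word attends most strongly to itself. With learned projections this is not guaranteed.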

- Masking: To prevent certain positions from interacting, their scores can be set to −∞ before applying the softmax function, which gives them zero attention weight. This is particularly useful in the decoder to prevent attending to future tokens during training.
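The permutation and masking properties can be checked numerically. The sketch below assumes the simplest, projection-free form of self-attention, in which the queries, keys, and values are all the raw input `X`. It uses a lower-triangular boolean mask as the decoder-style causal mask. The assertions confirm two things: shuffling the inputs shuffles the outputs identically, and masked (future) positions receive zero weight.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, mask=None):
    """Projection-free self-attention: Q = K = V = X."""
    scores = X @ X.T / np.sqrt(X.shape[-1])
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)  # -inf becomes 0 after softmax
    weights = softmax(scores, axis=-1)
    return weights @ X, weights

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 4))

# Permutation equivariance: reordering input rows reorders output rows the same way.
perm = rng.permutation(5)
out, _ = self_attention(X)
out_perm, _ = self_attention(X[perm])
assert np.allclose(out_perm, out[perm])

# Causal mask: position i may attend only to positions j <= i.
causal_mask = np.tril(np.ones((5, 5), dtype=bool))
_, masked_weights = self_attention(X, causal_mask)
assert np.allclose(np.triu(masked_weights, k=1), 0.0)  # no weight on future tokens
```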

Enhancements in Transformer Architectures: Introduction of Special Tokens

The original “Attention Is All You Need” paper by Vaswani et al. (2017) introduced the groundbreaking Transformer architecture, and subsequent models have added further mechanisms to enhance its functionality. Notably, models such as BERT (Bidirectional Encoder Representations from Transformers) introduced the special tokens [CLS] and [SEP] to handle various natural language processing tasks more effectively.

The [CLS] Token

The [CLS] token stands for “classification” and is a special token added at the beginning of the input sequence. Its primary purpose is to serve as a representative summary of the entire sequence. In BERT and similar models, the final hidden state corresponding to the [CLS] token is typically used for classification tasks. For instance, in a sentiment analysis task, the hidden state of the [CLS] token can be fed into a classifier to predict the sentiment of the sentence.

Usage Scenarios:

Single Sentence: [CLS] This is a single sentence. [SEP]

Sentence Pair: [CLS] This is the first sentence. [SEP] This is the second sentence. [SEP]

In both cases, the hidden state of [CLS] after processing the input encapsulates the information required for classification.
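Here is a small helper, hypothetical and for illustration only, that lays out pre-tokenized sentences in the two formats shown above. Real BERT tokenizers insert these tokens automatically and also convert them to vocabulary IDs; this sketch omits that step.

```python
def build_input(sentence_a, sentence_b=None):
    """Hypothetical helper: wrap pre-tokenized sentences in [CLS]/[SEP] tokens."""
    tokens = ["[CLS]"] + sentence_a + ["[SEP]"]
    if sentence_b is not None:
        # Sentence pair: the second sentence gets its own closing [SEP].
        tokens += sentence_b + ["[SEP]"]
    return tokens

single = build_input(["this", "is", "a", "single", "sentence", "."])
pair = build_input(["this", "is", "the", "first", "sentence", "."],
                   ["this", "is", "the", "second", "sentence", "."])
```

Because [CLS] is always at index 0, the model's output at that position can be read off directly for classification, whatever the input length.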

The [SEP] Token

The [SEP] token stands for “separator” and is used to distinguish different parts of the input. It is particularly useful in tasks involving multiple sentences or sentence pairs, such as question answering or next sentence prediction.

Importance and Applications:

- Classification Tasks: The hidden state of the [CLS] token provides a compact representation of the entire input sequence, making it suitable for tasks like text classification and sentiment analysis.

- Sentence Relationships: The [SEP] token helps the model recognize sentence boundaries and the relationships between sentences, which is crucial for tasks like natural language inference and question answering.
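Below is a sketch of how the [CLS] hidden state can feed a classifier, as described under Classification Tasks. The encoder output here is random. The two-class linear head (`W`, `b`) is a hypothetical stand-in for weights learned during fine-tuning. BERT itself additionally passes the [CLS] state through a small dense “pooler” layer, which this sketch omits.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())  # subtract max for numerical stability
    return e / e.sum()

rng = np.random.default_rng(0)
seq_len, d_model, num_classes = 8, 16, 2  # e.g. negative / positive sentiment

# Stand-in for the encoder's final hidden states; position 0 holds [CLS].
hidden_states = rng.normal(size=(seq_len, d_model))

# Hypothetical classifier head (learned during fine-tuning in practice).
W = rng.normal(size=(d_model, num_classes))
b = np.zeros(num_classes)

cls_vector = hidden_states[0]        # summary representation of the whole input
probs = softmax(cls_vector @ W + b)  # class probabilities
predicted_class = int(np.argmax(probs))
```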

Encoder and Decoder Structures

The Transformer consists of an encoder and a decoder, each composed of a stack of identical layers.

1. Encoder

Each encoder layer consists of two main components:

- Multi-Head Self-Attention Mechanism: This allows the encoder to attend to all positions in the input sequence simultaneously.

- Position-Wise Feed-Forward Network: A fully connected feed-forward network applied independently to each position.
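Here is a compact NumPy sketch of one encoder layer. Following the original paper, each sub-layer is wrapped in a residual connection followed by layer normalization, and the feed-forward network is FFN(x) = max(0, xW1 + b1)W2 + b2. To keep the sketch short, it makes several simplifying assumptions. It uses single-head attention. It omits the learned layer-norm scale and shift, dropout, and masking. It uses small random weights in place of trained ones.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-5):
    # Normalize each position's vector; learned scale/shift omitted.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def self_attention(x, W_q, W_k, W_v):
    q, k, v = x @ W_q, x @ W_k, x @ W_v
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]))
    return weights @ v

def feed_forward(x, W1, b1, W2, b2):
    # Applied to every position (row) independently.
    return np.maximum(0, x @ W1 + b1) @ W2 + b2

def encoder_layer(x, p):
    # Sub-layer 1: self-attention, then residual connection + layer norm.
    x = layer_norm(x + self_attention(x, p["W_q"], p["W_k"], p["W_v"]))
    # Sub-layer 2: position-wise feed-forward network, same wrapping.
    return layer_norm(x + feed_forward(x, p["W1"], p["b1"], p["W2"], p["b2"]))

rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 5, 8, 32
shapes = {"W_q": (d_model, d_model), "W_k": (d_model, d_model),
          "W_v": (d_model, d_model), "W1": (d_model, d_ff), "b1": (d_ff,),
          "W2": (d_ff, d_model), "b2": (d_model,)}
params = {name: rng.normal(scale=0.1, size=shape) for name, shape in shapes.items()}
x = rng.normal(size=(seq_len, d_model))
y = encoder_layer(x, params)
```

The output has the same shape as the input, which is what allows identical layers to be stacked.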

2. Decoder

The decoder has similar components, with some modifications:

- Masked Multi-Head Self-Attention: Prevents each position from attending to future tokens in the sequence during training.

- Encoder-Decoder Attention: Allows the decoder to focus on relevant parts of the input sequence. In this multi-head attention layer, the Keys and Values come from the encoder's output, while the Queries come from the decoder's own masked multi-head self-attention layer.
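Encoder-decoder attention differs from self-attention only in where Q, K, and V come from. In this illustrative NumPy sketch, the encoder output and decoder states are random stand-ins. The attention weights have one row per target position and one column per source position.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(decoder_states, encoder_output, W_q, W_k, W_v):
    """Queries from the decoder; keys and values from the encoder."""
    Q = decoder_states @ W_q   # (tgt_len, d_k)
    K = encoder_output @ W_k   # (src_len, d_k)
    V = encoder_output @ W_v   # (src_len, d_v)
    weights = softmax(Q @ K.T / np.sqrt(K.shape[-1]))  # (tgt_len, src_len)
    return weights @ V, weights

rng = np.random.default_rng(0)
src_len, tgt_len, d_model = 6, 3, 8
encoder_output = rng.normal(size=(src_len, d_model))  # stand-in for encoder stack output
decoder_states = rng.normal(size=(tgt_len, d_model))  # stand-in for masked self-attn output
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
context, weights = cross_attention(decoder_states, encoder_output, W_q, W_k, W_v)
```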

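Training and Inference

Training

During training, a Transformer processes the entire target sequence at once. The decoder receives the correct output sequence shifted right by one position (teacher forcing), and the causal mask ensures that each position sees only earlier tokens. The model is trained to minimize the difference, typically measured with cross-entropy loss, between the predicted and actual output sequences.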
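Processing the whole sequence at once is usually implemented with teacher forcing. This sketch shows the right-shifted decoder input and a cross-entropy loss computed over every target position in one pass. The token IDs, the `BOS`/`EOS` values, and the random logits (standing in for one forward pass of a real model) are all hypothetical.

```python
import numpy as np

BOS, EOS = 1, 2                    # hypothetical special-token IDs
target = np.array([5, 9, 7, EOS])  # hypothetical IDs for "ti amo molto </s>"

# Teacher forcing: the decoder input is the target shifted right by one,
# so position t sees the true tokens before t and must predict target[t].
decoder_input = np.concatenate([[BOS], target[:-1]])

vocab_size = 12
rng = np.random.default_rng(0)
# Stand-in for model output: one row of logits per target position,
# all produced in a single forward pass.
logits = rng.normal(size=(len(target), vocab_size))

def cross_entropy(logits, targets):
    # Row-wise log-softmax, then mean negative log-likelihood of the true tokens.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    return -log_probs[np.arange(len(targets)), targets].mean()

loss = cross_entropy(logits, target)
```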

Inference

During inference, the process is different because tokens are generated one at a time, each conditioned on the tokens generated so far. For example, to translate “I love you” into “Ti amo molto”:

Training: the entire sequence is processed in a single time step.

Inference: one token is generated per time step.
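The token-by-token inference loop can be sketched as greedy decoding. `toy_next_token` is a hypothetical lookup table standing in for a trained model's decoder. A real model would attend to the encoded source and pick the highest-probability token. The part being illustrated is the loop itself: each prediction is fed back in until an end-of-sequence token appears.

```python
BOS, EOS = "<s>", "</s>"

def toy_next_token(source, prefix):
    """Hypothetical stand-in for a trained decoder; ignores `source`."""
    table = {
        ("<s>",): "ti",
        ("<s>", "ti"): "amo",
        ("<s>", "ti", "amo"): "molto",
        ("<s>", "ti", "amo", "molto"): "</s>",
    }
    return table.get(tuple(prefix), EOS)

def greedy_decode(source, next_token_fn, max_len=10):
    prefix = [BOS]
    for _ in range(max_len):  # one time step per generated token
        token = next_token_fn(source, prefix)
        if token == EOS:
            break
        prefix.append(token)  # feed the prediction back in as input
    return prefix[1:]         # drop the start token

translation = greedy_decode("I love you", toy_next_token)
```

Greedy decoding is only one strategy. Beam search, which keeps several candidate prefixes at each step, is a common alternative.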

In conclusion, the Transformer architecture, with its self-attention mechanism and parallelizable structure, has become the backbone of modern NLP. Understanding its components, such as tokenization, positional encoding, and multi-head attention, is essential for leveraging its full potential.

References:

- Vaswani et al. (2017), “Attention Is All You Need.” https://arxiv.org/pdf/1706.03762