My goal for this project is to be able to accurately predict the price of a stock. At the end of the code, I want there to be a function that predicts the price of any stock at the user's request. The request includes the start date and the end date, so it could be from the beginning of the company to the day of the request.

So how do we do this? There are a few key parts to my project.

- Get the data

- Get to know how to use the data

- Predict on the data (using the best model out of 5 model choices)

- Make a big function that someone could use to easily do all the little things my project does at once

Along the way, I intend to learn how to analyze time series data, and also understand how time series data works.

Let us Begin!!!

For this, I'll write a simple function that uses the pandas_datareader library. It just makes life easy by letting me input a start date and an end date and then getting the data. I don't know how often it's updated, I think it's just based on the most recent data, but frankly I don't care.

import datetime as dt

import pandas_datareader.data as web

import yfinance as yfin

def save_to_csv(ticker, syear, smonth, sday, eyear, emonth, eday):

    start = dt.datetime(syear, smonth, sday)

    end = dt.datetime(eyear, emonth, eday)

    yfin.pdr_override() # I don't know exactly what this line does, it was from Stack Overflow because the code wasn't working (it makes pandas_datareader fetch through yfinance)

    df = web.get_data_yahoo(ticker, start, end) # we overrode this with yfinance

    df.to_csv("/Users/rish/Finance/Project" + ticker + ".csv") # check if this works, do you need to add the file path?

    return df

Now that we have this function, let's get the data and analyze it.

save_to_csv("AMZN", 2020, 9, 5, 2024, 1, 1)

Analyzing stock market data, in my opinion, is unlike analyzing any other type of data. Other types of data don't include time, and if they do, they aren't really affected by it.

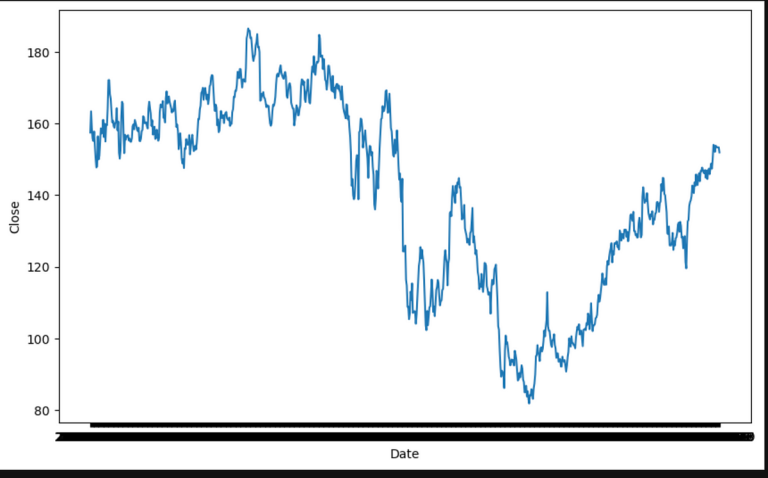

And with stock market data, the trends are clearly visible, and there isn't much to analyze, but we'll just figure out how many samples we have, and we'll reduce the data to just the date and the closing price.

import pandas as pd

df = pd.read_csv("DataAMZN.csv", usecols=["Date", "Close"])

len(df) # 587 samples

It only has 587 samples, but the difference in days between September 5th 2020 and January 1st 2024 is 1,213 days. Weekends and market holidays explain part of that gap (at roughly 250 trading days a year I'd expect something like 830 rows), but not all of it. I don't know why the rest is missing, but I also don't care. There is no way there are that many days off from the stock market, but who knows, 2020 was a weird year, and maybe this API doesn't have all the data.
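A quick way to sanity-check the sample count is to compare calendar days with weekdays. This is only a rough sketch using the standard library; it does not remove exchange holidays, so the real number of trading days will be somewhat lower than the weekday count it prints.

```python
from datetime import date, timedelta

start, end = date(2020, 9, 5), date(2024, 1, 1)

# Calendar days between the two dates (end date not included).
calendar_days = (end - start).days

# Weekdays only (Monday to Friday); exchange holidays are NOT removed here.
weekdays = sum(
    1
    for offset in range(calendar_days)
    if (start + timedelta(days=offset)).weekday() < 5
)

print(calendar_days, weekdays)
```

Either way, 587 rows is well short of the weekday count, so some data really does seem to be missing.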

Now that I think about it, and after reading an article with an example of EDA, I don't really know how to do EDA on stock market data. Also, do I really need to get to know the data? I know the size and all of that, and there's just a date column, which is of type object. That's all simple. I don't feel as if it is necessary to do EDA. And also, there is no benefit to it; all I really need to do is display the graph of the data, and then we can just see. There is no point in trying to figure out the shape of the data when there isn't any trend but (usually) going up. Let me know if this is wrong and a wrong way of thinking.

import matplotlib.pyplot as plt

import seaborn as sns

plt.figure(figsize=(10,6))

sns.lineplot(x="Date", y="Close", data=df)

Something I can see right off the top is that the faster the numbers climb, the faster they go down. But that doesn't matter, I just want to predict the future, I think. So let's split train and test. But I need to explain how before we do it.

Explanation: We can't just train test split this problem with scikit-learn. The data we're using doesn't have a target variable. Well it does, I guess, the closing price is the target variable, but the date isn't the independent variable. Think about it, there's not really an independent variable (with the data we have; maybe if we analyzed the sentiment of the news and the reports that the company has given, then we'd have one), but for now we don't.

Also, this data has time, and most other data doesn't. Since train test split shuffles the data randomly by default, the time order would get messed up, and therefore we wouldn't be able to "predict" the future since we'd have random data points in random places. Anyway, let's get to coding.
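The idea of splitting by position instead of at random can be sketched in a few lines. This is a minimal illustration with made-up numbers; `chronological_split` is a hypothetical helper name, not something from scikit-learn or from my notebook.

```python
def chronological_split(values, train_fraction=0.8):
    """Split an ordered sequence into (train, test) without shuffling."""
    split_size = round(len(values) * train_fraction)
    return values[:split_size], values[split_size:]

# Hypothetical toy data: ten consecutive days, already sorted by date.
toy_dates = [f"2023-01-{day:02d}" for day in range(1, 11)]
toy_close = [100, 101, 103, 102, 105, 107, 106, 108, 110, 109]

train_dates, test_dates = chronological_split(toy_dates)
train_close, test_close = chronological_split(toy_close)

# Every training date comes before every test date, so nothing from the
# "future" leaks into training.
print(train_dates[-1], "<", test_dates[0])
```

The only requirement is that the rows are already sorted by date before slicing.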

The Wrong Way

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(dates, close) # what do we use as a target? And the random shuffle loses the time order

The Right Way

dates, close = df["Date"], df["Close"]

split_size = round(len(dates) * 0.8) # 80% train, 20% test

dates_train = dates[:split_size]

close_train = close[:split_size]

dates_test = dates[split_size:]

close_test = close[split_size:]

print(len(dates_train), len(close_train), len(dates_test), len(close_test))

plt.scatter(dates_train, close_train)

plt.scatter(dates_test, close_test)

Now, when one looks at the code above (in my opinion, I throw up, holy crap is that ugly looking code), we can write this in a much shorter way. The idea is there, and below is the better way. They both do the exact same thing, one is just better looking code (something I'm trying to work on).

train_dates, train_close = dates[:split_size], close[:split_size]

test_dates, test_close = dates[split_size:], close[split_size:]

plt.figure(figsize=(10, 7)) # create the figure before plotting onto it

plt.scatter(train_dates, train_close, s=5)

plt.scatter(test_dates, test_close, s=5)

plt.title("AMZN")

That looks so much better. Copying and pasting that is much easier than the one above. Here are the results:

Now, the next step is to find a good model, and I'll test a few out. I'm reading through a textbook, https://otexts.com/fpp3/. Hopefully it will give me a few models; if it can't, then I'll use GPT, but I'll try and learn. It will take me a while.

Here are the algorithms I've chosen (I don't know the right name to call them, models??):

- Naive Forecast

- Drift Method

- AR Model (Autoregression)

I'll explain each and then I'll implement them. I've used the naive forecast before, drift is a variation of the naive forecast, and the AR Model is something I found in the reading (and by asking ChatGPT). I'll explain what I asked ChatGPT when I'm explaining the AR Model.

Naive Forecast

I've used the naive forecast, and it's really popular because of its simplicity and usability. Life is simple with the naive forecast. (Despite the similar name, it has nothing to do with the Naive Bayes classifier.) It is an algorithm that simply uses the previous value as the next forecast value. It's represented by the equation:
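Written out, the rule described above (the forecast for a step is simply the value observed one step earlier) looks like this. The notation is an assumption: $\hat{y}_{t}$ is the forecast for time $t$ and $y_{t-1}$ is the value observed one step before.

```latex
\hat{y}_{t} = y_{t-1}
```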

And it's super easy to implement, I think. I think that you just set the previous one to the next one by shifting the whole thing by 1 day so that it just continues its trend. Or you put the beginning of the forecast at the end of the forecast. I think, but I'll look at an example to figure out how to do it. Before we do that, the notebook I was looking through has a nice plot function, so I'm going to be using it. Credit to the great Daniel Bourke for this.

# Create a function to plot time series data

def plot_time_series(timesteps, values, format='.', start=0, end=None, label=None):

    """

    Plots timesteps (a series of points in time) against values (a series of values across timesteps).

    Parameters

    ---------

    timesteps : array of timesteps

    values : array of values across time

    format : style of plot, default "."

    start : where to start the plot (setting a value will index from start of timesteps & values)

    end : where to end the plot (setting a value will index from end of timesteps & values)

    label : label to show on plot of values

    """

    # Plot the series

    plt.plot(timesteps[start:end], values[start:end], format, label=label)

    plt.xlabel("Time")

    plt.ylabel("AMZN Price")

    if label:

        plt.legend(fontsize=14) # make label bigger

    plt.grid(True)

Now let's get to doing the naive forecast. Again, the notebook is by Daniel Bourke. We just need to set the index back by one? I think that's what this code does.

dates = df["Date"]

close = df["Close"]

split_size = round(len(dates) * 0.8)

train_dates, train_close = dates[:split_size], close[:split_size]

test_dates, test_close = dates[split_size:], close[split_size:]

# This code is from the notebook

naive_forecast = test_close[:-1] # every test value except the last; plotted one step later below

plt.figure(figsize=(10, 7))

plot_time_series(timesteps=train_dates, values=train_close, label="Train data")

plot_time_series(timesteps=test_dates, values=test_close, label="Test data")

plot_time_series(timesteps=test_dates[1:], values=naive_forecast, format="-", label="Naive forecast");

We can see that it's really, really accurate. (Part of why it looks so good is that each prediction is just the previous day's actual price, so it can never be off by more than one day's move.) There will be nothing this accurate, but we can try and see, and even if there is, there will be nothing this simple that is this accurate. This is a very powerful algorithm. Next up is the Drift Method.
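To put a number on "really accurate" instead of eyeballing the plot, the naive forecast can be scored with mean absolute error. This is a minimal sketch on made-up prices; `naive_forecast_mae` is a hypothetical helper name and the numbers are not real AMZN data.

```python
import numpy as np

def naive_forecast_mae(values):
    """Mean absolute error of predicting each value with the previous one."""
    values = np.asarray(values, dtype=float)
    forecast = values[:-1]   # yesterday's price...
    actual = values[1:]      # ...used as the guess for today
    return float(np.mean(np.abs(actual - forecast)))

# Hypothetical closing prices, not real AMZN data.
toy_test_close = [150.0, 152.0, 151.0, 155.0, 154.0]
print(naive_forecast_mae(toy_test_close))
```

The same score can then be computed for any other model on the same test values, which gives a fair baseline to beat.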

Drift Method

The drift method is a variation of the naive forecast. It "drifts" the forecast, allowing it to "increase or decrease over time". The equation for this is much, much more complicated and I really have no clue how it works, but I'll use ChatGPT to figure out what it means. Here is the equation:
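For reference, the drift method as I understand it from the FPP3 textbook can be written as follows. The symbols are my reading of the textbook's notation: $T$ is the number of observations so far, $y_1$ and $y_T$ are the first and last observed values, and $h$ is how many steps ahead the forecast is.

```latex
\hat{y}_{T+h \mid T} = y_T + h \left( \frac{y_T - y_1}{T - 1} \right)
```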

You basically just use a line and predict into the future with some rate of change up or down. I think a better wording for it would be a number that allows for some change in the forecast. I don't know, it's just a number to push the individual forecasts (I think) up or down. What that value is represented by in the equation I don't know yet. But I think it's the h(blah blah blah) part. I think, but you can correct me if I'm wrong.

Anyway, let us implement it. I think I just need to do what I did before and then add that (yT - y1)/(T - 1) to each value, or something. But I need to know what these values are. After looking it up in GPT, I was correct: h is just the number of steps ahead you want to forecast. Which makes sense. So we could set the h value to the length of our dataset - 1 and then find the average change over time, which is the (yT - y1)/(T - 1). But I don't think we need to do that; if you look at the naive forecast equation, I think we just add the drift value to it. So let us do that in code. We just copy the code and then find the average change by doing something in pandas or numpy or something, I don't know. Let's code it!

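df["shifted_column"] = df["Close"].shift() # yesterday's close on today's row

df["difference"] = df["Close"] - df["shifted_column"] # day-to-day change

df["difference"] = df["difference"].abs() # note: abs() makes this the average size of a move; (yT - y1)/(T - 1) would use the signed differences

average_roc = df["difference"].mean()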

The above code finds the average (absolute) day-to-day change. Since we're just doing the entire test dataset we don't need an h value. I think. Now let's do the drift forecast.

drift_forecast = test_close[:-1] + average_roc

As you can see, we just add the average rate of change (roc) to the forecast. Let's plot.

plot_time_series(timesteps=train_dates, values=train_close, label="Train data")

plot_time_series(timesteps=test_dates, values=test_close, label="Test data")

plot_time_series(timesteps=test_dates[1:], values=drift_forecast, format="-", label="Drift forecast");

As you can see from the plot, it just shifted the whole predicted forecast up a certain amount. And that certain amount is the average rate of change. I think this is correct, correct me if I'm wrong. Now let us go on to our AR Model (Autoregression).
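For comparison, here is a minimal sketch of the drift method the way the (yT - y1)/(T - 1) formula reads: start from the last training value and add h times the average signed change. The function name `textbook_drift` and the toy numbers are made up for illustration. Note that it uses signed differences (no `abs()`), so the line can drift down as well as up, and that it only looks at training values.

```python
import numpy as np

def textbook_drift(train_values, horizon):
    """Forecast `horizon` steps ahead with the drift method."""
    y = np.asarray(train_values, dtype=float)
    # Average signed change per step: (y_T - y_1) / (T - 1).
    slope = (y[-1] - y[0]) / (len(y) - 1)
    steps = np.arange(1, horizon + 1)      # h = 1, 2, ..., horizon
    return y[-1] + steps * slope

# Hypothetical training prices, not real AMZN data.
toy_train = [100.0, 104.0, 102.0, 109.0]
print(textbook_drift(toy_train, horizon=3))

# The slope is the same as the mean of the signed day-to-day differences.
print(np.mean(np.diff(toy_train)))
```

In other words, the drift forecast is a straight line drawn from the first training point through the last one and extended into the future.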

AR Model (Autoregression)

Autoregression is a regression technique that uses past values to predict future values. The main idea is that there is a linear relationship between the past observations (the past values) and the current value.
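In symbols, an autoregression of order $p$ is usually written as below. The notation is the standard textbook one and is an assumption here: $c$ is a constant, $\phi_1, \dots, \phi_p$ are the coefficients on the past values, and $\varepsilon_t$ is the random error term.

```latex
y_t = c + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \dots + \phi_p y_{t-p} + \varepsilon_t
```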

So, this looks really confusing, and I'll try (to the best of my understanding) to explain it; please correct me if I'm wrong. So, we have c, the weird O (φ, phi) and yt-1. yt is the current value of the time series, the thing we want to predict, and yt-1, yt-2, yt-3, ..., yt-p are just past time series values. These are called lagged values, but they just mean past time series values. The weird O represents the coefficients of the model. They quantify the influence of the past values on the current (to be predicted) value. They just scale each past value up or down, something to weight the past values. I think. Then we have c; the c value just moves the whole thing up or down. The whole graph. Then we have that E (ε, epsilon), which is just the error value, the surprise value, the random shock that affects the whole thing.

Now the whole premise (I think) of this AR model hubba jubba is to create an autocorrelation coefficient. This coefficient is between a lagged value and the current value. The higher the number, the greater the correlation between those two values in time. This value gives insight into the temporal dependence of the data. Wow, that sounds cool to say.
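The autocorrelation coefficient at a given lag can be computed by hand, which makes it less mysterious. This is a minimal sketch of the usual sample autocorrelation formula on a made-up series; `autocorrelation` is a hypothetical helper name. As far as I know this is the same kind of estimate an ACF plot shows, while pandas `.corr()` on a shifted column computes a slightly different number because it only uses the overlapping pairs.

```python
import numpy as np

def autocorrelation(values, lag):
    """Sample autocorrelation of a series at the given lag (lag >= 0)."""
    y = np.asarray(values, dtype=float)
    centered = y - y.mean()
    # Numerator: how current and lagged values move together around the mean.
    numerator = np.sum(centered[lag:] * centered[: len(y) - lag])
    # Denominator: total variation of the series around its mean.
    denominator = np.sum(centered ** 2)
    return float(numerator / denominator)

# Hypothetical series that trends steadily upward.
toy_series = [1, 2, 3, 4, 5, 6, 7, 8]
print(autocorrelation(toy_series, 0))  # lag 0 compares the series with itself
print(autocorrelation(toy_series, 1))
```

A value near 1 means a value and the one `lag` steps before it tend to sit on the same side of the mean; a value near 0 means knowing the past value tells you little.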

Now I have zero clue how to do this in code. I kind of understand the concept: using a bunch of effector values that just kind of show how much the past affects the future, or something along those lines. I think. I doubt that this model will do any better than the other two, but let's give it a try.

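# df_for_use is the smaller sample of df (50 data points); creating it isn't shown here

for i in range(1, 20):

    df_for_use[f'Lag_{i}'] = df_for_use["Close"].shift(i)

df_for_use.dropna(inplace=True) # the first 19 rows have missing lags, so drop them

train_size = int(0.8 * len(df_for_use))

train_data = df_for_use[:train_size]

test_data = df_for_use[train_size:]

y_train = train_data["Close"]

y_test = test_data["Close"]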

So we used a smaller sample size of 50 data points. The first two lines of code shift the dataframe and give us those lagged values; the rest is just splitting the data. So what I get from this is we just need to create lagged values by shifting the whole thing by i. Now we just plot the ACF (autocorrelation function) using statsmodels. It's gonna be magical.

from statsmodels.graphics.tsaplots import plot_acf

series = df_for_use["Close"]

plot_acf(series)

plt.show()

I have zero clue what that plot means, let me read and find out really quickly. This part is after I've read a little bit about it. So, the horizontal axis (which I probably should have labelled) is the lag. So past values, so 16 means (I think) 16 steps back. The little line-and-dot thing that looks like it's sticking out of the horizontal axis is the correlation coefficient. I believe (at 16) this means there is a negative correlation between the lagged value (at 16) and the current value, meaning it probably pulls the current value down with a negative slope. Maybe I'm thinking of this completely wrong, but I can visualize a line with a negative slope, and this line is the relationship between the lagged values and the current value. So now we need to just train a model, but before we do that I have more information that I think is cool.

So we see this blue shaded region. According to my reading, this is a confidence zone: on our plot it sits between about 0.50 and -0.50 for the autocorrelation coefficient (so far I have forgotten to write the word auto). Lags whose bars stay inside the band can't really be told apart from zero, so I guess that's the "nothing to see here" area. The bars that stick out way above or below it are the significant lags, the ones we can be confident are real. We can find the exact value: for example, on our graph we see that lag 1 has a really high autocorrelation coefficient, and we can check this in code.
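A rough way to see where a band like that comes from: if the series were pure random noise, its sample autocorrelations would mostly land within about ±1.96/√N of zero, where N is the number of observations. This is only the simple large-sample approximation, and `white_noise_band` is a hypothetical helper name. As far as I know, `plot_acf` by default uses Bartlett's formula instead, which makes the band widen at longer lags, so its numbers will not match this exactly.

```python
import math

def white_noise_band(n_observations, z=1.96):
    """Approximate 95% band for the autocorrelation of pure noise."""
    return z / math.sqrt(n_observations)

# With about 50 data points the band is a bit under +/- 0.3.
print(round(white_noise_band(50), 3))
```

This also hints at why dataset size matters: the more observations there are, the narrower the band, and the easier it is for a real correlation to stand out.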

df_for_use["Close"].corr(df_for_use["Close"].shift(1))

# 0.6932489805617724 (output)

We see that the autocorrelation coefficient is much higher than our confidence zone. So now that I got that out of the way let us train our model; of course, we'll do it on the small dataset.

import numpy as np

from statsmodels.tsa.ar_model import AutoReg

from sklearn.metrics import mean_absolute_error, mean_squared_error

lag_order = 1 # lag 1 had the highest autocorrelation coefficient

ar_model = AutoReg(y_train, lags=lag_order)

ar_results = ar_model.fit()

We import all the necessary dependencies, and then we use the lag_order value, which we found was the one with the highest autocorrelation coefficient. We then just plug in the y_train values (there is no x_train in time series) and give it a lag; we'll use one again because of what I explained earlier. Then we fit the model.

y_pred = ar_results.predict(start=len(train_data), end=len(train_data) + len(test_data) - 1, dynamic=False)

mae = mean_absolute_error(y_test, y_pred)

rmse = np.sqrt(mean_squared_error(y_test, y_pred))

print(f'Mean Absolute Error: {mae:.2f}')

print(f'Root Mean Squared Error: {rmse:.2f}')

We see that we start at the beginning of the test data, since we trained our model on our training data (obviously), and we end at the end of our test data (obviously). The dynamic=False, I'm told, means that we're using "out-of-sample forecasting", which I think just means these are values the model hasn't seen, out of sample meaning not in the training sample; correct me if I'm wrong. (From what I can tell in the statsmodels docs, dynamic only changes in-sample predictions; past the end of the training data the model always feeds its own forecasts back in as lags.) Finally we find our MAE and RMSE, and they're not good, but once we look at the graph you can see why. I just think our data is kind of hard to predict on.

It DID NOT go well, haha. I don't really care, but it seems really, really cool to me. So we can apply this to the larger data, but I don't think that it will do much better. So for now, we're done.
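To see what a lag-1 autoregression is doing under the hood, here is a minimal sketch in plain numpy instead of statsmodels. It assumes the coefficients are found by ordinary least squares, and `fit_ar1` and `forecast_ar1` are hypothetical helper names with a made-up, noiseless series. It also shows one reason long out-of-sample forecasts can struggle: each prediction is fed back in as the next lag, so when φ is between -1 and 1 the forecasts settle toward a constant level, c / (1 - φ).

```python
import numpy as np

def fit_ar1(values):
    """Least-squares fit of y_t = c + phi * y_{t-1}; returns (c, phi)."""
    y = np.asarray(values, dtype=float)
    lagged, current = y[:-1], y[1:]
    design = np.column_stack([np.ones_like(lagged), lagged])
    (c, phi), *_ = np.linalg.lstsq(design, current, rcond=None)
    return float(c), float(phi)

def forecast_ar1(c, phi, last_value, horizon):
    """Multi-step forecast: each prediction becomes the next step's lag."""
    forecasts = []
    previous = last_value
    for _ in range(horizon):
        previous = c + phi * previous
        forecasts.append(previous)
    return forecasts

# Hypothetical noiseless series generated by y_t = 2 + 0.5 * y_{t-1}.
toy = [10.0]
for _ in range(9):
    toy.append(2 + 0.5 * toy[-1])

c, phi = fit_ar1(toy)
print(round(c, 3), round(phi, 3))
print(forecast_ar1(c, phi, toy[-1], horizon=5))
print(c / (1 - phi))  # the level the forecasts settle toward
```

Real prices are noisy, so the fitted c and φ will not be this clean, but the mechanics are the same.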

Summary, summary, summary. I don't really have a summary; if you scrolled here, unfortunately, you will have to read the entire thing. But I do have a few questions, especially about the AR Model.

- What is confidence?

- Exactly how is this done, like down to the bone?

- Does dataset size have anything to do with it?

- What is lag order?

That's all for this one. It took me a full two months. This article was partly for me to focus on myself, and focus on code, and wow did this take a long time, but I'm happy that it's done, so now I can just do another one. Haha. If I have made any mistakes, please let me know, and be mean if you have to. Goodbye for now, I will be back.