Chia-Wei Chen; Sr. Software Engineer | Raymond Lee; Sr. Software Engineer | Alex Wang; Software Engineer II | Saurabh Vishwas Joshi; Sr. Staff Software Engineer | Karthik Anantha Padmanabhan; Sr. Manager, Engineering | Se Won Jang; Sr. Manager, Engineering

In Part 1 of our blog series, we discussed why we were motivated to invest in Ray to solve critical business problems. In this blog post, we go one step further and describe what it takes to integrate Ray into a web-scale company like Pinterest, where we face various unique constraints and challenges when embracing new technologies. This is a more comprehensive version of the Ray Infrastructure part of our talk Last Mile Data Processing for ML Training using Ray at Ray Summit 2023.

In our use case, being able to provision a Ray Cluster the way KubeRay does is only part of having a mature Ray infrastructure. Companies need to follow all the other best practices suggested by Ray, plus their own specific requirements, including log and metrics persistence, network isolation, identifying optimal hardware instances, security, traffic settings, and miscellaneous internal service integrations.

The journey began in 2023, when one full-time engineer dedicated 50% of their time to this project:

- 2023 Q1: Prototyping stage was initiated with assistance from our partners at Anyscale

- 2023 Q2: Ray Infra MVP was completed, including essential tools such as logging, metrics, UI, and CLI for applications, which were iterated upon and enhanced

- 2023 Q3: The focus shifted to onboarding our first production use case, involving the integration of internal systems like workflow systems to enhance service stability

- 2023 Q4: Emphasis was placed on preparing for production, addressing security concerns, improving network stability, and evaluating the transition to a Ray-optimized Kubernetes environment

While building the Ray infrastructure at Pinterest, we encountered several key challenges that needed to be addressed:

- Limited access to K8s API: Operating on PinCompute, a general-purpose federation Kubernetes cluster at Pinterest, restricted the installation of necessary operators like KubeRay and its Custom Resource Definitions.

- Ephemeral logging and metrics: While logging and metrics were available through the Ray Dashboard when the Ray Cluster was active, it was not practical to maintain a resource-intensive Ray Cluster solely for debugging purposes. A solution was sought to persist and replay the lifecycle of Ray workloads.

- Metrics Integration: Our company had its own version of a time series database and visualization tool that differed from popular open-source solutions like Prometheus and Grafana.

- Authentication, Authorization, Audit (AAA) guidelines: As per company standards, AAA guarantees are required. For services running on K8s, using Envoy as the service mesh is the recommended way to build AAA at Pinterest.

- Multiple development experiences: Diverse development experiences were sought, including interactive options with Jupyter and CLI access with Dev servers, to cater to various developer needs.

- Cost optimization and resource wastage: Ray clusters left idle could result in significant expenses. A garbage collection policy and cost attribution were needed to increase team awareness and mitigate resource wastage.

- Offline data analysis: Exporting all Ray cluster-related metrics to a big data format (e.g., Hive, Parquet) for offline analysis was a priority. This data would include metrics such as GPU utilization to identify areas for improvement and track application and infrastructure stability over time.

Due to the restricted K8s API access, we cannot simply install KubeRay in our environment to operate Ray Clusters in K8s. Additionally, specific sidecars managed by different teams are required for tasks such as secret management, traffic handling, and log rotation within the Pinterest K8s cluster. To ensure centralized control over necessary sidecar updates like bug fixes or security patches, we must adhere to certain restrictions.

To prototype the essential components needed for the Ray cluster (as outlined in the Launching an On-Premise Cluster guide) while incorporating the required sidecars, we opted to use the Pinterest-specific CRD, which is a wrapper built on top of the open-source Kubeflow PyTorchJob.

For the initial iteration, we aimed to keep things simple by constructing the Ray head and Ray workers on the client side. This entailed using different commands for each component and crafting a customized script for the client side to execute.

    from concurrent.futures import ThreadPoolExecutor

    def launch_ray_cluster(configs: RayClusterConfig) -> str:
        # Define resources, instance_type, command, env_vars, etc.
        configs = RayClusterAndJobConfigs()
        with ThreadPoolExecutor() as executor:
            # Submit the callables (not their results) so that the head
            # and the workers are launched concurrently
            head_future = executor.submit(launch_ray_head, configs)
            workers_future = executor.submit(launch_ray_workers, configs)
            ray_head = head_future.result()
            ray_workers = workers_future.result()
        return check_up_and_running(ray_head, ray_workers)

This step has a lot of room for improvement. The main drawback is that the approach is difficult to manage: client-side execution can be interrupted for various reasons (such as network errors or expired credentials), resulting in a zombie Ray cluster that wastes resources on K8s. While this approach is sufficient to unblock our engineers to play around with Ray, it’s far from ideal for a platform designed to manage Ray Clusters efficiently.

In the second iteration, we transitioned from managing the Ray cluster on the client side to a server-side approach by developing a controller similar to KubeRay. Our solution entailed the creation of an intermediate layer between the user and K8s, consisting of several components including an API Server, Ray Cluster / Job Controller, and MySQL database for external state management.

- API Server: This component facilitates request validation, authentication, and authorization. It abstracts the complexities of K8s from the client side, enabling users to interact with the platform’s API interface, which is particularly valuable for enhancing security, especially in the TLS-related implementation described in a later section.

- MySQL database: The database stores state information related to the Ray Cluster, allowing for the replay of necessary ephemeral statuses from the K8s side. It also decouples the data flow between the API Server and Ray Cluster Controller, with the added benefit of facilitating data dumping to Hive for offline analysis.

- Ray Cluster Controller: This component continuously queries K8s to manage the life cycle of the Ray Cluster, including provisioning Ray head and worker nodes, monitoring the status of the Ray Cluster, and performing cleanup operations as needed.

- Ray Job Controller: Similar to the Ray Cluster Controller, the Ray Job Controller focuses on the management of Ray Job life cycles. Serving as the primary entity for submitting RayJobs, it ensures proper authentication and authorization protocols within the system. Additionally, the controller supports the submission of multiple Ray Jobs to the same Ray Cluster, enabling users to iterate more efficiently without waiting for a new Ray Cluster to be provisioned for each job submission.

This approach provides a valuable abstraction layer between users and Kubernetes, eliminating the need for users to understand intricate Kubernetes artifacts. Instead, they can utilize the user-facing library provided by the platform. By shifting the heavy lifting of provisioning away from the client side, the process is streamlined, simplifying steps and enhancing the overall user experience.

During the implementation of our own controller, we ensured modularity, enabling a seamless transition to KubeRay in the future. This approach allows for simple substitution of the method used to launch a Ray cluster, transitioning from an in-house Kubernetes primitive to KubeRay with ease.

    from time import sleep
    from typing import Dict, Tuple

    class Controller:
        def reconcile(self, ray_cluster: RayClusterRecord):
            # This part can be swapped out from the in-house primitive to KubeRay
            status, k8s_meta = self.launch_and_monitor_ray_cluster(ray_cluster.configs)
            db.update(ray_cluster, status=status, k8s_meta=k8s_meta)

        def run(self):
            while True:
                ray_clusters = db.get_ray_cluster_to_dispatch()
                for ray_cluster in ray_clusters:
                    self.reconcile(ray_cluster)
                sleep(1)

        def launch_and_monitor_ray_cluster(self, configs) -> Tuple[str, Dict]:
            return get_actual_k8s_related_status(ray_identifier=configs.ray_identifier)
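To make the control flow concrete, here is a minimal, self-contained sketch of the same reconcile pattern. It assumes an in-memory dictionary in place of MySQL and a stubbed status lookup in place of Kubernetes; `InMemoryDB`, `fake_k8s_status`, and the status strings are hypothetical names chosen for illustration, not part of the Pinterest platform.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class RayClusterRecord:
    ray_identifier: str
    status: str = "PENDING"  # hypothetical status values
    k8s_meta: Dict = field(default_factory=dict)


class InMemoryDB:
    """Stand-in for the external state store (MySQL in the post)."""

    def __init__(self) -> None:
        self.records: Dict[str, RayClusterRecord] = {}

    def add(self, record: RayClusterRecord) -> None:
        self.records[record.ray_identifier] = record

    def get_ray_cluster_to_dispatch(self) -> List[RayClusterRecord]:
        # For this sketch, clusters that are up or failed are treated as settled.
        return [r for r in self.records.values() if r.status not in ("RUNNING", "FAILED")]

    def update(self, record: RayClusterRecord, status: str, k8s_meta: Dict) -> None:
        record.status = status
        record.k8s_meta = k8s_meta


class Controller:
    def __init__(self, db: InMemoryDB, launcher: Callable[[str], Tuple[str, Dict]]) -> None:
        self.db = db
        # The launcher is injected, so the provisioning method can be swapped
        # (for example, in-house primitive today, KubeRay later).
        self.launcher = launcher

    def reconcile_once(self) -> int:
        """Run one pass over unsettled clusters; return how many were handled."""
        pending = self.db.get_ray_cluster_to_dispatch()
        for record in pending:
            status, k8s_meta = self.launcher(record.ray_identifier)
            self.db.update(record, status=status, k8s_meta=k8s_meta)
        return len(pending)


def fake_k8s_status(ray_identifier: str) -> Tuple[str, Dict]:
    # Pretend every cluster comes up immediately.
    return "RUNNING", {"pod": f"{ray_identifier}-head"}


db = InMemoryDB()
db.add(RayClusterRecord("demo-cluster"))
controller = Controller(db, fake_k8s_status)
reconciled = controller.reconcile_once()
```

Because the state lives in the store rather than in the client process, an interrupted client no longer leaves the cluster unmanaged: the next pass of the loop picks it up again.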

Observability

Considering that the Ray Cluster’s existing Ray Dashboard is accessible only when the cluster is active, with no provision for log or metric replay, we chose to develop a dedicated user interface integrating persistent logging and metrics functionality. Supported by the API Gateway built previously, this user interface offers real-time insights into both Ray Cluster and Ray Job status. Since all the metadata, events, and logs are stored in either the database or S3, this approach allows for log analysis without the need to maintain an active Ray Cluster, mitigating costs associated with idle resources such as GPUs.

Many companies run their own time series metrics solutions. At Pinterest, we utilize our own in-house time series database known as Goku, which has APIs compliant with OpenTSDB. We run an additional sidecar that scrapes Prometheus metrics and reformats them to be compatible with our in-house system. Regarding logging, we follow Ray’s recommendation of persisting logs to AWS S3. These logs are then consumed by the API server and displayed on our Ray Cluster UI.
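As a rough illustration of the reformatting step, the sketch below parses the Prometheus text exposition format and emits data points shaped like OpenTSDB `put` payloads (`metric`, `timestamp`, `value`, `tags`). It is a simplification under stated assumptions: the function name and the `extra_tags` parameter are hypothetical, escaped quotes inside label values are not handled, and the actual Goku ingestion path is not described in this post.

```python
import re
import time
from typing import Dict, List, Optional

# Matches sample lines such as: name{label="v",other="w"} 12.5
_SAMPLE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)')
_LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def prometheus_to_opentsdb(
    text: str,
    timestamp: Optional[int] = None,
    extra_tags: Optional[Dict[str, str]] = None,
) -> List[dict]:
    """Convert scraped Prometheus text into OpenTSDB-style data points."""
    ts = int(time.time()) if timestamp is None else timestamp
    points = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank lines and HELP/TYPE comments
            continue
        match = _SAMPLE.match(line)
        if match is None:
            continue
        name, labels, value = match.groups()
        tags = dict(extra_tags or {})
        tags.update(_LABEL.findall(labels or ""))  # Prometheus labels become tags
        points.append(
            {"metric": name, "timestamp": ts, "value": float(value), "tags": tags}
        )
    return points


# Made-up scrape output used only to exercise the function.
scraped = (
    "# HELP ray_node_cpu_utilization CPU utilization\n"
    'ray_node_cpu_utilization{NodeAddress="10.0.0.1"} 42.5\n'
    "ray_tasks 3\n"
)
points = prometheus_to_opentsdb(scraped, timestamp=1700000000, extra_tags={"cluster": "demo"})
```

Attaching a cluster-level tag in the sidecar, as `extra_tags` does here, is one way to keep metrics queryable per Ray Cluster after the cluster itself is gone.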

Ray Application Stats

We translate the same Grafana charts to an in-house visualization tool called Statsboard. In addition, we add more application-specific features such as DCGM GPU metrics and dataloader metrics, which help ML Engineers at Pinterest identify bottlenecks and issues in their Ray applications.

Ray Infrastructure Stats

Tracking all infrastructure-level metrics is essential for implementing effective monitoring, generating alerts, and establishing SLO/SLA benchmarks based on historical data. For example, tracking the end-to-end Ray Cluster wait time and monitoring the rolling success rate of Ray Jobs are critical for evaluating and maintaining system performance. Additionally, identifying any platform-side errors that may lead to Ray Cluster provisioning failures is crucial for maintaining operational efficiency.

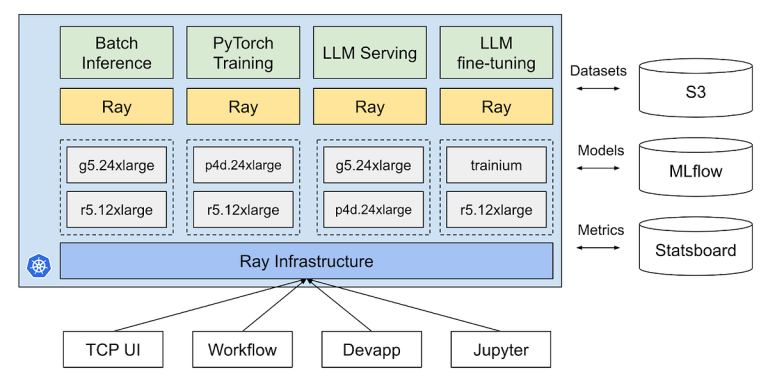
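The rolling success rate mentioned above can be computed with a fixed-size window over recent job outcomes. The following is a minimal sketch, assuming a count-based window; the class name, the window size, and the SLO threshold are hypothetical and not taken from the Pinterest platform.

```python
from collections import deque
from typing import Deque, Optional


class RollingSuccessRate:
    """Success rate over the most recent `window` job outcomes."""

    def __init__(self, window: int) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        # A bounded deque drops the oldest outcome automatically.
        self._outcomes: Deque[bool] = deque(maxlen=window)

    def record(self, succeeded: bool) -> None:
        self._outcomes.append(succeeded)

    def rate(self) -> Optional[float]:
        # No data yet: report None rather than a misleading 0% or 100%.
        if not self._outcomes:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def breaches(self, slo: float) -> bool:
        current = self.rate()
        return current is not None and current < slo


tracker = RollingSuccessRate(window=3)
for outcome in (True, False, True, True):
    tracker.record(outcome)
# The window now holds the last three outcomes: False, True, True.
```

A time-based window (for example, the last 24 hours) would follow the same idea, storing timestamps alongside outcomes.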

We provide three options for developing Ray applications at Pinterest: Dev server, Jupyter, and Spinner workflow. All of them are powered by the RESTful APIs in our ML Platform.

We rely on PythonOperator in Airflow to compose a customized operator where users can provide their job_configuration, and we translate it into RayJob requests to our MLP Server.
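The translation step can be sketched roughly as follows. This is only an illustration: the payload fields, `REQUIRED_FIELDS`, and the `send` transport are hypothetical, since this post does not describe the MLP Server schema. `submit_ray_job` is written as a plain callable of the kind Airflow’s PythonOperator accepts through `python_callable`, and the transport is injected so that the sketch runs without Airflow or a server.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical required keys of a user-provided job_configuration.
REQUIRED_FIELDS = ("entrypoint", "ray_cluster")


def build_ray_job_request(job_configuration: Dict[str, Any]) -> Dict[str, Any]:
    """Validate the user config and map it onto a (hypothetical) RayJob request."""
    missing = [key for key in REQUIRED_FIELDS if key not in job_configuration]
    if missing:
        raise ValueError(f"job_configuration is missing: {missing}")
    return {
        "entrypoint": job_configuration["entrypoint"],
        "ray_cluster": job_configuration["ray_cluster"],
        "runtime_env": job_configuration.get("runtime_env", {}),
        "owner": job_configuration.get("owner", "unknown"),
    }


def submit_ray_job(job_configuration: Dict[str, Any], send: Callable[[str], str]) -> str:
    """Serialize the request and hand it to the transport; return the job id."""
    payload = json.dumps(build_ray_job_request(job_configuration), sort_keys=True)
    return send(payload)


# Usage with a fake transport standing in for an HTTP call to the server.
sent_payloads = []


def fake_send(body: str) -> str:
    sent_payloads.append(body)
    return "job-123"


job_id = submit_ray_job(
    {"entrypoint": "python train.py", "ray_cluster": "demo-cluster"}, fake_send
)
```

Validating the configuration before any network call means a malformed workflow fails at the operator, not halfway through cluster provisioning.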

Unittest & Integration Test

We offer two types of testing for users to leverage when developing Ray applications:

- Unittest is recommended for platform library owners utilizing the lower-level Ray Core or Ray Data libraries. We follow the Tips for testing Ray programs and use pytest fixtures to reuse a Ray cluster as much as possible within the same test suite.

- Integration testing is suitable for users looking to run a complete Ray job to identify and address any regressions that may arise from code changes or library updates. We also run the integration tests periodically to monitor the health of business-critical Ray applications.

While Ray as a compute platform is extremely flexible for developers to run workloads easily through APIs, this also leads to a security vulnerability (CVE-2023-48022), emphasized by this ShadowRay article. The issue is that Ray itself doesn’t provide a good way of authentication and authorization, so everyone who has access to the Ray Dashboard APIs can execute code remotely without any validation or controls.

At Pinterest, we took this security issue seriously and addressed it properly. We go one step further to ensure proper authentication and authorization are applied on the Ray Cluster, so a given Ray Cluster can’t be used if the user doesn’t have the right permissions.

However, the complexity of this issue was further compounded by Pinterest’s federation Kubernetes cluster architecture, which posed challenges in applying intra-cluster solutions to inter-cluster environments. For example, we cannot use NetworkPolicy to control the ingress and egress flow across K8s clusters, so we need an alternative way to achieve network isolation, especially when Pods can scatter across K8s clusters due to our aim of maximizing hardware availability in different zones.

1. HTTP: At Pinterest, we use Envoy as our service mesh in the Kubernetes environment. We deploy the Ray Dashboard on localhost behind Envoy and follow the standard way of authentication and authorization at Pinterest. This allows us to limit access to the Ray Dashboard to either OAuth for users from the UI or mTLS for services.

2. gRPC: To prevent an arbitrary Pod in the K8s environment from connecting to an active Ray Cluster, we leverage Ray TLS with some customization during Ray cluster bootstrap time. In detail, for each Ray Cluster, we create a unique Certificate Authority (CA) with its own (private key, certificate) pair. This ensures we have a 1:1 mapping between a CA and a specific Ray Cluster. The first step of mutual authentication is done by limiting the client (Ray Pods) access to a given CA through proper AuthN / AuthZ on the server side, so that only a subset of the pods is able to receive a certificate signed by the CA meant to represent that particular Ray Cluster. The second step occurs when the pods communicate using these issued certificates, checking that they were signed by the CA corresponding to the expected Ray cluster. Moreover, all cryptographic operations to sign and issue leaf certificates for Ray pods should be performed on the server side (MLP Server) to ensure that clients, including the Ray head and worker pods, do not have access to the CA private key.
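On the pod side, Ray’s TLS support is switched on through environment variables (`RAY_USE_TLS`, `RAY_TLS_SERVER_CERT`, `RAY_TLS_SERVER_KEY`, `RAY_TLS_CA_CERT`). The sketch below builds that environment for a pod once its leaf certificate has been issued. The helper name, the mount directory, and the file names are assumptions for illustration; how Pinterest actually distributes the files is not described here.

```python
from pathlib import PurePosixPath
from typing import Dict


def ray_tls_env(cert_dir: str) -> Dict[str, str]:
    """Environment variables that enable Ray TLS for one pod.

    The pod only ever sees its own leaf certificate and key plus the public
    CA certificate of its cluster; the CA private key stays on the server.
    """
    base = PurePosixPath(cert_dir)
    return {
        "RAY_USE_TLS": "1",
        "RAY_TLS_SERVER_CERT": str(base / "tls.crt"),  # leaf cert signed by the cluster CA
        "RAY_TLS_SERVER_KEY": str(base / "tls.key"),   # leaf private key
        "RAY_TLS_CA_CERT": str(base / "ca.crt"),       # public cert of the per-cluster CA
    }


# Hypothetical mount point for the issued certificate bundle.
env = ray_tls_env("/etc/ray/tls")
```

Since every Ray Cluster has its own CA, a pod holding a certificate from a different cluster’s CA fails verification even though it uses the same variable names.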

Incremental improvement:

- Begin by deploying a Ray Cluster in a straightforward manner, then focus on automating and scaling the process in a production or cloud environment.

- Utilize existing infrastructure within the company to minimize the need for reinventing the wheel when developing the MVP. For us, leveraging the Kubeflow operator and existing ML-specific infrastructure logic streamlined the development process.

- Refine the infrastructure, such as addressing security pitfalls and any other compliance issues, according to company-wide best practices once the prototype is completed.

- Conduct regular meetings with customers to gather early feedback on challenges and areas for improvement.

- With the current success of the Ray initiative at Pinterest, we are looking for further improvements like integrating KubeRay when moving to an ML-dedicated K8s cluster.

Intermediate Layer between Client and Kubernetes Cluster:

- The API server serves as a bridge between the client and Kubernetes, offering an abstraction layer.

- Ensure that life cycle events of a Ray cluster are persistently recorded even after the custom resource is removed from Kubernetes.

- The platform has the opportunity to implement business logic, such as additional validation and customization, including authentication, authorization, and restricting access to the Ray Dashboard API for end users.

- By decoupling the actual method of provisioning the Ray Cluster, it becomes easier to switch to a different node provider as needed, especially as we plan to move forward to KubeRay and a dedicated K8s cluster in the future.

Visibility:

- Providing insufficient infrastructure-related information to users may lead to confusion regarding application failures or delays in Ray cluster provisioning.

- Platform-side monitoring and alerting are critical to operating tens or hundreds of Ray Clusters at the same time. We are still in the early stages of Ray infrastructure, and rapid changes can break the application side, so we need to be diligent in setting up alerts and do thorough testing in staging environments before deploying to production.

We started collecting Ray infrastructure usage in Q2 2023 and observed a surge in Q4 2023 as our last mile data processing application reached GA and more and more users started to onboard the Ray framework to explore different Ray applications such as batch inference and ad hoc Ray Serve development. We are now actively helping users migrate their native PyTorch-based applications to Ray-based applications to enjoy the benefits of Ray. We are still in the early stages of moving from native PyTorch to Ray-based PyTorch training, but we are eagerly collaborating with customers to onboard more advanced use cases.

Ray Infrastructure has been deployed for production ML use cases and for rapid experimentation with new applications.

Ray Train

- Multiple recommender system model training workloads have migrated to Ray, and we are actively onboarding the remaining use cases

- We are currently running 5000+ Training Jobs / month using Ray

- These training runs utilize a heterogeneous CPU / GPU cluster

Key wins:

Scalability:

- Ray enables our training runs to scale data loading & preprocessing transparently beyond a trainer instance.

- A single GPU node such as a p4d.24xlarge instance has a fixed 12:1 CPU:GPU ratio, which prevents data loaders from scaling out and saturating the GPU.

- With Ray, we can scale out the data loaders outside the p4d instance using cheaper CPU-only instances
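A quick back-of-the-envelope calculation shows why the fixed ratio matters. The 12:1 CPU:GPU ratio comes from the text above; the per-GPU CPU demand (32) and the size of a CPU-only node (64 vCPUs) are made-up numbers chosen purely for illustration.

```python
import math


def extra_cpu_nodes(
    gpus: int,
    cpus_needed_per_gpu: int,
    cpus_per_gpu_on_node: int = 12,  # fixed ratio of the GPU instance (from the text)
    cpus_per_cpu_node: int = 64,     # hypothetical CPU-only instance size
) -> int:
    """CPU-only nodes needed to cover the data-loading CPU deficit."""
    deficit_per_gpu = max(0, cpus_needed_per_gpu - cpus_per_gpu_on_node)
    total_deficit = deficit_per_gpu * gpus
    return math.ceil(total_deficit / cpus_per_cpu_node)


# 8 GPUs, each needing 32 CPUs of data loading to stay busy:
# deficit = (32 - 12) * 8 = 160 CPUs, so ceil(160 / 64) = 3 extra nodes.
nodes = extra_cpu_nodes(gpus=8, cpus_needed_per_gpu=32)
```

On a single GPU instance the deficit cannot be covered at all; with data loaders running on separate CPU-only workers, it becomes a matter of adding a few cheaper nodes.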

Dev-velocity

- Aside from scalability, Ray greatly contributes to the acceleration of development velocity.

- A large part of ML engineers’ daily work is implementing modeling changes and submitting dev training runs using local code

- Ray enables users to interactively use the Ray compute cluster to submit jobs via Jupyter notebooks as a terminal / interface

Batch Inference

- In the past, Pinterest utilized a PySpark-based batch inference solution.

- Using Ray, we have re-implemented a new BatchInference solution, designed as a map_batches implementation on the ray.data.Dataset.

- We are using this solution for three production use cases

- We are currently running 300+ Batch Inference Jobs / month using Ray

Key wins:

Efficiency:

- Unlike the old implementation, Ray allows pipelining of pre-processing, GPU inference, and output file writes.

- Additionally, it can decouple these three steps automatically to run on heterogeneous CPU & GPU nodes.

- Combined, this has resulted in a 4x reduction in job runtime (1hr → 15 minutes) on our production GPU inference jobs.
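The pipelining idea can be illustrated without Ray. In the sketch below, each stage runs in its own thread and passes batches through bounded queues, so pre-processing of one batch overlaps with inference on the previous one. This is a standard-library stand-in for the concept only: Ray Data handles scheduling and placement itself, and `run_pipeline` and the toy stages are hypothetical.

```python
import queue
import threading
from typing import Any, Callable, Iterable, List

_DONE = object()  # sentinel marking the end of the stream


def _stage(fn: Callable[[Any], Any], inbox: queue.Queue, outbox: queue.Queue) -> None:
    # Pull items until the sentinel arrives, then forward it downstream.
    while True:
        item = inbox.get()
        if item is _DONE:
            outbox.put(_DONE)
            return
        outbox.put(fn(item))


def run_pipeline(batches: Iterable[Any], stages: List[Callable[[Any], Any]]) -> List[Any]:
    """Run batches through the stages, with every stage working concurrently."""
    # Bounded queues give back-pressure so a fast stage cannot run far ahead.
    queues = [queue.Queue(maxsize=2) for _ in range(len(stages) + 1)]

    def feed() -> None:
        for batch in batches:
            queues[0].put(batch)
        queues[0].put(_DONE)

    threads = [threading.Thread(target=feed)]
    threads += [
        threading.Thread(target=_stage, args=(fn, queues[i], queues[i + 1]))
        for i, fn in enumerate(stages)
    ]
    for thread in threads:
        thread.start()

    results = []
    while True:
        item = queues[-1].get()
        if item is _DONE:
            break
        results.append(item)
    for thread in threads:
        thread.join()
    return results


# Toy stand-ins for pre-processing, GPU inference, and the output write.
outputs = run_pipeline(
    [[1, 2], [3, 4]],
    [lambda b: [x * 2 for x in b], lambda b: sum(b), lambda s: f"wrote {s}"],
)
```

With one thread per stage and first-in, first-out queues, batch order is preserved. The sketch has no error handling: a stage that raises would stall the pipeline.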

Unlocked Opportunity:

- The ease of programming with Ray, and the efficiency derived from pipelining, has enabled us to adopt feature ablation tooling for GPU-based models.

Experimental Workloads

- Ray offers a robust ecosystem of tools, which also includes Ray Serve

- Ray Serve provides built-in routing and auto-scaling functionality for model serving, which is very helpful for quickly setting up a model for evaluation.

- Without Ray Serve, clients would have to manually set up an RPC Server, deployment pipelines, service discovery, and autoscaling.

Key wins:

- During an internal hackathon, teams could set up and use an open source large model in a few hours

- Without Ray, setting up such an infrastructure would have taken days if not weeks

- Deep dive into Ray Batch Inference at Pinterest

- Ray Tune at Pinterest

- Unique challenges for Ray applications at Pinterest

Cloud Runtime Team: Jiajun Wang, Harry Zhang

Traffic Team: James Fish, Bruno Palermo, Kuo-Chung Hsu

Security Team: Jeremy Krach, Cedric Staub

ML Platform: Qingxian Lai, Lei Pan

Anyscale: Zhe Zhang, Kai-Hsun Chen, SangBin Cho