Nvidia has recently released the Nemotron-4 340B model family [1], including Nemotron-4-340B-Base, Nemotron-4-340B-Instruct, and Nemotron-4-340B-Reward, as open access models with a permissive license.

As shown by the authors, Nemotron-4-340B-Base is competitive with open access base models like Llama-3 70B (MetaAI, 2024), Mixtral 8x22B (Mistral-AI-Team, 2024b), and the recently released Qwen-2 72B model on tasks such as ARC-Challenge, MMLU, and the BigBench Hard benchmark. One promising application of these models is synthetic data generation, which has already demonstrated significant value in improving data quality for pretraining.

Key contributions:

- Release the Nemotron-4 340B model family, including Nemotron-4-340B-Base, Nemotron-4-340B-Instruct, and Nemotron-4-340B-Reward, under the NVIDIA Open Model License Agreement, which is permissive for commercial applications.

- Release code for training and inference of these models to promote transparency and reproducibility.

- Provide comprehensive details about the released models' synthetic data generation pipeline and illustrate its effectiveness in model alignment. The authors also share their generation prompts, the human-annotated preference dataset, and Nemotron-4-340B-Reward for quality filtering and preference ranking.

i) Data

- Three types of data are used: English natural language data (70%), multilingual natural language data (15%), and source code data (15%).

- The English corpus contains documents such as web documents, news articles, scientific papers, and books.

- The multilingual data covers 53 natural languages and consists of documents from both monolingual and parallel corpora.
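The 70/15/15 split can be read as sampling weights over three data sources. A minimal sketch, assuming documents are drawn source by source with those probabilities; the paper's actual data loader and source names are not described here, so `BLEND` and `sample_sources` are illustrative.

```python
import random
from collections import Counter

# Pretraining blend from the post: 70% English, 15% multilingual, 15% code.
# The source labels are illustrative, not the paper's identifiers.
BLEND = {
    "english": 0.70,
    "multilingual": 0.15,
    "code": 0.15,
}


def sample_sources(n: int, blend: dict[str, float], seed: int = 0) -> list[str]:
    """Draw n source labels, each chosen with probability equal to its blend weight."""
    rng = random.Random(seed)
    names = list(blend)
    weights = [blend[name] for name in names]
    return rng.choices(names, weights=weights, k=n)


if __name__ == "__main__":
    counts = Counter(sample_sources(100_000, BLEND))
    for name, count in counts.most_common():
        print(f"{name:>12}: {count / 100_000:.3f}")
```

Over many draws the empirical fractions approach the configured weights; a real pipeline would draw documents or token budgets per source rather than bare labels.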

ii) Architectural Details

- It is a standard decoder-only Transformer architecture with causal attention masks, and uses Rotary Position Embeddings (RoPE), a SentencePiece tokenizer, and squared ReLU activations in the MLP layers.

- It has no bias terms, a dropout rate of zero, and untied input-output embeddings, and uses grouped query attention (GQA).

- Key hyper-parameters affecting the size of Nemotron-4-340B-Base are listed in the figure below.
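Three of the components named above are small enough to show in isolation. The sketch below uses plain Python and toy sizes and assumes the standard definitions: squared ReLU is max(0, x)², GQA lets a group of consecutive query heads share one key/value head, and RoPE rotates each (even, odd) feature pair by an angle proportional to the token position. The RoPE base of 10000 is the common default from the RoPE paper, assumed here rather than taken from Nemotron-4. This is not the model's implementation.

```python
import math


def squared_relu(x: float) -> float:
    """Squared ReLU activation: max(0, x) ** 2."""
    return max(0.0, x) ** 2


def kv_head_for_query_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """In grouped query attention, each group of consecutive query heads shares one KV head."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


def rope_rotate_pair(x0: float, x1: float, position: int, pair_index: int,
                     head_dim: int, base: float = 10000.0) -> tuple[float, float]:
    """Rotate one (even, odd) feature pair by a position-dependent angle, as in RoPE."""
    theta = position * base ** (-2 * pair_index / head_dim)
    c, s = math.cos(theta), math.sin(theta)
    return x0 * c - x1 * s, x0 * s + x1 * c
```

With 8 query heads and 2 KV heads, `kv_head_for_query_head` maps heads 0 to 3 onto KV head 0 and heads 4 to 7 onto KV head 1, so the KV cache is 4x smaller than with full multi-head attention at the same query head count.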

iii) Training Details

- Trained using 768 DGX H100 nodes.

- A combination of 8-way tensor parallelism [2], 12-way pipeline parallelism with interleaving [3], and data parallelism is used to train the model.

- A distributed optimizer shards the optimizer state over the data-parallel replicas to reduce the memory footprint of training.

- The table below summarizes the three stages of the batch size ramp, and includes the per-iteration time and Model FLOP/s Utilization (MFU).
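The parallelism settings above determine how many data-parallel replicas fit on the cluster. A minimal arithmetic sketch, assuming all 768 nodes (8 GPUs each in a DGX H100) train one model at once and that each model replica spans exactly TP × PP GPUs:

```python
GPUS_PER_DGX_H100 = 8  # each DGX H100 node contains 8 H100 GPUs


def data_parallel_size(nodes: int, tensor_parallel: int, pipeline_parallel: int,
                       gpus_per_node: int = GPUS_PER_DGX_H100) -> int:
    """GPUs not consumed by model sharding (TP x PP) form data-parallel replicas."""
    total_gpus = nodes * gpus_per_node
    gpus_per_replica = tensor_parallel * pipeline_parallel
    if total_gpus % gpus_per_replica != 0:
        raise ValueError("total GPUs must be divisible by TP * PP")
    return total_gpus // gpus_per_replica


def optimizer_shard_fraction(dp_size: int) -> float:
    """With a distributed optimizer, each DP rank holds roughly 1/dp_size of the optimizer state."""
    return 1.0 / dp_size


if __name__ == "__main__":
    dp = data_parallel_size(nodes=768, tensor_parallel=8, pipeline_parallel=12)
    print(dp)  # 768 * 8 / (8 * 12) = 64
    print(optimizer_shard_fraction(dp))
```

Under these assumptions each of the 64 replicas holds roughly 1/64 of the optimizer state, ignoring padding and any state kept unsharded.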

iv) Base Model Evaluation

- The table below shows results on standard reasoning benchmarks.

- The results above show that Nemotron-4-340B-Base achieves the strongest accuracy on commonsense reasoning tasks (ARC-c, Winogrande, Hellaswag) as well as on popular benchmarks like BBH. Additionally, it is competitive on MMLU and code benchmarks like HumanEval.

i) Reward Modeling

- To develop a strong reward model, the authors collected a dataset of 10k human preference data, called HelpSteer2, following a methodology similar to the one described in HelpSteer [4].

- The authors find that multi-attribute regression reward models are more effective at disentangling real helpfulness from irrelevant artifacts, such as preferring longer but unhelpful responses solely due to their length.

- Regression models are also better at predicting fine-grained rewards, capturing the nuances of helpfulness between similar responses.

- The regression reward model is built on top of the Nemotron-4-340B-Base model by replacing the final softmax layer with a new reward "head". This head is a linear projection that maps the hidden states of the last layer into a five-dimensional vector of HelpSteer attributes (Helpfulness, Correctness, Coherence, Complexity, Verbosity).

- During inference, these attribute values can be aggregated by a weighted sum into an overall reward.
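A minimal sketch of the reward head and the weighted-sum aggregation, using plain lists in place of tensors. It assumes the head is a bias-free linear map (a 5 × d matrix applied to the last-layer hidden state); the attribute coefficients in the usage example are hypothetical, not the ones NVIDIA uses.

```python
ATTRIBUTES = ("helpfulness", "correctness", "coherence", "complexity", "verbosity")


def reward_head(hidden: list[float], weight: list[list[float]]) -> dict[str, float]:
    """Project a last-layer hidden state (length d) with a 5 x d matrix, one row per attribute."""
    if len(weight) != len(ATTRIBUTES):
        raise ValueError("weight must have one row per attribute")
    scores = {}
    for name, row in zip(ATTRIBUTES, weight):
        if len(row) != len(hidden):
            raise ValueError("each weight row must match the hidden size")
        scores[name] = sum(w * h for w, h in zip(row, hidden))
    return scores


def overall_reward(scores: dict[str, float], coefficients: dict[str, float]) -> float:
    """Weighted sum of attribute scores; attributes missing from coefficients get weight 0."""
    return sum(coefficients.get(name, 0.0) * value for name, value in scores.items())


if __name__ == "__main__":
    hidden = [0.5, -1.0, 2.0]  # toy hidden state with d = 3
    weight = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0], [0, 0, 0.5]]
    scores = reward_head(hidden, weight)
    # Hypothetical coefficients: reward helpfulness and correctness only.
    print(overall_reward(scores, {"helpfulness": 1.0, "correctness": 1.0}))
```

Keeping the five attribute scores separate until the final weighted sum is what lets the weighting be changed at inference time without retraining the head.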

ii) Alignment Data

a) Prompt Preparation

- Generating synthetic prompts makes it possible to control the prompt distribution so that it covers a diverse set of scenarios.

- To make prompt diversity multidimensional, the authors used the permissively licensed Mixtral-8x7B-Instruct-v0.1 as the generator, producing synthetic prompts separately for tasks including open Q&A, writing, closed Q&A, and math & coding.

- For each prompt task, generation is seeded with a diverse set of topics or keywords so that the prompts cover a wide variety of subjects.

- The authors also generate instruction-following prompts that explicitly define the format of the expected response, e.g., "The output has to be in the json format."

- Additionally, they generate two-turn prompts that include the user-assistant interaction history to boost the model's conversation skills.
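Seeding by topic and adding explicit format constraints can be sketched as plain string construction. Everything here is hypothetical: `META_PROMPT`, `FORMAT_INSTRUCTIONS`, and the helper names are illustrative, and the paper publishes its own generation prompts, which should be preferred. Each request would then be sent to the generator model (Mixtral-8x7B-Instruct-v0.1 in the first round).

```python
import itertools
import random

TASKS = ("open Q&A", "writing", "closed Q&A", "math & coding")

# Hypothetical meta-prompt sent to the generator model; not the paper's wording.
META_PROMPT = "Write a {task} prompt about the following topic: {topic}"

# Hypothetical format constraints; the first mirrors the example quoted above.
FORMAT_INSTRUCTIONS = (
    "The output has to be in the json format.",
    "Answer in fewer than 100 words.",
)


def seed_requests(topics: list[str]) -> list[dict[str, str]]:
    """One generator request per (task, topic) pair, so every task is seeded with every topic."""
    return [
        {"task": task, "topic": topic, "request": META_PROMPT.format(task=task, topic=topic)}
        for task, topic in itertools.product(TASKS, topics)
    ]


def add_format_instruction(prompt: str, rng: random.Random) -> str:
    """Turn a generated prompt into an instruction-following prompt with an explicit format constraint."""
    return f"{prompt} {rng.choice(FORMAT_INSTRUCTIONS)}"


if __name__ == "__main__":
    for req in seed_requests(["volcanoes", "contract law"])[:3]:
        print(req["request"])
    print(add_format_instruction("List three rivers in Europe.", random.Random(0)))
```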

(1) Synthetic single-turn prompts

- The figure below shows the high-level pipelines for generating synthetic single-turn prompts for open Q&A, writing, closed Q&A, and math & coding, from left to right.

(2) Synthetic two-turn prompts

- Two-turn prompts are constructed for building preference datasets. Specifically, the prompt contains one user question, one assistant answer, and another user question, in the form of "User: XXX; Assistant: XXX; User: XXX;".

- The first user prompts are sourced from ShareGPT, and the assistant response and the next-turn question are generated with intermediate instruct models.
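The two-turn format is simple string assembly once the model calls are abstracted away. In this sketch `answer_fn` and `follow_up_fn` are hypothetical stand-ins for the intermediate instruct models; passing the history to the follow-up generator is an assumption about how the next-turn question is conditioned.

```python
from typing import Callable

# Stand-ins for intermediate instruct models: each takes text and returns text.
Generate = Callable[[str], str]


def build_two_turn_prompt(first_user: str, answer_fn: Generate, follow_up_fn: Generate) -> str:
    """Assemble 'User: XXX; Assistant: XXX; User: XXX;' from a ShareGPT-style first question."""
    assistant = answer_fn(first_user)  # model answers the first question
    history = f"User: {first_user}; Assistant: {assistant};"
    second_user = follow_up_fn(history)  # model plays the user and asks a follow-up
    return f"{history} User: {second_user};"


if __name__ == "__main__":
    prompt = build_two_turn_prompt(
        "What is RoPE?",
        answer_fn=lambda q: "A rotary position embedding.",
        follow_up_fn=lambda h: "How does it handle long contexts?",
    )
    print(prompt)
```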

(3) Real-world LMSYS prompts

- To better reflect real-world user requests, prompts are also drawn from LMSYS-Chat-1M [5].

- The figure below shows the helpfulness distribution of Mixtral-8x7B-Instruct-v0.1's responses to synthetic prompts and LMSYS prompts, respectively.

- Responses to LMSYS prompts score lower in helpfulness on average. Since it is easier to be "helpful" for simple prompts, this implies that LMSYS prompts are, on average, more difficult and complex than synthetic single-turn prompts.

b) Synthetic Dialogue Generation

- Supervised fine-tuning enables models to learn how to interact with users in a dialogue format.

- Synthetic conversations are initiated by prompting an instruct model to generate responses based on the input prompts.

- Through iterative role-playing, the model alternates between simulating the Assistant's and the User's roles.

- Nemotron-4-340B-Reward is used to assess the quality of the dialogues, assigning a score to each sample and filtering out those that fall below a predetermined threshold.
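The role-play loop and the reward-based filter can be sketched with the model calls abstracted into functions. `assistant_fn`, `user_fn`, and `reward_fn` are hypothetical stand-ins for the instruct model in each role and for Nemotron-4-340B-Reward; ending on an Assistant turn and keeping scores equal to the threshold are assumptions of this sketch.

```python
from typing import Callable

Turn = tuple[str, str]  # (role, text)
Dialogue = list[Turn]


def role_play_dialogue(first_prompt: str,
                       assistant_fn: Callable[[Dialogue], str],
                       user_fn: Callable[[Dialogue], str],
                       num_turns: int) -> Dialogue:
    """Alternate between the Assistant and simulated User roles, ending on an Assistant turn."""
    dialogue: Dialogue = [("User", first_prompt)]
    for turn in range(num_turns):
        dialogue.append(("Assistant", assistant_fn(list(dialogue))))
        if turn < num_turns - 1:  # no trailing user message after the final answer
            dialogue.append(("User", user_fn(list(dialogue))))
    return dialogue


def filter_by_reward(dialogues: list[Dialogue],
                     reward_fn: Callable[[Dialogue], float],
                     threshold: float) -> list[Dialogue]:
    """Keep dialogues whose score is at or above the threshold; drop the rest."""
    return [d for d in dialogues if reward_fn(d) >= threshold]
```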

c) Synthetic Preference Data Generation

The 10K human-annotated HelpSteer2 preference samples were used to train Nemotron-4-340B-Reward, but preference data is also needed over a more diverse domain of prompts, with higher-quality responses from top-tier intermediate models. Therefore, the authors generate synthetic preference data in the triplet form of (prompt, chosen response, rejected response).

(1) Response generation

- The preference data contains synthetic single-turn prompts, instruction-following prompts, and two-turn prompts, as well as real-world prompts including ShareGPT prompts, LMSYS prompts, and prompts from the GSM8K and MATH training datasets.

- For each prompt, the authors generate responses using multiple random intermediate models.

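(2) Ground-Truth-as-a-Judge

- Given multiple responses for each prompt, the authors need to judge their preference ranking and select the chosen and the rejected response. Where ground-truth labels (e.g., the answers in the GSM8K and MATH training sets) or verifiers (e.g., a program checking an instruction-following constraint) are available, they are used to judge the correctness of each response; a correct response is picked as the chosen one and an incorrect response as the rejected one.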
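When a prompt has a checkable answer, judging reduces to running a check on each response. A minimal sketch, assuming two illustrative checks: a JSON validator for a format instruction like the "json format" example quoted earlier, and a deliberately naive final-token comparison for GSM8K/MATH-style answers (a real grader would normalize and parse answers more carefully). Picking the pair at random is also an assumption.

```python
import json
import random
from typing import Callable, Optional


def follows_json_format(response: str) -> bool:
    """Verifier for 'The output has to be in the json format.': accepts any valid JSON document."""
    try:
        json.loads(response)
    except json.JSONDecodeError:
        return False
    return True


def matches_reference(response: str, reference: str) -> bool:
    """Toy math check: compare the final token (minus a trailing period) with the reference answer."""
    tokens = response.strip().split()
    return bool(tokens) and tokens[-1].rstrip(".") == reference


def ground_truth_triplet(prompt: str, responses: list[str], is_correct: Callable[[str], bool],
                         rng: random.Random) -> Optional[tuple[str, str, str]]:
    """Pair a correct response (chosen) with an incorrect one (rejected), if both exist."""
    correct = [r for r in responses if is_correct(r)]
    incorrect = [r for r in responses if not is_correct(r)]
    if not correct or not incorrect:
        return None  # no preference signal for this prompt
    return prompt, rng.choice(correct), rng.choice(incorrect)
```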

(3) LLM-as-Judge and Reward-Model-as-Judge

- In LLM-as-Judge, the prompt and two responses are given to the judging LLM, which is asked to compare the two responses.

- In Reward-Model-as-Judge, the authors ask Nemotron-4-340B-Reward to predict the reward for each (prompt, response) pair and decide the preference ranking based on the rewards.

- On the Reward Bench benchmark, Reward-Model-as-Judge achieves higher accuracy than LLM-as-Judge.

- In particular, in the Chat-Hard category, where the chosen and rejected responses are hard to differentiate, Reward-Model-as-Judge performs much better than LLM-as-Judge, with an average accuracy of 0.87 vs 0.54.
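Reward-Model-as-Judge turns scalar rewards into a preference pair. The text states only that the ranking is decided from the rewards; the specific rule sketched here (highest versus lowest reward, with an optional hypothetical `min_gap` to skip near-ties) is an assumption, and `reward_fn` stands in for Nemotron-4-340B-Reward.

```python
from typing import Callable, Optional

# Stand-in for Nemotron-4-340B-Reward: scores one (prompt, response) pair.
RewardFn = Callable[[str, str], float]


def rm_judge_triplet(prompt: str, responses: list[str], reward_fn: RewardFn,
                     min_gap: float = 0.0) -> Optional[tuple[str, str, str]]:
    """Score every response once, then pair the highest-reward (chosen) with the lowest (rejected)."""
    if len(responses) < 2:
        return None
    scored = sorted(((reward_fn(prompt, r), r) for r in responses),
                    key=lambda pair: pair[0], reverse=True)
    (best_score, best), (worst_score, worst) = scored[0], scored[-1]
    if best_score - worst_score <= min_gap:
        return None  # ties or near-ties carry little preference signal
    return prompt, best, worst
```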

d) Iterative Weak-to-Strong Alignment

- The figure below illustrates the workflow of Iterative Weak-to-Strong Alignment.

- Here, the quality of a model (whether it is considered weak or strong) is defined by a combination of multiple evaluation metrics.

- An initial aligned model is employed as the generator for both dialogue and preference data.

- The data is then used to align a better base model using supervised fine-tuning and preference tuning.

- As the base model and alignment data are refined, the newly aligned model is able to surpass the initial aligned model by a significant margin.

- The alignment procedure is performed in parallel with base model pretraining.

- In the first iteration, Mixtral-8x7B-Instruct-v0.1 is chosen as the initial aligned model, since it has been demonstrated to be a strong model with a permissive license.

- The generated data is used to train an intermediate checkpoint of Nemotron-4-340B-Base, called 340B-Interm-1-Base. Notably, 340B-Interm-1-Base outperforms the Mixtral 8x7B Base model, which in turn enables the resulting 340B-Interm-1-Instruct model to surpass Mixtral-8x7B-Instruct-v0.1. This reflects the fact that strong capabilities can be elicited with weak supervision.

- In the second iteration, the resulting 340B-Interm-1-Instruct model is used as the new data generator. Given its enhanced ability compared to Mixtral-8x7B-Instruct-v0.1, the synthetic data generated in the second iteration is of higher quality than the data produced in the first iteration. The resulting data is used to train 340B-Interm-2-Base into 340B-Interm-2-Chat.

- This iterative process creates a self-reinforcing flywheel effect, where improvements can be attributed to two aspects: (1) when using the same dataset, the strength of the base model has a direct impact on the instruct model, with stronger base models yielding stronger instruct models; (2) conversely, when using the same base model, the quality of the dataset plays a critical role in determining the effectiveness of the instruct model, with higher-quality data leading to stronger instruct models. Throughout the entire alignment procedure, the authors conduct multiple rounds of data generation and refinement, continually improving the quality of their models.
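The flywheel can be summarized as a loop in which each aligned model becomes the next round's data generator. This is a structural sketch only: models are represented by name strings, and `align` is a hypothetical callable standing in for data generation plus SFT and preference tuning.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Round:
    generator: str  # model that produced this round's SFT and preference data
    base: str       # base checkpoint being aligned
    aligned: str    # resulting aligned model


def weak_to_strong(initial_generator: str, bases: list[str],
                   align: Callable[[str, str], str]) -> list[Round]:
    """Each round, the latest aligned model generates data used to align the next base model."""
    history: list[Round] = []
    generator = initial_generator
    for base in bases:
        # `align` stands in for: generate data with `generator`, then run SFT + preference tuning on `base`.
        aligned = align(base, generator)
        history.append(Round(generator, base, aligned))
        generator = aligned  # the newly aligned model becomes the next data generator
    return history


if __name__ == "__main__":
    names = {"340B-Interm-1-Base": "340B-Interm-1-Instruct", "340B-Interm-2-Base": "340B-Interm-2-Chat"}
    for r in weak_to_strong("Mixtral-8x7B-Instruct-v0.1", list(names), lambda base, gen: names[base]):
        print(f"{r.generator} -> data -> {r.base} -> {r.aligned}")
```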

iii) Alignment Algorithms

a) Staged Supervised Fine-tuning

Supervised Fine-tuning (SFT) constitutes the first step of alignment. Conventionally, SFT is performed in a single stage, where the dataset comprises a mixture of samples from all tasks. However, the authors' experimental results suggest that learning multiple behaviors concurrently can sometimes lead to conflicts between them, preventing the model from achieving optimal alignment on all tasks at the same time. The authors therefore split SFT into two stages.

(1) Code SFT

- To improve coding and reasoning capabilities without interfering with other tasks, SFT is first performed purely on coding data.

- To effectively synthesize coding data, the authors develop Genetic Instruct, an approach that mimics evolutionary processes, using self-instruction [6] and WizardCoder mutations [7] to create numerous synthetic samples from a limited number of high-quality seeds.

- They also introduce a fitness function that employs an LLM to assess the correctness and quality of the generated instruction and its solution.
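The evolutionary loop behind Genetic Instruct can be sketched with the LLM calls abstracted away. This is a hypothetical simplification: `mutate` stands in for an LLM applying a self-instruct or WizardCoder-style mutation, `fitness` for the LLM judge, and the population schedule and cap are assumptions rather than the paper's settings.

```python
import random
from typing import Callable

Sample = tuple[str, str]  # (instruction, solution)


def genetic_instruct(seeds: list[Sample],
                     mutate: Callable[[Sample, random.Random], Sample],
                     fitness: Callable[[Sample], bool],
                     generations: int,
                     population_cap: int,
                     rng: random.Random) -> list[Sample]:
    """Grow a population of coding samples from seeds via mutation plus fitness filtering."""
    population = list(seeds)
    for _ in range(generations):
        # Each existing member slot produces one offspring from a randomly chosen parent.
        offspring = [mutate(rng.choice(population), rng) for _ in range(len(population))]
        # Only offspring accepted by the fitness check (an LLM judge in the paper) survive.
        population.extend(child for child in offspring if fitness(child))
        if len(population) >= population_cap:
            return population[:population_cap]
    return population
```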

(2) General SFT

- In the second stage, training proceeds with General SFT on a blended dataset of 200K samples that encompasses a variety of tasks.

- To mitigate the risk of forgetting, the data blend also includes 2% of the code generation samples from the preceding Code SFT stage.
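The anti-forgetting replay amounts to mixing a small slice of Code SFT data back in. A minimal sketch, assuming "2% of the code generation samples" means a random 2% subset of the Code SFT set (it could also be read as code making up 2% of the blend); the function and argument names are illustrative.

```python
import random


def general_sft_blend(general: list[str], code_sft: list[str],
                      code_fraction: float = 0.02, seed: int = 0) -> list[str]:
    """Mix the general SFT set with a random `code_fraction` subset of the Code SFT samples."""
    rng = random.Random(seed)
    k = round(len(code_sft) * code_fraction)
    replay = rng.sample(code_sft, k)  # replayed code samples to mitigate forgetting
    blend = list(general) + replay
    rng.shuffle(blend)  # interleave so code samples are spread through training
    return blend
```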

b) Preference Fine-tuning

The preference fine-tuning stage involves multiple iterations of model improvement, using both Direct Preference Optimization and the authors' new alignment algorithm, Reward-aware Preference Optimization.

(1) Direct Preference Optimization (DPO)

- The DPO algorithm optimizes the policy network to maximize the implicit reward gap between the chosen and rejected responses.

- Empirically, the authors observe that the policy network tends to overfit when trained long enough, and that the improvement of one metric (e.g., MT-Bench) usually comes with the degradation of other metrics (e.g., 0-shot MMLU).

- These issues were mitigated by adding a weighted SFT loss on the chosen responses to the vanilla DPO loss.

- The additional SFT loss helps prevent the policy network from drifting far away from the preference data, especially since the preference data is not generated from the reference policy.
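Per example, the combined objective can be written in terms of summed log-probabilities of each response under the policy π and the reference policy πref: the DPO term is −log σ(β[(log π(yc|x) − log πref(yc|x)) − (log π(yl|x) − log πref(yl|x))]), and the SFT term is the negative log-likelihood of the chosen response. A minimal sketch; the length-normalized SFT term and the defaults for `beta` and `sft_weight` are assumptions, not the paper's hyperparameters.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_sft_loss(policy_chosen: float, policy_rejected: float,
                 ref_chosen: float, ref_rejected: float,
                 chosen_len: int, beta: float = 0.1, sft_weight: float = 1.0) -> float:
    """Vanilla DPO loss plus a weighted SFT (NLL) loss on the chosen response.

    All log-probabilities are summed over the response tokens.
    """
    # Implicit reward gap: how much more the policy (vs. the reference) favors yc over yl.
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    dpo = -log_sigmoid(margin)
    # Per-token negative log-likelihood of the chosen response keeps the policy near the data.
    sft = -policy_chosen / chosen_len
    return dpo + sft_weight * sft
```

Setting `sft_weight=0` recovers plain DPO; raising it trades some preference-margin growth for staying closer to the chosen responses, which is the overfitting mitigation described above.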

(2) Reward-aware Preference Optimization (RPO)

- The majority of the preference data is synthetic, and its preference rank is judged according to the reward from Nemotron-4-340B-Reward.

- While DPO only uses the binary order between two responses, the difference between the rewards contains more information.

- Using the checkpoint trained with DPO as the initialization and reference policy, the model is further trained with RPO.

- RPO attempts to approximate the reward gap using the implicit reward (Rafailov et al., 2024) defined by the policy network. Specifically, this leads to a new loss function, in which π is the policy network to train; πref is the reference policy; (x, yc, yl) corresponds to the prompt, chosen response, and rejected response; and r⋆(x, yc), r⋆(x, yl) are the rewards of the chosen and rejected responses given by the reward model, respectively.
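The loss equation itself did not survive in this write-up. As we read the paper, it has the form L_rpo(x, yc, yl) = D[ rπ(x, yc) − rπ(x, yl) ‖ η(r⋆(x, yc) − r⋆(x, yl)) ], where rπ(x, y) = β log(π(y|x)/πref(y|x)) is the implicit reward, η is a scaling hyperparameter, and D is a distance between the two gaps; the paper's experiments use D[a‖b] = σ(b) log(σ(b)/σ(a)) + (1 − σ(b)) log((1 − σ(b))/(1 − σ(a))), the KL divergence between two Bernoulli distributions. Treat this as a reconstruction to check against the paper. The sketch below works on summed sequence log-probabilities and uses placeholder values for `beta` and `eta`.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def rpo_distance(a: float, b: float) -> float:
    """D[a || b] = KL(Bernoulli(sigmoid(b)) || Bernoulli(sigmoid(a))).

    Uses log(1 - sigmoid(z)) = log_sigmoid(-z) to stay numerically stable.
    """
    p = math.exp(log_sigmoid(b))
    return p * (log_sigmoid(b) - log_sigmoid(a)) + (1.0 - p) * (log_sigmoid(-b) - log_sigmoid(-a))


def rpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float,
             reward_chosen: float, reward_rejected: float,
             beta: float = 1.0, eta: float = 1.0) -> float:
    """Match the policy's implicit reward gap to the scaled reward-model gap."""
    implicit_gap = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    target_gap = eta * (reward_chosen - reward_rejected)
    return rpo_distance(implicit_gap, target_gap)
```

Unlike the DPO term, this loss is minimized (at zero) when the implicit gap equals the target gap rather than growing without bound, which is how RPO uses the reward difference instead of only the binary order.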

iv) Instruct Model Evaluation

a) Automated Benchmarks

- The table below shows evaluation results of instruct models on automatic benchmarks. Bold indicates the top score among all models, while underlined indicates the top score among open-source models.

- The results show that Nemotron-4-340B-Instruct is competitive with currently available open access models.

- The table below shows evaluation results of each intermediate model in the alignment process, where the last column corresponds to Nemotron-4-340B-Instruct.

b) Human Evaluation

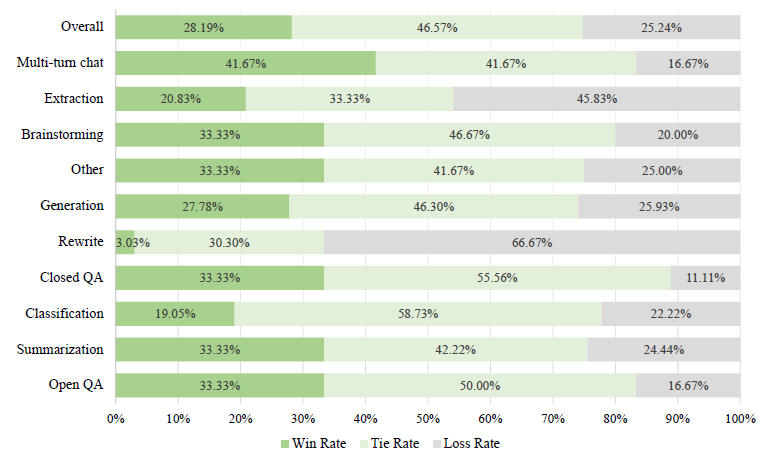

- The figure below shows human evaluations comparing Nemotron-4-340B-Instruct with GPT-4-1106-preview across ten task categories. The overall Win/Tie/Loss rate is plotted, as well as the rate for each category.

- With the exception of extraction and rewrite, win rates for Nemotron-4-340B-Instruct are comparable to or better than GPT-4-1106-preview, with strong results on multi-turn chat.

- The table below shows human evaluation results regarding the perception of response length. Underlined indicates the model with the higher rate of perceived appropriate length.

- The results show that annotators consider Nemotron-4-340B-Instruct to have a slightly higher rate of appropriate response length (79.41% vs 74.02%) compared to GPT-4-1106-preview.

c) Safety Evaluations

- To evaluate the safety of the model, the authors employ AEGIS [8], a high-quality content safety solution and evaluation benchmark from NVIDIA.

- The table below shows the percentage of unsafe responses over all model responses in the AEGIS safety evaluations. Lower is better.

- The results demonstrate that Nemotron-4-340B-Instruct has a very low unsafe response rate.

- The paper presents a family of Nemotron-4 340B models: Nemotron-4-340B-Base, Nemotron-4-340B-Instruct, and Nemotron-4-340B-Reward.

- It provides comprehensive details about the synthetic data generation pipeline and illustrates its effectiveness.

Paper: https://d1qx31qr3h6wln.cloudfront.net/publications/Nemotron_4_340B_8T_0.pdf

Alignment data: https://huggingface.co/datasets/nvidia/HelpSteer2