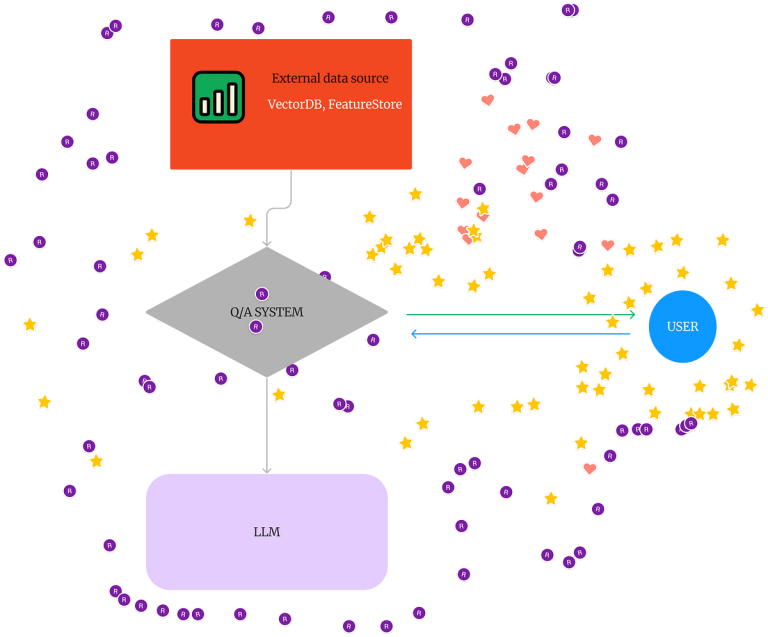

Let's take a look at the picture above:

In the example above, the LLM lacks domain-specific information about the Hamster mini app. It tried to answer the question to the best of its ability, but it was flatly wrong.

WE NEED RAG FOR UP-TO-DATE SPECIFIC INFORMATION:

LLMs are stuck in time: an LLM can only answer from the data it was trained on, which in this case ends in 2021. It cannot provide answers about anything after that point. The dataset used to train an LLM is static, so if you ask ChatGPT a question that falls outside its training dataset, it cannot give a reliable answer.

To get up-to-date information from an LLM, RAG fetches relevant data from your external database at generation time and passes it to the model along with the question. This reduces hallucination.
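The retrieval step can be sketched in a few lines of Python. This is a minimal illustration only, assuming a tiny hypothetical in-memory document store and plain bag-of-words cosine similarity in place of the learned embeddings and vector database a production RAG system would typically use. The documents, their IDs, and the `retrieve` function are made up for this example.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory "external database". In a real system this would
# usually be a vector database or search index holding your own documents.
DOCUMENTS = {
    "faq-hours": "Our store opens at 9am and closes at 6pm on weekdays.",
    "faq-returns": "Customers can return items within 30 days with a receipt.",
    "faq-shipping": "We ship orders within 2 business days of payment.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return the k (doc_id, text) pairs most similar to the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(text))), doc_id, text)
        for doc_id, text in documents.items()
    ]
    # Highest similarity first; the top k documents become the LLM's context.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(doc_id, text) for _, doc_id, text in scored[:k]]


if __name__ == "__main__":
    for doc_id, text in retrieve("When does the store open?", DOCUMENTS, k=1):
        print(doc_id, "->", text)
```

Because retrieval happens at question time, updating the documents immediately changes what the model sees, with no retraining involved.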

Secondly, LLMs cannot answer specific questions about your business. Although they do quite good work in general, they cannot know the exact details of your business or the context it operates in, such as customer needs and store requirements.

RAG (Retrieval-Augmented Generation) solves this problem by providing context and factual information to the LLM at generation time.
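"Providing context at generation time" usually means inserting the retrieved text into the prompt before it is sent to the model. The sketch below shows one way to assemble such an augmented prompt. The instruction wording and the `build_augmented_prompt` name are assumptions for illustration (there is no single standard template), and no actual LLM is called here.

```python
def build_augmented_prompt(question: str, context_chunks: list[str]) -> str:
    """Combine retrieved context and the user's question into one LLM prompt."""
    # Each retrieved chunk becomes a bullet in the context section.
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    # Hypothetical snippets, standing in for the output of a retrieval step.
    chunks = [
        "Our store opens at 9am and closes at 6pm on weekdays.",
        "We ship orders within 2 business days of payment.",
    ]
    print(build_augmented_prompt("When does the store open?", chunks))
```

Telling the model to answer only from the supplied context, and to admit when the context is insufficient, is a common way to steer it toward the business's own facts instead of guessing.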

As discussed earlier, an LLM knows nothing about the domain-specific details of a business. Also, LLMs are trained on huge amounts of data, which makes it very difficult for them to cite their sources. RAG solves this problem by giving the LLM the ability to cite the sources of its answers.
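Citation works because the retrieved passages are known before the model answers, so each one can carry a source label. A minimal sketch, assuming the model has been instructed to cite passages with markers like `[1]`; this is a common convention rather than a guarantee, since a model may omit or invent markers, which is why out-of-range numbers are ignored below. The file names, the answer string, and both function names are hypothetical.

```python
import re


def number_sources(chunks: list[tuple[str, str]]) -> str:
    """Render (source_name, text) pairs as numbered context lines like '[1] text'."""
    return "\n".join(f"[{i}] {text}" for i, (_, text) in enumerate(chunks, start=1))


def cited_sources(answer: str, chunks: list[tuple[str, str]]) -> list[str]:
    """Map citation markers such as [2] in the model's answer back to source names."""
    cited = []
    for match in re.findall(r"\[(\d+)\]", answer):
        index = int(match) - 1
        # Ignore markers that don't correspond to a retrieved chunk.
        if 0 <= index < len(chunks):
            name = chunks[index][0]
            if name not in cited:
                cited.append(name)
    return cited


if __name__ == "__main__":
    chunks = [
        ("returns-policy.pdf", "Customers can return items within 30 days."),
        ("shipping.md", "We ship within 2 business days."),
    ]
    print(number_sources(chunks))
    # Hypothetical model output; a real answer would come from the LLM.
    answer = "You have 30 days to return an item [1]."
    print(cited_sources(answer, chunks))  # ['returns-policy.pdf']
```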

Building a foundation model is expensive.

OpenAI's Sam Altman estimated that training the model behind ChatGPT cost $100 million.

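Not every company has the capacity to fund such projects. On top of that, there are issues with scarce talent and data labelling, as well as a number of technical challenges. RAG avoids much of this cost because it reuses an existing LLM and supplies the company's own data at query time instead of training a new model from scratch.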

Some common use cases of RAG include:

- Question-and-answer chatbots: When you add RAG to an LLM, it provides contextual answers drawn from the company's documents, thereby reducing hallucination.

- Search augmentation: When you incorporate an LLM into a search engine, it helps users find relevant results very quickly.

- Ask questions about your data: With RAG, it's easy to ask questions about your own data, such as internal company documents.