The authors present the image classification results achieved by their different configurations on the ImageNet dataset.

The authors start by evaluating the performance of individual ConvNet models at a single scale. The first observation is that local response normalisation does not improve on model A, which has no normalisation layers, and so the authors do not use normalisation in the deeper architectures.

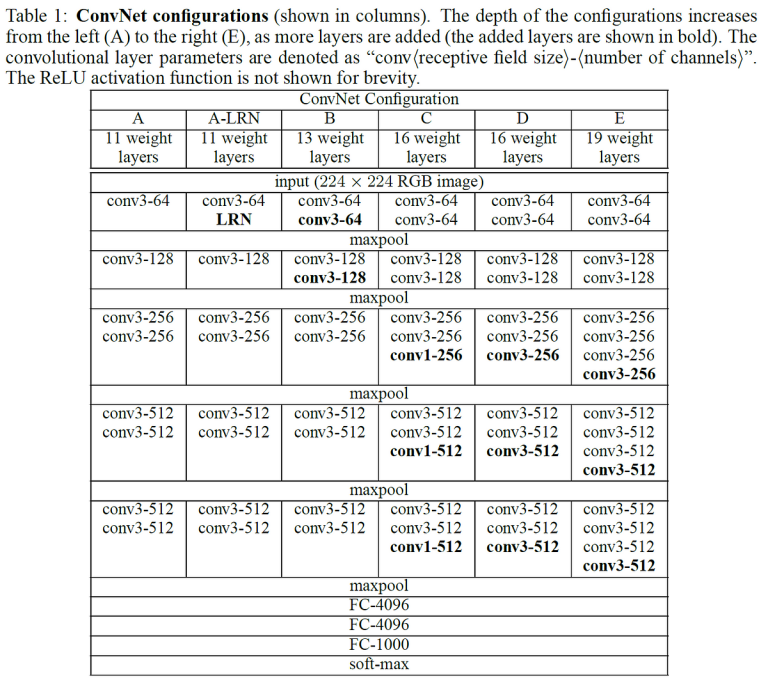

The second observation is that the classification error decreases as ConvNet depth increases. An interesting point is that, despite having the same depth, model C (which contains 1×1 conv layers) performs worse than model D. This indicates that while the additional non-linearity does help (C performs better than B), it is also important to capture spatial context (D is better than C). The error rates saturate at 19 layers; however, even deeper models might be beneficial for larger datasets.

Another interesting insight is shown above. The authors also note that scale jittering at training time leads to significantly better results than training on images with a fixed scale, even though a single scale is used at test time. This confirms that training set augmentation by scale jittering is indeed helpful for capturing multi-scale image statistics.
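To make scale jittering at training time concrete, here is a minimal sketch of how one jittered training crop could be planned. The function names are hypothetical, and the scale range of 256 to 512 and the 224-pixel crop are assumed defaults, not values stated in this summary. The code only computes sizes and crop coordinates; the actual resizing and cropping would be done by an image library.

```python
import random


def sample_training_scale(s_min=256, s_max=512, rng=random):
    """Draw the training scale S (length of the shorter side) uniformly from [s_min, s_max]."""
    return rng.randint(s_min, s_max)


def rescaled_size(width, height, scale):
    """Return (new_width, new_height) with the shorter side equal to `scale`, keeping aspect ratio."""
    factor = scale / min(width, height)
    # max(...) guards against rounding ever pushing a side below `scale`.
    return max(scale, round(width * factor)), max(scale, round(height * factor))


def random_crop_box(width, height, crop=224, rng=random):
    """Pick a random crop x crop window as (left, top, right, bottom)."""
    left = rng.randint(0, width - crop)
    top = rng.randint(0, height - crop)
    return left, top, left + crop, top + crop


def jittered_crop_plan(width, height, s_min=256, s_max=512, crop=224, rng=random):
    """Sample a scale, rescale the image size to it, then choose a random crop window."""
    scale = sample_training_scale(s_min, s_max, rng)
    new_w, new_h = rescaled_size(width, height, scale)
    return scale, (new_w, new_h), random_crop_box(new_w, new_h, crop, rng)
```

Training at a fixed scale corresponds to setting `s_min` equal to `s_max`, so the same routine covers both regimes being compared.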

Next, the authors experiment with scale jittering at test time. Their results indicate that scale jittering at training time, combined with evaluating the test image at multiple scales, leads to the best performance.
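This summary does not say which test scales are used, so the following is only an illustrative sketch. It assumes a rule along the lines of the one described in the original paper: three scales close to the training scale for a model trained at a fixed scale, and the two ends plus the midpoint of the range for a model trained with jittering. The function name `choose_test_scales` is hypothetical.

```python
def choose_test_scales(train_scale):
    """Pick test scales for a model.

    `train_scale` is an int for fixed-scale training, or a (s_min, s_max)
    tuple for training with scale jittering. The rule is an assumption.
    """
    if isinstance(train_scale, tuple):
        s_min, s_max = train_scale
        # Jittered training: cover the whole range the model has seen.
        return [s_min, (s_min + s_max) // 2, s_max]
    # Fixed-scale training: stay close to the single training scale.
    return [train_scale - 32, train_scale, train_scale + 32]
```

The class posteriors obtained at each chosen scale would then be averaged to produce the final prediction for the image.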

Next, the authors compare dense ConvNet evaluation with multi-crop evaluation. They also assess the combination of the two by averaging their softmax outputs. They note that using multiple crops performs slightly better than dense evaluation, and that the two approaches are complementary, since their combination outperforms each of them.

The authors now combine the outputs of several models by averaging their softmax class posteriors, which boosts accuracy further. They form an ensemble of only the two best-performing models, which leads to their best accuracy.
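Averaging softmax class posteriors can be sketched in a few lines. This is a minimal, dependency-free illustration with hypothetical function names and made-up logits, not the authors' implementation. The same averaging step applies whether the posteriors come from different models, different test scales, or dense and multi-crop evaluation.

```python
import math


def softmax(logits):
    """Convert raw class scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def average_posteriors(posteriors):
    """Average several class-posterior vectors element-wise."""
    n = len(posteriors)
    return [sum(column) / n for column in zip(*posteriors)]


def predict(posteriors):
    """Return the index of the class with the highest averaged posterior."""
    avg = average_posteriors(posteriors)
    return max(range(len(avg)), key=avg.__getitem__)


# Two hypothetical models scoring the same image over three classes.
model_a = softmax([2.0, 1.0, 0.0])  # on its own, prefers class 0
model_b = softmax([0.0, 3.0, 0.0])  # on its own, strongly prefers class 1
ensemble_class = predict([model_a, model_b])
```

In this toy case the second model is far more confident than the first, so the averaged posterior favours class 1 even though the first model alone would pick class 0.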

They also outperform earlier models that achieved the best results in ILSVRC-2012 and ILSVRC-2013, and achieve performance comparable to GoogLeNet.