In today's digital age, access to credit is essential, but manually analyzing credit card applications can be time-consuming and error-prone. In this project, I used machine learning to build a model that predicts credit card approval with high accuracy, potentially helping lenders make faster and more informed decisions.

Goals and Approach:

- My goal was to build a model that could predict whether an applicant would be approved for a credit card based on their data. I started by exploring the data and identifying patterns and trends. Then I preprocessed the data by handling missing values and outliers and performing the necessary transformations. Next, I trained various machine learning models, including Logistic Regression, Random Forest, and Gradient Boosting. Finally, I evaluated the models on their performance metrics and selected the best one.

Achievements and Challenges:

- One key finding from my data analysis was that annual income, family members, and employment duration were the most important features for predicting approval. I used techniques like SMOTE to address imbalanced data, where high-risk applicants were far fewer. Interestingly, during an economic recession, prioritizing recall (catching good applicants) was more important than precision (avoiding bad applicants), so I chose Gradient Boosting as the best model based on its recall score.
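To make the recall versus precision trade-off concrete, here is a minimal sketch that computes both metrics from confusion-matrix counts. The function name `precision_recall` and the counts are hypothetical and chosen only for illustration; they are not results from this project.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from confusion-matrix counts."""
    # precision: of everything predicted positive, how much was truly positive
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # recall: of everything truly positive, how much the model caught
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Hypothetical counts, not taken from the project's models
precision, recall = precision_recall(tp=80, fp=20, fn=10)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

A model tuned for recall accepts more false positives (lower precision) in exchange for missing fewer true positives.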

Impact and Skills:

- My model achieved an accuracy of 88.48%, potentially improving lenders' ability to assess creditworthiness efficiently. This project allowed me to showcase my skills in data analysis, machine learning, and feature engineering. I also learned valuable lessons about ethical considerations in AI models and the importance of tailoring them to specific business contexts.

Here we follow these steps:

- Exploratory data analysis (EDA)

- Feature engineering

- Feature selection

- Data preprocessing

- Model training

- Model selection

1. Import all the required libraries

import numpy as np

import pandas as pd

import matplotlib

import matplotlib.pyplot as plt

import seaborn as sns

import missingno as msno

import warnings

from pandas.errors import SettingWithCopyWarning

warnings.simplefilter(action="ignore", category=SettingWithCopyWarning)

from pathlib import Path

import os

%matplotlib inline

from scipy.stats import probplot, chi2_contingency, chi2, stats

from sklearn.model_selection import train_test_split, GridSearchCV, RandomizedSearchCV, cross_val_score, cross_val_predict

from sklearn.base import BaseEstimator, TransformerMixin

from sklearn.pipeline import Pipeline

from sklearn.calibration import CalibratedClassifierCV

from sklearn.compose import ColumnTransformer

from sklearn.preprocessing import OneHotEncoder, MinMaxScaler, OrdinalEncoder

from sklearn.metrics import ConfusionMatrixDisplay, classification_report, roc_curve, roc_auc_score

from imblearn.over_sampling import SMOTE

from sklearn.linear_model import SGDClassifier, LogisticRegression

from sklearn.svm import SVC

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier, GradientBoostingClassifier, BaggingClassifier, AdaBoostClassifier, ExtraTreesClassifier

from sklearn.naive_bayes import GaussianNB

from sklearn.neighbors import KNeighborsClassifier

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

from sklearn.neural_network import MLPClassifier

from yellowbrick.model_selection import FeatureImportances

import joblib

from sklearn.inspection import permutation_importance

import scikitplot as skplt

2.1.Import dataset

credit_card_data = pd.read_csv("dataset/Credit_card.csv")

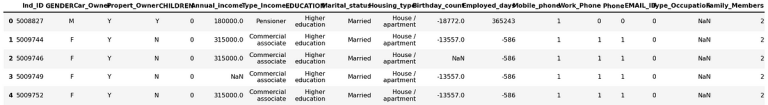

credit_card_label_data = pd.read_csv("dataset/Credit_card_label.csv")credit_card_data.head()

credit_card_label_data.head()

2.2. Merge the target variable ('label') into the original dataset

credit_card_full_data = pd.merge(credit_card_data, credit_card_label_data, on='Ind_ID')

credit_card_full_data.head()

2.3. Rename features

credit_card_full_data = credit_card_full_data.rename(columns={'Birthday_count': 'Age', 'label': 'Is_high_risk'})

credit_card_full_data.head()

2.4. Split the data into training and test sets

Now we'll split credit_card_full_data into a training set and a testing set. We'll use 80% of the data for training and 20% for testing, and store them in the cc_train_original and cc_test_original variables, respectively.

# split the data into train and test datasets
def data_split(df, test_size):
    train_df, test_df = train_test_split(df, test_size=test_size, random_state=42)
    return train_df.reset_index(drop=True), test_df.reset_index(drop=True)

# we set the test size to 0.2, which means the training set is 0.8 of the data
cc_train_original, cc_test_original = data_split(credit_card_full_data, 0.2)
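Because the target is imbalanced, one variation worth considering is a stratified split, which keeps the share of high-risk applicants the same in both sets. The sketch below is an assumption, not what this project does: `data_split_stratified` and the toy frame are hypothetical, and only the `stratify` argument of scikit-learn's `train_test_split` is added to the original function.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Toy frame standing in for credit_card_full_data (values are made up)
toy_df = pd.DataFrame({
    "Annual_income": range(100),
    "Is_high_risk": [1] * 10 + [0] * 90,
})

def data_split_stratified(df, test_size, target="Is_high_risk"):
    # stratify keeps the proportion of each target class equal in both splits
    train_df, test_df = train_test_split(
        df, test_size=test_size, random_state=42, stratify=df[target]
    )
    return train_df.reset_index(drop=True), test_df.reset_index(drop=True)

toy_train, toy_test = data_split_stratified(toy_df, 0.2)
print(toy_train["Is_high_risk"].mean(), toy_test["Is_high_risk"].mean())
```

With an unstratified split, a small test set can by chance end up with noticeably more or fewer high-risk rows than the training set.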

Shape of training data

cc_train_original.shape

(1238, 19)

- Here we have 19 features (columns) and 1238 observations (rows) in the training dataset.

Shape of testing data

cc_test_original.shape

(310, 19)

- Here we have 19 features (columns) and 310 observations (rows) in the testing dataset.

Save train and test data

cc_train_original.to_csv('dataset/train.csv',index=False)

cc_test_original.to_csv('dataset/test.csv',index=False)

3.1. Exploring the data

credit_card_full_data.head()

Get features

credit_card_full_data.columns

Index(['Ind_ID', 'GENDER', 'Car_Owner', 'Propert_Owner', 'CHILDREN',
       'Annual_income', 'Type_Income', 'EDUCATION', 'Marital_status',
       'Housing_type', 'Age', 'Employed_days', 'Mobile_phone', 'Work_Phone',
       'Phone', 'EMAIL_ID', 'Type_Occupation', 'Family_Members',
       'Is_high_risk'],
      dtype='object')

Get info

credit_card_full_data.info()

<class 'pandas.core.frame.DataFrame'>
RangeIndex: 1548 entries, 0 to 1547
Data columns (total 19 columns):
 #   Column           Non-Null Count  Dtype
---  ------           --------------  -----
 0   Ind_ID           1548 non-null   int64
 1   GENDER           1541 non-null   object
 2   Car_Owner        1548 non-null   object
 3   Propert_Owner    1548 non-null   object
 4   CHILDREN         1548 non-null   int64
 5   Annual_income    1525 non-null   float64
 6   Type_Income      1548 non-null   object
 7   EDUCATION        1548 non-null   object
 8   Marital_status   1548 non-null   object
 9   Housing_type     1548 non-null   object
 10  Age              1526 non-null   float64
 11  Employed_days    1548 non-null   int64
 12  Mobile_phone     1548 non-null   int64
 13  Work_Phone       1548 non-null   int64
 14  Phone            1548 non-null   int64
 15  EMAIL_ID         1548 non-null   int64
 16  Type_Occupation  1060 non-null   object
 17  Family_Members   1548 non-null   int64
 18  Is_high_risk     1548 non-null   int64
dtypes: float64(2), int64(9), object(8)
memory usage: 229.9+ KB

Describe

- The describe() function gives us statistics about the numerical features in the dataset: each feature's count, mean, standard deviation, quartiles (25%, 50%, 75%), and minimum and maximum values.

credit_card_full_data.describe()

Missing values

- Find the missing values

- We also use Missingno to visualize the missing values per feature with its matrix function

credit_card_full_data.isnull().sum()

Ind_ID               0
GENDER               7
Car_Owner            0
Propert_Owner        0
CHILDREN             0
Annual_income       23
Type_Income          0
EDUCATION            0
Marital_status       0
Housing_type         0
Age                 22
Employed_days        0
Mobile_phone         0
Work_Phone           0
Phone                0
EMAIL_ID             0
Type_Occupation    488
Family_Members       0
Is_high_risk         0
dtype: int64

msno.matrix(credit_card_full_data)

plt.show()

- Here we can see that the GENDER, Annual_income, Age and Type_Occupation features have missing values. Thin white lines represent missing values.
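Raw counts are easier to compare across features when expressed as a share of rows. The sketch below shows one way to build such a summary with pandas; the toy frame and the name `missing_summary` are assumptions for illustration, and the same expression could be applied to credit_card_full_data.

```python
import numpy as np
import pandas as pd

# Toy frame with a few gaps (values are made up)
toy_df = pd.DataFrame({
    "GENDER": ["F", "M", None, "F"],
    "Annual_income": [135000.0, np.nan, 180000.0, 112500.0],
    "CHILDREN": [0, 1, 0, 2],
})

# isnull().mean() is the fraction of missing rows per column
missing_summary = pd.DataFrame({
    "Missing count": toy_df.isnull().sum(),
    "Missing (%)": toy_df.isnull().mean() * 100,
}).sort_values("Missing (%)", ascending=False)

print(missing_summary)
```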

Bar plot

- We can also use the bar() function to get a bar plot of the non-null count per feature, which gives a clear picture of how many values are missing

msno.bar(credit_card_full_data)

plt.show()

3.2. Create functions to analyze each feature (univariate analysis)

3.2.1. Value count frequency function

- The first function, value_cnt_freq, calculates the count of each class in a feature along with its frequency (normalized to a scale of 100)

def value_cnt_freq(df, feature):
    # get the value counts of the feature
    ftr_value_cnt = df[feature].value_counts()
    # normalize the value counts to a scale of 100
    ftr_value_cnt_norm = df[feature].value_counts(normalize=True) * 100
    # concatenate the value counts with the normalized value counts column-wise
    ftr_value_cnt_concat = pd.concat([ftr_value_cnt, ftr_value_cnt_norm], axis=1)
    # give the columns names
    ftr_value_cnt_concat.columns = ['Count', 'Frequency (%)']
    # return the dataframe
    return ftr_value_cnt_concat
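As a quick check of what value_cnt_freq returns, here is a small usage sketch on a made-up column. The toy frame and the name `gender_summary` are assumptions; the function body is repeated only so the snippet runs on its own.

```python
import pandas as pd

def value_cnt_freq(df, feature):
    # counts and percentage frequencies side by side
    counts = df[feature].value_counts()
    freqs = df[feature].value_counts(normalize=True) * 100
    out = pd.concat([counts, freqs], axis=1)
    out.columns = ["Count", "Frequency (%)"]
    return out

# Made-up data: three 'F' rows and one 'M' row
toy_df = pd.DataFrame({"GENDER": ["F", "F", "F", "M"]})
gender_summary = value_cnt_freq(toy_df, "GENDER")
print(gender_summary)
```

Note that value_counts() skips missing values by default, so the frequencies are relative to the non-null rows.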

3.2.2. Feature info function

- Create a feature_info function that returns the description, the datatype, statistics, and the value counts and frequencies.

def feature_info(df, feature):
    # if the feature is Age
    if feature == 'Age':
        # turn the day counts into positive numbers and divide by 365.25 to convert to years
        print('Description:\n{}'.format((np.abs(df[feature])/365.25).describe()))
        print('*'*40)
        print('Data type:{}'.format(df[feature].dtype))
    # note: this is a separate if, so 'Age' also runs the else branch below
    # if the feature is Employed_days
    if feature == 'Employed_days':
        # keep only the negative values (applicants who are employed)
        employment_days_no_ret = df['Employed_days'][df['Employed_days'] < 0]
        # change negative to positive values and convert to years
        employment_days_no_ret_yrs = np.abs(employment_days_no_ret) /365.25
        print('Description:\n{}'.format((employment_days_no_ret_yrs).describe()))
        print('*'*40)
        # print the datatype
        print('Data type:{}'.format(employment_days_no_ret.dtype))
    else:
        # get the description
        print('Description:\n{}'.format(df[feature].describe()))
        # print separators
        print('*'*40)
        # print the datatype
        print('Data type:\n{}'.format(df[feature].dtype))
        # print separators
        print('*'*40)
        # call the value_cnt_freq function
        value_cnt = value_cnt_freq(df,feature)
        # print the result
        print('Value count:\n{}'.format(value_cnt))

3.2.3. Bar plot function

- We create a function to generate a bar plot.

def bar_plot(df,feature):
    if feature == 'Marital_status' or feature == 'Housing_type' or feature == 'Type_Occupation' or feature == 'Type_Income' or feature == 'EDUCATION':
        fig, ax = plt.subplots(figsize=(6,10))
        sns.barplot(x=value_cnt_freq(df,feature).index,y=value_cnt_freq(df,feature).values[:,0])
        # set the plot's tick labels to the index from the value_cnt_freq function, rotated by 45 degrees
        ax.set_xticks(range(len(value_cnt_freq(df, feature).index)))
        ax.set_xticklabels(labels = value_cnt_freq(df,feature).index,rotation=45,ha='right')
        # give the X-axis the same label as the feature name
        plt.xlabel('{}'.format(feature))
        # give the Y-axis the label "Count"
        plt.ylabel('Count')
        # give the plot a title
        plt.title('{} count'.format(feature))
        # show the plot
        return plt.show()
    else:
        fig, ax = plt.subplots(figsize=(6,10))
        sns.barplot(x=value_cnt_freq(df,feature).index,y=value_cnt_freq(df,feature).values[:,0])
        plt.xlabel('{}'.format(feature))
        plt.ylabel('Count')
        plt.title('{} count'.format(feature))
        return plt.show()

3.2.4. Pie chart function

- We create a function to generate a pie chart

def pie_chart_plot(df, feature):
    if feature == 'Housing_type' or feature == 'EDUCATION':
        # call the value_cnt_freq function
        ratio_size = value_cnt_freq(df, feature)
        # get how many classes we have
        ratio_size_len = len(ratio_size.index)
        ratio_list = []
        # loop over every class
        for i in range(ratio_size_len):
            # append the frequency of each class to the list
            ratio_list.append(ratio_size.iloc[i]['Frequency (%)'])
        # create subplot
        fig, ax = plt.subplots(figsize=(6, 6))
        plt.pie(ratio_list, startangle=90, autopct='%1.2f%%', wedgeprops={'edgecolor': 'black'})
        # add title
        plt.title('Pie chart of {}'.format(feature))
        # add legend (class names go in the legend instead of on the wedges)
        plt.legend(loc='best', labels=ratio_size.index)
        # equal axes ensure the pie is drawn as a circle
        plt.axis('equal')
        return plt.show()
    # for other features
    else:
        ratio_size = value_cnt_freq(df, feature)
        ratio_size_len = len(ratio_size.index)
        ratio_list = []
        for i in range(ratio_size_len):
            ratio_list.append(ratio_size.iloc[i]['Frequency (%)'])
        # create subplot
        fig, ax = plt.subplots(figsize=(8, 8))
        plt.pie(ratio_list, labels=ratio_size.index, autopct='%1.2f%%', startangle=90, wedgeprops={'edgecolor': 'black'})
        # add title
        plt.title('Pie chart of {}'.format(feature))
        # add legend
        plt.legend(loc='best')
        # equal axes ensure the pie is drawn as a circle
        plt.axis('equal')
        # show the plot
        return plt.show()

3.2.5. Box plot function

- We create a function to generate a box plot.

def box_plot(df,feature):
    if feature == 'Age':
        fig, ax = plt.subplots(figsize = (2,8))
        # convert the negative day counts to positive years
        sns.boxplot(y = np.abs(df[feature])/365.25)
        plt.title('{} distribution(Boxplot)'.format(feature))
        return plt.show()
    if feature == 'CHILDREN':
        fig, ax = plt.subplots(figsize=(2,8))
        sns.boxplot(y=df[feature])
        plt.title('{} distribution(Boxplot)'.format(feature))
        # use np.arange(start, stop, step) to place the Y ticks from 0 up to the max number of children in steps of 1
        plt.yticks(np.arange(0,df[feature].max(),1))
        return plt.show()
    if feature == 'Employed_days':
        fig, ax = plt.subplots(figsize=(2,8))
        employment_days_no_ret = df['Employed_days'][df['Employed_days'] < 0]
        # change negative to positive values and convert to years
        employment_days_no_ret_yrs = np.abs(employment_days_no_ret) /365.25
        # create boxplot
        sns.boxplot(y = employment_days_no_ret_yrs)
        plt.title('{} distribution(Boxplot)'.format(feature))
        # set y-axis ticks from 0 to the max value in steps of 2
        plt.yticks(np.arange(0,employment_days_no_ret_yrs.max(),2))
        return plt.show()
    if feature == 'Annual_income':
        fig, ax = plt.subplots(figsize=(2,8))
        sns.boxplot(y=df[feature])
        plt.title('{} distribution(Boxplot)'.format(feature))
        # format y-axis ticks as integers with thousands separators
        ax.get_yaxis().set_major_formatter(
            matplotlib.ticker.FuncFormatter(lambda x, p: format(int(x), ',')))
        return plt.show()
    else:
        fig, ax = plt.subplots(figsize=(2,8))
        sns.boxplot(y=df[feature])
        plt.title('{} distribution(Boxplot)'.format(feature))
        return plt.show()

3.2.6. Histogram function

- We create a function to generate a histogram.

def hist_plot(df, feature, the_bins = 50):
    if feature == 'Age':
        fig, ax = plt.subplots(figsize = (18,10))
        # convert the negative day counts to positive years
        sns.histplot(np.abs(df[feature])/365.25,bins=the_bins,kde=True)
        plt.title('{} distribution'.format(feature))
        return plt.show()
    elif feature == 'Annual_income':
        fig, ax = plt.subplots(figsize=(18,10))
        sns.histplot(df[feature], bins = the_bins, kde = True)
        # format x-axis ticks as integers with thousands separators
        ax.get_xaxis().set_major_formatter(
            matplotlib.ticker.FuncFormatter(lambda x, p: format(int(x), ',')))
        plt.title('{} distribution'.format(feature))
        return plt.show()
    elif feature == 'Employed_days':
        fig, ax = plt.subplots(figsize=(18,10))
        employment_days_no_ret = df['Employed_days'][df['Employed_days'] < 0]
        # change negative to positive values and convert to years
        employment_days_no_ret_yrs = np.abs(employment_days_no_ret) /365.25
        sns.histplot(employment_days_no_ret_yrs,bins=the_bins,kde=True)
        plt.title('{} distribution'.format(feature))
        return plt.show()
    else:
        fig, ax = plt.subplots(figsize=(18,10))
        sns.histplot(df[feature],bins=the_bins,kde=True)
        plt.title('{} distribution'.format(feature))
        return plt.show()

3.2.7. Low vs high risk box plot function

- This function plots two box plots: one for low-risk (good client) applicants and the other for high-risk (bad client) applicants.

def low_vs_high_risk_box_plot(df, feature):
    if feature == 'Age':
        print(np.abs(df.groupby('Is_high_risk')[feature].mean()/365.25))
        fig, ax = plt.subplots(figsize=(5,8))
        sns.boxplot(y=np.abs(df[feature])/365.25,x=df['Is_high_risk'])
        # add ticks to the X axis
        plt.xticks(ticks=[0,1],labels=['no','yes'])
        plt.title('High risk grouped by age')
        return plt.show()
    if feature == 'Annual_income':
        print(np.abs(df.groupby('Is_high_risk')[feature].mean()))
        fig, ax = plt.subplots(figsize=(5,8))
        sns.boxplot(y=np.abs(df[feature]),x=df['Is_high_risk'])
        plt.xticks(ticks=[0,1],labels=['no','yes'])
        ax.get_yaxis().set_major_formatter(
            matplotlib.ticker.FuncFormatter(lambda x, p: format(int(x), ',')))
        plt.title('High risk grouped by {}'.format(feature))
        return plt.show()
    if feature == 'Employed_days':
        # keep only applicants who are employed (negative Employed_days)
        employment_no_ret = df['Employed_days'][df['Employed_days'] < 0]
        employment_no_ret_idx = employment_no_ret.index
        employment_days_no_ret_yrs = np.abs(employment_no_ret)/365.25
        # extract the employed applicants and keep only the Employed_days and Is_high_risk columns
        # (uses the df argument and label-based .loc rather than the global cc_train_original)
        employment_no_ret_df = df.loc[employment_no_ret_idx][['Employed_days','Is_high_risk']]
        # mean employment length grouped by risk
        employment_no_ret_is_high_risk = employment_no_ret_df.groupby('Is_high_risk')['Employed_days'].mean()
        print(np.abs(employment_no_ret_is_high_risk)/365.25)
        fig, ax = plt.subplots(figsize=(5,8))
        sns.boxplot(y= employment_days_no_ret_yrs,x=df['Is_high_risk'])
        plt.xticks(ticks=[0,1],labels=['no','yes'])
        plt.title('High vs low risk grouped by {}'.format(feature))
        return plt.show()
    else:
        print(np.abs(df.groupby('Is_high_risk')[feature].mean()))
        fig, ax = plt.subplots(figsize=(5,8))
        sns.boxplot(y=np.abs(df[feature]),x=df['Is_high_risk'])
        plt.xticks(ticks=[0,1],labels=['no','yes'])
        plt.title('High risk grouped by {}'.format(feature))
        return plt.show()

3.2.8. Low vs high risk bar plot

- We create a function for a bar plot that shows the number of high-risk applicants in each category of a feature.

def low_high_risk_bar_plot(df, feature):
    # group by the specified feature and sum the high-risk applicants
    is_high_risk_grp = df.groupby(feature)['Is_high_risk'].sum()
    # sort in descending order
    is_high_risk_grp_srt = is_high_risk_grp.sort_values(ascending=False)
    # create a bar plot
    fig, ax = plt.subplots(figsize=(6, 10))
    sns.barplot(x=is_high_risk_grp_srt.index, y=is_high_risk_grp_srt.values)
    # set the ticks and labels after creating the plot
    ax.set_xticks(range(len(is_high_risk_grp_srt)))
    ax.set_xticklabels(labels=is_high_risk_grp_srt.index, rotation=45, ha='right')
    plt.ylabel('Count')
    plt.title(f'High risk applicants count grouped by {feature}')
    plt.show()

# Example usage:
# low_high_risk_bar_plot(your_dataframe, 'Marital_status')

Age

feature_info(cc_train_original, 'Age')

Description:
count    1220.000000
mean       43.863197
std        11.521349
min        22.422998
25%        33.952772
50%        42.945927
75%        53.479808
max        68.298426
Name: Age, dtype: float64
****************************************
Data type:float64
Description:
count     1220.000000
mean    -16021.032787
std       4208.172710
min     -24946.000000
25%     -19533.500000
50%     -15686.000000
75%     -12401.250000
max      -8190.000000
Name: Age, dtype: float64
****************************************
Data type:
float64
****************************************
Value count:
          Count  Frequency (%)
Age
-21363.0      5       0.409836
-13557.0      5       0.409836
-18173.0      4       0.327869
-14523.0      4       0.327869
-13682.0      3       0.245902
...         ...            ...
-14661.0      1       0.081967
-8304.0       1       0.081967
-18661.0      1       0.081967
-15429.0      1       0.081967
-18348.0      1       0.081967

[1046 rows x 2 columns]

- We can see that the youngest applicant is 22 years old and the oldest is 68. The mean age is 43.86 and the median age is 42.95.

box_plot(cc_train_original,'Age')

hist_plot(cc_train_original,'Age')

- We plot the histogram with a kernel density estimate. We can see that Age is not normally distributed; it is slightly positively skewed.

low_vs_high_risk_box_plot(cc_train_original, 'Age')

Is_high_risk
0    43.733156
1    44.834892
Name: Age, dtype: float64

- We can see that there is no notable difference in age between applicants who are high risk and those who are not. The mean age is about 43.7 years for the low-risk group and 44.8 years for the high-risk group, which suggests little relationship between age and risk.

Gender

feature_info(cc_train_original, 'GENDER')

Description:
count     1232
unique       2
top          F
freq       778
Name: GENDER, dtype: object
****************************************
Data type:
object
****************************************
Value count:
        Count  Frequency (%)
GENDER
F         778      63.149351
M         454      36.850649

- We can see two unique classes, F (female) and M (male), with 778 and 454 applicants respectively: 63.15% female and 36.85% male.

bar_plot(cc_train_original,'GENDER')

pie_chart_plot(cc_train_original,'GENDER')

Marital_status

feature_info(cc_train_original,'Marital_status')

Description:
count        1238
unique          5
top       Married
freq          848
Name: Marital_status, dtype: object
****************************************
Data type:
object
****************************************
Value count:
                      Count  Frequency (%)
Marital_status
Married                 848      68.497577
Single / not married    183      14.781906
Civil marriage           76       6.138934
Separated                71       5.735057
Widow                    60       4.846527

pie_chart_plot(cc_train_original,'Marital_status')

bar_plot(cc_train_original,'Marital_status')

low_high_risk_bar_plot(cc_train_original,'Marital_status')

- Here we can see there are 5 unique classes. Most applicants are married (68.50%).

- An interesting observation: although there are more separated applicants than widowed ones, widowed applicants account for more high-risk cases than separated ones.

Family_Members

feature_info(cc_train_original,'Family_Members')

Description:
count    1238.000000
mean        2.172052
std         0.968524
min         1.000000
25%         2.000000
50%         2.000000
75%         3.000000
max        15.000000
Name: Family_Members, dtype: float64
****************************************
Data type:
int64
****************************************
Value count:
                Count  Frequency (%)
Family_Members
2                 642      51.857835
1                 264      21.324717
3                 214      17.285945
4                 102       8.239095
5                  14       1.130856
15                  1       0.080775
6                   1       0.080775

box_plot(cc_train_original,'Family_Members')

bar_plot(cc_train_original,'Family_Members')

- We can see this is a numerical feature with a median of 2 family members. Two-member families make up 51.86% (642) of all applicants, followed by single-member families with 21.32% (264).

- In the box plot we can see three outlier values: 5, plus two extreme ones at 6 and 15 members.
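The outliers read off the box plot follow the usual 1.5 × IQR whisker rule, which is seaborn's default for boxplot. As a rough check, the sketch below rebuilds the Family_Members column from the value counts printed above and applies that rule directly; the variable names are hypothetical.

```python
import numpy as np
import pandas as pd

# Rebuild Family_Members from the value counts shown above (value: count)
counts = {2: 642, 1: 264, 3: 214, 4: 102, 5: 14, 15: 1, 6: 1}
family_members = pd.Series(np.repeat(list(counts.keys()), list(counts.values())))

# Quartiles and the 1.5 * IQR fences
q1, q3 = family_members.quantile([0.25, 0.75])
iqr = q3 - q1
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr

# Distinct values that fall outside the fences
is_outlier = (family_members < lower_fence) | (family_members > upper_fence)
outlier_values = sorted(family_members[is_outlier].unique())
print(upper_fence, outlier_values)
```

Any value above the upper fence is drawn as an individual point on the box plot, which is why 5, 6 and 15 stand apart.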

CHILDREN

feature_info(cc_train_original,'CHILDREN')

Description:
count    1238.000000
mean        0.421648
std         0.804142
min         0.000000
25%         0.000000
50%         0.000000
75%         1.000000
max        14.000000
Name: CHILDREN, dtype: float64
****************************************
Data type:
int64
****************************************
Value count:
          Count  Frequency (%)
CHILDREN
0           869      70.193861
1           245      19.789984
2           107       8.642973
3            15       1.211632
14            1       0.080775
4             1       0.080775

box_plot(cc_train_original,'CHILDREN')

bar_plot(cc_train_original,'CHILDREN')

- Here we can see most applicants (70.19%) don't have a child

- There are also three outlier values here: 3, plus two extreme ones at 4 and 14

Housing_type

feature_info(cc_train_original,'Housing_type')

Description:
count                  1238
unique                    6
top       House / apartment
freq                   1107
Name: Housing_type, dtype: object
****************************************
Data type:
object
****************************************
Value count:
                     Count  Frequency (%)
Housing_type
House / apartment     1107      89.418417
With parents            58       4.684976
Municipal apartment     45       3.634895
Rented apartment        18       1.453958
Office apartment         6       0.484653
Co-op apartment          4       0.323102

pie_chart_plot(cc_train_original,'Housing_type')

bar_plot(cc_train_original, 'Housing_type')

- We can see that 89.42% of applicants live in a house / apartment

Annual_income

pd.set_option('display.float_format', lambda x: '%.2f' % x)

feature_info(cc_train_original,'Annual_income')

Description:
count      1222.00
mean     194770.84
std      117728.60
min       33750.00
25%      126000.00
50%      172125.00
75%      225000.00
max     1575000.00
Name: Annual_income, dtype: float64
****************************************
Data type:
float64
****************************************
Value count:
               Count  Frequency (%)
Annual_income
135000.00        135          11.05
112500.00        114           9.33
180000.00        107           8.76
157500.00         95           7.77
225000.00         91           7.45
...              ...            ...
787500.00          1           0.08
175500.00          1           0.08
95850.00           1           0.08
215100.00          1           0.08
382500.00          1           0.08

[104 rows x 2 columns]

box_plot(cc_train_original,'Annual_income')

hist_plot(cc_train_original,'Annual_income')

# bivariate analysis with the target variable
low_vs_high_risk_box_plot(cc_train_original,'Annual_income')

Is_high_risk
0   193525.39
1   204396.43
Name: Annual_income, dtype: float64

- We can see the mean income is 194,770.84, but the mean is pulled up by outliers. The median, 172,125, is a better picture of what a typical applicant makes.

- We can see in the box plot that the distribution is positively skewed

- Low-risk and high-risk applicants have almost the same mean income (193,525.39 vs 204,396.43)
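The gap between mean and median is what a positive skew looks like in numbers. Here is a small sketch on made-up incomes (not the project's data) showing how a single very large value drags the mean up while the median barely moves, and how pandas' `skew()` reports the asymmetry.

```python
import pandas as pd

# Made-up incomes: five typical values and one very large one
incomes = pd.Series([112500, 135000, 157500, 180000, 225000, 1575000])

mean_income = incomes.mean()
median_income = incomes.median()
skewness = incomes.skew()  # positive for a long right tail

print(mean_income, median_income, skewness)
```

The same three calls on `cc_train_original['Annual_income']` would be one way to put a number on the skew seen in the histogram.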

Type_Occupation

feature_info(cc_train_original, 'Type_Occupation')

Description:
count          838
unique          18
top       Laborers
freq           210
Name: Type_Occupation, dtype: object
****************************************
Data type:
object
****************************************
Value count:
                       Count  Frequency (%)
Type_Occupation
Laborers                 210          25.06
Core staff               141          16.83
Managers                 112          13.37
Sales staff               91          10.86
Drivers                   70           8.35
High skill tech staff     51           6.09
Medicine staff            39           4.65
Accountants               37           4.42
Security staff            18           2.15
Cooking staff             17           2.03
Private service staff     15           1.79
Cleaning staff            14           1.67
Secretaries                7           0.84
Low-skill Laborers         5           0.60
Waiters/barmen staff       5           0.60
HR staff                   3           0.36
IT staff                   2           0.24
Realty agents              1           0.12

Type_Occupation_nan_count = cc_train_original['Type_Occupation'].isna().sum()

Type_Occupation_nan_count

400

rows_total_count = cc_train_original.shape[0]

print('The missing value percentage is {:.2f} %'.format(Type_Occupation_nan_count * 100 / rows_total_count))

The missing value percentage is 32.31 %

bar_plot(cc_train_original,'Type_Occupation')

- We can see the most common occupation type is Laborers with 210 (25.06%), followed by Core staff with 141 (16.83%). These percentages are relative to the non-missing rows.

- The feature also has 32.31% missing data

Type_Income

feature_info(cc_train_original, 'Type_Income')

Description:
count        1238
unique          4
top       Working
freq          634
Name: Type_Income, dtype: object
****************************************
Data type:
object
****************************************
Value count:
                      Count  Frequency (%)
Type_Income
Working                 634          51.21
Commercial associate    292          23.59
Pensioner               216          17.45
State servant            96           7.75

bar_plot(cc_train_original,'Type_Income')

pie_chart_plot(cc_train_original,'Type_Income')

- Most applicants are working (51.21%); the second largest group is Commercial associate (23.59%)

EDUCATION

feature_info(cc_train_original,'EDUCATION')

Description:
count                              1238
unique                                5
top       Secondary / secondary special
freq                                820
Name: EDUCATION, dtype: object
****************************************
Data type:
object
****************************************
Value count:
                               Count  Frequency (%)
EDUCATION
Secondary / secondary special    820          66.24
Higher education                 349          28.19
Incomplete higher                 54           4.36
Lower secondary                   14           1.13
Academic degree                    1           0.08

pie_chart_plot(cc_train_original,'EDUCATION')

bar_plot(cc_train_original,'EDUCATION')

- Most applicants (66.24%) have completed secondary / secondary special education

Employed_days

feature_info(cc_train_original,'Employed_days')

Description:
count   1030.00
mean       7.32
std        6.54
min        0.20
25%        2.57
50%        5.30
75%        9.60
max       40.76
Name: Employed_days, dtype: float64
****************************************
Data type:int64

# below, Employed_days is in years
box_plot(cc_train_original,'Employed_days')

# below, Employed_days is in years
hist_plot(cc_train_original,'Employed_days')

# bivariate analysis with the target variable; here 0 means No and 1 means Yes
low_vs_high_risk_box_plot(cc_train_original,'Employed_days')

Is_high_risk
0   7.58
1   5.27
Name: Employed_days, dtype: float64

- Here we can see that employed applicants have typically been working for 5 to 7 years (median 5.30, mean 7.32)

- There are also many outliers who have been working for more than 20 years.

- We can also see that the histogram of employed days (in years) is positively skewed.

- High-risk applicants have a shorter employment length on average (5.27 years vs 7.58 years)

Car_Owner

feature_info(cc_train_original,'Car_Owner')

Description:
count     1238
unique       2
top          N
freq       750
Name: Car_Owner, dtype: object
****************************************
Data type:
object
****************************************
Value count:
           Count  Frequency (%)
Car_Owner
N            750          60.58
Y            488          39.42

bar_plot(cc_train_original,'Car_Owner')

pie_chart_plot(cc_train_original,'Car_Owner')

- We can see most applicants (60.58%) don't have a car

Propert_Owner

feature_info(cc_train_original,'Propert_Owner')

Description:
count     1238
unique       2
top          Y
freq       805
Name: Propert_Owner, dtype: object
****************************************
Data type:
object
****************************************
Value count:
               Count  Frequency (%)
Propert_Owner
Y                805          65.02
N                433          34.98

bar_plot(cc_train_original,'Propert_Owner')

pie_chart_plot(cc_train_original,'Propert_Owner')

- We can see most applicants own a property (65.02%)

Work_Phone

feature_info(cc_train_original,'Work_Phone')

Description:
count   1238.00
mean       0.22
std        0.41
min        0.00
25%        0.00
50%        0.00
75%        0.00
max        1.00
Name: Work_Phone, dtype: float64
****************************************
Data type:
int64
****************************************
Value count:
            Count  Frequency (%)
Work_Phone
0             970          78.35
1             268          21.65

bar_plot(cc_train_original,'Work_Phone')

pie_chart_plot(cc_train_original,'Work_Phone')

- Here we can see 78.35% of applicants don't have a work phone

- Here 0 means No and 1 means Yes

EMAIL_ID

feature_info(cc_train_original,'EMAIL_ID')

Description:
count   1238.00
mean       0.09
std        0.29
min        0.00
25%        0.00
50%        0.00
75%        0.00
max        1.00
Name: EMAIL_ID, dtype: float64
****************************************
Data type:
int64
****************************************
Value count:
          Count  Frequency (%)
EMAIL_ID
0          1123          90.71
1           115           9.29

bar_plot(cc_train_original,'EMAIL_ID')

pie_chart_plot(cc_train_original,'EMAIL_ID')

- Here we can see that more than 90% of applicants don't have an email ID; fewer than 10% do.

- Here 0 means No and 1 means Yes

Mobile_phone

feature_info(cc_train_original,'Mobile_phone')

Description:
count   1238.00
mean       1.00
std        0.00
min        1.00
25%        1.00
50%        1.00
75%        1.00
max        1.00
Name: Mobile_phone, dtype: float64
****************************************
Data type:
int64
****************************************
Value count:
              Count  Frequency (%)
Mobile_phone
1              1238         100.00

bar_plot(cc_train_original,'Mobile_phone')

pie_chart_plot(cc_train_original,'Mobile_phone')

- Here we can see all applicants have a mobile phone, so this feature is constant

Phone

feature_info(cc_train_original,'Phone')

Description:
count    1238.00
mean        0.31
std         0.46
min         0.00
25%         0.00
50%         0.00
75%         1.00
max         1.00
Name: Phone, dtype: float64
****************************************
Data type:
int64
****************************************
Value count:
       Count  Frequency (%)
Phone
0        859          69.39
1        379          30.61

bar_plot(cc_train_original,'Phone')

pie_chart_plot(cc_train_original,'Phone')

- Here we can see that 69.39% of applicants do not have a phone and 30.61% do.

- Here 0 means No and 1 means Yes.

Is_high_risk (Target variable)

feature_info(cc_train_original,'Is_high_risk')

Description:
count    1238.00
mean        0.12
std         0.32
min         0.00
25%         0.00
50%         0.00
75%         0.00
max         1.00
Name: Is_high_risk, dtype: float64
****************************************
Data type:
int64
****************************************
Value count:
              Count  Frequency (%)
Is_high_risk
0              1093          88.29
1               145          11.71

bar_plot(cc_train_original,'Is_high_risk')

pie_chart_plot(cc_train_original,'Is_high_risk')

- Here we can see that 88.29% of applicants are not high risk (good applicants, whose credit card will be approved) and 11.71% are high risk (bad applicants, whose credit card will not be approved).

- Here we can see that the data is imbalanced and needs to be balanced using SMOTE before training our model.

- Here 0 means No and 1 means Yes.
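To make the imbalance concrete, the counts reported above can be turned into class shares and a majority-to-minority ratio. This is a minimal sketch in plain Python; the helper name `class_balance` is hypothetical and not part of the original notebook.

```python
def class_balance(counts):
    """Return each class's share (in %) and the majority/minority ratio."""
    total = sum(counts.values())
    shares = {label: round(100 * n / total, 2) for label, n in counts.items()}
    ratio = max(counts.values()) / min(counts.values())
    return shares, ratio

# Counts taken from the Is_high_risk value counts above
shares, ratio = class_balance({0: 1093, 1: 145})
print(shares)
print(f"{ratio:.2f} low-risk applicants per high-risk applicant")
```

A ratio this far from 1 is the reason a resampling step such as SMOTE is planned before training.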

3.4. Bivariate analysis

Numerical vs Numerical Features

Scatter plot

sns.pairplot(cc_train_original[cc_train_original['Employed_days'] < 0].drop(['Ind_ID','Mobile_phone','Work_Phone','Phone','EMAIL_ID','Is_high_risk'], axis = 1),corner = True)

<seaborn.axisgrid.PairGrid at 0x16a045aeb40>

- Here, we observe a positive linear correlation between the number of Family Members and the count of Children. This implies that as someone has more children, the Family Member count also increases. However, this correlation introduces multicollinearity, a problem that arises when two features are highly correlated and that makes them unsuitable for training our model together. Therefore, we need to drop one of them.

- Additionally, we find a similar pattern between Employed Days and Age. The longer the period of employment, the older someone tends to be.
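The multicollinearity point can be illustrated with a small sketch. The data below is synthetic (an assumption for illustration, not the real dataset): family size is built as children plus one or two adults, so the two columns carry largely the same information.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in data: family size = children + 1 or 2 adults
children = rng.integers(0, 4, size=500)
adults = rng.integers(1, 3, size=500)
family_members = children + adults

# Pearson correlation between the two features
r = np.corrcoef(children, family_members)[0, 1]
print(f"Pearson r between children and family size: {r:.2f}")
```

When one feature is almost a linear function of another like this, keeping both adds little information and can destabilize linear models such as Logistic Regression.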

Family_Members vs CHILDREN (numerical vs numerical)

sns.regplot(x = 'CHILDREN',y = 'Family_Members', data = cc_train_original, line_kws = {'color':'green'})
plt.show()

- Here, we observe that the more children a person has, the larger the family size.

Employed days vs Age (numerical vs numerical)

# Calculate the absolute values of age and employed days, converted to years
y_age = np.abs(cc_train_original['Age']) / 365.25
x_employed_days = np.abs(cc_train_original[cc_train_original['Employed_days'] < 0]['Employed_days']) / 365.25

# Create a scatter plot using sns.scatterplot
fig, ax = plt.subplots(figsize=(12, 8))
sns.scatterplot(data=cc_train_original, x=x_employed_days, y=y_age,alpha=0.50)

# Set custom tick values for better readability
plt.xticks(np.arange(0, x_employed_days.max(), 2.5))
plt.yticks(np.arange(20, y_age.max(), 5))

# Show the plot
plt.show()

- Here we observe a correlation between the age of applicants and their employed days in this scatter plot.

Heatmap

cc_train_modified = cc_train_original.copy()

# Drop the Mobile_phone and Ind_ID features
cc_train_modified.drop(['Mobile_phone', 'Ind_ID'], axis=1, inplace=True)

# Select numeric columns
numeric_df = cc_train_modified.select_dtypes(include=['number'])

# Calculate the correlation matrix
corr_matrix = numeric_df.corr()

# Create a mask for the upper triangle
mask = np.triu(np.ones_like(corr_matrix, dtype=bool))

# Set up the matplotlib figure
fig, ax = plt.subplots(figsize=(12, 10))

# Draw the heatmap with the mask and correct aspect ratio
sns.heatmap(corr_matrix, mask=mask, cmap='coolwarm', vmax=.3, center=0, annot=True,
            square=True, linewidths=.5, cbar_kws={"shrink": .5})

# Show the plot
plt.show()

- Here we observe a high correlation between Family Members and Children. Additionally:

- Age shows some positive correlation with Family Members and Children, indicating larger family sizes for older individuals.

- We can see that no feature is correlated with the target variable.

- There is a positive correlation between having a Phone and a Work_Phone.

- A negative correlation is evident between Employed_days and Age.

- Additionally, a positive correlation exists between Age and Work_Phone.
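Reading the strongest relationships off a heatmap by eye is error-prone, so it can help to list them directly. The sketch below is one way to do that; `top_correlated_pairs` is a hypothetical helper and the demo frame holds made-up values, not rows from the dataset.

```python
import numpy as np
import pandas as pd

def top_correlated_pairs(df, n=3):
    """Return the n feature pairs with the largest absolute Pearson correlation."""
    corr = df.select_dtypes(include="number").corr()
    # Keep only the strict upper triangle so each pair appears once
    upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    pairs = upper.stack().dropna()
    return pairs.sort_values(key=lambda s: s.abs(), ascending=False).head(n)

# Made-up demo values using column names from this project
demo = pd.DataFrame({
    "CHILDREN": [0, 1, 2, 0, 3, 1],
    "Family_Members": [2, 3, 4, 1, 5, 2],
    "Work_Phone": [0, 1, 0, 0, 1, 0],
})
result = top_correlated_pairs(demo, n=2)
print(result)
```

On the real data, the same call on `cc_train_modified` would list the pairs that the heatmap shows in its darkest cells.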

Numerical vs Categorical Features (ANOVA [Analysis of Variance])

Age vs categorical features

fig, axes = plt.subplots(4,2,figsize=(15,20),dpi=180)
fig.tight_layout(pad=5.0)
cat_features = ['GENDER','Car_Owner','Propert_Owner','Type_Income','EDUCATION','Marital_status','Housing_type','Type_Occupation']

# One box plot per categorical feature, filling the 4x2 grid row by row
for ax, cat_feature in zip(axes.flatten(), cat_features):
    sns.boxplot(ax=ax, x=cc_train_original[cat_feature], y=np.abs(cc_train_original['Age'])/365.25)
    ax.set_title(cat_feature + " vs age")
    plt.sca(ax)
    plt.xticks(rotation=45, ha='right')
    plt.ylabel('Age')

- Female applicants are older than male applicants.

- Individuals without a car tend to be older.

- Property owners tend to be older than those without property.

- Pensioners are older than those who are currently working (with some outliers where individuals retired at a young age).

- Widows tend to be much older, with some outliers in their 30s.

- Individuals living with parents are younger, with some outliers.

- Security staff members tend to be older, while those in information technology (IT) tend to be younger.
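The box plots compare the age distributions visually. As a sketch of how the ANOVA named in the heading could put a number on such a comparison, the example below runs a one-way ANOVA with `scipy.stats.f_oneway`. The three age samples are synthetic and their means and spreads are assumptions chosen for illustration, not estimates from the dataset.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)

# Synthetic ages in years for three income types (illustrative only)
working = rng.normal(loc=40, scale=8, size=200)
pensioner = rng.normal(loc=60, scale=5, size=80)
state_servant = rng.normal(loc=41, scale=8, size=60)

# One-way ANOVA: are the group means plausibly equal?
f_stat, p_value = stats.f_oneway(working, pensioner, state_servant)
print(f"F = {f_stat:.1f}, p = {p_value:.3g}")
if p_value < 0.05:
    print("Mean age differs between at least two income types")
```

On the real data, the groups would come from something like `cc_train_original.groupby('Type_Income')`, with Age first converted to years.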

Categorical vs Categorical Features (Chi-Square test)

- Null Hypothesis: The categories of the features have no effect on the target variable.

- Alternative Hypothesis: One or more feature categories have a significant effect on the target variable.

import scipy.stats as stats

def chi_square_test(feature):
    # Select rows with high risk
    high_risk_ft = cc_train_original[cc_train_original['Is_high_risk'] == 1][feature]
    high_risk_ft_ct = pd.crosstab(index=high_risk_ft, columns=['Count']).rename_axis(None, axis=1)
    # Drop the index feature name
    high_risk_ft_ct.index.name = None
    # Observed values
    obs = high_risk_ft_ct
    print('Observed values:\n')
    print(obs)
    print('\n')
    # Expected values (uniform across the categories)
    exp = pd.DataFrame([obs['Count'].sum() / len(obs)] * len(obs.index), columns=['Count'], index=obs.index)
    print('Expected values:\n')
    print(exp)
    print('\n')
    # Chi-square test
    chi_squared_stat = (((obs - exp) ** 2) / exp).sum().values[0]
    print('Chi-square:\n')
    print(chi_squared_stat)
    print('\n')
    # Critical value
    crit = stats.chi2.ppf(q=0.95, df=len(obs) - 1)
    print('Critical value:\n')
    print(crit)
    print('\n')
    # P-value
    p_value = 1 - stats.chi2.cdf(x=chi_squared_stat, df=len(obs) - 1)
    print('P-value:\n')
    print(p_value)
    print('\n')
    if chi_squared_stat >= crit:
        print('Reject the null hypothesis')
    elif chi_squared_stat <= crit:
        print('Fail to reject the null hypothesis')

cat_features = ['GENDER','Car_Owner','Propert_Owner','Type_Income','EDUCATION','Marital_status','Housing_type','Type_Occupation']

for feat in cat_features:
    print('\n\n**** {} ****\n'.format(feat))
    chi_square_test(feat)

**** GENDER ****

Observed values:
   Count
F     77
M     63

Expected values:
   Count
F  70.00
M  70.00

Chi-square:
1.4

Critical value:
3.841458820694124

P-value:
0.2367235706378572

Fail to reject the null hypothesis

**** Car_Owner ****

Observed values:
   Count
N     85
Y     60

Expected values:
   Count
N  72.50
Y  72.50

Chi-square:
4.310344827586207

Critical value:
3.841458820694124

P-value:
0.0378812823153869

Reject the null hypothesis

**** Propert_Owner ****

Observed values:
   Count
N     52
Y     93

Expected values:
   Count
N  72.50
Y  72.50

Chi-square:
11.593103448275862

Critical value:
3.841458820694124

P-value:
0.0006619684903875767

Reject the null hypothesis

**** Type_Income ****

Observed values:
                      Count
Commercial associate     42
Pensioner                34
State servant             6
Working                  63

Expected values:
                      Count
Commercial associate  36.25
Pensioner             36.25
State servant         36.25
Working               36.25

Chi-square:
46.03448275862069

Critical value:
7.814727903251179

P-value:
5.576543671281797e-10

Reject the null hypothesis

**** EDUCATION ****

Observed values:
                               Count
Higher education                  50
Incomplete higher                  4
Lower secondary                    5
Secondary / secondary special     86

Expected values:
                               Count
Higher education               36.25
Incomplete higher              36.25
Lower secondary                36.25
Secondary / secondary special  36.25

Chi-square:
129.12413793103448

Critical value:
7.814727903251179

P-value:
0.0

Reject the null hypothesis

**** Marital_status ****

Observed values:
                      Count
Civil marriage            4
Married                  99
Separated                11
Single / not married     24
Widow                     7

Expected values:
                      Count
Civil marriage        29.00
Married               29.00
Separated             29.00
Single / not married  29.00
Widow                 29.00

Chi-square:
219.24137931034483

Critical value:
9.487729036781154

P-value:
0.0

Reject the null hypothesis

**** Housing_type ****

Observed values:
                     Count
Co-op apartment          1
House / apartment      121
Municipal apartment     14
Office apartment         1
Rented apartment         3
With parents             5

Expected values:
                     Count
Co-op apartment      24.17
House / apartment    24.17
Municipal apartment  24.17
Office apartment     24.17
Rented apartment     24.17
With parents         24.17

Chi-square:
470.43448275862056

Critical value:
11.070497693516351

P-value:
0.0

Reject the null hypothesis

**** Type_Occupation ****

Observed values:
                       Count
Accountants                5
Cleaning staff             1
Cooking staff              4
Core staff                21
Drivers                    7
High skill tech staff      5
IT staff                   2
Laborers                  22
Low-skill Laborers         1
Managers                  11
Medicine staff             3
Sales staff                8
Security staff             7
Waiters/barmen staff       1

Expected values:
                       Count
Accountants             7.00
Cleaning staff          7.00
Cooking staff           7.00
Core staff              7.00
Drivers                 7.00
High skill tech staff   7.00
IT staff                7.00
Laborers                7.00
Low-skill Laborers      7.00
Managers                7.00
Medicine staff          7.00
Sales staff             7.00
Security staff          7.00
Waiters/barmen staff    7.00

Chi-square:
86.28571428571428

Critical value:
22.362032494826934

P-value:
7.139844271364382e-13

Reject the null hypothesis
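The function above compares the high-risk counts in each category against an even split across categories, which is a goodness-of-fit test. A common alternative for asking whether a feature is associated with the target is a test of independence on the full feature-by-target table. The sketch below shows both on the Propert_Owner counts reported earlier (805 Y and 433 N overall, 93 Y and 52 N among high-risk applicants), using `scipy.stats.chisquare` and `scipy.stats.chi2_contingency`. It is an illustration under those counts, not part of the original notebook.

```python
import numpy as np
from scipy import stats

# Goodness of fit on the high-risk rows only (Propert_Owner: N=52, Y=93),
# with a uniform expectation, as in the output above
gof_stat, gof_p = stats.chisquare([52, 93])
print(f"goodness of fit: chi2 = {gof_stat:.3f}, p = {gof_p:.5f}")

# Test of independence on the full feature-by-target table.
# Rows: Propert_Owner N, Y. Columns: Is_high_risk 0, 1.
# Low-risk counts are derived as total minus high-risk.
table = np.array([[433 - 52, 52],
                  [805 - 93, 93]])
chi2, p, dof, expected = stats.chi2_contingency(table)
print(f"independence: chi2 = {chi2:.3f}, p = {p:.3f}, dof = {dof}")
```

The two tests answer different questions and can lead to different conclusions: a uniform expectation also reflects how common each category is in the whole sample, while the independence test compares the high-risk rate across categories.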

Summary of EDA

Gender Distribution

- Two unique classes, Female (F) and Male (M), with 778 and 454 applicants, respectively.

- 63.14% are female, and 36.85% are male.

Marital Status

- Five unique classes, with most applicants (68.50%) being married.

- Widows have a higher risk compared to separated individuals.

Family Members

- Numerical feature with a median of 2 family members.

- Most applicants have 2 family members (51.85%).

Children

- Most applicants do not have children.

- Three outliers with 5, 6, and 15 children.

Housing Type

- 89.42% of applicants live in a house/apartment.

Annual Income

- Average income is 194,770.84, with outliers.

- Most people make 172,125, ignoring outliers.

- Positively skewed distribution.

Type Occupation

- Laborers (25.06%) and Core staff (16.83%) are the most common occupations.

- 32.31% missing data.

Type Income

- Most applicants are working (51.21%), followed by commercial associates (23.59%).

Education

- Most applicants completed Secondary/Secondary Special (66.24%).

Employed Days

- Most applicants have been working for 5 to 7 years on average.

- Outliers with employment durations over 20 years.

Car Ownership and Property Ownership

- Most applicants do not have a car (70.36%).

- Most applicants own property (65.02%).

Phone and Work Phone

- 78.35% of applicants do not have a work phone.

- More than 90% do not have an email ID.

- All applicants have a mobile phone.

- 69.39% do not have a phone, and 30.61% have a phone.

Is High Risk (Target Variable)

- 88.29% have no risk, and 11.71% have risk, indicating imbalanced data.

- The imbalance needs to be addressed using SMOTE before model training.

Age and Is High Risk

- There is no significant difference in age between high-risk and low-risk applicants.

- The mean age for both groups is around 43 years, and there is no correlation between age and risk factors.

Correlations

- Positive linear correlation between Family Members and Children, introducing multicollinearity.

- Similar pattern observed between Employed Days and Age, indicating longer employment correlates with older age.

- No features are strongly correlated with the target variable.

- Positive correlation between having a Phone and a Work_Phone.

- Negative correlation between Employed_days and Age.

- Positive correlation between Age and Work_Phone.

Demographic Observations

- Female applicants are older than male applicants.

- Non-car owners tend to be older.

- Property owners tend to be older.

- Pensioners are older than working individuals, with some outliers.

- Widows tend to be older.

- Individuals living with parents are younger, with some outliers.

- Security staff tend to be older, while IT staff tend to be younger.

4.1. Here is a list of all the transformations that need to be applied to each feature

Ind_ID

GENDER

- One-hot encoding

- Impute missing values

Car_Owner

- Change it to numerical

- One-hot encoding

Propert_Owner

- Change it to numerical

- One-hot encoding

CHILDREN

Annual_income

- Remove outliers

- Fix skewness

- Min-Max scaling

- Impute missing values

Type_Income

EDUCATION

Marital_status

Housing_type

Age

- Min-Max scaling

- Fix skewness

- Take the absolute value and divide by 365.25

- Impute missing values

Employed_days

- Min-Max scaling

- Remove outliers

- Take the absolute value and divide by 365.25

Mobile_phone

Work_Phone

Phone

EMAIL_ID

Type_Occupation

- One-hot encoding

- Impute missing values

Family_Members

Is_high_risk

- Balance the imbalanced data with SMOTE
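Two of the listed transformations, converting day counts to years and Min-Max scaling, can be sketched in a few lines of pandas. The values below are toy numbers, and the sketch assumes (as the absolute-value step suggests) that Age and Employed_days are stored as negative day counts.

```python
import pandas as pd

# Toy values standing in for the raw columns (assumed to be negative day counts)
df = pd.DataFrame({"Age": [-12000, -15000, -20000],
                   "Employed_days": [-500, -3000, -7000]})

# Absolute value, then convert days to years
years = df.abs() / 365.25

# Min-Max scaling to the [0, 1] range, column by column
scaled = (years - years.min()) / (years.max() - years.min())

print(years.round(2))
print(scaled.round(3))
```

In a real pipeline the minimum and maximum would be learned from the training split only and then reused on the test split.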

4.2. Data Cleaning

4.3.1. Impute Missing Values

from sklearn.base import BaseEstimator, TransformerMixin

class Handle_Missing_Values(BaseEstimator, TransformerMixin):
    def __init__(self, categorical_cols=['GENDER', 'Type_Occupation'], numerical_cols=['Annual_income', 'Age']):
        self.categorical_cols = categorical_cols
        self.numerical_cols = numerical_cols

    def fit(self, df, y=None):
        # Store the mode for categorical columns and the mean for numerical columns
        self.imputation_values = {}
        for col in self.categorical_cols:
            self.imputation_values[col] = df[col].mode()[0]
        for col in self.numerical_cols:
            self.imputation_values[col] = df[col].mean()
        return self

    def transform(self, df):
        #cc_train_original.isnull().sum()
        # Replace missing values with the stored imputation values.
        # Assigning the result back avoids chained in-place fillna on a column.
        for col in self.categorical_cols:
            df[col] = df[col].fillna(self.imputation_values[col])
        for col in self.numerical_cols:
            df[col] = df[col].fillna(self.imputation_values[col])
        return df
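The reason for splitting imputation into a fit step and a transform step is that the fill values should be learned from the training data and then reused unchanged on new data. A minimal pandas-only sketch of that idea follows; the two small frames are made-up values, not rows from the dataset.

```python
import numpy as np
import pandas as pd

train = pd.DataFrame({"GENDER": ["F", "M", np.nan, "F"],
                      "Annual_income": [100000.0, np.nan, 200000.0, 150000.0]})
test = pd.DataFrame({"GENDER": [np.nan, "M"],
                     "Annual_income": [np.nan, 90000.0]})

# "fit": learn the fill values from the training data only
fill_values = {"GENDER": train["GENDER"].mode()[0],
               "Annual_income": train["Annual_income"].mean()}

# "transform": reuse the same values on both splits
train_filled = train.fillna(fill_values)
test_filled = test.fillna(fill_values)

print(fill_values)
print(test_filled)
```

The test rows are filled with the training mode and mean, so no information from the test split leaks into preprocessing.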

4.3.2. Outlier Remover Function

- Here we are creating a class to handle outliers.

- This class removes rows whose values lie more than 3 interquartile ranges (IQR) below the first quartile or above the third quartile.

class OutlierRemover(BaseEstimator, TransformerMixin):
    def __init__(self,feat_with_outliers = ['Family_Members','Annual_income', 'Employed_days']):
        self.feat_with_outliers = feat_with_outliers

    def fit(self,df):
        return self

    def transform(self,df):
        if (set(self.feat_with_outliers).issubset(df.columns)):
            # 25% quantile
            Q1 = df[self.feat_with_outliers].quantile(.25)
            # 75% quantile
            Q3 = df[self.feat_with_outliers].quantile(.75)
            IQR = Q3 - Q1
            # keep the data within 3 IQR
            df = df[~((df[self.feat_with_outliers] < (Q1 - 3 * IQR)) |(df[self.feat_with_outliers] > (Q3 + 3 * IQR))).any(axis=1)]
            return df
        else:
            print("Warning: One or more specified features for outlier removal are not present in the dataframe. No outliers removed.")
            return df
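To see what the 3 x IQR rule does on a single column, here is a small worked sketch. The six values are toy numbers chosen so the arithmetic is easy to follow.

```python
import pandas as pd

income = pd.Series([1, 2, 3, 4, 5, 100], name="Annual_income")

# Quartiles and interquartile range
q1, q3 = income.quantile(0.25), income.quantile(0.75)
iqr = q3 - q1

# Anything outside [Q1 - 3*IQR, Q3 + 3*IQR] is treated as an outlier
lower, upper = q1 - 3 * iqr, q3 + 3 * iqr
kept = income[(income >= lower) & (income <= upper)]

print(f"Q1 = {q1}, Q3 = {q3}, IQR = {iqr}")
print(f"bounds: [{lower}, {upper}]")
print(kept.tolist())
```

Here Q1 is 2.25 and Q3 is 4.75, so the bounds are -5.25 and 12.25 and only the value 100 is dropped. A multiplier of 3 is more lenient than the common 1.5 and removes only extreme points.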