This isn't news to anyone with a good understanding of sklearn models or model evaluation metrics, but I recently ran into a situation I had a hard time understanding until I dug deeper; I document my learnings here.

I was evaluating a logistic regression classifier using sklearn's built-in accuracy and AUC score functions, and was observing a relatively high AUC score with a low corresponding accuracy measure. So I went into the weeds of Google search to understand what exactly was going on...

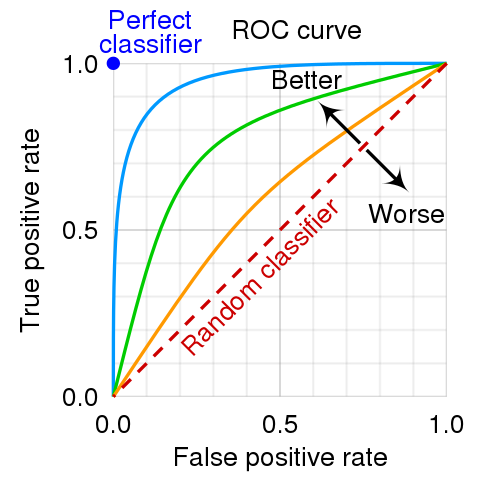

A quick refresher on AUC for those who aren't familiar or are rusty. One approach for evaluating a binary classifier that outputs a probability (or a related quantity, e.g., a logit) is to consider the relationship between true positives and false positives. Classification is performed by thresholding the output probability values, such that the input sample x is labeled as 1 if the output probability is ≥ some threshold p, and 0 otherwise. Each chosen threshold p has an associated true positive rate (TPR) and false positive rate (FPR). The receiver operating characteristic (ROC) curve is a visualization of the set of all possible TPR vs. FPR values associated with all possible thresholds.
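To make the thresholding concrete, here is a minimal pure-Python sketch of how one TPR/FPR pair falls out of each threshold. The function names and the toy labels and scores are made up for illustration; in practice sklearn's `roc_curve` does this job.

```python
def tpr_fpr(y_true, scores, threshold):
    """Return (TPR, FPR) when samples with score >= threshold are labeled 1."""
    tp = fp = fn = tn = 0
    for label, score in zip(y_true, scores):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1 and label == 0:
            fp += 1
        elif predicted == 0 and label == 1:
            fn += 1
        else:
            tn += 1
    return tp / (tp + fn), fp / (fp + tn)


def roc_points(y_true, scores):
    """One (FPR, TPR) point per distinct threshold, strictest threshold first."""
    # A threshold above every score labels nothing as 1: the (0, 0) corner.
    thresholds = [float("inf")] + sorted(set(scores), reverse=True)
    points = []
    for t in thresholds:
        tpr, fpr = tpr_fpr(y_true, scores, t)
        points.append((fpr, tpr))
    return points


# Toy data (illustrative only): 3 negatives and 3 positives.
y_true = [0, 0, 1, 0, 1, 1]
scores = [0.1, 0.3, 0.35, 0.6, 0.8, 0.9]

for fpr, tpr in roc_points(y_true, scores):
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Lowering the threshold can only move a point up or to the right, which is why the curve runs from (0, 0) to (1, 1).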

Each point along the ROC curve corresponds to a particular threshold, and each classifier has its own associated curve. The closer the curve is to a unit step function, the better. A random classifier corresponds to the diagonal line; for one that outputs uniformly random scores, a threshold of 0.5 corresponds to (0.5, 0.5) on the curve. AUC, or area under the curve, is a single number for evaluating how close to ideal the curve is, with perfect classifiers having an AUC of 1 and chance classifiers having a score of 0.5.
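One way to build intuition for that single number: AUC equals the fraction of (positive, negative) pairs in which the positive sample gets the higher score, with ties counted as half. The sketch below uses that pairwise view rather than integrating the curve. The helper name `auc_pairwise` and the toy data are my own; sklearn's `roc_auc_score` is what you would normally call.

```python
def auc_pairwise(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    A tie between a positive and a negative score counts as half a win.
    """
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Perfect separation: every positive outranks every negative.
print(auc_pairwise([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9]))

# An uninformative classifier that gives every sample the same score.
print(auc_pairwise([0, 0, 1, 1], [0.5, 0.5, 0.5, 0.5]))

# Toy data with one mis-ranked pair (illustrative only).
print(auc_pairwise([0, 0, 1, 0, 1, 1], [0.1, 0.3, 0.35, 0.6, 0.8, 0.9]))
```

Notice that only the ordering of the scores matters here, never a specific threshold.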

I thought the following was a really good reference with helpful visualizations.

So back to my confusion. When evaluating my classifier I was seeing that my computed AUC scores were higher than my accuracy scores. Intuitively, a high AUC would imply that the model should have the capacity to achieve a high TPR without sacrificing the FPR. However, I wasn't seeing that based on the accuracy measure.

It turns out that, by default, sklearn's logistic regression uses a threshold of 0.5 to classify samples when the predict() function is called directly, meaning samples with predicted probabilities less than 0.5 are labelled as 0 while samples with predicted probabilities greater than 0.5 are labelled as 1. However, for unbalanced datasets, using 0.5 isn't necessarily optimal with respect to accuracy; you'd want the probability threshold to follow the true empirical distribution of the data. Thus, for unbalanced datasets it may be possible that the AUC score, which is computed using the predicted probabilities directly, is higher than the accuracy score based on the output of the classifier's predict() function. In such a case it may be necessary to optimize the decision threshold on the training data based on some criterion (e.g., F-measure, accuracy, etc.), rather than use the default 0.5 threshold. This was what I needed to do, and once I optimized the threshold the resulting accuracy score made a lot more sense when compared with the AUC score.
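Here is a small sketch of that effect on made-up numbers (not my actual data): an unbalanced toy set where the scores rank the samples fairly well but most negatives sit above 0.5. The helper names are hypothetical, and the probabilities stand in for what `predict_proba` would return for the positive class. I pick the threshold by accuracy here; any other criterion would slot into `best_threshold` the same way.

```python
def accuracy_at(y_true, probs, threshold):
    """Accuracy when samples with probability >= threshold are labeled 1."""
    correct = sum(
        1 for y, p in zip(y_true, probs) if (1 if p >= threshold else 0) == y
    )
    return correct / len(y_true)


def pairwise_auc(y_true, probs):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [p for y, p in zip(y_true, probs) if y == 1]
    neg = [p for y, p in zip(y_true, probs) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def best_threshold(y_true, probs):
    """Try each distinct probability as a threshold; keep the most accurate."""
    candidates = sorted(set(probs))
    return max(candidates, key=lambda t: accuracy_at(y_true, probs, t))


# Toy unbalanced data (illustrative only): 8 negatives, 2 positives.
# In a real project the threshold should be chosen on training or
# validation data, then applied unchanged to the test set.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
probs = [0.40, 0.45, 0.55, 0.60, 0.62, 0.65, 0.70, 0.72, 0.68, 0.90]

print("AUC:", pairwise_auc(y_true, probs))
print("accuracy at 0.5:", accuracy_at(y_true, probs, 0.5))
t = best_threshold(y_true, probs)
print("tuned threshold:", t, "accuracy:", accuracy_at(y_true, probs, t))
```

On this toy data the AUC is 0.875 while accuracy at the default 0.5 threshold is only 0.4; moving the threshold to 0.9 brings accuracy up to 0.9.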

A well-calibrated model is one in which the predicted probability for a class reflects the true empirical frequency of that class in the data. An important point to keep in mind is that it's possible to have high AUC scores with a poorly calibrated model (as far as I understand it). Depending on the use case it may not be as important to have a well-calibrated model, since the final classification accuracy matters more than learning the data distribution. In that case, the output of the classifier shouldn't be interpreted as true probabilities.

A few useful resources on the topic: [1], [2], and [3].
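A quick way to convince yourself of this: AUC depends only on how the samples are ranked, so any strictly increasing distortion of the probabilities leaves it untouched while wrecking calibration. The sketch below cubes the scores as an arbitrary example of such a distortion, and compares the mean predicted probability with the observed positive rate, which is only a very coarse calibration check (sklearn's `calibration_curve` bins the predictions for a finer look). All names and numbers are illustrative.

```python
def pairwise_auc(y_true, probs):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [p for y, p in zip(y_true, probs) if y == 1]
    neg = [p for y, p in zip(y_true, probs) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mean(values):
    return sum(values) / len(values)


# Toy data (illustrative only): half the samples are positive.
y_true = [0, 0, 0, 1, 0, 1, 1, 1]
probs = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]

# Cubing is strictly increasing on [0, 1], so the ranking is preserved,
# but every probability is pushed toward 0.
distorted = [p ** 3 for p in probs]

positive_rate = mean(y_true)
print("observed positive rate:", positive_rate)
print("original : AUC", pairwise_auc(y_true, probs), "mean prob", mean(probs))
print("distorted: AUC", pairwise_auc(y_true, distorted), "mean prob", mean(distorted))
```

Both versions get the same AUC, but the distorted scores predict far fewer positives on average than actually occur, so reading them as probabilities would be misleading.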

Lately in my own work I've been thinking a lot about how best to choose the performance metrics that are most appropriate for evaluating the properties of my classifier in a way that seems fair. I think this has a lot to do with 1) the data and how it's labelled, and 2) the final use case/objective of the classifier. For example, in my case I care as much about identifying the positives as the negatives. Accuracy and confusion matrices are both good metrics for quantifying this. AUC is also generally a good choice, but as a metric it's biased towards identification of the positive case (we're using TPR and FPR in the ROC plots). Yes, these metrics are implicitly related to the true negatives, but they provide a slightly different perspective/lens, and that's important (at least for me) to keep in mind when reading my tensorboard outputs.
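As a rough illustration of why I like looking at the confusion matrix next to accuracy, here is a minimal sketch that tallies the four cells and reports the rate for each class separately. The function names and toy predictions are made up; sklearn's `confusion_matrix` gives the same counts.

```python
def confusion_counts(y_true, y_pred):
    """Tally true/false positives and negatives for binary 0/1 labels."""
    counts = {"tn": 0, "fp": 0, "fn": 0, "tp": 0}
    for y, p in zip(y_true, y_pred):
        if y == 1 and p == 1:
            counts["tp"] += 1
        elif y == 1 and p == 0:
            counts["fn"] += 1
        elif y == 0 and p == 1:
            counts["fp"] += 1
        else:
            counts["tn"] += 1
    return counts


def per_class_rates(counts):
    """Accuracy plus the hit rate on each class taken separately."""
    total = sum(counts.values())
    return {
        "accuracy": (counts["tp"] + counts["tn"]) / total,
        "tpr": counts["tp"] / (counts["tp"] + counts["fn"]),  # positives found
        "tnr": counts["tn"] / (counts["tn"] + counts["fp"]),  # negatives found
    }


# Toy unbalanced predictions (illustrative only): one of two positives is missed.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]

counts = confusion_counts(y_true, y_pred)
print(counts)
print(per_class_rates(counts))
```

Here accuracy looks healthy at 0.9 even though only half of the positives were identified, which is exactly the kind of thing a single number can hide.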

As a result, I do think it's really well worth the time to think deeply about the proper evaluation approach/metrics and comparison baselines before embarking on any new machine learning project (including building the next greatest DL framework). It forces one to really understand their data and the final use case of the developed model. For example, beyond TPRs and FPRs it may also be important to capture the number of false negatives in the evaluation metric. In that case, one could consider other metrics, such as precision-recall curves and F-measure.

As always, feel free to give a shout if I made a mistake anywhere or if there's any way the post can be improved. Thanks for taking the time to read!

Some of my sources
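For reference, precision, recall and the F-measure (here F1, the harmonic mean of the two) can be sketched from the same counts. The function name and toy labels are illustrative, and returning 0.0 when a denominator is empty is my own convention for the sketch; sklearn provides `precision_recall_fscore_support` and `f1_score` for real use.

```python
def precision_recall_f1(y_true, y_pred):
    """Precision, recall and F1 for the positive class of binary 0/1 labels."""
    tp = sum(1 for y, p in zip(y_true, y_pred) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(y_true, y_pred) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(y_true, y_pred) if y == 1 and p == 0)

    # Convention for this sketch: an undefined ratio is reported as 0.0.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Toy predictions (illustrative only): one false positive, one false negative.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```

Recall is the same quantity as TPR, while precision asks how many of the predicted positives were real, so the pair looks at the errors from a different angle than TPR and FPR do.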

https://machinelearningmastery.com/threshold-moving-for-imbalanced-classification/