The dataset I chose is the City of Chicago’s “Traffic Crashes - Crashes” dataset, which contains details about each traffic crash on city streets within Chicago from 2015 to the present day. It’s a well-maintained dataset. The data come from the Chicago Police Department’s electronic crash reporting system, are continuously amended, and are recorded in the SR1050 format specified by the Illinois Department of Transportation. There are roughly 835 thousand traffic records, each with 48 features such as the posted speed limit, weather conditions, and type of first collision. With this abundance of data, it’s reasonable to say that this dataset provides a foundation on which a machine learning model could predict whether a car crash is severe.

```python
# Notebook (Colab) shell commands to install the libraries
!pip install scikit-learn
!pip install matplotlib

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import sklearn
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score, classification_report, ConfusionMatrixDisplay
from sklearn import datasets
from sklearn.linear_model import LogisticRegression

url = "/content/Traffic_Crashes_-_Crashes_20240511.csv"
df = pd.read_csv(url)
df.head()
```

df.head() displays the first five rows of the DataFrame.

I plan to use logistic regression as my machine-learning algorithm. Why? Logistic regression is a supervised learning model commonly used for binary classification problems, although it also works for problems with more than two classes. More specifically, it predicts the probability that a data point belongs to each class. Because logistic regression outputs the probability (from 0 to 1) that a data point belongs to a class, it’s well suited to predicting whether a traffic accident is severe.
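To make the probability claim concrete, here is a minimal sketch on synthetic data (an assumption; this is not the crash dataset). It checks that scikit-learn’s `predict_proba` for a binary `LogisticRegression` equals the sigmoid 1 / (1 + e^(-(w·x + b))) of the learned linear score, and that `predict` labels a row positive exactly when that score is above 0 (probability above 0.5).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic binary data standing in for crash features (hypothetical, not the Chicago data)
X, y = make_classification(n_samples=500, n_features=4, random_state=0)

model = LogisticRegression().fit(X, y)

def sigmoid(z):
    """Map any real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

# Linear score w·x + b for every row, then squash it through the sigmoid
scores = X @ model.coef_.ravel() + model.intercept_[0]
manual_proba = sigmoid(scores)

# Column 1 of predict_proba is the probability of the positive class (label 1)
sklearn_proba = model.predict_proba(X)[:, 1]
print(np.allclose(manual_proba, sklearn_proba))

# predict() returns 1 when the score is positive, i.e. when the probability exceeds 0.5
print(np.array_equal(model.predict(X), (scores > 0).astype(int)))
```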

Before building the model, I had to prepare the data in the City of Chicago’s “Traffic Crashes - Crashes” dataset by cleaning it and dropping certain rows and columns. This preparation ensures that the data used to train the model is consistent and reliable. The process takes several steps:

- Dropped duplicates: I removed duplicate entries in the dataset to prevent bias or skewed results.

```python
df.drop_duplicates(inplace=True)
```

- Checked for null or missing values: Checking for missing or null values allows for a better assessment of what to do next, whether you still have to drop more rows or whether you can move on to feature engineering.

```python
df.info()

# Count missing values per column (df.isnull() is an alias of df.isna())
df.isna().sum()
```

- Checked the unique values in categorical features: I checked which unique values were present in my categorical features so that I could plan which features to modify or drop.

- Dropped unnecessary features that either had many missing or null values or could not be used by a machine-learning model to predict whether a car crash was severe: Dropping these features ensures that I don’t shrink the dataset too much when I drop all rows with null or missing values.

```python
df.drop(["LONGITUDE", "LATITUDE", "LOCATION", "NOT_RIGHT_OF_WAY_I", "CRASH_DATE_EST_I",
         "DOORING_I", "WORK_ZONE_I", "WORK_ZONE_TYPE", "WORKERS_PRESENT_I",
         "INTERSECTION_RELATED_I", "LANE_CNT", "PHOTOS_TAKEN_I", "STATEMENTS_TAKEN_I",
         "HIT_AND_RUN_I"], axis=1, inplace=True)
```

- Dropped all rows with null values: I removed every row containing a null value so I could later apply feature engineering smoothly and avoid bias or skewed results.

```python
df = df.dropna(axis=0, how="any", subset=None)

df.info()
```

- Employed feature engineering: Feature engineering is the process of using one’s domain knowledge to extract and transform data into features a model can effectively use. In my case, I created the features SEVERITY (whether a car accident left someone injured or dead), CLEAR_WEATHER (whether the weather was clear), WET_ROAD (whether the road was wet or icy), MOTOR_VEHICLE_CRASH (split into several true/false features based on the type of first collision), and MAX_DAMAGED (whether damage costs were over $1,500).

```python
# SEVERITY: True unless the worst injury was "no indication" or "reported, not evident"
def severityRating(worstInjury):
    if worstInjury == "NO INDICATION OF INJURY" or worstInjury == "REPORTED, NOT EVIDENT":
        return False
    else:
        return True

df['SEVERITY'] = df["MOST_SEVERE_INJURY"].apply(severityRating)

# WET_ROAD: True when the road surface description mentions WET or ICE
def isRoadWet(description):
    if "WET" in description or "ICE" in description:
        return True
    else:
        return False

df["WET_ROAD"] = df["ROADWAY_SURFACE_COND"].apply(isRoadWet)

df["CLEAR_WEATHER"] = df['WEATHER_CONDITION'] == "CLEAR"

# CRASH_ALIGNMENT: True when the road alignment is straight
def isStraightCrash(alignment):
    return "STRAIGHT" in alignment

df["CRASH_ALIGNMENT"] = df["ALIGNMENT"].apply(isStraightCrash)

# Integer code for each distinct first crash type
listOfFirst_Crash_Type = list(df["FIRST_CRASH_TYPE"].unique())

def detectCrashType(description):
    return listOfFirst_Crash_Type.index(description)

df["MOTOR_VEHICLE_CRASH"] = df['FIRST_CRASH_TYPE'].apply(detectCrashType)

df["MAX_DAMAGED"] = df['DAMAGE'] == "OVER $1,500"

# One-hot encode FIRST_CRASH_TYPE; pd.get_dummies names the new columns
# FIRST_CRASH_TYPE_<value>, which the combined features below rely on
df = pd.get_dummies(df, columns=["FIRST_CRASH_TYPE"], dtype=bool)

# Combine related crash types into broader true/false features
df["PEDESTRIAN_CYCLIST_CRASH"] = (df["FIRST_CRASH_TYPE_PEDESTRIAN"] | df["FIRST_CRASH_TYPE_PEDALCYCLIST"])
df["SIDESWIPE_CRASH"] = (df["FIRST_CRASH_TYPE_SIDESWIPE OPPOSITE DIRECTION"] | df["FIRST_CRASH_TYPE_SIDESWIPE SAME DIRECTION"])
df["STATIONARY_CRASH"] = (df["FIRST_CRASH_TYPE_FIXED OBJECT"] | df['FIRST_CRASH_TYPE_PARKED MOTOR VEHICLE'])
df["OTHER_CRASH"] = (df["FIRST_CRASH_TYPE_TRAIN"] |
                     df["FIRST_CRASH_TYPE_OTHER OBJECT"] |
                     df["FIRST_CRASH_TYPE_OTHER NONCOLLISION"] |
                     df["FIRST_CRASH_TYPE_ANIMAL"])

df.rename(columns={"FIRST_CRASH_TYPE_ANGLE": "ANGLE_CRASH",
                   "FIRST_CRASH_TYPE_TURNING": "TURNING_CRASH",
                   "FIRST_CRASH_TYPE_HEAD ON": "HEAD_ON_CRASH"}, inplace=True)
```
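The `apply`-based helpers above can also be written with vectorized pandas operations, which are usually faster on a table of roughly 800 thousand rows. Below is a minimal sketch on a tiny hand-made DataFrame; the rows are invented for illustration, while the column names and category strings mirror the ones used above. It includes the `pd.get_dummies` step that produces `FIRST_CRASH_TYPE_*` columns.

```python
import pandas as pd

# Tiny hypothetical sample; column names follow the crash dataset used above
df = pd.DataFrame({
    "MOST_SEVERE_INJURY": ["NO INDICATION OF INJURY", "FATAL",
                           "REPORTED, NOT EVIDENT", "INCAPACITATING INJURY"],
    "ROADWAY_SURFACE_COND": ["DRY", "WET", "ICE", "SNOW OR SLUSH"],
    "WEATHER_CONDITION": ["CLEAR", "RAIN", "CLEAR", "SNOW"],
    "DAMAGE": ["$500 OR LESS", "OVER $1,500", "$501 - $1,500", "OVER $1,500"],
    "FIRST_CRASH_TYPE": ["ANGLE", "PEDESTRIAN", "PEDALCYCLIST", "FIXED OBJECT"],
})

# SEVERITY: True unless the worst injury is in the non-severe list
non_severe = ["NO INDICATION OF INJURY", "REPORTED, NOT EVIDENT"]
df["SEVERITY"] = ~df["MOST_SEVERE_INJURY"].isin(non_severe)

# WET_ROAD: regex match on "WET" or "ICE" anywhere in the description
df["WET_ROAD"] = df["ROADWAY_SURFACE_COND"].str.contains("WET|ICE")

df["CLEAR_WEATHER"] = df["WEATHER_CONDITION"] == "CLEAR"
df["MAX_DAMAGED"] = df["DAMAGE"] == "OVER $1,500"

# One-hot encode; pandas names the columns FIRST_CRASH_TYPE_<value>
df = pd.get_dummies(df, columns=["FIRST_CRASH_TYPE"], dtype=bool)
df["PEDESTRIAN_CYCLIST_CRASH"] = (df["FIRST_CRASH_TYPE_PEDESTRIAN"]
                                  | df["FIRST_CRASH_TYPE_PEDALCYCLIST"])

print(df[["SEVERITY", "WET_ROAD", "CLEAR_WEATHER", "MAX_DAMAGED", "PEDESTRIAN_CYCLIST_CRASH"]])
```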

With the data cleaned, we also have to visualize it before the actual machine-learning process. More precisely, I focused on visualizing key features such as weather conditions, day of week, speed limit, and any feature I initially believed would affect the severity of a car crash, in hopes of better understanding how the model might perform depending on the features included. By visualizing the data, we can uncover patterns in the relationship between different features and whether a car accident is severe. With a better understanding of which features affect a car accident’s severity, I can single out features that don’t seem to have any effect before training the model. Dropping those features removes noise, which should help the model’s accuracy.

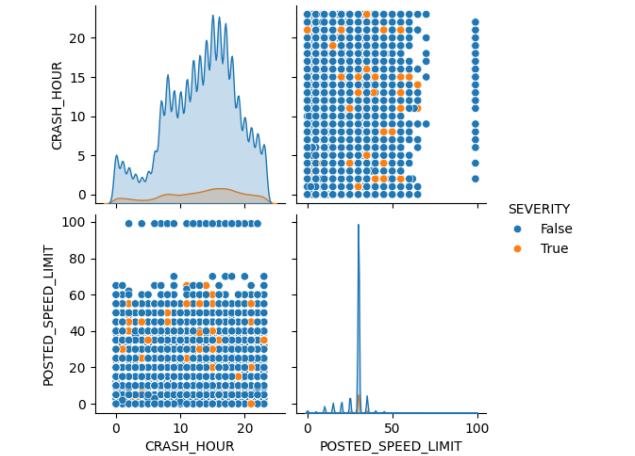
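A quick numeric companion to plots like the one above (a sketch, not the method used to make that figure) is to correlate each true/false feature with SEVERITY; for two binary variables, Pearson correlation is the phi coefficient. A feature whose correlation is near zero is a candidate to drop. The data and feature names below are synthetic placeholders.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 1_000

# Hypothetical boolean features: one related to the label, one pure noise
related = rng.random(n) < 0.3
noise = rng.random(n) < 0.5
# The label is much more likely to be True when `related` is True
severity = np.where(related, rng.random(n) < 0.6, rng.random(n) < 0.1)

df = pd.DataFrame({"RELATED_FEATURE": related, "NOISE_FEATURE": noise, "SEVERITY": severity})

# Correlation of each feature with the label (phi coefficient for two booleans)
corr = (df[["RELATED_FEATURE", "NOISE_FEATURE"]].astype(int)
        .corrwith(df["SEVERITY"].astype(int)))
print(corr.sort_values(ascending=False))
```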

In this case, I selected CRASH_HOUR, WET_ROAD, POSTED_SPEED_LIMIT, MOTOR_VEHICLE_CRASH, and MAX_DAMAGED because earlier visualizations and graphs indicated that they might affect my label, SEVERITY. Having narrowed down which features I wanted to use, I started building my model. But before building it, even though I had already visualized these features against my label, I wanted to dive deeper. So, I imported another graphing library, seaborn, and experimented with the help of its documentation and ChatGPT to generate several different graphs for my numerical and categorical features.

```python
features = df[["CRASH_HOUR", "WET_ROAD", "POSTED_SPEED_LIMIT", "MAX_DAMAGED", "ANGLE_CRASH",
               "STATIONARY_CRASH", "REAR_CRASH", "HEAD_ON_CRASH", "TURNING_CRASH",
               "SIDESWIPE_CRASH", "PEDESTRIAN_CYCLIST_CRASH"]]

labels = df['SEVERITY']
```

For my numerical features, while it was hard to see the relationship through just scatter plots and box plots, I found it easier to see patterns through histograms. For my categorical features, although I had already examined them through regular bar graphs, this time I used count plots (essentially the same), with the bars for severe and non-severe accidents placed side by side. This clear separation gave me a better idea of the ratio of severe to non-severe accidents for these different features. Through these graphs, I better understood the relationship between these features and my label, which lets me adjust and fine-tune the model later when necessary.

```python
import seaborn as sns

# Combine the selected features and the label into one DataFrame for plotting
data = pd.concat([features, labels], axis=1)

# Pairwise scatter plots for the numerical features, colored by SEVERITY
numerical_features = ['CRASH_HOUR', 'POSTED_SPEED_LIMIT']

sns.pairplot(data, hue='SEVERITY', vars=numerical_features)
plt.show()

# Box plots for numerical features
for feature in numerical_features:
    plt.figure(figsize=(10, 6))
    sns.boxplot(x='SEVERITY', y=feature, data=data)
    plt.title(f'Box Plot of {feature} vs SEVERITY')
    plt.show()

# Histograms for numerical features, stacked by SEVERITY
for feature in numerical_features:
    plt.figure(figsize=(10, 6))
    sns.histplot(data, x=feature, hue='SEVERITY', multiple='stack')
    plt.title(f'Histogram of {feature}')
    plt.show()
```
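The side-by-side count plots described above show raw counts; the within-group ratio they were used to judge can also be read off directly with `pd.crosstab(..., normalize="index")`. A minimal sketch, assuming a DataFrame shaped like `data` above (the rows here are invented):

```python
import pandas as pd

# Hypothetical rows: one boolean feature and the SEVERITY label
data = pd.DataFrame({
    "WET_ROAD": [True, True, True, True, False, False, False, False, False, False],
    "SEVERITY": [True, False, False, False, True, False, False, False, False, False],
})

# Raw counts: the bar heights a count plot with hue="SEVERITY" would draw
counts = pd.crosstab(data["WET_ROAD"], data["SEVERITY"])

# Share of severe vs. non-severe crashes within each WET_ROAD value (each row sums to 1)
rates = pd.crosstab(data["WET_ROAD"], data["SEVERITY"], normalize="index")

print(counts)
print(rates)
```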

After visualizing these relationships, I split my dataset into training and testing sets using scikit-learn’s train_test_split function. Splitting the dataset ensured that the model would not be trained and tested on the same data; the test data stayed new and unseen, so any overfitting would show up as poor performance on it. In detail, I reserved 80% of the dataset for training and the remaining 20% for testing the model.

```python
# 80% training, 20% testing
X_train, X_test, y_train, y_test = train_test_split(
    features, labels, test_size=0.2)
```
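Because SEVERITY is imbalanced, one possible refinement, not something used in the run above, is to pass `stratify=` so both splits keep the same class ratio, plus `random_state` for a reproducible split. A sketch on synthetic stand-in arrays with 10% positives:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-ins: 1,000 rows, exactly 100 positive labels (10%)
X = np.arange(1000).reshape(-1, 1)
y = np.array([1] * 100 + [0] * 900)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42)

# With stratify=y, both splits keep the 10% positive rate
print(len(X_train), len(X_test))
print(y_train.mean(), y_test.mean())
```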

The next step was to scale my data. In other words, by using different functions in the scikit-learn library, I can change the numerical distance between data points and the range of values across all my features so that the training data the model uses is all on the same scale. In this case, though, I was unsure whether to normalize or standardize my data with scikit-learn’s functions. Being indecisive and not knowing what would happen if I picked one scaling method over the other, I decided to implement both: the same model, trained two separate times, each time with a different scaling.

First, I implemented standardization with scikit-learn’s StandardScaler. Standardization rescales the data so that each feature has a mean of 0 and a standard deviation of 1.

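```python
from sklearn.preprocessing import StandardScaler

model = LogisticRegression()

# Fit the scaler on the training set only, then apply the same transform to the test set
std_scaler = StandardScaler()
X_train_std = std_scaler.fit_transform(X_train)
X_test_std = std_scaler.transform(X_test)
```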
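To make the definition concrete, the sketch below (toy numbers, assumed for illustration) checks that `StandardScaler` computes z = (x - μ) / σ per column, with μ and σ learned from the training set only; `transform` then reuses those training statistics on the test set.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Toy matrices (hypothetical values) with columns like CRASH_HOUR and POSTED_SPEED_LIMIT
X_train = np.array([[8.0, 30.0], [17.0, 30.0], [23.0, 45.0], [2.0, 25.0]])
X_test = np.array([[12.0, 35.0]])

scaler = StandardScaler()
X_train_std = scaler.fit_transform(X_train)
X_test_std = scaler.transform(X_test)

# Same result by hand; StandardScaler uses the population standard deviation (ddof=0)
mu = X_train.mean(axis=0)
sigma = X_train.std(axis=0)
print(np.allclose(X_train_std, (X_train - mu) / sigma))
print(np.allclose(X_test_std, (X_test - mu) / sigma))

# Each training column now has mean 0 and standard deviation 1
print(X_train_std.mean(axis=0).round(6), X_train_std.std(axis=0).round(6))
```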

Next, the model had to be trained on the standardized training set and make predictions on the standardized testing set.

```python
model.fit(X_train_std, y_train)

pred = model.predict(X_test_std)
```
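An alternative to scaling by hand (an alternative, not what was done here) is a scikit-learn `Pipeline`. It fits the scaler on the training data inside `.fit()` and reapplies the same transform at prediction time, which makes the standardize-vs-normalize comparison a one-line swap. A minimal sketch on synthetic data:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Synthetic stand-in for the crash features and the SEVERITY label
X, y = make_classification(n_samples=1000, n_features=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

results = {}
for scaler in (StandardScaler(), MinMaxScaler()):
    # The scaler is fitted on X_train only, then reused when scoring X_test
    pipe = make_pipeline(scaler, LogisticRegression())
    pipe.fit(X_train, y_train)
    results[type(scaler).__name__] = pipe.score(X_test, y_test)  # accuracy

print(results)
```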

After using the model to make predictions, we have to measure how accurate they were and understand which predictions tended to be correct and where the model overfitted or underfitted. I did this by using the classification_report function to see how the model performed in detail, and then a ConfusionMatrixDisplay to visualize how many true positives, true negatives, false positives, and false negatives were in the model’s predictions. I also used the accuracy_score function to compute the accuracy of the model’s predictions against the label values in the test data.

```python
print(classification_report(y_test, pred))

print(accuracy_score(y_test, pred))

ConfusionMatrixDisplay.from_predictions(y_test, pred,
                                        display_labels=['0', '1'])
plt.show()
```
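The numbers in `classification_report` come straight from the four confusion-matrix cells. A small sketch with made-up labels (hypothetical, not this model’s output) that computes precision, recall, and accuracy by hand and checks them against scikit-learn:

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix, precision_score, recall_score

# Hypothetical true labels and predictions (1 = severe, 0 = not severe)
y_true = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 1])
y_pred = np.array([0, 0, 0, 0, 0, 1, 1, 0, 0, 0])

# For labels [0, 1], ravel() returns the cells in the order TN, FP, FN, TP
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

precision = tp / (tp + fp)  # of crashes predicted severe, the share that were severe
recall = tp / (tp + fn)     # of severe crashes, the share the model caught
accuracy = (tp + tn) / (tp + tn + fp + fn)

print(tn, fp, fn, tp)
print(precision, recall, accuracy)
```

Here accuracy is 0.6 even though only one of the four severe crashes is caught, which is why recall on the minority class is worth reading alongside accuracy.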

Finally, I repeated this process, but instead of standardizing my data, I normalized it. Normalizing my training and testing sets maps each feature into a [0, 1] range, with 0 being the minimum value and 1 being the maximum value. This is useful because the distributions in most of my label-vs-feature graphs do not follow a bell curve or any clear shape.

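```python
from sklearn.preprocessing import MinMaxScaler

norm_scaler = MinMaxScaler()
X_train_norm = norm_scaler.fit_transform(X_train)
X_test_norm = norm_scaler.transform(X_test)

# Retrain and predict as before, now on the normalized data
model.fit(X_train_norm, y_train)
pred = model.predict(X_test_norm)
```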
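Like standardization, min-max normalization is a simple per-column formula, x' = (x - min) / (max - min), with min and max taken from the training set. A sketch with toy values (assumed for illustration); note that a test value outside the training range lands outside [0, 1]:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Toy data (hypothetical) with columns like CRASH_HOUR and POSTED_SPEED_LIMIT
X_train = np.array([[0.0, 20.0], [12.0, 30.0], [23.0, 55.0]])
X_test = np.array([[6.0, 65.0]])  # 65 exceeds the training maximum of 55

scaler = MinMaxScaler()
X_train_norm = scaler.fit_transform(X_train)
X_test_norm = scaler.transform(X_test)

# Manual version using the training-set min and max
col_min = X_train.min(axis=0)
col_max = X_train.max(axis=0)
manual_test = (X_test - col_min) / (col_max - col_min)

print(X_train_norm)
print(X_test_norm, manual_test)
```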

Comparing the model’s results after standardizing and after normalizing the data shows that the choice of scaling didn’t matter in the end, since the accuracy and the ConfusionMatrixDisplay were the same. More importantly, it’s clear that the imbalance in the SEVERITY label (whether a car accident was severe) and the nonlinear relationship between my features and that label significantly affected my model’s results. This problem shows up in my ConfusionMatrixDisplay: the ratio of true negatives to false negatives (negatives being the dominant class in my label) is much higher than the ratio of true positives to false positives (positives being the minority class). I now realize that logistic regression was probably not the best model to use because of the nonlinear way traffic accidents occur and unfold. It also didn’t help that I didn’t balance my data, which only made this problem worse.
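One common way to respond to the imbalance described above, which this project did not use, is `class_weight="balanced"` in `LogisticRegression`, which weights each class inversely to its frequency during training. A sketch on synthetic data with roughly 10% positives (an assumption standing in for SEVERITY) comparing recall on the minority class:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import recall_score
from sklearn.model_selection import train_test_split

# Synthetic imbalanced data: about 90% negatives, 10% positives
X, y = make_classification(n_samples=5000, n_features=6, weights=[0.9], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

plain = LogisticRegression().fit(X_train, y_train)
balanced = LogisticRegression(class_weight="balanced").fit(X_train, y_train)

# Minority-class recall: the share of actual positives each model catches
recall_plain = recall_score(y_test, plain.predict(X_test))
recall_balanced = recall_score(y_test, balanced.predict(X_test))
print(recall_plain, recall_balanced)
```

Higher minority recall usually comes at the cost of more false positives, so precision is worth checking alongside it.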

Although my model made many errors, it also had successes: it still achieved an overall accuracy above 90%, and it correctly predicted more than 92% of the negative (non-severe) cases. I think that if I had done more planning on which model to use, and had chosen my dataset and engineered my features more carefully, my model would ultimately have performed better.
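With imbalanced classes, an accuracy figure is easiest to judge next to a majority-class baseline. A sketch using scikit-learn’s `DummyClassifier` on synthetic labels (not the crash data): a model that always predicts the most frequent class scores exactly that class’s share of the test set.

```python
import numpy as np
from sklearn.dummy import DummyClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
# Hypothetical labels with roughly 8% positives; the features play no role in this baseline
X = rng.normal(size=(2000, 3))
y = (rng.random(2000) < 0.08).astype(int)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# Always predicts the most frequent training label (0 here)
baseline = DummyClassifier(strategy="most_frequent").fit(X_train, y_train)
baseline_acc = accuracy_score(y_test, baseline.predict(X_test))

# Equals the fraction of test rows that belong to the majority class
print(baseline_acc, np.mean(y_test == 0))
```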

Despite the failures mentioned above, I learned a lot, not just about data science and building machine-learning models, but about how difficult predicting traffic is. I wonder how the people who engineer self-driving cars and traffic systems in general manage it. My technical expertise in these libraries and in the Python programming language has increased tenfold, and I think I should take on more challenging projects like this one. This project was a challenge, but it was a fun challenge.