In the world of deep learning, autoencoders hold a special place. Whether you’re looking to compress data, denoise images, or uncover hidden patterns, autoencoders offer an appealing blend of simplicity and power. Let’s dive into what makes autoencoders unique and explore their key applications.

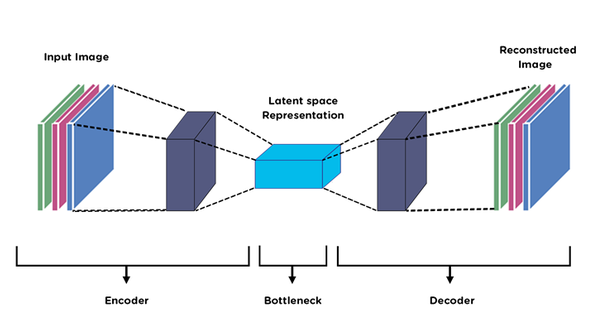

Autoencoders are neural networks designed to learn efficient encodings of input data. They consist of two main components:

- Encoder: Compresses the input into a latent-space representation.

- Decoder: Reconstructs the input data from the latent-space representation.

An autoencoder’s structure includes:

- Input Layer: Takes in the original data.

- Hidden Layer (bottleneck): Holds the compressed representation of the data.

- Output Layer: Attempts to recreate the input data.
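The three layers above can be sketched in plain NumPy. This is a minimal illustration, not a production implementation: the toy data, the names `w_enc` and `w_dec`, the learning rate, and the purely linear layers are all assumptions chosen to keep the example short. The key idea it shows is that the input doubles as the training target.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (an assumption for illustration): 200 samples with 8 features
# that actually lie on a 2-dimensional subspace.
x = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 8))

input_dim, bottleneck_dim = 8, 2
w_enc = rng.normal(scale=0.1, size=(input_dim, bottleneck_dim))  # encoder weights
w_dec = rng.normal(scale=0.1, size=(bottleneck_dim, input_dim))  # decoder weights


def forward(x, w_enc, w_dec):
    """Input layer -> bottleneck -> output layer (all linear in this sketch)."""
    code = x @ w_enc      # compressed representation
    x_hat = code @ w_dec  # attempted reconstruction of the input
    return code, x_hat


def mse(x, x_hat):
    return float(np.mean((x - x_hat) ** 2))


initial_loss = mse(x, forward(x, w_enc, w_dec)[1])

learning_rate = 0.005
for _ in range(3000):
    code, x_hat = forward(x, w_enc, w_dec)
    # Gradient of the per-sample squared error, averaged over samples.
    grad_out = 2.0 * (x_hat - x) / x.shape[0]
    grad_dec = code.T @ grad_out
    grad_enc = x.T @ (grad_out @ w_dec.T)
    w_dec -= learning_rate * grad_dec
    w_enc -= learning_rate * grad_enc

final_loss = mse(x, forward(x, w_enc, w_dec)[1])
print(f"reconstruction MSE before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Because the toy data really is two-dimensional, a two-unit bottleneck can reconstruct it almost perfectly. On real data the bottleneck forces the network to keep only the most useful structure.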

1. Dimensionality Reduction

Like PCA, autoencoders can reduce the number of features while preserving important information.
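To make the PCA comparison concrete, PCA itself can be written as an encode/decode pair. The sketch below uses made-up data and a hypothetical choice of `k = 3` components. A linear autoencoder trained with mean squared error is known to recover the same subspace as PCA; nonlinear activations are what allow autoencoders to go beyond it.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical data: 300 samples, 10 correlated features plus a little noise.
x = rng.normal(size=(300, 3)) @ rng.normal(size=(3, 10))
x = x + 0.05 * rng.normal(size=x.shape)

mean = x.mean(axis=0)
x_centered = x - mean

# Rows of vt are the principal directions, ordered by explained variance.
_, _, vt = np.linalg.svd(x_centered, full_matrices=False)
k = 3
components = vt[:k]                          # shape (k, 10)

codes = x_centered @ components.T            # "encode": 10 features -> 3
x_reconstructed = codes @ components + mean  # "decode": 3 features -> 10

reconstruction_mse = float(np.mean((x - x_reconstructed) ** 2))
print(codes.shape, reconstruction_mse)
```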

2. Data Denoising

Autoencoders can remove noise from images, audio, and other data by learning to reconstruct clean data from noisy inputs.
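The training data for a denoising setup can be sketched as follows. The helper `add_gaussian_noise`, the noise level of 0.2, and the random stand-in "images" are assumptions for illustration; the point is only that the model sees the noisy version as input and the clean version as target.

```python
import numpy as np


def add_gaussian_noise(x_clean, noise_std=0.2, seed=0):
    """Return a corrupted copy of x_clean, kept inside the [0, 1] pixel range."""
    rng = np.random.default_rng(seed)
    noisy = x_clean + rng.normal(scale=noise_std, size=x_clean.shape)
    return np.clip(noisy, 0.0, 1.0)


# Stand-in for five flattened 28x28 images scaled to [0, 1].
x_clean = np.random.default_rng(42).random((5, 784))
x_noisy = add_gaussian_noise(x_clean)

# A denoising autoencoder is then trained on (input, target) = (x_noisy, x_clean),
# for example with Keras: autoencoder.fit(x_noisy, x_clean, ...)
print(x_noisy.shape, float(np.abs(x_noisy - x_clean).mean()))
```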

3. Anomaly Detection

Trained on normal data, autoencoders can detect anomalies by identifying high reconstruction errors on unusual data.
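One common way to turn reconstruction error into a decision is a threshold fitted on normal data. The sketch below simulates reconstructions instead of running a trained model, and the 99th-percentile cutoff and helper names are assumptions; in practice the threshold is tuned to the false-alarm rate you can tolerate.

```python
import numpy as np


def reconstruction_errors(x, x_hat):
    """Per-sample mean squared error between inputs and reconstructions."""
    return np.mean((x - x_hat) ** 2, axis=1)


def fit_threshold(normal_errors, percentile=99.0):
    """Pick a cutoff so that roughly 1% of normal samples would be flagged."""
    return float(np.percentile(normal_errors, percentile))


rng = np.random.default_rng(0)
x_normal = rng.random((1000, 20))
x_unusual = rng.random((10, 20))

# Simulated model outputs: normal data is reconstructed well, unusual data poorly.
x_hat_normal = x_normal + rng.normal(scale=0.05, size=x_normal.shape)
x_hat_unusual = x_unusual + rng.normal(scale=0.5, size=x_unusual.shape)

threshold = fit_threshold(reconstruction_errors(x_normal, x_hat_normal))
normal_flags = reconstruction_errors(x_normal, x_hat_normal) > threshold
unusual_flags = reconstruction_errors(x_unusual, x_hat_unusual) > threshold
print(normal_flags.mean(), unusual_flags.mean())
```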

4. Image Generation

Variational Autoencoders (VAEs) can generate new data samples similar to the training data, which makes them useful for tasks like image generation.
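Two pieces distinguish a VAE from a plain autoencoder: the encoder outputs a mean and log-variance per latent dimension, and the loss adds a KL term that pulls this distribution toward a standard normal. A minimal NumPy sketch of both pieces follows; the function names and example values are hypothetical, and no encoder or decoder network is included.

```python
import numpy as np


def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, sigma^2) || N(0, I)), summed over latent dimensions."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1)


rng = np.random.default_rng(0)
mu = np.array([[0.0, 0.0], [1.0, -1.0]])        # pretend encoder means
log_var = np.array([[0.0, 0.0], [-1.0, -1.0]])  # pretend encoder log-variances

z = sample_latent(mu, log_var, rng)
print(z.shape, kl_to_standard_normal(mu, log_var))

# To generate new data after training, one would draw z from N(0, I)
# and pass it through the trained decoder.
```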

The main types of autoencoders are:

- Vanilla Autoencoders: Fully connected layers without special constraints.

- Convolutional Autoencoders: Use convolutional layers, ideal for image data.

- Sparse Autoencoders: Apply a sparsity constraint on the hidden layer.

- Denoising Autoencoders: Specifically designed to remove noise.

- Variational Autoencoders (VAEs): Use a probabilistic approach for the latent space, allowing data generation.
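Of the variants above, the sparsity constraint is perhaps the least obvious, so here is one way it is often expressed: an L1 penalty on the hidden activations added to the reconstruction loss. The function name and the weight `l1_weight=1e-3` are assumptions for this sketch. In Keras, a similar effect is available through the `activity_regularizer` argument of a layer.

```python
import numpy as np


def sparse_autoencoder_loss(x, x_hat, hidden, l1_weight=1e-3):
    """Reconstruction MSE plus an L1 penalty that favors mostly-zero activations."""
    reconstruction = np.mean((x - x_hat) ** 2)
    sparsity_penalty = l1_weight * np.mean(np.abs(hidden))
    return float(reconstruction + sparsity_penalty)


x = np.ones((4, 6))
x_hat = np.full((4, 6), 0.9)   # same reconstruction quality in both cases

hidden_dense = np.ones((4, 3))  # every hidden unit active
hidden_sparse = np.zeros((4, 3))
hidden_sparse[:, 0] = 1.0       # only one hidden unit active

print(sparse_autoencoder_loss(x, x_hat, hidden_dense),
      sparse_autoencoder_loss(x, x_hat, hidden_sparse))
```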

Here’s a brief implementation using TensorFlow and Keras:

```python
import tensorflow as tf
from tensorflow.keras.layers import Input, Dense
from tensorflow.keras.models import Model

input_dim = 784   # flattened 28x28 MNIST image
latent_dim = 64   # size of the bottleneck

# Encoder: 784 -> 64
input_layer = Input(shape=(input_dim,))
encoded = Dense(latent_dim, activation='relu')(input_layer)

# Decoder: 64 -> 784, sigmoid keeps outputs in [0, 1]
decoded = Dense(input_dim, activation='sigmoid')(encoded)

autoencoder = Model(input_layer, decoded)
autoencoder.compile(optimizer='adam', loss='mse')
autoencoder.summary()
```
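The model above is built but not yet trained. A minimal training sketch follows, rebuilding the same architecture so the block runs on its own. The random array is a stand-in for real data and is an assumption of this example, as are the epoch count and batch size. With real MNIST you would load the images with `tf.keras.datasets.mnist.load_data()`, flatten them to 784 values, and scale them to [0, 1].

```python
import numpy as np
from tensorflow.keras.layers import Input, Dense
from tensorflow.keras.models import Model

input_dim, latent_dim = 784, 64

inputs = Input(shape=(input_dim,))
encoded = Dense(latent_dim, activation='relu')(inputs)
decoded = Dense(input_dim, activation='sigmoid')(encoded)

autoencoder = Model(inputs, decoded)
autoencoder.compile(optimizer='adam', loss='mse')

# A second model that shares the encoder layer, used to extract latent codes.
encoder = Model(inputs, encoded)

# Random stand-in for flattened, [0, 1]-scaled images (not real MNIST).
x_train = np.random.default_rng(0).random((256, input_dim)).astype("float32")

# The input is also the target: the network learns to reproduce what it sees.
history = autoencoder.fit(x_train, x_train, epochs=2, batch_size=64, verbose=0)

codes = encoder.predict(x_train[:5], verbose=0)
reconstructions = autoencoder.predict(x_train[:5], verbose=0)
print(codes.shape, reconstructions.shape)
```

Passing the same array as both input and target is what makes this an autoencoder rather than an ordinary supervised model.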

Autoencoders are powerful tools for tasks like dimensionality reduction, denoising, and anomaly detection. Their ability to learn efficient data representations makes them invaluable in deep learning. Happy coding, and may your autoencoders always reconstruct with precision!