Once, in a hackathon, my teammates and I developed a machine learning Python program that predicts the age of crustaceans based on a set of features. It's a very basic regression problem.

We started by developing the program with a typical workflow:

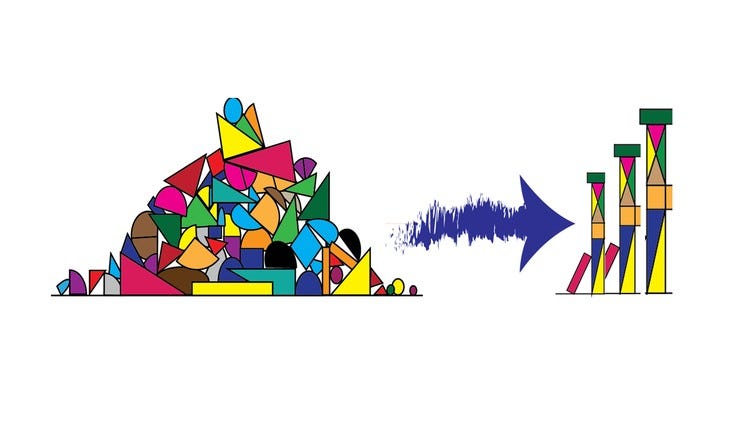

Feature engineering, data cleaning, data splitting, model stacking, model evaluation and comparison, model selection, and finally hyperparameter tuning.

Since a picture is worth a thousand words, here is the UML (Unified Modeling Language) workflow diagram that summarizes it.
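The steps listed above can be sketched in a few lines of scikit-learn. This is only an illustration under stated assumptions, not the program we wrote during the hackathon: the data is synthetic, and the feature names (`length`, `weight`), the candidate models and the parameter grids are all hypothetical choices made for the example.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor, StackingRegressor
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import GridSearchCV, cross_val_score, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the crustacean data (hypothetical features).
rng = np.random.default_rng(0)
n = 300
length = rng.uniform(0.5, 2.0, n)
weight = length ** 3 * rng.uniform(8.0, 12.0, n)
age = 3.0 + 4.0 * length + rng.normal(0.0, 0.5, n)

# Feature engineering: add a weight-to-length ratio as an extra feature.
X = np.column_stack([length, weight, weight / length])
y = age

# Data cleaning: drop rows that contain non-finite values.
mask = np.isfinite(X).all(axis=1) & np.isfinite(y)
X, y = X[mask], y[mask]

# Data splitting: keep a test set that is never used for selection or tuning.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)


def make_ridge():
    return make_pipeline(StandardScaler(), Ridge())


def make_forest():
    return RandomForestRegressor(n_estimators=50, random_state=0)


# Model stacking: combine the two base models with a Ridge meta-model.
candidates = {
    "ridge": make_ridge(),
    "forest": make_forest(),
    "stack": StackingRegressor(
        estimators=[("ridge", make_ridge()), ("forest", make_forest())],
        final_estimator=Ridge(),
    ),
}

# Model evaluation and comparison: cross-validated (negated) mean absolute error.
scoring = "neg_mean_absolute_error"
cv_scores = {
    name: cross_val_score(model, X_train, y_train, cv=5, scoring=scoring).mean()
    for name, model in candidates.items()
}

# Model selection: the highest negated MAE is the lowest error.
best_name = max(cv_scores, key=cv_scores.get)

# Hyperparameter tuning of the selected model (small illustrative grids).
param_grids = {
    "ridge": {"ridge__alpha": [0.1, 1.0, 10.0]},
    "forest": {"max_depth": [3, None]},
    "stack": {"final_estimator__alpha": [0.1, 1.0, 10.0]},
}
search = GridSearchCV(candidates[best_name], param_grids[best_name], cv=5, scoring=scoring)
search.fit(X_train, y_train)

# Final check on data the whole process has never seen.
test_mae = mean_absolute_error(y_test, search.predict(X_test))
print(best_name, search.best_params_, round(test_mae, 3))
```

The point of the last step is that the test set is touched only once, after every selection and tuning decision has been made on the training folds.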

All night long, our score was rising step by step, and we were very happy imagining ourselves securing first place.

At 6 am, the private scores were revealed: our score dropped sharply, and we sadly finished in 19th place out of 37.

It was a real disappointment.

The phenomenon that occurred is simply called overfitting. Our model was unable to generalize (extrapolate) to new data that may be different from the data it was trained on.
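To make the idea concrete, here is a minimal sketch of overfitting in plain Python. It is not our hackathon model: the data is synthetic, and the "model" is a deliberately extreme one-nearest-neighbour predictor that simply memorizes its training points. The symptom to look for is the gap between the error on data the model has seen and the error on fresh data.

```python
import random

random.seed(0)


def make_data(n):
    # Hypothetical 1-D data: a linear signal plus Gaussian noise.
    xs = [random.uniform(0.0, 10.0) for _ in range(n)]
    ys = [2.0 * x + random.gauss(0.0, 3.0) for x in xs]
    return xs, ys


def predict_1nn(train_x, train_y, x):
    # Memorizing model: return the target of the closest training point.
    i = min(range(len(train_x)), key=lambda j: abs(train_x[j] - x))
    return train_y[i]


def mean_abs_error(train_x, train_y, xs, ys):
    errors = [abs(predict_1nn(train_x, train_y, x) - y) for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)


train_x, train_y = make_data(200)
new_x, new_y = make_data(200)  # fresh data drawn from the same process

train_mae = mean_abs_error(train_x, train_y, train_x, train_y)
new_mae = mean_abs_error(train_x, train_y, new_x, new_y)

print("error on seen data:", train_mae)
print("error on new data: ", new_mae)
```

On the training points every prediction is its own nearest neighbour, so the error there is zero, while the error on the new points is clearly larger because the model has memorized the noise as well as the signal.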

The point is that my teammates and I didn't focus on the Exploratory Data Analysis part. I realized how important this step is and began reading Medium articles and books and watching Coursera courses on this extremely important step.

This article is a summary of all the resources that I found very useful.

Coursera:

Exploratory Data Analysis for Machine Learning by IBM

Structuring Machine Learning Projects by DeepLearning.AI

Improving Deep Neural Networks: Hyperparameter Tuning, Regularization and Optimization by DeepLearning.AI

Kaggle:

Feature Engineering course

Data Cleaning course