2. Solution:

— I think the meaning of ‘padding’ in real life and in CNNs is almost the same: both refer to something placed on the outside to protect what is inside. In CNNs, we simply surround the image with a border of zeros.

2.1) Solving the first problem: Shrinking output

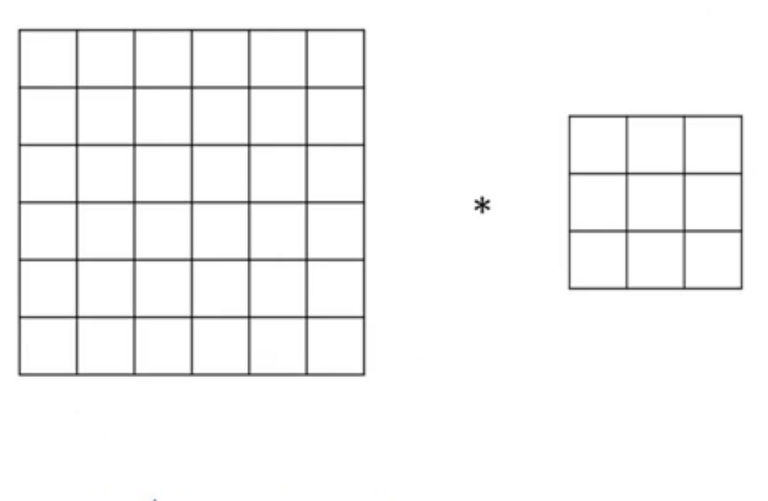

— There is one important parameter in padding: p (the padding amount), which is the width of the zero border; in this case p=1. With padding, the size of the image after convolution is:

$$m' = (n + 2p - f + 1) \times (n + 2p - f + 1)$$

m': size of the image after convolution with padding

p: padding amount (in this case p=1)

f: size of the kernel

n: size of the initial image

After padding, the initial image is 8×8, and after the convolution layer the output is 6×6 (so it has not shrunk).

With p = 1, f = 3 (a 3×3 kernel) and n = 6 (a 6×6 image):

$$m' = (6 + 2\cdot 1 - 3 + 1) \times (6 + 2\cdot 1 - 3 + 1) = 6 \times 6$$
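The formula is easy to check in code. A minimal sketch, assuming a square image and kernel and stride 1 (the function name is hypothetical):

```python
def padded_output_size(n, f, p=0):
    """Side length of the output of an f x f kernel over an n x n image padded by p (stride 1)."""
    return n + 2 * p - f + 1


print(padded_output_size(6, 3, p=1))  # 6: the output keeps the input size
print(padded_output_size(6, 3, p=0))  # 4: without padding the output shrinks
```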

So the first problem is solved. Is the second problem also solved by this method?

2.2) Solving the second problem: Losing the information of the pixels near the edge of the image:

The pixel in the top-left corner (right at the edge of the image) is now covered by several kernel positions instead of just one, so its information is retained after the convolution. The pixels that ‘lose information’ are now those of the padding border, which carry no meaning anyway. As a result, the second issue is resolved.
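To check the claim about the corner pixel, this sketch (a hypothetical helper `coverage`; square image, stride 1) counts how many kernel positions include each original pixel, with and without padding:

```python
def coverage(n, f, p=0):
    """Count how many f x f kernel positions (stride 1) cover each pixel of an n x n image padded by p."""
    size = n + 2 * p                      # side length of the padded image
    m = size - f + 1                      # number of kernel positions per axis
    counts = [[0] * n for _ in range(n)]
    for top in range(m):
        for left in range(m):
            # Every original pixel inside this window gets one more count.
            for r in range(top, top + f):
                for c in range(left, left + f):
                    i, j = r - p, c - p   # map padded coordinates back to the original image
                    if 0 <= i < n and 0 <= j < n:
                        counts[i][j] += 1
    return counts


print(coverage(6, 3, p=0)[0][0])  # 1: the corner pixel is seen by a single window
print(coverage(6, 3, p=1)[0][0])  # 4: with padding it is seen by four windows
print(coverage(6, 3, p=1)[2][2])  # 9: an interior pixel is seen by f*f windows
```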

3) Some further definitions:

— Valid convolutions: convolutions without padding. These are the ones that cause the problem: the output is smaller than the input.

— Same convolutions: convolutions with padding chosen so that the output has the same size as the input.

— If you want to find p (the padding amount), you can use this equation:

$$n + 2p - f + 1 = n \;\Rightarrow\; p = \frac{f - 1}{2}$$

— Usually, in computer vision, f (the kernel size) is odd (for example 3×3 or 5×5). When f is even, p = (f − 1)/2 is not an integer, so we have to use asymmetric padding (more padding on the left or more on the right). Moreover, when f is odd, the filter has a central pixel, which gives a convenient point for referring to the position of the filter.
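A minimal sketch of this rule (hypothetical helper `same_padding`, stride 1): the two sides together need f − 1 pixels; for odd f each side gets (f − 1)/2, while for even f the total is odd and must be split unevenly. Here the extra pixel goes on the "after" (right/bottom) side, which, to my understanding, is also the convention TensorFlow uses for 'same' padding.

```python
def same_padding(f):
    """Split the total padding f - 1 needed for a stride-1 'same' convolution into (before, after)."""
    total = f - 1               # from n + 2p - f + 1 = n, both sides together need f - 1 pixels
    before = total // 2
    after = total - before      # for even f, the extra pixel goes on the 'after' side
    return before, after


for f in (3, 5, 2, 4):
    print(f, same_padding(f))
# 3 (1, 1)
# 5 (2, 2)
# 2 (0, 1)
# 4 (1, 2)
```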

4) Implementation in TensorFlow:

In TensorFlow, we set the ‘padding’ argument of the `Conv2D` layer:

```python
import tensorflow as tf
from tensorflow.keras.layers import Conv2D, Input
from tensorflow.keras.models import Sequential

model = Sequential()
model.add(Input(shape=(6, 6, 1)))  # assumed 6x6 single-channel input so summary() can build the model
model.add(Conv2D(filters=32,
                 kernel_size=(3, 3),  # parameter f
                 padding='same',
                 activation='relu'))

# in this case we set padding='same' for same convolutions
# use padding='valid' for valid convolutions
model.summary()
```
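To see what padding='valid' and padding='same' compute, here is a deliberately naive sketch of the stride-1 computation (the function `conv2d` is a hypothetical helper, not TensorFlow's implementation; it assumes a square single-channel image and a square kernel, and computes cross-correlation, which is what CNN libraries call convolution):

```python
def conv2d(image, kernel, p=0):
    """Naive stride-1 2D convolution (cross-correlation, as in CNNs) with zero padding p."""
    n, f = len(image), len(kernel)
    # Zero-pad the image by p on every side.
    size = n + 2 * p
    padded = [[0] * size for _ in range(size)]
    for i in range(n):
        for j in range(n):
            padded[i + p][j + p] = image[i][j]
    # Slide the kernel over every position where it fits entirely.
    m = size - f + 1
    out = [[0] * m for _ in range(m)]
    for r in range(m):
        for c in range(m):
            out[r][c] = sum(padded[r + a][c + b] * kernel[a][b]
                            for a in range(f) for b in range(f))
    return out


image = [[1] * 6 for _ in range(6)]
kernel = [[1] * 3 for _ in range(3)]
valid = conv2d(image, kernel, p=0)   # valid convolution: no padding
same = conv2d(image, kernel, p=1)    # same convolution: p = (3 - 1) / 2 = 1
print(len(valid), len(same))         # 4 6
print(same[0][0], same[2][2])        # 4 9
```

The corner output of the same convolution is 4 rather than 9 because five of the nine kernel cells fall on the zero border.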

II) Strided convolution:

1. Definition:

— Strided convolution is a technique used in CNNs to reduce the spatial dimensions of the input data. It applies the convolution operation with a stride larger than 1, which means the filter moves across the input data by skipping some positions.

— Strided convolution can also help the network extract higher-level features. Because each strided layer shrinks the feature map, the kernels in later layers cover a larger region of the original input, so the network can capture more abstract and global patterns in the data.

— Because strided convolution reduces the spatial dimensions of the input data, it reduces the computation in the following layers and, when the feature maps are later flattened into fully connected layers, the number of parameters in those layers, which can help prevent overfitting.
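A back-of-the-envelope sketch of that last point, for an entirely hypothetical setup (a 32×32 RGB input, 32 filters of 3×3 with padding 1, then Flatten and a 10-unit Dense layer; the helper name is also hypothetical):

```python
def conv_then_dense_params(n, c_in, filters, f, s, p, units):
    """Parameter counts for a Conv2D layer followed by Flatten + Dense (square input assumed)."""
    m = (n + 2 * p - f) // s + 1                    # spatial size after the convolution
    conv_params = f * f * c_in * filters + filters  # weights + biases; independent of the stride
    dense_params = m * m * filters * units + units  # flattened features x units + biases
    return m, conv_params, dense_params


print(conv_then_dense_params(32, 3, 32, 3, s=1, p=1, units=10))  # (32, 896, 327690)
print(conv_then_dense_params(32, 3, 32, 3, s=2, p=1, units=10))  # (16, 896, 81930)
```

The convolution's own parameter count is the same for both strides; only the Dense layer shrinks, by roughly a factor of s².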

2. How does it work?

— By default, the kernel (the blue window) slides across the whole image one pixel at a time (step = 1). The ‘stride’ parameter changes the step size (for example, stride = 2), and the change applies to both the horizontal and the vertical axis.
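The effect of the stride on the kernel's path can be sketched by listing the offsets of the window's top-left corner along one axis (hypothetical helper; the 7-pixel width and 3-pixel kernel are arbitrary illustration values):

```python
def kernel_positions(n, f, s, p=0):
    """Top-left offsets the kernel visits along one axis of an n-pixel image padded by p."""
    return list(range(0, n + 2 * p - f + 1, s))


print(kernel_positions(7, 3, s=1))  # [0, 1, 2, 3, 4]
print(kernel_positions(7, 3, s=2))  # [0, 2, 4]
```

The same offsets are used on the vertical axis, so a 7×7 input gives a 5×5 output with stride 1 and a 3×3 output with stride 2.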

With strided convolution, the equation for the size of the image after convolution gains a parameter s (the stride):

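$$M = \left(\left\lfloor \frac{n + 2p - f}{s} \right\rfloor + 1\right) \times \left(\left\lfloor \frac{n + 2p - f}{s} \right\rfloor + 1\right)$$

where ⌊·⌋ rounds down, since the kernel is only applied where it fits entirely inside the (padded) image.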

n: size of the initial image

p: padding amount

f: kernel size

s: stride (step)

M: size of the image after convolution

In the following example, n = 7, p = 0, f = 3 and s = 2:

$$M = \left\lfloor \frac{7 + 2\cdot 0 - 3}{2} \right\rfloor + 1 = 3$$

so the output is 3×3.
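The formula, including the rounding down, can be checked with a small sketch (hypothetical function name; square input assumed). The last line cross-checks the closed form against a direct count of kernel positions:

```python
def strided_output_size(n, f, s=1, p=0):
    """Output side length: floor((n + 2p - f) / s) + 1."""
    return (n + 2 * p - f) // s + 1


print(strided_output_size(7, 3, s=2))  # 3, as in the example above
print(strided_output_size(8, 3, s=2))  # 3: (8 - 3) / 2 = 2.5 is rounded down to 2
# Cross-check against counting the kernel's top-left positions directly.
assert all(strided_output_size(n, 3, s) == len(range(0, n - 3 + 1, s))
           for n in range(3, 20) for s in (1, 2, 3))
```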

3) Implementation in TensorFlow:

```python
import tensorflow as tf
from tensorflow.keras.layers import Conv2D, Input
from tensorflow.keras.models import Sequential

model = Sequential()
model.add(Input(shape=(7, 7, 1)))  # assumed 7x7 single-channel input so summary() can build the model
model.add(Conv2D(filters=32,
                 kernel_size=(3, 3),  # parameter f
                 padding='same',      # padding
                 strides=2,           # stride (steps); Keras names this argument `strides`
                 activation='relu'))

# in this case we set strides=2, meaning step = 2 on both the horizontal and vertical axes
model.summary()
```

III) Conclusion:

— In practice, tuning the ‘padding’ parameter is quite simple, but understanding it deeply is also important. That is why I wrote this blog: to help everyone understand the ‘padding’ parameter.

— Strided convolution is an essential technique for reducing the spatial dimensions of the image while extracting its important features.

Reference:

This blog is heavily inspired by Andrew Ng's Deep Learning Specialization course.