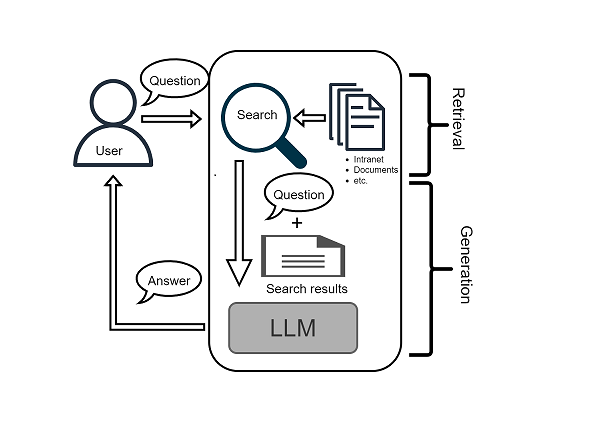

Retrieval-augmented generation (RAG) is one of the most popular approaches for combining a Large Language Model (LLM) with proprietary data. An LLM is combined with an information retrieval system to answer user questions:

- The retrieval step involves searching for documents or information relevant to the question.

- In the generation step, the question is augmented with the search results and passed to the LLM to generate an answer.
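The two steps above can be sketched in a few lines of Python. This is only a minimal illustration: `build_rag_prompt`, `answer_question`, the prompt wording, and the `search` and `generate` callables are all hypothetical placeholders for whatever retrieval system and LLM client a given RAG framework provides.

```python
from typing import Callable, List


def build_rag_prompt(question: str, passages: List[str]) -> str:
    """Augment the user question with the retrieved passages."""
    # Number the passages so the model can tell them apart.
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer_question(
    question: str,
    search: Callable[[str], List[str]],   # hypothetical retrieval function
    generate: Callable[[str], str],       # hypothetical LLM call
    top_k: int = 3,
) -> str:
    """Run the retrieval step, then the generation step."""
    passages = search(question)[:top_k]
    return generate(build_rag_prompt(question, passages))
```

Because the LLM is passed in as a plain callable, swapping the model used in the generation step does not affect the retrieval step, which is what makes the model choice an independent decision.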

In many commercial or open-source RAG systems and frameworks, you can choose the LLM for the generation step. And that is the question we want to answer here:

How can you select the ‘best’ LLM for your RAG system?

What do we mean by the ‘best’ LLM? Of course, we would like to choose the LLM that answers most of our users’ questions correctly. However, this is not possible without great effort: we do not know the questions the users will ask, nor do we know the search results or the correct answers.

So we choose a more general criterion: Which LLM correctly answers questions related to a general context, such as Wikipedia articles?

We should expect the quality of the answers provided by an LLM in this scenario to be better than in the RAG system: a Wikipedia article is written to be generally understandable, and the terms it uses are familiar. In contrast, the documents in the RAG scenario may contain specialized terms that are not known to the LLM, and they may not have been written for a wide audience. But since that limitation is the same for all models, we can still use the criterion for comparing the LLMs.

The question posed by this criterion can be answered with some desk research, at least for the scenario where the users of the RAG system are English speakers. And that brings us to our second question:

How can you select the ‘best’ LLM for a RAG system for non-English-speaking users?

LLMs can solve many different tasks, and for most of these tasks a benchmark has been established. So a good starting point is to take a closer look at these benchmarks.

For the LLM selection, we need a benchmark where the task is similar to the generation step in the RAG system. These kinds of benchmarks are often called reading-comprehension or open-book question answering. Some examples:

- NarrativeQA is a dataset of stories and corresponding questions designed to test reading comprehension, especially on long documents.

- HotpotQA is a dataset of Wikipedia-based question-answer pairs. There are different kinds of questions, like intersection questions or comparison questions, to test reading comprehension and question answering.

- DROP is a dataset of Wikipedia-based question-answer pairs. In the vast majority of cases, the answers consist of a name or a number (we will come back to this point later).

Before we analyze the different benchmarks, we have to decide who the candidates are for our investigation. Let’s choose the following ones:

- OpenAI: GPT-4o

- Anthropic: Claude-3-Opus

- Google: Gemini-1.5-Pro

- Meta: Llama3-70b-instruct

Now, let’s come back to the question of which benchmark we should use. If we check the technical reports of the LLMs, we see that only the DROP benchmark is evaluated for all models. The evaluation results are shown in the table:

So for the English-speaking RAG system we have a result: GPT-4o is the best choice, closely followed by Claude-3-Opus.

But what about a non-English RAG? How can we select the ‘best’ LLM? Let’s assume that we do the evaluation for a German-speaking RAG. The approach shown here can be transferred 1:1 to any other language.

All the benchmarks in the technical reports are in English. So there is no way around it: we must evaluate the models ourselves. And therefore we need a benchmark in the respective language, i.e. German.

One option is to search for German benchmark datasets on relevant platforms such as HuggingFace, Kaggle or PapersWithCode. There are German question-answering datasets, such as MLQA (MultiLingual Question Answering) from Meta or GermanQuAD from deepset. We could use them to evaluate the LLMs. But that approach brings some disadvantages:

For the German-speaking RAG system we found a benchmark dataset. But what if we need to evaluate LLMs for a summarization task in Italian, and we cannot find a benchmark dataset?

We would like to have a more general solution for the scenario where there is no benchmark for the desired language.

An obvious solution is to translate the benchmark dataset we are interested in and evaluate the LLMs on the translated data. Besides the fact that the approach can be applied to all languages and LLM tasks, there are further advantages:

- The evaluation results of a single LLM for different languages (but the same original benchmark dataset) can be compared, and we can judge how well the LLM ‘understands’ each language.

- It is possible to evaluate LLMs for a multilingual RAG system, say French, Italian and German: evaluate the LLMs on each of the translated benchmark datasets, compute an average score from the results, and use it for selecting the ‘best’ LLM.
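The averaging idea in the last bullet can be sketched as follows. The function name `rank_by_mean_score` and the nested-dictionary layout are assumptions made for illustration, and an unweighted mean is only one possible choice; a system with mostly German users might weight the languages differently.

```python
from statistics import mean
from typing import Dict, List, Tuple


def rank_by_mean_score(
    scores: Dict[str, Dict[str, float]],
) -> List[Tuple[str, float]]:
    """Rank models by their mean score across languages, best first.

    `scores` maps a model name to a {language code: F1 score} dictionary.
    """
    averaged = {
        model: mean(per_language.values())
        for model, per_language in scores.items()
    }
    return sorted(averaged.items(), key=lambda item: item[1], reverse=True)


# Placeholder values for illustration only, not measured results.
example = {
    "model_a": {"fr": 0.8, "it": 0.6, "de": 0.7},
    "model_b": {"fr": 0.5, "it": 0.6, "de": 0.4},
}
best_model, best_score = rank_by_mean_score(example)[0]
```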

We selected 1000 data points from the DROP dataset. For the translation from English into German we used the Amazon Translate service.
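A rough sketch of this preparation step is shown below. The record layout (`passage`, `question`, `answer`), the function name, and the fixed random seed are assumptions; the article does not say how the 1000 data points were drawn or which fields were translated. The `translate` argument stands in for a thin wrapper around a translation service.

```python
import random
from typing import Callable, Dict, List

Record = Dict[str, str]


def sample_and_translate(
    records: List[Record],
    translate: Callable[[str], str],  # hypothetical wrapper around a translation service
    n: int = 1000,
    seed: int = 0,
) -> List[Record]:
    """Draw a reproducible random subset and translate its text fields."""
    rng = random.Random(seed)  # fixed seed so every model sees the same subset
    subset = rng.sample(records, min(n, len(records)))
    return [
        {
            "passage": translate(r["passage"]),
            "question": translate(r["question"]),
            # Assumption: answers are translated too, since names may differ
            # between languages while numbers stay unchanged.
            "answer": translate(r["answer"]),
        }
        for r in subset
    ]
```

Keeping the subset fixed matters more than how it is drawn: the scores are only comparable if every LLM, and every language, is evaluated on the same questions.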

For the evaluation we used OpenAI for the GPT-4o model, Google Vertex AI for Gemini-1.5-Pro, and AWS for the Claude-3-Opus and Llama3-70b-instruct models.

As a first test we did the evaluation with the original 1000 data points. The F1 score was computed using the implementation from the DROP dataset repository.
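To give a feeling for what such an F1 score measures, here is a simplified token-overlap F1 in Python. It is not the official DROP implementation, which additionally handles numbers and multi-span answers; the normalization rules and function names below are assumptions chosen to keep the sketch short.

```python
import string
from collections import Counter
from typing import List

ARTICLES = {"a", "an", "the"}  # English-only; other languages need their own list


def normalize(text: str) -> List[str]:
    """Lowercase, strip surrounding punctuation, and drop articles."""
    tokens = (tok.strip(string.punctuation) for tok in text.lower().split())
    return [tok for tok in tokens if tok and tok not in ARTICLES]


def token_f1(prediction: str, gold: str) -> float:
    """Harmonic mean of token precision and recall against the gold answer."""
    pred_tokens, gold_tokens = normalize(prediction), normalize(gold)
    if not pred_tokens or not gold_tokens:
        # Both empty counts as a match, otherwise as a miss.
        return float(pred_tokens == gold_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)
```

With this kind of metric, a verbose answer that contains the right name or number still earns partial credit, while extra words lower the precision and therefore the score.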

Unlike the evaluations in the technical reports, we used a zero-shot prompt. 3-shot or few-shot prompting is not applicable in a RAG system, as there are no typical examples that could be included in the prompt. As expected, this is reflected in lower F1 scores. But the ranking of the LLMs does not change: GPT-4o is still the leader, followed by Claude-3-Opus.

Now let’s compare the evaluations with the original and translated data points:

Again we see the same pattern: the F1 scores are significantly lower, but the ranking does not change. The big surprise is the Llama3 model. Its loss in F1 score is by far the smallest.
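The comparison described here comes down to two small computations: checking whether the ranking is preserved, and measuring the loss in F1 per model. A minimal sketch, with hypothetical function names and placeholder inputs rather than the measured scores:

```python
from typing import Dict, List


def ranking(scores: Dict[str, float]) -> List[str]:
    """Model names ordered from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)


def f1_loss(original: Dict[str, float], translated: Dict[str, float]) -> Dict[str, float]:
    """Drop in F1 per model when moving from original to translated data."""
    return {m: original[m] - translated[m] for m in original if m in translated}


# Placeholder values for illustration only, not the results from this article.
original = {"model_a": 0.9, "model_b": 0.8, "model_c": 0.5}
translated = {"model_a": 0.7, "model_b": 0.65, "model_c": 0.45}

ranking_unchanged = ranking(original) == ranking(translated)
losses = f1_loss(original, translated)
most_robust = min(losses, key=losses.get)  # model with the smallest loss
```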

If you have to decide which LLM to use in a RAG system, the DROP benchmark is a good evaluation criterion.

If your RAG system is English-speaking, you can directly use the F1 scores reported in the technical reports of the LLMs for your decision.

For other languages, you should have the DROP dataset translated into the desired language by a translation service and then evaluate the LLMs on the translated dataset.

If you are interested in a broader view of evaluating LLMs that also covers non-functional criteria, check out my blog post.