In this blog post, I’ll walk you through the process of building a text classification model using a neural network (NN) with TensorFlow-Keras. I’ve used the 20 Newsgroups dataset, which contains newsgroup documents across different categories. Specifically, I’ve focused on classifying documents into two categories: ‘alt.atheism’ and ‘sci.space’. This guide covers the following steps:

- Data Loading

- Data Preprocessing

- Model Building

- Model Training

- Model Evaluation

Step 1: Data Loading

from sklearn.datasets import fetch_20newsgroups

# Load the 20 newsgroups dataset

newsgroups_train = fetch_20newsgroups(subset='train', categories=['alt.atheism', 'sci.space'])

newsgroups_test = fetch_20newsgroups(subset='test', categories=['alt.atheism', 'sci.space'])

Here, I’ve loaded the dataset with the sklearn.datasets package, keeping only the two categories of interest.

Step 2: Data Preprocessing

First, extract the documents and their labels from the datasets.

# Extract the documents and labels

X_train = newsgroups_train.data

y_train = newsgroups_train.target

X_test = newsgroups_test.data

y_test = newsgroups_test.target

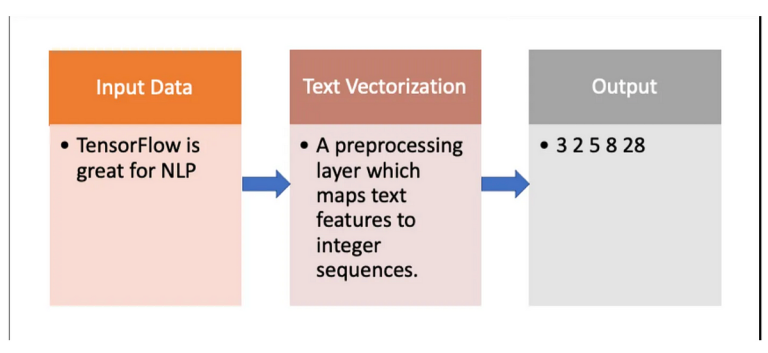

Vectorization transforms text data into vectors of numbers, enabling algorithms to interpret and process the data effectively. There are different ways to convert string data into numerical values; please refer to this page. I’ve used TF-IDF vectorization to transform the text data into numerical vectors.

TF-IDF stands for “Term Frequency-Inverse Document Frequency.” It’s a way to measure how important a word is in a document, weighed against how common it is across all documents. Let me simplify further:

• Term Frequency (TF): This tells you how often a word appears in a single document.

• Inverse Document Frequency (IDF): This measures how rare or common a word is across all documents. If a word appears in many documents, it’s not a unique identifier and gets a lower score.
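To make the two definitions concrete, here is a minimal pure-Python sketch that computes TF-IDF weights by hand. The toy corpus and the `tfidf` helper are my own illustrations, not part of the original code. The formula uses a smoothed IDF followed by L2 normalization, which I believe matches scikit-learn's defaults (`smooth_idf=True`, `norm='l2'`); tokenization here is a plain whitespace split, which is simpler than what `TfidfVectorizer` does.

```python
import math
from collections import Counter

# Toy corpus (hypothetical, for illustration only)
docs = [
    "the rocket reached orbit",
    "the debate about belief",
    "the rocket launch was delayed",
]

def tfidf(docs):
    """Return ({term: weight} per document, {term: idf})."""
    tokenized = [doc.split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents that contain each term
    df = Counter(term for tokens in tokenized for term in set(tokens))
    # Smoothed IDF: ln((1 + n) / (1 + df)) + 1
    idf = {t: math.log((1 + n_docs) / (1 + c)) + 1 for t, c in df.items()}
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)  # raw term counts within this document
        raw = {t: count * idf[t] for t, count in tf.items()}
        norm = math.sqrt(sum(w * w for w in raw.values()))  # L2 norm
        weights.append({t: w / norm for t, w in raw.items()})
    return weights, idf

weights, idf = tfidf(docs)
# "the" is in every document, "rocket" in two, "orbit" in one
print(round(idf["the"], 3), round(idf["rocket"], 3), round(idf["orbit"], 3))
# prints: 1.0 1.288 1.693
```

The word that appears everywhere ("the") gets the lowest IDF, while the word unique to one document ("orbit") gets the highest, which is exactly the behaviour described in the bullets above.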

from sklearn.feature_extraction.text import TfidfVectorizer

# Initialize the TF-IDF vectorizer, keeping at most the 5000 most frequent terms

vectorizer = TfidfVectorizer(max_features=5000)

# Fit on the training data and transform it; reuse the fitted vocabulary for the test data

X_train_tfidf = vectorizer.fit_transform(X_train)

X_test_tfidf = vectorizer.transform(X_test)

When applying vectorization to a particular ML application, data scientists may face various challenges. To learn about these challenges and their possible solutions, refer to this blog.

Step 3: Model Building

To monitor the performance of our model during training, I split the training data into training and validation sets.

from sklearn.model_selection import train_test_split

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Dropout

# Train and validation split (10% of the training data held out for validation)

X_train_tfidf, X_val_tfidf, y_train, y_val = train_test_split(X_train_tfidf, y_train, test_size=0.1, random_state=42)

# Define the neural network model

model = Sequential([

    Dense(128, input_shape=(X_train_tfidf.shape[1],), activation='relu'),

    Dropout(0.5),

    Dense(64, activation='relu'),

    Dropout(0.5),

    Dense(1, activation='sigmoid')

])

# Compile the model

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

I’ve defined a simple neural network model using Keras’ Sequential API. The model consists of dense layers with ReLU activation functions and dropout layers for regularization.
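As a rough sanity check on the size of this architecture, the sketch below counts the trainable parameters layer by layer. It assumes the vectorizer actually fills its `max_features=5000` cap, so the input width is 5000; the `dense_params` helper is a hypothetical name used only for this illustration.

```python
def dense_params(n_inputs, n_units):
    """Weights plus one bias per unit for a fully connected layer."""
    return n_inputs * n_units + n_units

n_features = 5000            # assumption: vocabulary reaches the max_features cap
layer_sizes = [128, 64, 1]   # the three Dense layers of the model

total = 0
n_inputs = n_features
for units in layer_sizes:
    count = dense_params(n_inputs, units)
    print(f"Dense({units}): {count:,} parameters")
    total += count
    n_inputs = units  # Dropout adds no parameters and keeps the width unchanged

print(f"Total: {total:,}")
# prints:
# Dense(128): 640,128 parameters
# Dense(64): 8,256 parameters
# Dense(1): 65 parameters
# Total: 648,449
```

Almost all of the parameters sit in the first layer, which is why capping the vocabulary with `max_features` has such a direct effect on model size.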

I’ve compiled the model with binary cross-entropy loss and the Adam optimizer. Adam is a widely used optimizer and a common default choice for typical ML tasks.
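For readers who want to see what the loss measures, here is a small pure-Python sketch of the sigmoid output and binary cross-entropy. It is an illustration of the formula only, not Keras' internal implementation; the labels and logits are made-up values, and the clipping constant `eps` is an assumption chosen to avoid `log(0)`.

```python
import math

def sigmoid(z):
    """Squash a raw score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean of -[y*log(p) + (1-y)*log(1-p)] over all samples."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip to keep log() finite
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

labels = [1, 0, 1, 0]            # hypothetical true classes
logits = [2.0, -1.5, 0.1, 0.8]   # hypothetical raw outputs of the last layer
probs = [sigmoid(z) for z in logits]
loss = binary_cross_entropy(labels, probs)
print(f"loss = {loss:.4f}")
```

Confident correct predictions contribute little to the loss, while confident wrong ones (such as the last sample here) are penalized heavily.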

Step 4: Model Training

I’ve converted the sparse matrices to dense matrices before training the model. I’ve also used early stopping to prevent overfitting.
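The idea behind early stopping with a patience value can be sketched in a few lines of plain Python. This is a simplified model of the behaviour (it ignores options such as a minimum improvement threshold), and the `early_stopping_epoch` function and the validation losses are hypothetical, for illustration only.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the 0-based epoch at which training would stop, or None."""
    best = float("inf")
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None  # never ran out of patience

# Hypothetical validation losses, one per epoch
val_losses = [0.90, 0.70, 0.60, 0.65, 0.62, 0.61, 0.50]
print(early_stopping_epoch(val_losses, patience=3))  # prints: 5
```

In this made-up run the loss stops improving after epoch 2, so training halts three epochs later and never reaches the better value at the end. That is the trade-off a small patience value makes.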

from tensorflow.keras.callbacks import EarlyStopping

# Define early stopping callback: stop if val_loss has not improved for 3 epochs

early_stopping = EarlyStopping(monitor='val_loss', patience=3, verbose=1)

# Convert sparse matrices to dense matrices

X_train_tfidf = X_train_tfidf.toarray()

X_val_tfidf = X_val_tfidf.toarray()

X_test_tfidf = X_test_tfidf.toarray()

# Train the model

history = model.fit(X_train_tfidf, y_train, epochs=10, batch_size=32, validation_data=(X_val_tfidf, y_val), callbacks=[early_stopping])

Step 5: Model Evaluation

Finally, let’s evaluate the model on the test data and print the accuracy and classification report. The result obtained is:
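The evaluation code itself is not shown in this post, so the following is only a sketch of what the reported metrics mean, not the code that produced the result. It thresholds sigmoid probabilities at 0.5 and computes accuracy, precision and recall by hand; the `evaluate` helper, the labels and the probabilities are all made up for illustration.

```python
def evaluate(y_true, y_prob, threshold=0.5):
    """Turn probabilities into class predictions and score them."""
    y_pred = [1 if p > threshold else 0 for p in y_prob]
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

# Hypothetical labels and model outputs
y_true = [0, 0, 1, 1, 1, 0]
y_prob = [0.10, 0.70, 0.80, 0.40, 0.95, 0.20]
metrics = evaluate(y_true, y_prob)
print({name: round(value, 3) for name, value in metrics.items()})
# prints: {'accuracy': 0.667, 'precision': 0.667, 'recall': 0.667}
```

A classification report is essentially these per-class precision and recall figures laid out in a table, alongside their harmonic mean (the F1 score).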

In this guide, I’ve demonstrated how to build a text classification model using a neural network with Keras. I’ve covered data loading, preprocessing with TF-IDF vectorization, splitting data for validation, building and compiling a neural network, training the model with early stopping, and evaluating the model’s performance. By following these steps, you can apply similar techniques to other text classification tasks and datasets.

For the source code, click on GitHub!

Connect with me on LinkedIn: Bhuvana Venkatappa