A choice tree is a flowchart-like construction during which every inside node represents a take a look at on an attribute (function), every department represents the end result of the take a look at, and every leaf node represents a category label (in classification) or a steady worth (in regression).

Instance: Suppose there’s a candidate who has a job provide and desires to determine whether or not he ought to settle for the provide or Not. So, to unravel this downside, the choice tree begins with the basis node (Wage attribute by ASM). The basis node splits additional into the subsequent resolution node (distance from the workplace) and one leaf node based mostly on the corresponding labels. The subsequent resolution node additional will get cut up into one resolution node (Cab facility) and one leaf node. Lastly, the choice node splits into two leaf nodes (Accepted affords and Declined provide). Contemplate the beneath diagram:

- Root Node: The topmost node in a call tree. It represents the complete dataset, which is then cut up into two or extra homogeneous units.

- Inside Nodes: Nodes that symbolize the options (attributes) examined to make choices.

- Leaf Nodes (Terminal Nodes): Nodes that symbolize the ultimate resolution or classification. These nodes don’t cut up additional.

- Branches (Edges): To attach nodes.

The method of dividing a node into two or extra sub-nodes is known as splitting. The selection of attribute for splitting and the purpose the place the cut up happens is decided by particular standards:

- Gini Index: Utilized in classification duties, it measures the impurity of a node. A node with a Gini index of 0 is pure, that means all situations belong to the identical class.

Measures the probability of an incorrect classification of a brand new occasion if it was randomly categorised in accordance with the distribution of lessons within the dataset.

the place pi, is the chance of a component being categorised to a selected class.

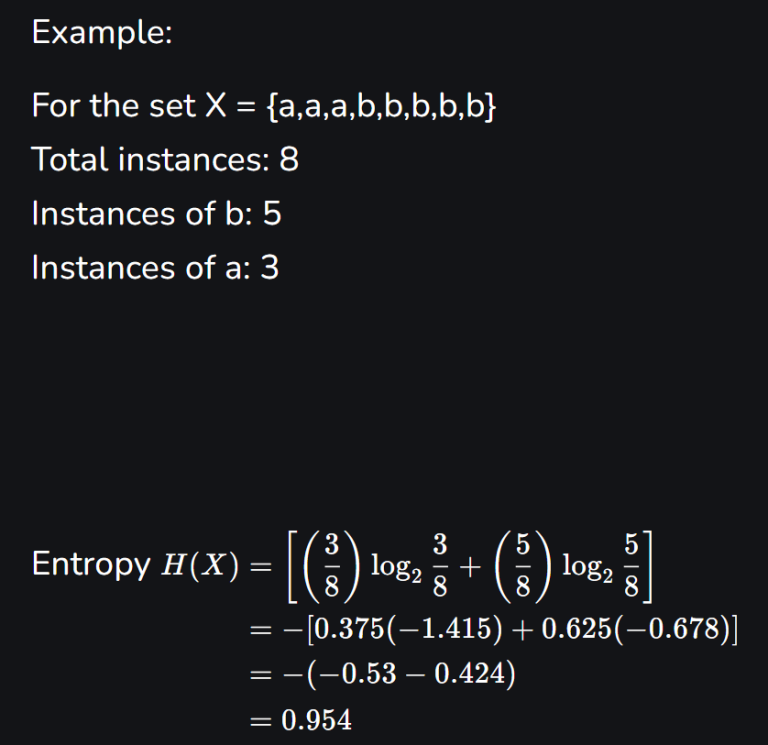

- Entropy (Data Achieve): One other criterion used for classification. It measures the quantity of data wanted to categorise a pattern.

the place pi, is the chance of a component being categorised to a selected class.

- Data Achieve is the discount in entropy:

- Variance Discount (for Regression Bushes): Measures the discount in variance after a cut up, aiming to reduce the variance inside every node.

Whether or not employed for regression or classification, a call tree gives a versatile and simply interpreted machine studying method. To create selections relying on the enter options, it constructs a construction like a tree. Leaf nodes within the tree point out the final word outcomes, whereas nodes within the tree symbolize choices or exams on the function values.

Right here’s an in depth breakdown of how the choice tree algorithm works:

- With all the info at its place to begin, the method is the basis node. With the intention to successfully divide the info into discrete lessons or values, the algorithm chooses a function along with a threshold. Relying on the job (classification or regression), the function and threshold are chosen to maximise data achieve or lower impurity.

- Relying on the end result of the function take a look at, the info is separated into subgroups. When a attribute like “Age” is used with a threshold of 30, as an example, the info is split into two subsets: information with Age lower than or equal to 30, and information with Age greater than 30.

- For each subgroup, the splitting process is repeated, leading to little one nodes. Up till a given situation is happy, this recursive course of retains going. A minimal quantity of information factors in a node, a predetermined tree depth, or the shortage of further data gained from splits past that time are examples of widespread stopping standards.

- A node turns right into a leaf node when a stopping requirement is happy. The ultimate judgment or forecast is represented by the leaf nodes. Every leaf node is classed utilizing the category label that’s commonest contained in the subset. In a regression, the goal variable’s imply or median worth inside the subset is often discovered within the leaf node.

- The tree construction that’s produced could be understood. The reasoning of the mannequin could be intuitively understood by viewing a call path from the basis to a leaf node as a algorithm.

Pruning reduces the dimensions of the choice tree by eradicating sections that present little data to categorise situations, thus stopping overfitting:

- Pre-pruning (Early Stopping): Stops the tree development earlier than it reaches a degree the place it completely classifies the coaching knowledge. Standards can embody setting a most depth, minimal variety of samples required to separate a node, or a minimal impurity lower.

Some widespread pre-pruning strategies embody:

Most Depth: It limits the utmost stage of depth in a call tree.

Minimal Samples per Leaf: Set a minimal threshold for the variety of samples in every leaf node.

Minimal Samples per Cut up: Specify the minimal variety of samples wanted to interrupt up a node.

Most Options: Prohibit the amount of options thought-about for splitting.

- Put up-pruning (Pruning): Removes branches from a completely grown tree. Strategies embody price complexity pruning, which removes branches that add little to the general predictive accuracy.

Some widespread post-pruning strategies embody:

Price-Complexity Pruning (CCP): This technique assigns a value to every subtree based totally on its accuracy and complexity, then selects the subtree with the bottom payment.

Reduced Error Pruning: Removes branches that don’t considerably have an effect on the general accuracy.

Minimal Impurity Decrease: Prunes nodes if the lower in impurity (Gini impurity or entropy) is beneath a certain threshold.

Minimal Leaf Dimension: Restrikes leaf nodes with fewer samples than a specified threshold.

The Determination Tree mannequin is utilizing the default method of scikit-learn’s DecisionTreeClassifier, which employs the Gini impurity criterion for making splits. That is evident from the parameter criterion="gini" handed to the DecisionTreeClassifier( ). constructor. Gini impurity is a measure of how usually a randomly chosen aspect from the set could be incorrectly labeled if it had been randomly labeled in accordance with the distribution of labels within the set.

from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import train_test_split

from sklearn.tree import DecisionTreeClassifier

from sklearn.tree import plot_tree

import matplotlib.pyplot as plt# Load breast most cancers dataset

X, y = load_breast_cancer(return_X_y=True)

# Separating Coaching and Testing knowledge

X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.2, random_state=42)

# Prepare resolution tree mannequin

mannequin = DecisionTreeClassifier(criterion="gini")

mannequin.match(X_train, y_train)

# Plot unique tree

plt.determine(figsize=(15, 10))

plot_tree(mannequin, crammed=True) #The crammed=True parameter is used so as to add coloration to the nodes

plt.title("Unique Determination Tree")

plt.present()

# Mannequin Accuracy earlier than pruning

accuracy_before_pruning = mannequin.rating(X_test, y_test)

print("Accuracy earlier than pruning:", accuracy_before_pruning)

The return_X_y=True parameter within the load_breast_cancer operate from sklearn.datasets is used to specify that the operate ought to return the options (X) and goal (y) as separate arrays as a substitute of a dictionary-like object.

Right here’s a short rationalization of the way it works:

from sklearn.datasets import load_breast_cancer

knowledge = load_breast_cancer()

X = knowledge.knowledge

y = knowledge.goal

from sklearn.datasets import load_breast_cancer

X, y = load_breast_cancer(return_X_y=True)

When return_X_y=True is specified, load_breast_cancer immediately returns the function matrix X and the goal vector y, which might make the code extra concise.

Let’s break down the operate requires readability:

- Loading the Dataset: The

load_breast_canceroperate masses the breast most cancers dataset, which is a built-in dataset in scikit-learn. This dataset is used for binary classification duties, the place the objective is to foretell whether or not a tumor is malignant or benign based mostly on varied options. - Returning the Information: Through the use of the

return_X_y=Trueparameter, the operate returns two separate arrays:X(options) andy(goal).

Output:

Accuracy earlier than pruning: 0.8793859649122807

Within the implementation, we pruning method is hyperparameter tuning by means of cross-validation utilizing GridSearchCV. Hyperparameter tuning entails looking for the optimum hyperparameters for a machine studying mannequin to enhance its efficiency. It doesn’t immediately prune the choice tree, but it surely helps to find the perfect mixture of hyperparameters, similar to max_depth, max_features, criterion, and splitter, which not directly controls the complexity of the choice tree and prevents overfitting. Subsequently, it’s a type of post-pruning method.

from sklearn.tree import DecisionTreeClassifier

parameter = {

'criterion' :['entropy','gini','log_loss'],

'splitter':['best','random'],

'max_depth':[1,2,3,4,5],

'max_features':['auto','sqrt','log2']

}

mannequin = DecisionTreeClassifier()

from sklearn.model_selection import GridSearchCV

cv = GridSearchCV(mannequin,param_grid = parameter,cv = 5)

cv.match(X_train,Y_train)

Visualizing

from sklearn.tree import export_graphviz

import graphviz

best_estimator = cv.best_estimator_

feature_names = optionsdot_data = export_graphviz(best_estimator, out_file=None, crammed=True, rounded=True,

feature_names=feature_names, class_names=['0', '1', '2'])

graph = graphviz.Supply(dot_data)

graph.render("decision_tree", format='png', cleanup=True)

graph

- Graphviz is an open-source graph visualization software program. It gives instruments for drawing graphs specified within the DOT language and rendering them into varied output codecs, similar to pictures (PNG, JPEG, SVG), PDF, PostScript, and many others. Graphviz helps varied sorts of graphs, together with directed and undirected graphs, bushes.

- The DOT language, brief for “graph description language,” is a straightforward text-based language used to explain graphs and networks. It’s the native language of the Graphviz software program, which is used to visualise graphs laid out in DOT format.

Right here’s a short overview of the DOT language:

- Graphs and Nodes: A DOT file usually represents a graph composed of nodes and edges. Nodes are outlined utilizing their names, and edges join pairs of nodes.

graph { A -- B;

B -- C;

C -- A;

}

2. Attributes: Nodes and edges can have attributes similar to coloration, form, measurement, label, and many others. Attributes are specified inside sq. brackets.

graph {

A [label="Node A", color=blue, shape=box];

B [label="Node B", color=red, shape=circle];

A -- B [label="Edge from A to B"];

}

3. Graph Kind: DOT helps various kinds of graphs, together with directed and undirected graphs.

digraph {

A -> B;

B -> C;

C -> A;

}

4. Graph Properties: DOT recordsdata can embody international graph properties similar to format, orientation, and total look.

graph {

format=neato;

rankdir=LR;

node [shape=ellipse];

A -- B;

B -- C;

C -- A;

}

5. Subgraphs: DOT permits nesting of graphs inside different graphs to symbolize hierarchical constructions.

graph {

subgraph cluster_A {

label="Subgraph A";

A -- B;

B -- C; }

subgraph cluster_B {

label="Subgraph B";

D -- E;

E -- F; }

A -- D;

}

out_file=None: This parameter specifies the place to avoid wasting the generated DOT file. Right here, it is set toNone, which implies the DOT knowledge is not going to be saved to a file however will likely be saved within thedot_datavariable.crammed=True: This parameter specifies whether or not to fill the choice tree nodes with colours to point the bulk class.rounded=True: This parameter specifies whether or not to attract the choice tree nodes with rounded corners.

Finest Parameters

cv.rating(X_test,Y_test)

cv.best_params_

Output:

0.9736842105263158

{'criterion': 'gini',

'max_depth': 4,

'max_features': 'sqrt',

'splitter': 'finest'}

# Price-complexity pruning (Put up-pruning)

path = mannequin.cost_complexity_pruning_path(X_train, y_train)

ccp_alphas, impurities = path.ccp_alphas, path.impurities# Prepare a collection of resolution bushes with completely different alpha values

pruned_models = []

for ccp_alpha in ccp_alphas:

pruned_model = DecisionTreeClassifier(criterion="gini", ccp_alpha=ccp_alpha)

pruned_model.match(X_train, y_train)

pruned_models.append(pruned_model)

# Discover the mannequin with the perfect accuracy on take a look at knowledge

best_accuracy = 0

best_pruned_model = None

for pruned_model in pruned_models:

accuracy = pruned_model.rating(X_test, y_test)

if accuracy > best_accuracy:

best_accuracy = accuracy

best_pruned_model = pruned_model

# Mannequin Accuracy after pruning

accuracy_after_pruning = best_pruned_model.rating(X_test, y_test)

print("Accuracy after pruning:", accuracy_after_pruning)

path = mannequin.cost_complexity_pruning_path(X_train, y_train): This line calculates the pruning path for the choice tree mannequin based mostly on the cost-complexity pruning algorithm. It takes the coaching knowledgeX_trainand labelsy_trainas inputs.

Price-complexity pruning is a way utilized in resolution tree algorithms to forestall overfitting by pruning the tree based mostly on the trade-off between tree complexity and accuracy. The algorithm iteratively prunes the choice tree by eradicating subtrees with low “cost-complexity” values, which symbolize a steadiness between the accuracy of the tree and its complexity (often measured by the variety of nodes or leaf nodes).

Right here’s how the cost-complexity pruning algorithm usually works:

- Calculate Impurity: Initially, a full resolution tree is grown utilizing the coaching knowledge. The impurity of every node within the tree is calculated utilizing a selected impurity measure, similar to Gini impurity or entropy.

- Calculate Price-Complexity: For every node within the tree, a “cost-complexity” worth is calculated. This worth represents the rise in impurity if the subtree rooted at that node is pruned. It’s calculated because the sum of the impurity of the node and a penalty time period proportional to the variety of leaf nodes within the subtree.

- Pruning Path: The algorithm constructs a pruning path by systematically pruning subtrees with the smallest cost-complexity values. That is usually achieved by iteratively eradicating the subtree with the smallest cost-complexity till a stopping situation is met, similar to reaching a desired tree measurement or a specified worth of alpha (a hyperparameter that controls the trade-off between tree complexity and accuracy).

- Choosing Alpha: The pruning path ends in a sequence of subtrees with growing ranges of pruning. The alpha values related to these subtrees symbolize the complexity parameter at every pruning step.

- Choose Optimum Tree: Lastly, the optimum pruned tree is chosen utilizing a validation dataset or cross-validation. That is usually achieved by selecting the subtree with the smallest alpha worth that achieves the perfect efficiency on the validation knowledge.

By pruning the choice tree based mostly on the cost-complexity criterion, cost-complexity pruning helps to forestall overfitting and enhance the generalization means of the mannequin. It usually results in easier and extra interpretable bushes with out sacrificing predictive accuracy.

ccp_alphas, impurities = path.ccp_alphas, path.impurities: Right here, the calculated pruning path is cut up into two arrays –ccp_alphasaccommodates the efficient alphas of the pruned subtrees, andimpuritiesaccommodates the full impurities at every step of the pruning.pruned_models = []: This initializes an empty listingpruned_modelsthe place we’ll retailer the choice tree fashions pruned with completely different alpha values.best_accuracy = 0andbest_pruned_model = None: These traces initialize variables to maintain monitor of the perfect accuracy achieved by the pruned fashions and the corresponding mannequin.for pruned_model in pruned_models:: This initiates a loop iterating by means of every pruned mannequin within the listingpruned_models.

Output:

Accuracy after pruning: 0.918859649122807

Determination Tree Pruning has an necessary function in optimizing the choice tree mannequin. It entails the removing of sure components of the tree which might doubtlessly cut back its efficiency. Right here is why resolution tree pruning is necessary:

- Prevents Overfitting: Determination bushes are susceptible to overfitting, the place the mannequin memorizes the coaching knowledge quite than studying generalizable patterns. Pruning helps stop overfitting by simplifying the tree construction, eradicating branches that seize noise or outliers within the coaching knowledge.

- Improves Generalization: By lowering the complexity of the choice tree, pruning enhances the mannequin’s means to generalize to unseen knowledge. A pruned resolution tree is extra prone to seize underlying patterns within the knowledge quite than memorizing particular situations, main to higher efficiency on new knowledge.

- Reduces Mannequin Complexity: Pruning ends in a less complicated resolution tree with fewer branches and nodes. This simplicity not solely makes the mannequin simpler to interpret but additionally reduces computational necessities throughout each coaching and inference. An easier mannequin can be much less susceptible to overfitting and extra sturdy to modifications within the knowledge.

- Enhances Interpretability: Pruning produces resolution bushes with fewer branches and nodes, that are simpler to interpret and perceive. That is notably necessary in functions the place human perception into the decision-making course of is effective, similar to in medical analysis or monetary decision-making.

- Speeds Up Coaching and Inference: Pruned resolution bushes require much less computational sources throughout each coaching and inference phases. With fewer branches and nodes, the decision-making course of turns into extra environment friendly, leading to sooner predictions with out sacrificing accuracy.

- Facilitates Mannequin Upkeep: Pruning helps keep resolution tree fashions over time by protecting them lean and related. As new knowledge turns into out there or the issue area evolves, pruned resolution bushes are simpler to replace and adapt in comparison with overly advanced, unpruned bushes.

Benefits:

- Simple to Perceive and Interpret: Determination bushes are easy to know and visualize. The visible illustration intently mirrors human decision-making processes.

- Handles Each Numerical and Categorical Information: They are often utilized to varied knowledge sorts.

- Non-Parametric: They don’t assume any underlying distribution of the info.

- Versatility: Can be utilized for each classification and regression duties.

- No Want for Function Scaling: Determination bushes don’t require normalization or scaling of the info.

- Handles Non-linear Relationships: Able to capturing non-linear relationships between options and goal variables.

Disadvantages:

- Overfitting: Bushes can change into overly advanced and mannequin the noise within the knowledge.

- Bias: They are often biased with imbalanced datasets.

- Variance: Small modifications in knowledge may end up in completely different bushes.

To mitigate some disadvantages, ensemble strategies like Random Forests and Gradient Boosting Bushes are used:

- Random Forest: Builds a number of resolution bushes (often educated with completely different components of the info) and merges them collectively to get a extra correct and steady prediction.

- Gradient Boosting: Builds bushes sequentially, the place every new tree corrects the errors of the earlier one.

CART is a call tree algorithm that can be utilized for each classification and regression duties. It really works by discovering splits that reduce the Gini impurity, a measure of impurity within the knowledge. CART makes use of Gini impurity for classification. When choosing a function to separate, it calculates the Gini impurity for every potential cut up and chooses the one with the bottom impurity.

The probability of incorrectly classifying a component chosen at random and labeled in accordance with the distribution of labels within the set is measured by the Gini impurity.

For regression duties, CART makes use of imply squared error (MSE) to judge splits. MSE measures the typical squared distinction between the expected and precise values. The cut up with the bottom MSE is chosen.

Recursively dividing the dataset in accordance with the attribute that minimizes the Gini impurity or maximizes the data achieve at every stage is finished by CART utilizing a grasping technique. It seems in any respect potential cut up factors for every attribute and selects the one which produces the bottom Gini impurity for the subsets which are generated.

Each inside node in a binary tree created by CART has precisely two little one nodes. This facilitates the splitting process and facilitates the interpretation of the resultant bushes.