In this tutorial, we will explore spectral clustering, a powerful clustering technique that leverages graph theory to identify inherent clusters within data. We’ll use the penguins dataset, which provides a set of measurements from three different species of penguins. Our goal is to group these penguins into clusters that reveal hidden patterns related to their physical characteristics.

Prerequisites

To follow this tutorial, you need:

- Python installed on your system

- Basic knowledge of Python and machine learning concepts

- Familiarity with the pandas and matplotlib libraries

Installing Required Libraries

Ensure you have the required Python libraries installed (seaborn is included because the plots below use it):

pip install numpy pandas matplotlib seaborn scikit-learn

Let’s start by loading the data into a pandas DataFrame. We’ll handle missing values as well, since they can affect the performance of spectral clustering.

import pandas as pd

# Load the data

information = pd.read_csv('penguins.csv')

# Display the first few rows of the DataFrame

print(information.head())

# Handle missing data by removing rows with NaN values, then filter out implausible flipper lengths (outliers)

information = information.dropna()

information = information[(information['flipper_length_mm'] < 1000) & (information['flipper_length_mm'] > 0)].copy()

Before we apply spectral clustering, let’s do some basic exploratory data analysis (EDA) to understand our data better.

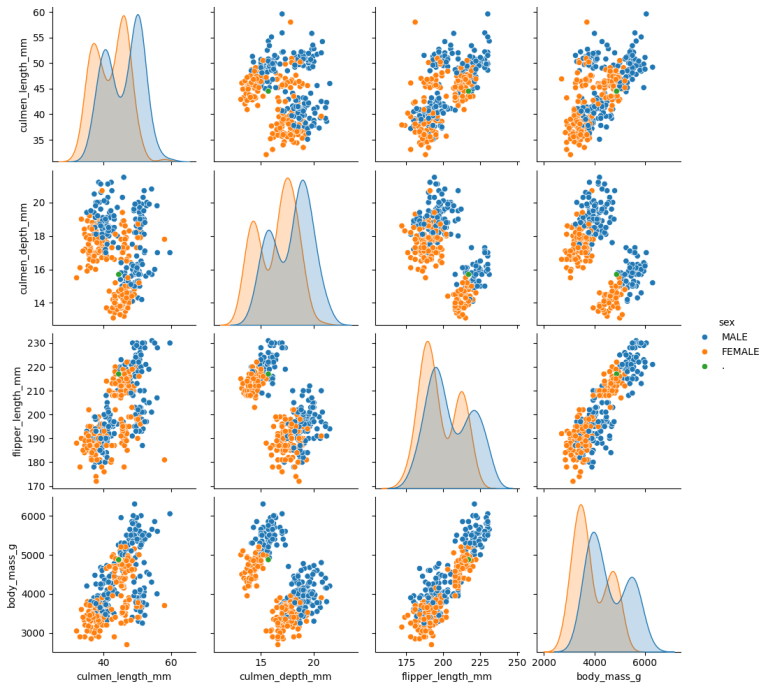

We will visualize the distributions of different features such as culmen length, culmen depth, flipper length, and body mass.

import matplotlib.pyplot as plt

import seaborn as sns

# Pairwise scatter plots of the four measurements, colored by sex

sns.pairplot(information, hue='sex', vars=['culmen_length_mm', 'culmen_depth_mm', 'flipper_length_mm', 'body_mass_g'])

plt.show()

The pair plot is a key visualization in exploratory data analysis for multivariate data like our penguins dataset. It shows the relationships between each pair of variables in a grid format, where the diagonal typically contains the distributions of individual variables (using histograms or density plots), and the off-diagonal elements are scatter plots showing the relationship between two variables.

Here’s how to interpret the pair plot we generated and use its insights:

Diagonal: Distribution of Variables

- Histograms/Density Plots: Each plot on the diagonal represents the distribution of a single variable. For example, by examining the plot for `body_mass_g`, we can determine whether the data is skewed, is roughly normally distributed, or shows potential outliers. Consistent patterns or deviations can provide clues about inherent groupings or the need for data transformation.

Off-Diagonal: Relationships Between Variables

- Scatter Plots: Each scatter plot shows the relationship between two variables. For instance, a plot of `culmen_length_mm` against `culmen_depth_mm` may show a positive correlation, indicating that as culmen length increases, culmen depth tends to increase as well. These relationships can be linear or non-linear and may include clusters of points, which suggest potential groups in the data.

- Hue (Sex): By using sex as the hue, we add another dimension to the analysis. This can reveal whether there are distinct patterns or clusters according to sex. For instance, if males and females form separate clusters in the scatter plot of `flipper_length_mm` versus `body_mass_g`, this suggests a marked difference in these measurements between sexes, which may influence how clusters form in spectral clustering.

- Feature Selection: Insights from pair plots can guide feature selection for clustering algorithms. Features that show clear groupings or distinctions in the scatter plots might be more informative for clustering. In our case, if `flipper_length_mm` and `body_mass_g` show distinct groupings when plotted against other features, they might be good candidates for clustering.

- Identifying Outliers: Outliers can disproportionately affect the performance of clustering algorithms. Observations that stand out in the pair plot might need further investigation or preprocessing (e.g., scaling or transformation) to ensure they don’t skew the clustering results.

- Understanding Feature Relationships: The relationships observed can help in understanding how features interact with each other, which is important when choosing parameters like the number of clusters or selecting the clustering algorithm. For example, if two features are highly correlated, they may carry redundant information, which could influence the decision to use one over the other or to combine them somehow before clustering.

- Verifying Assumptions: Many clustering methods have underlying assumptions (e.g., clusters having a spherical shape in k-means). The pair plot can help verify these assumptions. If the data naturally forms non-spherical clusters, algorithms like spectral clustering that can handle complex cluster shapes might be more appropriate.

By critically analyzing the pair plot and applying these insights, you can improve the setup of your clustering analysis, leading to more meaningful and robust results. This step, though seemingly simple, lays the groundwork for effective data-driven decision-making in clustering setups.
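The point about redundant, highly correlated features can also be checked numerically rather than by eye. The sketch below uses a small synthetic DataFrame (the values, the 0.8 threshold, and the name `toy` are illustrative assumptions, not taken from the penguins data) and lists feature pairs whose absolute Pearson correlation exceeds the threshold.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in data: body mass is constructed to track flipper length,
# while culmen depth is drawn independently.
rng = np.random.default_rng(0)
flipper = rng.normal(200, 14, size=200)
toy = pd.DataFrame({
    'flipper_length_mm': flipper,
    'body_mass_g': 50 * flipper - 5800 + rng.normal(0, 300, size=200),
    'culmen_depth_mm': rng.normal(17, 2, size=200),
})

# Pearson correlation between every pair of columns.
corr = toy.corr()
print(corr.round(2))

# Collect pairs above an arbitrary threshold (each pair listed once).
threshold = 0.8
cols = list(corr.columns)
pairs = [
    (a, b)
    for i, a in enumerate(cols)
    for b in cols[i + 1:]
    if abs(corr.loc[a, b]) > threshold
]
print("Highly correlated pairs:", pairs)
```

On real data, a pair flagged this way is a candidate for dropping one feature or combining the two, but the threshold is a judgment call.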

In the context of our penguin dataset, we’re interested in clustering based on physical measurements: culmen length, culmen depth, flipper length, and body mass. These features are measured on different scales; for example, body mass in grams is naturally much larger numerically than culmen length in millimeters. If we don’t address these scale differences, algorithms that rely on distance measurements (like spectral clustering) might be unduly influenced by one feature over others.

features = information[['culmen_length_mm', 'culmen_depth_mm', 'flipper_length_mm', 'body_mass_g']]

Here, we’re creating a new DataFrame, `features`, that includes only the columns we want to use for clustering. This excludes non-numeric or irrelevant data, such as the sex of the penguin, which we’re not using for this particular clustering.

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

scaled_features = scaler.fit_transform(features)

- Importing StandardScaler: The `StandardScaler` from Scikit-Learn standardizes features by removing the mean and scaling to unit variance. This process is often called Z-score normalization.

- Creating an Instance of StandardScaler: We create an instance of `StandardScaler`. This object is configured to scale data but hasn’t processed any data yet.

- Fitting and Transforming: The `fit_transform()` method computes the mean and standard deviation of each feature in the input DataFrame, and then scales the features. Essentially, for each feature, the method subtracts the mean of the feature and divides the result by the standard deviation of the feature:

$$z = \frac{x - \mu}{\sigma}$$

Here, x is a feature value, μ is the mean of the feature, and σ is the standard deviation of the feature.

- Result: The output `scaled_features` is an array where each feature now has a mean of zero and a standard deviation of one. This standardization ensures that each feature contributes equally to the distance calculations, allowing the spectral clustering algorithm to perform more effectively.
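To make the standardization step concrete, here is a minimal sketch that computes the z-score by hand with NumPy and compares it with `StandardScaler`. The four rows of measurements are made-up values for illustration only.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical toy measurements: columns are a length (mm) and a mass (g).
X = np.array([
    [39.1, 3750.0],
    [46.5, 4200.0],
    [50.0, 5700.0],
    [36.7, 3450.0],
])

# Manual z-score: subtract each column's mean, divide by its standard deviation.
# StandardScaler uses the population standard deviation (ddof=0),
# which is also NumPy's default for std().
z_manual = (X - X.mean(axis=0)) / X.std(axis=0)

z_sklearn = StandardScaler().fit_transform(X)

print("Manual and StandardScaler agree:", np.allclose(z_manual, z_sklearn))
print("Column means:", z_sklearn.mean(axis=0).round(6))
print("Column standard deviations:", z_sklearn.std(axis=0).round(6))
```

After scaling, both columns sit on the same footing even though the raw mass values are roughly a hundred times larger than the raw lengths.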

Why Use StandardScaler?

We use StandardScaler rather than other scaling methods (like MinMaxScaler or MaxAbsScaler) because z-score normalization is generally less sensitive to outliers than scalers whose range is set entirely by the most extreme values, although the mean and standard deviation are still affected by them. It ensures that the feature distributions have a mean of zero and a variance of one, making it a common choice for algorithms that assume all features are centered around zero and have the same variance.

By performing these steps, we put our data in a suitable format for spectral clustering, where the similarity between data points (based on their features) plays a crucial role in forming clusters.

Now, we’re ready to apply spectral clustering. We’ll use Scikit-Learn’s implementation.

from sklearn.cluster import SpectralClustering

# Specify the number of clusters

n_clusters = 3

# Build the similarity graph from each point's nearest neighbors

clustering = SpectralClustering(n_clusters=n_clusters, affinity='nearest_neighbors', random_state=42)

labels = clustering.fit_predict(scaled_features)

# Add the cluster labels to the DataFrame

information['cluster'] = labels

How Spectral Clustering Works

1. Constructing a Similarity Graph:

- The first step in spectral clustering is to transform the data into a graph. Each data point is treated as a node in the graph. Edges between nodes are then created based on the similarity between data points; this can be calculated using methods such as the Gaussian (RBF) kernel, where points closer in space are deemed more similar, or a nearest-neighbors graph, as selected by affinity='nearest_neighbors' in the code above.

2. Creating the Laplacian Matrix:

- Once we have a graph, spectral clustering focuses on its Laplacian matrix, which provides a way to represent the graph structure algebraically. The Laplacian matrix is derived by subtracting the adjacency matrix of the graph (which represents connections between nodes) from the degree matrix (a diagonal matrix that holds the number of connections each node has).

3. Eigenvalue Decomposition:

- The core of spectral clustering involves computing the eigenvalues and eigenvectors of the Laplacian matrix. The eigenvectors help identify the intrinsic clustering structure of the data. Specifically, the eigenvectors corresponding to the smallest eigenvalues carry the most information about the most significant splits between clusters; the eigenvector of the smallest non-zero eigenvalue is known as the Fiedler vector.

4. Using Eigenvectors to Form Clusters:

- The next step is to use the selected eigenvectors to map the original data into a lower-dimensional space where traditional clustering methods (like k-means) can be more effective. The transformed data points are easier to cluster because the spectral transformation tends to emphasize the grouping structure, making clusters more distinct.

5. Applying a Clustering Algorithm:

- Finally, a standard clustering algorithm, such as k-means, is applied to the transformed data to identify distinct groups. The output is a clustering of the original data, informed by the spectral properties of the Laplacian.
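The five steps above can be sketched from scratch in a few lines of NumPy. This is a teaching sketch under stated assumptions, not Scikit-Learn's implementation (which differs in details such as Laplacian normalization): it uses synthetic, well-separated blobs in place of the penguin features, an RBF kernel with an untuned `gamma`, and the unnormalized Laplacian described in step 2.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import adjusted_rand_score

# Synthetic, well-separated data standing in for the scaled features.
X, y_true = make_blobs(
    n_samples=150,
    centers=[[-6, -6], [0, 6], [6, -6]],
    cluster_std=0.8,
    random_state=42,
)
k = 3

# 1. Similarity graph: Gaussian (RBF) kernel on pairwise squared distances.
sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
gamma = 0.5  # assumed kernel width, not tuned
W = np.exp(-gamma * sq_dists)
np.fill_diagonal(W, 0.0)  # no self-loops

# 2. Unnormalized graph Laplacian: L = D - W, with D the diagonal degree matrix.
D = np.diag(W.sum(axis=1))
L = D - W

# 3. Eigendecomposition; eigh returns eigenvalues in ascending order
#    for symmetric matrices.
eigenvalues, eigenvectors = np.linalg.eigh(L)

# 4. Spectral embedding: the k eigenvectors with the smallest eigenvalues.
embedding = eigenvectors[:, :k]

# 5. k-means on the embedded points.
labels = KMeans(n_clusters=k, n_init=10, random_state=42).fit_predict(embedding)

print("Cluster sizes:", np.bincount(labels))
print("Agreement with the generating blobs (ARI):", adjusted_rand_score(y_true, labels))
```

Because the blobs are far apart, the similarity graph is close to three disconnected pieces, and the first three eigenvectors are nearly constant within each piece, which is what makes the final k-means step easy.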

Benefits of Spectral Clustering

Spectral clustering is particularly powerful for complex clustering problems where the clusters have arbitrary shapes, such as nested circles or intertwined spirals. Unlike k-means, it doesn’t assume that clusters have any particular shape or size, which makes it a flexible choice for many real-world scenarios.
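The nested-circles case is easy to try. The sketch below compares k-means and spectral clustering on Scikit-Learn's synthetic `make_circles` data, scoring each against the known ring membership with the adjusted Rand index (ARI). The dataset parameters and `n_neighbors=10` are illustrative choices, not tuned values.

```python
from sklearn.cluster import KMeans, SpectralClustering
from sklearn.datasets import make_circles
from sklearn.metrics import adjusted_rand_score

# Two concentric rings: a shape that centroid-based methods cannot separate.
X, y_true = make_circles(n_samples=400, factor=0.4, noise=0.04, random_state=42)

kmeans_labels = KMeans(n_clusters=2, n_init=10, random_state=42).fit_predict(X)

spectral_labels = SpectralClustering(
    n_clusters=2,
    affinity='nearest_neighbors',
    n_neighbors=10,
    random_state=42,
).fit_predict(X)

# ARI is 1.0 for a perfect match with the true rings and near 0 for a random one.
kmeans_ari = adjusted_rand_score(y_true, kmeans_labels)
spectral_ari = adjusted_rand_score(y_true, spectral_labels)
print(f"k-means ARI:  {kmeans_ari:.2f}")
print(f"spectral ARI: {spectral_ari:.2f}")
```

Scikit-Learn may warn that the neighbor graph is not fully connected; in this example that simply reflects the two rings forming separate components of the graph.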

This technique shines in its ability to capture the structure of data in terms of connectivity and relationships, making it well suited to applications ranging from image segmentation to social network analysis.

Let’s visualize the clusters formed.

# Color points by cluster and vary the marker style by sex

sns.scatterplot(data=information, x='flipper_length_mm', y='body_mass_g', hue='cluster', palette='viridis', style='sex')

plt.title('Penguin Clustering by Flipper Length and Body Mass')

plt.show()

This scatter plot shows how penguins are grouped based on their flipper length and body mass, with a different marker style for each sex.

To evaluate the quality of the clusters, we can use the silhouette score.

from sklearn.metrics import silhouette_score

# Compute the mean silhouette over all samples in the scaled feature space

score = silhouette_score(scaled_features, labels)

print(f"Silhouette Score: {score:.2f}")

The silhouette score ranges from -1 to 1; a higher score indicates better-defined clusters.
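One common way to use the silhouette score is to compare several candidate cluster counts. The sketch below does this on synthetic blobs rather than the penguin features (the data, the range of `k`, and the name `scores` are assumptions for illustration), keeping the same `nearest_neighbors` affinity used earlier.

```python
from sklearn.cluster import SpectralClustering
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic data with three well-separated groups.
X, _ = make_blobs(
    n_samples=300,
    centers=[[-6, -6], [0, 6], [6, -6]],
    cluster_std=1.0,
    random_state=42,
)

# Fit spectral clustering for each candidate k and record its silhouette score.
scores = {}
for k in range(2, 6):
    candidate_labels = SpectralClustering(
        n_clusters=k, affinity='nearest_neighbors', random_state=42
    ).fit_predict(X)
    scores[k] = silhouette_score(X, candidate_labels)
    print(f"k={k}: silhouette = {scores[k]:.3f}")

best_k = max(scores, key=scores.get)
print("Best k by silhouette:", best_k)
```

The silhouette score favors compact, convex groups, so on data with non-convex clusters it can rank a visually correct spectral clustering below a worse one; treat it as one signal rather than a final verdict.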

In this tutorial, we applied spectral clustering to the penguins dataset to uncover natural groupings based on physical characteristics. We handled real-world data issues like missing values, performed EDA to gain insights, and visualized the results to interpret our clusters.

This approach demonstrates how spectral clustering can be used effectively on biological data, providing a valuable tool for ecological and evolutionary studies.

Feel free to experiment with different parameters and features to see how they affect the clustering outcome!