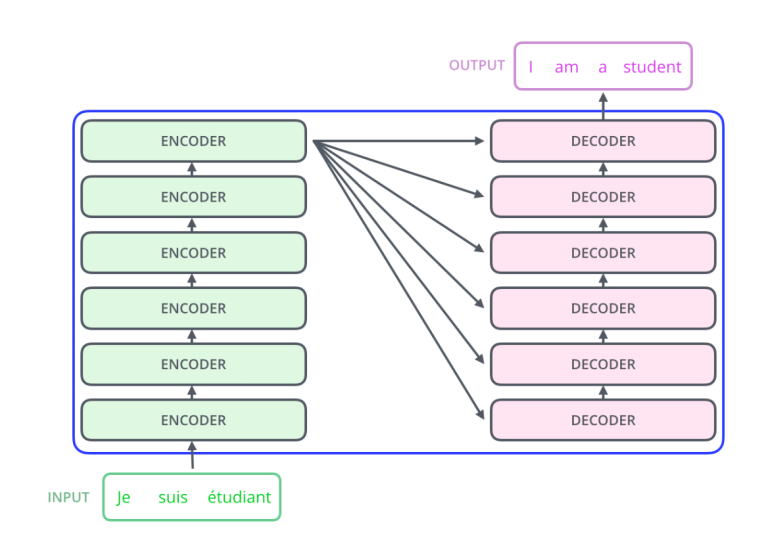

This architecture was proposed in the “Attention Is All You Need” paper [1]. It changes the encoder-decoder (i.e. seq-to-seq) model in several ways. The first change is the use of multiple stacked encoders and decoders. Only the first encoder receives the actual input, i.e. the embeddings, and each of the other encoders takes the output of the previous encoder as its input. The output of the last encoder is fed to all of the decoders. The overall architecture is shown in Figure 1.
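The wiring described above can be sketched as follows. This is a minimal illustration, not the paper's code. The layer functions are hypothetical placeholders (any callables with these signatures), and plain lists stand in for vectors:

```python
from typing import Callable, List

Vector = List[float]
Sequence = List[Vector]
# Hypothetical layer signatures, used only for this sketch.
EncoderLayer = Callable[[Sequence], Sequence]
DecoderLayer = Callable[[Sequence, Sequence], Sequence]


def encode(embeddings: Sequence, encoders: List[EncoderLayer]) -> Sequence:
    h = embeddings  # only the first encoder sees the embeddings
    for layer in encoders:
        h = layer(h)  # each later encoder consumes the previous encoder's output
    return h  # output of the last encoder


def decode(targets: Sequence, memory: Sequence,
           decoders: List[DecoderLayer]) -> Sequence:
    h = targets
    for layer in decoders:
        # Every decoder receives the same final encoder output ("memory").
        h = layer(h, memory)
    return h
```

Whatever the decoders compute, the key point is that `memory` is produced once by the encoder stack and then shared by every decoder layer.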

Another change in this model is the self-attention network inside the encoders. A self-attention network captures how relevant the input features are to one another. For example, in the sentence “The animal didn't cross the road because it was too tired”, the word “it” is more closely related to “animal” than to any other word in the sentence. In this respect it resembles seq-to-seq RNNs, where later words carry information about earlier words through the hidden state. Figure 2 shows how strongly each word relates to “it” after training.

A more precise explanation of self-attention networks requires defining three terms: “key”, “value” and “query”. For each input x_i, its query q_i is multiplied (dot product) with the key k_j of every input x_j. The resulting scores are divided by a constant √d_k, where d_k is the dimension of the key vectors, and a softmax function is applied. Each value v_j is then multiplied by its softmax score, and the weighted values are summed to give the output for x_i. This output is passed to the feed-forward network above it. The self-attention step introduces dependencies between positions. The feed-forward network, by contrast, is applied to each position independently, so that part can be parallelized. Because of the softmax, the less related inputs contribute less, while the more related ones are amplified (Figure 3).
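The following minimal pure-Python sketch computes the self-attention output for a single input x_i. It assumes the query q_i and all keys k_j and values v_j have already been computed. The helper names `dot`, `softmax` and `attend` are hypothetical, and plain lists stand in for vectors:

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max for numerical stability; the result is unchanged.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q_i: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Self-attention output for the input whose query is q_i."""
    d_k = len(q_i)  # dimension of the query/key vectors
    # Score q_i against every key k_j, scaled by sqrt(d_k).
    scores = [dot(q_i, k_j) / math.sqrt(d_k) for k_j in keys]
    weights = softmax(scores)
    # Weight each value v_j by its softmax score, then sum the weighted values.
    return [sum(w * v_j[d] for w, v_j in zip(weights, values))
            for d in range(len(values[0]))]
```

If all keys are identical, every weight is equal and the output is the plain average of the values. For example, `attend([1.0, 1.0], [[1.0, 1.0], [1.0, 1.0]], [[2.0], [4.0]])` returns `[3.0]`.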

The keys, queries and values are produced by weight matrices W_k, W_q and W_v. Each is the product of the corresponding weight matrix with the current input. If we call the output of the self-attention network z, the matrix calculation can be formulated as in Equation 1.
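For reference, here is the matrix form of this calculation as it appears in the paper [1]. The inputs are stacked as the rows of a matrix X, and the outputs z_i are stacked as the rows of Z:

$$
Q = X W_q, \qquad K = X W_k, \qquad V = X W_v
$$

$$
Z = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
$$

Row i of the softmax term holds the weights of query q_i against every key k_j. Multiplying that row by V therefore gives the weighted sum of the values described in the previous paragraph.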

The “Attention Is All You Need” paper [1] further refines the self-attention network into multi-head attention to improve performance, but that is out of scope here. One point remains before moving on to the decoder architecture. An Add & Normalize step, a residual connection followed by layer normalization, is applied after each sub-layer (self-attention and FFNN), as shown in Figure 4.
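The Add & Normalize step computes LayerNorm(x + Sublayer(x)). The sketch below applies it to the vector x at a single position, with `sublayer` standing in for the sub-layer's output at that position. The function names are hypothetical. The learned gain and bias of layer normalization are omitted for brevity, which is a simplification:

```python
import math
from typing import Callable, List

Vector = List[float]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    # Normalize to zero mean and (approximately) unit variance.
    # Learned gain and bias are omitted in this sketch.
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def add_and_norm(x: Vector, sublayer: Callable[[Vector], Vector]) -> Vector:
    y = sublayer(x)
    # "Add": residual connection around the sub-layer.
    residual = [a + b for a, b in zip(x, y)]
    # "Normalize": layer normalization of the sum.
    return layer_norm(residual)
```

The residual connection lets the input flow past each sub-layer unchanged. The normalization keeps the scale of the activations stable from one layer to the next.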

The encoder stack outputs a set of keys and values. These are fed into the decoder stack, in which each decoder consists of a self-attention network, an encoder-decoder attention network and a feed-forward network (FFNN). The decoder's self-attention layer may only attend to earlier positions in the output sequence. This is achieved by masking future positions, i.e. setting their scores to -inf, before the softmax step of the self-attention calculation.
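The sketch below shows how masking works. Here `scores[i][j]` is assumed to be the scaled score of query position i against key position j, and the function names are hypothetical. Scores for future positions (j > i) are replaced by -inf, so the softmax assigns them a weight of exactly zero:

```python
import math
from typing import List

Matrix = List[List[float]]


def causal_mask(scores: Matrix) -> Matrix:
    # Keep scores for current and earlier positions; set future ones to -inf.
    n = len(scores)
    return [[scores[i][j] if j <= i else -math.inf for j in range(n)]
            for i in range(n)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # finite, since position i itself is never masked
    exps = [math.exp(s - m) for s in row]  # exp(-inf) == 0.0
    total = sum(exps)
    return [e / total for e in exps]


# With equal raw scores, each position spreads its attention evenly
# over itself and the positions before it.
scores = [[0.0] * 3 for _ in range(3)]
weights = [softmax(row) for row in causal_mask(scores)]
```

Here `weights[0]` is `[1.0, 0.0, 0.0]` and `weights[1]` is `[0.5, 0.5, 0.0]`. In `weights[2]`, all three positions get 1/3.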

The overall architecture is given in Figure 5.