Constraining a model to make it simpler and reduce the risk of overfitting is called regularization. For example, a linear model has two parameters, θ₀ and θ₁. This gives the learning algorithm two degrees of freedom to adapt the model to the training data: it can tweak both the height (θ₀) and the slope (θ₁) of the line. If we forced θ₁ = 0, the algorithm would have only one degree of freedom and would have a much harder time fitting the data.

Similarly, a simple way to regularize a polynomial model is to reduce its polynomial degree.
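As a minimal NumPy sketch, assuming some synthetic, roughly linear data: a degree-d polynomial has d + 1 free parameters, so lowering d removes degrees of freedom. The helper `train_mse` and the data are hypothetical, for illustration only.

```python
import numpy as np

rng = np.random.default_rng(42)
m = 30
x = rng.uniform(-1, 1, m)
y = 1 + 0.5 * x + rng.normal(0, 0.2, m)   # hypothetical linear data plus noise

def train_mse(degree):
    """Fit a polynomial of the given degree and return its training MSE."""
    coeffs = np.polyfit(x, y, degree)      # degree + 1 free parameters
    return np.mean((np.polyval(coeffs, x) - y) ** 2)

mse_low = train_mse(1)    # 2 parameters: height and slope
mse_high = train_mse(9)   # 10 parameters: can bend to follow the noise
print(f"degree 1: {mse_low:.4f}, degree 9: {mse_high:.4f}")
```

The degree-9 model always fits the training set at least as well, because it can represent every straight line; that extra flexibility is exactly what makes it prone to overfitting.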

For a linear model, regularization is typically achieved by constraining the weights of the model. We will now look at Ridge Regression, Lasso Regression, and Elastic Net, which implement three different ways to constrain the weights.

Ridge Regression is a regularized version of Linear Regression: a regularization term equal to $\frac{\alpha}{2}\sum_{i=1}^{n}\theta_i^2$ is added to the cost function. This forces the learning algorithm to not only fit the data but also keep the model weights as small as possible. Note that the regularization term should only be added to the cost function during training. Once the model is trained, you should evaluate the model's performance using the unregularized performance measure.
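As a sketch, assuming the (α/2)Σθᵢ² scaling (which matches the ½(∥ w ∥₂)² form discussed below) and a design matrix `X_b` whose first column is all 1s for the bias term, the training cost can be computed like this. The function name `ridge_cost` and the toy data are hypothetical.

```python
import numpy as np

def ridge_cost(theta, X_b, y, alpha):
    """MSE plus (alpha / 2) * sum of squared weights, skipping the bias theta[0]."""
    mse = np.mean((X_b @ theta - y) ** 2)
    penalty = 0.5 * alpha * np.sum(theta[1:] ** 2)  # the sum starts at i = 1
    return mse + penalty

# Tiny hypothetical dataset: first column of X_b is x0 = 1 for the bias term
X_b = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
y = np.array([1.0, 2.0, 3.0])
theta = np.array([1.0, 1.0])  # this line fits the data exactly, so the MSE is 0

print(ridge_cost(theta, X_b, y, alpha=0.0))  # 0.0
print(ridge_cost(theta, X_b, y, alpha=2.0))  # 0 + 0.5 * 2 * 1**2 = 1.0
```

For evaluation you would report only the `mse` part, not the penalized value.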

Apart from regularization, another reason why the cost function used for training and the performance measure used for testing may differ is that a good training cost function should have optimization-friendly derivatives, while the performance measure used for testing should be as close as possible to the final objective. A good example of this is a classifier trained using a cost function such as the log loss but evaluated using precision/recall.
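A small illustration of that split, assuming hypothetical labels and predicted probabilities from some already trained classifier: the log loss is smooth in the probabilities, while precision and recall are computed on thresholded 0/1 predictions (the 0.5 threshold is an assumption).

```python
import numpy as np
from sklearn.metrics import log_loss, precision_score, recall_score

# Hypothetical labels and predicted probabilities of the positive class
y_true = np.array([0, 0, 1, 1, 1])
y_proba = np.array([0.1, 0.6, 0.7, 0.4, 0.9])

# Training-friendly: the log loss varies smoothly with the probabilities
print(log_loss(y_true, y_proba))

# Evaluation: precision/recall use hard predictions, so they are piecewise
# constant in the model parameters and give no useful gradient
y_pred = (y_proba >= 0.5).astype(int)   # [0, 1, 1, 0, 1]
print(precision_score(y_true, y_pred))  # 2 of 3 positive predictions are correct
print(recall_score(y_true, y_pred))     # 2 of 3 actual positives are found
```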

The hyperparameter α controls how much you want to regularize the model. If α = 0, then Ridge Regression is just Linear Regression. If α is very large, then all weights end up very close to zero and the result is a flat line going through the data's mean.
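Both extremes can be checked with Scikit-Learn on hypothetical noisy linear data: with α = 0 the slope should match plain `LinearRegression`, and with a huge α (1e8 here is an arbitrary choice) the slope should collapse toward 0 while the intercept approaches the mean of y.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, Ridge

rng = np.random.default_rng(0)
X = rng.uniform(0, 3, (20, 1))
y = 1 + 0.5 * X[:, 0] + rng.normal(0, 0.5, 20)  # hypothetical noisy linear data

lin = LinearRegression().fit(X, y)
ridge_zero = Ridge(alpha=0.0).fit(X, y)  # alpha = 0: plain Linear Regression
ridge_huge = Ridge(alpha=1e8).fit(X, y)  # huge alpha: weights squeezed toward 0

print(lin.coef_, ridge_zero.coef_)                         # (nearly) identical slopes
print(ridge_huge.coef_, ridge_huge.intercept_, y.mean())  # slope ~0, intercept ~mean(y)
```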

Note that the sum starts at i = 1, not 0, which means that the bias term θ₀ is not regularized. To understand why, we first need to look at norms.

Norms

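The cost function we have been minimizing so far is the RMSE. (In practice, it is simpler to minimize the MSE, which is why the MSE is used above instead of the RMSE. Because the square root is monotonically increasing, the parameters that minimize the MSE also minimize the RMSE.)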

We can also use other performance measures. For example, if there are many outliers, you may consider using the Mean Absolute Error (MAE). RMSE and MAE are both ways to measure the distance between two vectors:

- The vector of predictions

- The vector of target values

Various distance measures, or norms, are possible:

- RMSE corresponds to the Euclidean norm: it is the notion of distance you are familiar with. It is also called the ℓ₂ norm, noted ∥ · ∥₂.

- MAE corresponds to the ℓ₁ norm, noted ∥ · ∥₁. It is sometimes called the Manhattan norm because it measures the distance between two points in a city if you can only travel along orthogonal city blocks.

- More generally, the ℓₖ norm of a vector v containing n elements is defined as:

$$\lVert \mathbf{v} \rVert_k = \left( |v_1|^k + |v_2|^k + \cdots + |v_n|^k \right)^{1/k}$$
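The formula above translates almost directly into NumPy. The helper name `lk_norm` is hypothetical and only meant for k ≥ 1; `np.linalg.norm` with its `ord` argument gives the same values.

```python
import numpy as np

def lk_norm(v, k):
    """The l_k norm: (sum of |v_i|**k) ** (1/k), intended for k >= 1."""
    v = np.asarray(v, dtype=float)
    return np.sum(np.abs(v) ** k) ** (1.0 / k)

v = np.array([3.0, -4.0])
print(lk_norm(v, 1))   # 7.0  (Manhattan: |3| + |-4|)
print(lk_norm(v, 2))   # 5.0  (Euclidean: sqrt(9 + 16))

# NumPy's built-in norm gives the same values via the `ord` argument
print(np.linalg.norm(v, ord=1), np.linalg.norm(v, ord=2))  # 7.0 5.0
```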

ℓ₀ gives the number of nonzero elements in the vector, and ℓ∞ gives the maximum absolute value in the vector. The higher the norm index, the more it focuses on large values and neglects small ones. This is why the RMSE is more sensitive to outliers than the MAE. But when outliers are exponentially rare (like in a bell-shaped curve), the RMSE performs very well and is generally preferred.
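A quick NumPy check of these points, using a hypothetical error vector with a single outlier: RMSE is the ℓ₂ norm of the errors divided by √m, and MAE is the ℓ₁ norm divided by m. Without the outlier both would equal 1; with it, the RMSE grows much more than the MAE.

```python
import numpy as np

v = np.array([0.0, 3.0, -4.0])
print(np.linalg.norm(v, ord=0))       # 2.0: number of nonzero elements
print(np.linalg.norm(v, ord=np.inf))  # 4.0: largest absolute value

# RMSE and MAE are scaled l2 and l1 norms of the error vector
errors = np.array([1.0, -1.0, 1.0, -1.0, 10.0])  # hypothetical errors, one outlier
m = len(errors)
rmse = np.linalg.norm(errors, ord=2) / np.sqrt(m)  # sqrt(104 / 5), about 4.56
mae = np.linalg.norm(errors, ord=1) / m            # 14 / 5 = 2.8
print(rmse, mae)
```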

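Coming back to the Ridge Regression cost function:

$$J(\boldsymbol{\theta}) = \mathrm{MSE}(\boldsymbol{\theta}) + \frac{\alpha}{2}\sum_{i=1}^{n}\theta_i^2$$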

If we define w as the vector of feature weights (θ₁ to θₙ), then the regularization term is simply equal to α½(∥ w ∥₂)², where ∥ w ∥₂ represents the ℓ₂ norm of the weight vector. Now let's see why the bias term is excluded:

- The bias term θ₀ represents the intercept of the regression line or hyperplane. It shifts the entire prediction function up or down without changing its slope.

- Regularizing θ₀ would mean penalizing the intercept, which can lead to biased estimates, especially when the data is not centered around the origin. In other words, penalizing θ₀ could pull the fitted line or plane away from the true center of the data, which is undesirable.

- The goal of regularization is to prevent overfitting by shrinking the coefficients of the input features (i.e., θ₁, θ₂, …, θₙ). This keeps the model from becoming too complex and fitting the noise in the data.
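Scikit-Learn's `Ridge` also leaves the intercept unpenalized when `fit_intercept=True` (the default). A simple way to see this, on hypothetical data, is to shift every target by a constant: if θ₀ is free, only the intercept should move while the penalized slope stays put.

```python
import numpy as np
from sklearn.linear_model import Ridge

rng = np.random.default_rng(1)
X = rng.uniform(0, 3, (30, 1))
y = 2 * X[:, 0] + rng.normal(0, 0.3, 30)  # hypothetical data

ridge = Ridge(alpha=10.0).fit(X, y)
ridge_shifted = Ridge(alpha=10.0).fit(X, y + 100)  # same data moved up by 100

# Only theta_0 absorbs the shift; the penalized slope is unchanged
print(ridge.coef_, ridge_shifted.coef_)
print(ridge_shifted.intercept_ - ridge.intercept_)  # about 100
```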

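The cost function above is the regularized version of the Linear Regression cost function. To train it with Gradient Descent, just add αw to the MSE gradient vector; the component for θ₀ gets no extra term, since the bias is not regularized.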
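A minimal Batch Gradient Descent sketch of this, assuming the MSE + (α/2)∥ w ∥₂² scaling (whose penalty gradient is exactly αw), hypothetical data, and an arbitrarily chosen learning rate. For comparison it solves the same cost in closed form; the `m * alpha / 2` factor comes from that particular scaling of α.

```python
import numpy as np

rng = np.random.default_rng(2)
m = 50
X = rng.uniform(0, 2, (m, 1))
y = 4 + 3 * X[:, 0] + rng.normal(0, 0.5, m)  # hypothetical data
X_b = np.c_[np.ones((m, 1)), X]              # add x0 = 1 for the bias term

alpha = 0.5
eta = 0.1            # learning rate (assumed; small enough to converge here)
theta = np.zeros(2)

for _ in range(5000):
    mse_grad = (2 / m) * X_b.T @ (X_b @ theta - y)  # gradient of the MSE
    ridge_grad = alpha * np.r_[0.0, theta[1:]]      # alpha * w, with 0 for theta_0
    theta -= eta * (mse_grad + ridge_grad)

# Closed-form minimizer of the same cost, MSE + (alpha / 2) * ||w||^2
A = np.diag([0.0, 1.0])
theta_exact = np.linalg.solve(X_b.T @ X_b + (m * alpha / 2) * A, X_b.T @ y)
print(theta, theta_exact)
```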

In practical applications, θ₀ is often left unregularized because this lets the model freely adjust the baseline level of its predictions to better fit the data without any penalty. Regularizing only θ₁ through θₙ focuses on controlling the complexity of the model's response to the input features, which is the main goal of regularization.

It is important to scale the data (e.g., using a StandardScaler) before performing Ridge Regression, as it is sensitive to the scale of the input features. This is true of most regularized models.
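One way to see this sensitivity, on hypothetical data: express one feature in different units (multiplied by 1,000 here) and refit. Plain `Ridge` changes its predictions because the penalty now weighs that feature differently, while a `StandardScaler` + `Ridge` pipeline gives the same predictions either way.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(3)
X = rng.normal(size=(40, 2))
y = X @ np.array([1.0, 2.0]) + rng.normal(0, 0.1, 40)  # hypothetical data
X_rescaled = X * np.array([1.0, 1000.0])  # same data, 2nd feature in other units

# Without scaling, the penalty treats the two versions differently
raw = Ridge(alpha=1.0).fit(X, y).predict(X)
raw_rescaled = Ridge(alpha=1.0).fit(X_rescaled, y).predict(X_rescaled)

# With StandardScaler in front, both versions give the same predictions
scaled = make_pipeline(StandardScaler(), Ridge(alpha=1.0)).fit(X, y).predict(X)
scaled_rescaled = (make_pipeline(StandardScaler(), Ridge(alpha=1.0))
                   .fit(X_rescaled, y).predict(X_rescaled))

print(np.abs(raw - raw_rescaled).max())        # clearly above 0
print(np.abs(scaled - scaled_rescaled).max())  # ~0 (floating-point noise)
```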

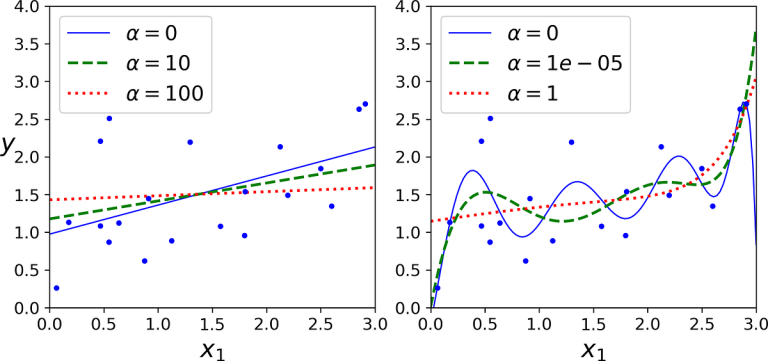

The figure above shows several Ridge models trained on some linear data using different α values.

On the left, plain Ridge models are used, leading to linear predictions. On the right, the data is first expanded using PolynomialFeatures(degree=10), then it is scaled using a StandardScaler, and finally the Ridge models are applied to the resulting features: this is Polynomial Regression with Ridge regularization. Note how increasing α leads to flatter (i.e., less extreme, more reasonable) predictions; this reduces the model's variance but increases its bias.
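A sketch of the right-hand setup, assuming randomly generated linear data and an arbitrary grid of α values (neither is the figure's exact data). Instead of plotting, it prints the ℓ₂ norm of the learned weights, which shrinks as α grows.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

rng = np.random.default_rng(4)
m = 20
X = 3 * rng.random((m, 1))
y = 1 + 0.5 * X[:, 0] + rng.normal(0, 0.5, m)  # hypothetical linear data

weight_norms = []
for alpha in (1e-5, 1.0, 100.0):  # assumed grid of alpha values
    model = make_pipeline(
        PolynomialFeatures(degree=10, include_bias=False),
        StandardScaler(),
        Ridge(alpha=alpha),
    )
    model.fit(X, y)
    w = model[-1].coef_  # weights of the final Ridge step
    weight_norms.append(np.linalg.norm(w))
    print(f"alpha={alpha:g}: ||w||_2 = {weight_norms[-1]:.3f}")
```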

As with Linear Regression, we can perform Ridge Regression either by computing a closed-form equation or by performing Gradient Descent. The pros and cons are the same.

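The equation below shows the closed-form solution:

$$\hat{\boldsymbol{\theta}} = \left(\mathbf{X}^\top \mathbf{X} + \alpha \mathbf{A}\right)^{-1} \mathbf{X}^\top \mathbf{y}$$

Here A is the (n + 1) × (n + 1) identity matrix, except with a 0 in the top-left cell, corresponding to the bias term.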
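A NumPy sketch of the closed-form solution θ̂ = (XᵀX + αA)⁻¹Xᵀy, with A as described above, on hypothetical data. It uses `np.linalg.solve` rather than an explicit inverse. Scikit-Learn's `Ridge` minimizes ∥y − Xw∥₂² + α∥w∥₂² with an unpenalized intercept, which is the objective this equation solves, so the two results should agree.

```python
import numpy as np
from sklearn.linear_model import Ridge

rng = np.random.default_rng(5)
m = 20
X = 3 * rng.random((m, 1))
y = 1 + 0.5 * X[:, 0] + rng.normal(0, 0.5, m)  # hypothetical data
alpha = 1.0

X_b = np.c_[np.ones((m, 1)), X]  # add x0 = 1 to each instance
A = np.identity(X_b.shape[1])
A[0, 0] = 0.0                    # don't penalize the bias term

# theta_hat = (X^T X + alpha A)^(-1) X^T y, computed with solve() instead of inv()
theta_hat = np.linalg.solve(X_b.T @ X_b + alpha * A, X_b.T @ y)

ridge = Ridge(alpha=alpha).fit(X, y)
print(theta_hat)                      # [theta_0, theta_1]
print(ridge.intercept_, ridge.coef_)  # the same values from Scikit-Learn
```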

Here is how to perform Ridge Regression with Scikit-Learn using a closed-form solution:

from sklearn.linear_model import Ridge

ridge_reg = Ridge(alpha=1, solver="cholesky")

ridge_reg.fit(X, y)  # X, y: the training set, with X of shape (m, n)

ridge_reg.predict([[1.5]])

Here we use a variant of the closed-form equation that relies on a matrix factorization technique by André-Louis Cholesky. This gives the output:

array([[1.55071465]])

And using Stochastic Gradient Descent:

from sklearn.linear_model import SGDRegressor

sgd_reg = SGDRegressor(penalty="l2")

sgd_reg.fit(X, y.ravel())  # SGDRegressor expects a 1D target array

sgd_reg.predict([[1.5]])

The penalty hyperparameter sets the type of regularization term to use. Specifying "l2" indicates that you want SGD to add a regularization term to the cost function equal to half the square of the ℓ₂ norm of the weight vector: this is simply Ridge Regression. This gives the output:

array([1.47012588])