Quantization refers to constraining an input from a continuous set of values to a discrete set of values. Constraining values in this way helps reduce computational load, since floating-point computations are expensive. Limiting precision and representing weights using 16, 8, or 4 bits rather than 32 bits also helps reduce storage. This is how quantization helps us run a large neural network on our phones or laptops.

Another reason a model needs to be quantized is that some hardware, such as heterogeneous compute chips or microcontrollers that go into edge devices (like phones, or even devices in cars that use neural networks), is only capable of performing computations in 16-bit or 8-bit. These hardware constraints require models to be quantized to run on that hardware.

Whenever we quantize a model, a loss in accuracy should be expected. The graph below shows the accuracy drop as the model is increasingly compressed (compression increases from right to left).

The graph above shows that we can compress about 11% before there is a drastic loss in accuracy.

The goal of quantization and other compression techniques is to reduce the model size and the latency of inference without losing accuracy.

This article will briefly go over 2 methods of quantizing neural networks:

- K-Means Quantization

- Linear Quantization

Note: this article is my notes from an MIT lecture about quantization.

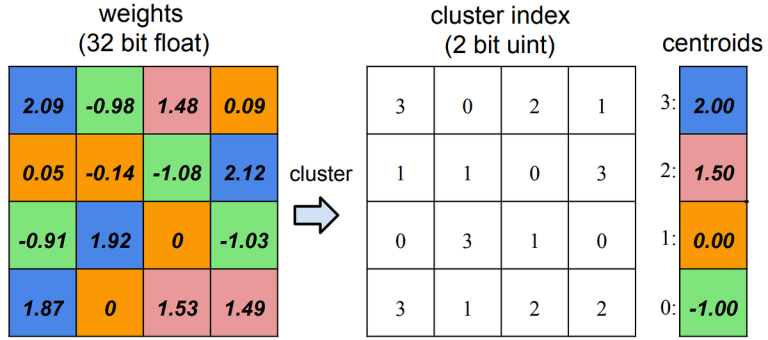

K-means quantization works by grouping the weights in the weight matrix using the K-means clustering algorithm. We then pick the centroid of each group, which will be the quantized weight. We can then store the weight matrix as indices into the centroid table.
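To make the clustering step concrete, here is a minimal NumPy sketch. The function name `kmeans_quantize`, the evenly spaced centroid initialisation, the fixed iteration count, and the random 4x4 matrix are all my own assumptions for illustration, not something taken from the lecture.

```python
import numpy as np

def kmeans_quantize(weights, n_clusters=4, n_iters=20):
    """Cluster a weight matrix with plain 1-D k-means (illustrative only)."""
    flat = weights.ravel().astype(np.float32)
    # Assumption: start with centroids spread evenly between min and max weight.
    centroids = np.linspace(flat.min(), flat.max(), n_clusters, dtype=np.float32)
    for _ in range(n_iters):
        # Assign each weight to its nearest centroid.
        indices = np.abs(flat[:, None] - centroids[None, :]).argmin(axis=1)
        # Move each centroid to the mean of the weights assigned to it.
        for k in range(n_clusters):
            members = flat[indices == k]
            if members.size > 0:
                centroids[k] = members.mean()
    # Final assignment against the updated centroids.
    indices = np.abs(flat[:, None] - centroids[None, :]).argmin(axis=1)
    # Stored as uint8 here for simplicity; 4 clusters only need 2 bits per index.
    return indices.reshape(weights.shape).astype(np.uint8), centroids

rng = np.random.default_rng(0)
W = rng.normal(size=(4, 4)).astype(np.float32)  # hypothetical 4x4 weight matrix
indices, centroids = kmeans_quantize(W)
W_hat = centroids[indices]                      # weights rebuilt from the table
```

Only `indices` and `centroids` need to be stored; `W_hat` is rebuilt from them whenever the weights are needed.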

In the figure, squares with the same color belong to the same cluster and are represented by an index into the centroid lookup table. Let's look at how this reduces storage.

Storage:

Weights (32-bit float): 16 × 32 bits = 512 bits = 64 bytes

Indices (2-bit uint): 16 × 2 bits = 32 bits = 4 bytes

Lookup table (32-bit float): 4 × 32 bits = 128 bits = 16 bytes

Quantized weights: 16 + 4 = 20 bytes (3.2× smaller than the original 64 bytes)
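The same arithmetic can be checked with a small helper. `storage_bytes` is a hypothetical function I wrote for these notes; it assumes the index width is the smallest number of bits that can address every centroid.

```python
def storage_bytes(n_weights, n_clusters, float_bits=32):
    """Return (original_bytes, quantized_bytes) for codebook quantization."""
    # Bits needed to address every centroid, e.g. 4 clusters -> 2 bits.
    index_bits = max(1, (n_clusters - 1).bit_length())
    original = n_weights * float_bits / 8   # full-precision weights
    indices = n_weights * index_bits / 8    # one small index per weight
    codebook = n_clusters * float_bits / 8  # centroid lookup table
    return original, indices + codebook

original, quantized = storage_bytes(n_weights=16, n_clusters=4)
ratio = original / quantized
```

For the 16-weight, 4-cluster example this gives 64 bytes before and 20 bytes after. The lookup table is a fixed cost, so the saving improves as the weight matrix gets larger.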

Quantization error is the error between the reconstructed weights and the unique set of weights:
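One way to write this down, assuming $W$ is the original weight matrix, $c$ is the centroid table, and $k_{ij}$ is the stored index for position $(i, j)$ (notation I am introducing here, including the sign convention), is:

$$
\hat{W}_{ij} = c_{k_{ij}}, \qquad E = W - \hat{W}
$$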

So for the example above, the quantization error would look like this:

We can "fine-tune" the quantized weights (the centroid values) to reduce this error by calculating the gradient of the weights and then grouping the gradients by cluster in the same way as the weights. We then accumulate the gradients within each cluster by summing them up, multiply each sum by the learning rate, and subtract the result from the initial centroids. In this way, we can tune the quantized weights.
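The update described above can be sketched in a few lines of NumPy. The function name `finetune_centroids` and all the numbers below are made up for illustration; `grad` is assumed to be the gradient of the loss with respect to the full weight matrix.

```python
import numpy as np

def finetune_centroids(centroids, indices, grad, lr=0.01):
    """One gradient step on the centroid table (illustrative sketch)."""
    # Group gradients by cluster and sum them: one total per centroid.
    grad_sums = np.bincount(indices.ravel(), weights=grad.ravel(),
                            minlength=centroids.size)
    # Scale by the learning rate and subtract from the current centroids.
    return centroids - lr * grad_sums

centroids = np.array([-1.0, 0.0, 1.5, 2.0])   # hypothetical centroid table
indices = np.array([[3, 0], [1, 1]])          # cluster id of each weight
grad = np.array([[0.2, -0.1], [0.3, 0.1]])    # hypothetical weight gradients
new_centroids = finetune_centroids(centroids, indices, grad, lr=0.5)
```

Centroid 1 is shared by two weights, so it moves by the sum of both gradients, while centroid 2 has no members in this example and stays where it was.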

Here, weights are stored as integers. During computation, we "decompress" the weights using the lookup table (centroid table) and use these decompressed weights for computation. So we don't reduce the computational load, since the centroids are still represented in floating point, but the memory footprint is drastically reduced.
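A tiny sketch of that decompress-then-compute flow, with a made-up lookup table, index matrix, and activation vector:

```python
import numpy as np

centroids = np.array([-1.0, 0.0, 1.5, 2.0], dtype=np.float32)  # lookup table
indices = np.array([[3, 0], [1, 2]], dtype=np.uint8)            # stored weights
x = np.array([1.0, 2.0], dtype=np.float32)                      # activations

W_hat = centroids[indices]  # decompress: a table lookup yields float weights
y = W_hat @ x               # the matmul itself still runs in floating point
```

What sits in memory is the small integer `indices` array plus the table, while the arithmetic is unchanged.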

This method is useful when memory is the bottleneck, as it is in Llama 2.

Linear quantization uses an affine mapping to map integers to real numbers (the weights).

The equation used for mapping is:
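In the notation commonly used for this mapping (assumed here: $r$ is the real-valued weight, $q$ the stored integer, $S$ the floating-point scale factor, and $Z$ the integer zero point), the affine mapping is usually written as:

$$
r = S \, (q - Z)
$$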

Here is how we work out the zero point and the scale factor:
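A common way to derive both, assuming the mapping $r = S(q - Z)$ and requiring that the smallest and largest real weights land on the smallest and largest representable integers (the symbols $r_{\min}, r_{\max}, q_{\min}, q_{\max}$ are my notation):

$$
\begin{aligned}
r_{\max} &= S \, (q_{\max} - Z), \qquad r_{\min} = S \, (q_{\min} - Z) \\
S &= \frac{r_{\max} - r_{\min}}{q_{\max} - q_{\min}} \\
Z &= \operatorname{round}\!\left( q_{\min} - \frac{r_{\min}}{S} \right)
\end{aligned}
$$

Subtracting the two constraints gives $S$, and solving the second one for $Z$ gives the zero point.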

Instance:

Given the weight matrix:

We can calculate S as:

Then we calculate the zero point:

We need to round the result, since the goal is for Z to be an integer.

So in the example from above:

So here is what linear quantization looks like:
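As a rough end-to-end sketch in NumPy: the function names, the signed integer range, and the 2x2 matrix below are my own choices for illustration and are not the example from the lecture. The sketch also assumes the weights are not all identical, since the scale would otherwise be zero.

```python
import numpy as np

def linear_quantize(weights, n_bits=8):
    """Affine quantization r = S * (q - Z), assuming a signed n-bit range."""
    q_min, q_max = -(2 ** (n_bits - 1)), 2 ** (n_bits - 1) - 1
    r_min, r_max = float(weights.min()), float(weights.max())
    scale = (r_max - r_min) / (q_max - q_min)       # S
    zero_point = int(round(q_min - r_min / scale))  # Z, rounded to an integer
    # Invert r = S * (q - Z): q = round(r / S) + Z, clipped to the valid range.
    q = np.clip(np.round(weights / scale) + zero_point, q_min, q_max)
    return q.astype(np.int32), scale, zero_point

def dequantize(q, scale, zero_point):
    """Map integers back to real values with r = S * (q - Z)."""
    return scale * (q.astype(np.float32) - zero_point)

W = np.array([[2.0, -1.0], [0.0, 1.0]], dtype=np.float32)  # hypothetical weights
q, S, Z = linear_quantize(W, n_bits=2)
W_hat = dequantize(q, S, Z)
```

With these made-up weights and 2 bits, the range [-1, 2] maps exactly onto the integers [-2, 1], so `S` comes out as 1.0, `Z` as -1, and the reconstruction is exact. Real weight matrices will generally show some rounding error.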

Now for the computation side, we can substitute the mapping equation for the weight matrix.

For instance, a matrix multiplication with quantized weights would look like this:
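A small numerical check of that substitution, under the assumption that only the weights are quantized and the input stays in floating point. The values of `S`, `Z`, `q_W`, and `x` are hypothetical.

```python
import numpy as np

S, Z = 1.0, -1                                      # hypothetical scale, zero point
q_W = np.array([[1, -2], [-1, 0]], dtype=np.int32)  # hypothetical quantized weights
x = np.array([3.0, 4.0], dtype=np.float32)          # floating-point input

# Substitute W = S * (q_W - Z) into y = W @ x and expand:
#   y = S * (q_W @ x - Z * sum(x))
y_quant = S * (q_W @ x - Z * x.sum())

# Reference: dequantize the weights first, then multiply.
W = S * (q_W.astype(np.float32) - Z)
y_ref = W @ x
```

Both routes give the same result. The expanded form shows that the zero point only contributes a correction term that depends on the sum of the inputs, and the scale is applied once at the end.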