Okay so this is a follow-on from my previous article:

We’re gonna build upon linear regression and cover regularization, but as always,

So there’s a pretty common term in machine learning called “overfitting”, and what that means is that your model ends up learning the training data so well that it even captures the noise and outliers. As a result, when a new data point is introduced, the model fails to generalize and performs poorly.

Think of it like this: let’s say we have to fit a curve through 5 points, and let’s say a quadratic fits them best. Now what happens in overfitting is that your model may end up learning a polynomial of, let’s say, degree 8–9 such that the curve passes exactly through every point.
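To make this concrete, here is a small sketch with made-up numbers (the five points, the noise level and the test points are all assumptions for illustration). It uses degree 4 instead of 8–9, because degree 4 is already enough to pass exactly through five points:

```python
import numpy as np

# Five hypothetical training points: a quadratic trend plus a little noise.
rng = np.random.default_rng(0)
x_train = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
y_train = x_train**2 + rng.normal(scale=0.5, size=x_train.size)

# Fresh points from the same trend, used only for evaluation.
x_test = np.array([-1.5, -0.5, 0.5, 1.5])
y_test = x_test**2 + rng.normal(scale=0.5, size=x_test.size)


def mse(coeffs, x, y):
    """Mean squared error of the polynomial with the given coefficients."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))


results = {}
for degree in (2, 4):
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    print(f"degree {degree}: train MSE = {results[degree][0]:.4f}, "
          f"test MSE = {results[degree][1]:.4f}")
```

The degree 4 fit drives the training error to essentially zero because it also fits the noise, which is exactly the behaviour described above. How it does on the test points depends on the noise draw, so treat the printed test numbers as an illustration only.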

So how do we fix this?

There are some good rules of thumb to follow:

- If you have a small amount of data then reduce model complexity, which in the case of linear regression would mean using a lower-order polynomial

- Get more data. As you obtain more data, you can gradually increase the complexity of the model, i.e., the order of the polynomial

- The last one is our topic for today: Regularization

Remember from the previous article that our goal is to find the coefficients {w} that best model the relationship h(x, w) ≈ y.

Now what ends up happening in overfitting is that these coefficients {w} end up being too large. So a good idea to fix that would be to penalize large coefficients, and that’s basically the idea behind regularization. So we can just add a regularization term to our error function and get the formula as:
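One common way to write such a regularized error function, assuming a sum-of-squares error over N training points and a penalty Ω(w) that grows with the size of the coefficients (the symbols N and Ω and the 1/2 scaling are assumptions made here for illustration), is:

```latex
E(\mathbf{w}) = \frac{1}{2}\sum_{n=1}^{N}\bigl(h(x_n, \mathbf{w}) - y_n\bigr)^2 + \lambda\,\Omega(\mathbf{w})
```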

where λ is called the regularization coefficient. This λ is what controls the tradeoff between “fitting error” and “complexity”

- Small regularization results in complex models with the risk of overfitting

- Large regularization results in simple models with the risk of underfitting

In simpler terms, if λ is too large then we end up penalizing too much, and the only way to reach the optimum is to have very small {w} and thus a simple model, and vice versa.
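This tradeoff can be checked with a quick experiment. The sketch below uses a squared (L2) penalty purely because it can be solved in one line; the random dataset, the list of λ values and the helper name `ridge_fit` are all assumptions for illustration:

```python
import numpy as np

# Hypothetical data: 30 samples, 5 features, known coefficients plus noise.
rng = np.random.default_rng(0)
X = rng.normal(size=(30, 5))
true_w = np.array([3.0, -2.0, 0.0, 1.0, 0.5])
y = X @ true_w + rng.normal(scale=0.5, size=30)


def ridge_fit(X, y, lam):
    """Minimize 0.5*||y - Xw||^2 + 0.5*lam*||w||^2 (no intercept)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)


lambdas = [0.0, 1.0, 100.0, 10000.0]
norms, errors = [], []
for lam in lambdas:
    w = ridge_fit(X, y, lam)
    norms.append(float(np.linalg.norm(w)))        # size of the coefficients
    errors.append(float(np.sum((y - X @ w) ** 2)))  # training fitting error
    print(f"lambda = {lam:>8}: ||w|| = {norms[-1]:.4f}, "
          f"training error = {errors[-1]:.2f}")
```

As λ grows, the coefficient norm shrinks and the training error rises: a simpler model that fits the training data less tightly.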

Before we start our from-scratch implementation, let’s first run this using sklearn and see the effect of no regularization, L1 and L2 regularization.
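A comparison along these lines can be sketched as follows. This is not the original script: the random dataset, the train/test split and the `alpha` values are assumptions chosen only to make the three models easy to compare.

```python
import numpy as np
from sklearn.linear_model import Lasso, LinearRegression, Ridge
from sklearn.model_selection import train_test_split

# Hypothetical random dataset: few samples, many features, only 3 informative.
rng = np.random.default_rng(42)
X = rng.normal(size=(60, 40))
true_w = np.zeros(40)
true_w[:3] = [4.0, -3.0, 2.0]
y = X @ true_w + rng.normal(scale=1.0, size=60)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

models = {
    "none": LinearRegression(),  # no regularization
    "L1": Lasso(alpha=0.1),      # alpha plays the role of lambda
    "L2": Ridge(alpha=1.0),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = (model.score(X_train, y_train), model.score(X_test, y_test))
    n_zero = int(np.sum(model.coef_ == 0))
    print(f"{name:>4}: train R2 = {scores[name][0]:.3f}, "
          f"test R2 = {scores[name][1]:.3f}, "
          f"max |coef| = {np.max(np.abs(model.coef_)):.2f}, "
          f"zero coefs = {n_zero}")
```

With almost as many features as training samples, the unregularized model can fit the training split nearly perfectly, so the gap between its train and test scores is the thing to look at.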

We can see linear regression overfitting from those very large coefficients: it performs extremely well on training data but fails on test data. L1 and L2 perform way better, though they too show some sign of overfitting, as ideally the train and test scores should be fairly close. But this is a random dataset that I made, so that should probably explain it. Okay, on to the main stuff now…

L1 Regularization

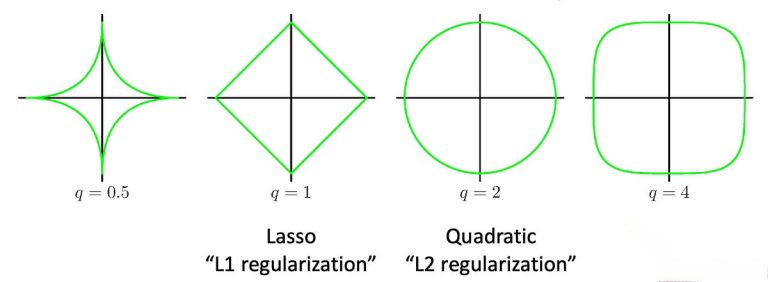

Okay so L1 regularization, also called Lasso, fights overfitting by shrinking the coefficients towards 0 and pinning some coefficients to exactly 0. It’s mostly used to introduce sparsity or to do feature selection: since it pins some coefficients to 0, it can be used to filter out features. Geometrically, L1 regularization can be visualized as confining the coefficients to lie within a diamond-shaped region centered on the origin. Mathematically, the new objective function is:
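A common way to write this objective, assuming a sum-of-squares error over N points and D coefficients (N, D and the 1/2 scaling are assumptions here; sklearn's Lasso, for instance, scales the squared-error term by 1/(2N) instead, which changes the meaning of a given λ but not the idea), is:

```latex
J(\mathbf{w}) = \frac{1}{2}\sum_{n=1}^{N}\bigl(h(x_n, \mathbf{w}) - y_n\bigr)^2 + \lambda\sum_{j=1}^{D}\lvert w_j\rvert
```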

As we discussed in the previous article, closed-form solution rules !!! But unfortunately there is no closed-form solution for L1, because the L1 norm of w is basically the sum of the absolute values of w, and the absolute value function is not differentiable at 0, so we cannot just set the gradient to zero and solve. Instead, we will implement L1 regularization using the coordinate descent algorithm. I won’t go too deep into it; simply put, the steps are:
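One common form of these steps, assuming a linear model, an objective of half the squared error plus λ times the L1 norm, and columns of X scaled to unit length beforehand (all assumptions for this sketch), is: start from w = 0, then repeatedly sweep over the coefficients, updating one w_j at a time while holding the others fixed, until the values stop changing. Each single-coefficient update has a simple solution given by soft thresholding:

```latex
\rho_j = \sum_{n=1}^{N} x_{nj}\Bigl(y_n - \sum_{k \neq j} w_k x_{nk}\Bigr),
\qquad
w_j \leftarrow S(\rho_j, \lambda) =
\begin{cases}
\rho_j - \lambda & \text{if } \rho_j > \lambda \\
0 & \text{if } \lvert \rho_j \rvert \le \lambda \\
\rho_j + \lambda & \text{if } \rho_j < -\lambda
\end{cases}
```

The middle case is where the sparsity comes from: any coefficient whose ρ_j is smaller in magnitude than λ is set to exactly 0.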

There is actually another variation of this where you don’t have to normalize the data before the loop, but in practice, and from what I read online, normalizing beforehand is how people have gotten it to work effectively. So let’s see how this looks in code then:

As we’ve seen so far, it’s pretty short code which maps exactly to the algorithm we saw above. So how does this implementation perform?

As we can see, L1 regularization introduces sparsity by making most of the coefficients 0. Compared with sklearn Lasso, our R2 score is pretty similar, and both sklearn and our implementation pin almost the same coefficients to 0 except for the last coefficient. There are probably still some changes required in my implementation to better match sklearn but I can’t seem to figure that out. If you have any suggestions then do let me know!
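A minimal sketch of coordinate descent for Lasso is shown below. It is not the script from the linked repository: the function names, the fixed number of sweeps, the way the intercept is handled and the test data are all assumptions made for illustration.

```python
import numpy as np


def soft_threshold(rho, lam):
    """Shrink rho towards 0 by lam, returning exactly 0 when |rho| <= lam."""
    return np.sign(rho) * max(abs(rho) - lam, 0.0)


def lasso_coordinate_descent(X, y, lam, n_iters=100):
    """Minimize 0.5*||y - b - Xn @ w||^2 + lam*||w||_1 by coordinate descent.

    Xn is X with every column scaled to unit L2 norm (assumes no all-zero
    column). Returns the intercept b and the coefficients rescaled so that
    they apply to the original, unscaled X.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    col_norms = np.linalg.norm(X, axis=0)
    Xn = X / col_norms                  # normalize before the loop
    n_features = Xn.shape[1]
    w = np.zeros(n_features)
    b = y.mean()
    for _ in range(n_iters):
        b = np.mean(y - Xn @ w)         # the intercept is not penalized
        for j in range(n_features):
            # Residual with feature j's current contribution added back in.
            residual = y - b - Xn @ w + w[j] * Xn[:, j]
            rho = Xn[:, j] @ residual
            w[j] = soft_threshold(rho, lam)
    return b, w / col_norms


# Hypothetical data: only features 0 and 3 actually matter.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 6))
true_w = np.array([5.0, 0.0, 0.0, -3.0, 0.0, 0.0])
y = X @ true_w + 2.0 + rng.normal(scale=0.1, size=100)

b, w = lasso_coordinate_descent(X, y, lam=5.0)
print("intercept:", round(float(b), 3))
print("coefficients:", np.round(w, 3))
```

Because this sketch normalizes the columns and does not divide the error by the number of samples, its `lam` is not on the same scale as sklearn's `alpha`, so the two should not be expected to give identical coefficients for the same number.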

L2 Regularization

Okay so L2 regularization, also called Ridge, fights overfitting by shrinking the coefficients to be small but not making them exactly 0. It’s useful for estimating a regression when there are more features than observations and, more importantly, it has a closed-form solution !!! Geometrically, L2 regularization can be visualized as confining the coefficients to lie within a spherical region centered on the origin. Mathematically, the new objective function is:
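A common way to write this objective, assuming a sum-of-squares error over N points and D coefficients (N, D and the factors of 1/2 are assumptions chosen to keep the derivative tidy), is:

```latex
J(\mathbf{w}) = \frac{1}{2}\sum_{n=1}^{N}\bigl(h(x_n, \mathbf{w}) - y_n\bigr)^2 + \frac{\lambda}{2}\sum_{j=1}^{D} w_j^2
```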

As I said above, L2 does have a closed-form solution, so let’s see what it is:

Now we just need to write this out in our script:

So how does this implementation perform?

As we can see, compared to L1 there are no 0 coefficients and all the coefficient values seem to be in a decent range. Compared to sklearn Ridge, the R2 score, intercept and coefficients are pretty similar, which I’ll take as my implementation working well!
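For a linear model h(x, w) = wᵀx, with the training inputs stacked as rows of a matrix X and the targets in a vector y (this notation, and the half-squared-error plus (λ/2)‖w‖² objective, are assumptions of this sketch), setting the gradient to zero gives:

```latex
\nabla J(\mathbf{w}) = -X^\top(\mathbf{y} - X\mathbf{w}) + \lambda\mathbf{w} = 0
\quad\Longrightarrow\quad
\mathbf{w} = \bigl(X^\top X + \lambda I\bigr)^{-1} X^\top \mathbf{y}
```

The only difference from the ordinary least-squares solution is the added λI, which also keeps the matrix invertible when there are more features than observations.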
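A minimal sketch of the closed-form solution in NumPy could look like this. The function name, the centering trick used to leave the intercept unpenalized and the test data are assumptions for illustration, not the script from the linked repository:

```python
import numpy as np


def ridge_closed_form(X, y, lam):
    """Return (intercept, coefficients) minimizing
    0.5*||y - b - X @ w||^2 + 0.5*lam*||w||^2, with the intercept b unpenalized.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean = X.mean(axis=0)
    y_mean = y.mean()
    Xc = X - x_mean                      # centering removes the intercept
    yc = y - y_mean
    n_features = X.shape[1]
    A = Xc.T @ Xc + lam * np.eye(n_features)
    w = np.linalg.solve(A, Xc.T @ yc)    # solve instead of an explicit inverse
    b = y_mean - x_mean @ w              # recover the intercept afterwards
    return b, w


# Hypothetical data with a known intercept of 3.0.
rng = np.random.default_rng(1)
X = rng.normal(size=(50, 4))
y = X @ np.array([1.5, -2.0, 0.0, 0.7]) + 3.0 + rng.normal(scale=0.2, size=50)

b, w = ridge_closed_form(X, y, lam=1.0)
print("intercept:", round(float(b), 3))
print("coefficients:", np.round(w, 3))
```

Using `np.linalg.solve` rather than computing the inverse is a numerical-stability choice; the result is the same formula. Note that the coefficient whose true value is 0 comes out small but not exactly 0, unlike with L1.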

Summary

As we saw above, implementing L1 and L2 regularization is pretty easy and gives results similar to sklearn. L2 is my preferred method but each has its own use case: L1 is used to do feature selection in a sparse feature space, while L2 mostly outperforms L1 when it comes to accuracy. There is also Elastic Net regularization, which is basically L1+L2, but we won’t be going over that. Next week we will go over Logistic regression!

All the code for this can be found at: Regularization from scratch