In recent months, a new term has been circulating in the AI community: the Kolmogorov-Arnold Network (KAN). The idea has been championed by a few enthusiasts who argue that KANs could revolutionize machine learning and make deep neural networks (DNNs) obsolete. A closer look, however, suggests that these claims may be overstated. As someone who has worked in machine learning (ML) for years, I want to dig into the core of these arguments and highlight why DNNs remain the go-to solution in modern AI.

The Kolmogorov-Arnold Representation Theorem: A Refresher

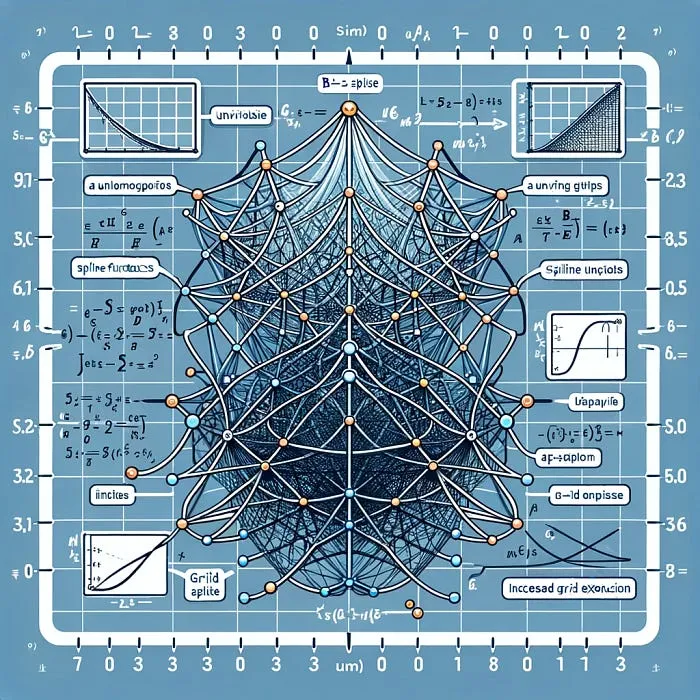

The Kolmogorov-Arnold representation theorem, a fundamental result in the theory of function approximation, states that any continuous multivariate function on a bounded domain can be written as a finite composition of continuous functions of a single variable and addition. In essence, it provides a theoretical foundation for representing complex functions using simpler components.
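
For reference, the theorem is usually written as follows for a continuous function $f$ on the unit cube $[0,1]^n$. The symbols $\Phi_q$ (outer functions) and $\phi_{q,p}$ (inner functions) are the conventional textbook names, not notation taken from this article:

$$
f(x_1, \dots, x_n) = \sum_{q=0}^{2n} \Phi_q\!\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right)
$$

Every $\Phi_q$ and $\phi_{q,p}$ is a continuous function of one variable, so the only truly multivariate operation in the formula is addition.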

Deep learning, a subfield of ML, relies on DNNs for their ability to model complex, high-dimensional data through layers of non-linear transformations. Here’s why DNNs continue to dominate:

1. Primitive, Scalable Operations: The success of DNNs is largely due to their reliance on primitive operations (e.g., matrix multiplications, element-wise non-linearities) that scale well on modern hardware. This aligns with Richard Sutton’s principle that simple, scalable methods will prevail in the long run.

2. Fine-Tuned Architectures: DNN architectures have been meticulously refined over decades. Researchers such as Geoffrey Hinton and Yann LeCun have contributed to a body of knowledge that has optimized these networks for a wide range of tasks, from image recognition to natural language processing.

3. Intrinsic Compliance with the KA Theorem: Interestingly, DNNs already do what the Kolmogorov-Arnold theorem describes: they approximate complex functions by combining simpler ones in a hierarchical manner. Thus, the proposed switch to KANs offers little additional value in terms of theoretical function approximation.

4. Existing Explainability Tools: Explainable AI (XAI) tools have been developed and are used effectively at the scales where DNNs operate. Moving to KANs would require validating these tools on new architectures and at new scales, a task that is not guaranteed to be straightforward or worthwhile.
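
To make points 1 and 3 concrete, here is a minimal pure-Python sketch that puts the two structures side by side. It is an illustration under stated assumptions, not a KAN implementation: the function names (`dense`, `relu`, `mlp_forward`, `ka_form`) and the hand-picked weights are hypothetical, and production frameworks run the same primitives as batched matrix multiplications on accelerators rather than as Python loops.

```python
import math

def dense(x, W, b):
    """Affine map W @ x + b, written as a plain matrix-vector product."""
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]

def relu(v):
    """Element-wise non-linearity."""
    return [max(0.0, vi) for vi in v]

def mlp_forward(x, layers):
    """Stack of dense layers with ReLU between them (none after the last)."""
    h = x
    for i, (W, b) in enumerate(layers):
        h = dense(h, W, b)
        if i < len(layers) - 1:
            h = relu(h)
    return h

def ka_form(x, inner, outer):
    """Kolmogorov-Arnold-style form:
    sum over q of outer[q](sum over p of inner[q][p](x[p]))."""
    return sum(
        outer_q(sum(phi(xp) for phi, xp in zip(inner_q, x)))
        for outer_q, inner_q in zip(outer, inner)
    )

# Hypothetical hand-picked weights: a 2-2-1 network that computes |x0 - x1|.
layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),  # hidden units: x0 - x1 and x1 - x0
    ([[1.0, 1.0]], [0.0]),                     # output: sum of the two ReLU outputs
]
mlp_out = mlp_forward([2.0, 5.0], layers)

# x0 * x1 for positive inputs, written as exp(log(x0) + log(x1)).
# This uses a single outer term; the theorem itself allows up to 2n + 1.
ka_out = ka_form([2.0, 3.0], inner=[[math.log, math.log]], outer=[math.exp])

print(mlp_out, ka_out)
```

In the MLP, the non-linearity is fixed and all the learning happens in the weight matrices. In the Kolmogorov-Arnold form, the univariate functions themselves carry the information. Both build a multivariate function from simple pieces, which is the overlap point 3 refers to.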

The Illusion of Compute Bottlenecks

In the early days of ML, computational resources were indeed a significant bottleneck. Today, thanks to advances in hardware and optimized software frameworks, that constraint has largely eased. Sutton’s argument that the simplest, most scalable structure will win carries more weight now than ever before. DNNs, with their balance of simplicity and scalability, remain the preferred choice.

AI startups and researchers need to stay grounded in reality. While it’s tempting to jump on the latest hype, it’s crucial to consider the practical implications and the existing evidence. The Kolmogorov strategy, while theoretically appealing, must be critically evaluated against the robust, proven capabilities of DNNs.

The Kolmogorov-Arnold Network might sound like the next big thing, but it’s essential to recognize that deep learning frameworks have been rigorously tested and refined over many years. Their design, grounded in simplicity and scalability, makes them suitable for the vast array of applications they currently dominate.

In the race to advance AI, it’s important to remember that while new theories and methods are worth exploring, they must be weighed against the established, scalable solutions that have been driving progress so far. Before asking about your AI startup’s Kolmogorov strategy next week, consider whether it truly offers an advantage over the tried-and-tested architectures we already have.

Moore’s Law is unforgiving, and in the quest for scalability, the simplest solutions are often the most effective.

Stay informed, stay critical, and don’t be swayed by every new wave of hype.
