Computer Vision

GoogLeNet, also known as Inception-v1, is a landmark achievement in the field of convolutional neural networks (CNNs). Unveiled by researchers at Google in 2014, it introduced a novel approach to designing deep networks that not only achieved exceptional accuracy but also demonstrated remarkable computational efficiency. This article explores the architectural details, design principles, and lasting impact of GoogLeNet on the deep learning landscape.

Earlier CNN architectures, such as LeNet-5 and AlexNet, were relatively shallow, meaning they had a limited number of layers. This limited their ability to learn complex patterns in data. Moreover, these architectures were not very parameter-efficient, so they needed a lot of processing power and memory to train and run: AlexNet, for example, has about 60 million parameters, whereas GoogLeNet uses roughly 12 times fewer despite being much deeper.

If you want to learn more about other CNN architectures, check out my earlier blogs explaining LeNet-5, AlexNet, VGG-Net and ResNet.

One of the main challenges with increasing the depth of a CNN is the vanishing gradient problem. During backpropagation, the gradient that reaches an early layer is a product of many per-layer factors, so as the network gets deeper, the gradients of the loss function with respect to the early layers' weights can become smaller and smaller. This makes it difficult for those layers to learn effectively.
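To see why depth matters, here is a toy sketch (an illustrative assumption, not an analysis from the GoogLeNet paper): treat the gradient reaching the first layer as a product of one identical derivative factor per layer. The factor 0.25 is the largest possible derivative of the sigmoid activation, and the function name `gradient_scale` is hypothetical.

```python
def gradient_scale(per_layer_factor: float, depth: int) -> float:
    """Toy model: the gradient at the first layer is the product of one
    derivative factor per layer (chain rule), all assumed to be equal."""
    scale = 1.0
    for _ in range(depth):
        scale *= per_layer_factor
    return scale


# 0.25 is the maximum derivative of the sigmoid function.
for depth in (5, 10, 22):
    print(f"depth={depth:2d}  gradient scale={gradient_scale(0.25, depth):.3e}")
```

At 22 layers (GoogLeNet's depth when counting only layers with parameters) the scale in this toy model falls to about 5.7e-14, which is why very deep networks need some way to keep gradients flowing.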

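Another challenge is the growth in computational requirements. The cost of a convolutional layer is proportional to its output size, its filter size, and its numbers of input and output channels. Adding layers therefore increases the cost, and making layers wider increases it even faster: as the GoogLeNet paper points out, uniformly increasing the number of filters in two chained convolutional layers results in a quadratic increase in computation.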
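The quadratic effect can be checked with a little arithmetic. The sketch below counts multiply-accumulate operations (MACs) for a stride-1 convolution with "same" padding, ignoring biases; the layer sizes (28×28 feature maps, 3×3 filters, 192 input channels) are illustrative assumptions.

```python
def conv_macs(height: int, width: int, kernel: int, c_in: int, c_out: int) -> int:
    """Multiply-accumulates for a stride-1, same-padded k x k convolution (no bias)."""
    return height * width * kernel * kernel * c_in * c_out


def two_chained_layers(filters: int, c_in: int = 192, size: int = 28, kernel: int = 3) -> int:
    """Cost of conv(c_in -> filters) followed by conv(filters -> filters)."""
    first = conv_macs(size, size, kernel, c_in, filters)
    second = conv_macs(size, size, kernel, filters, filters)
    return first + second


for filters in (64, 128, 256):
    print(filters, two_chained_layers(filters))
```

Doubling the filters in both layers doubles the cost of the first layer but quadruples the cost of the second, because the second layer's cost depends on the product of its input and output widths.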

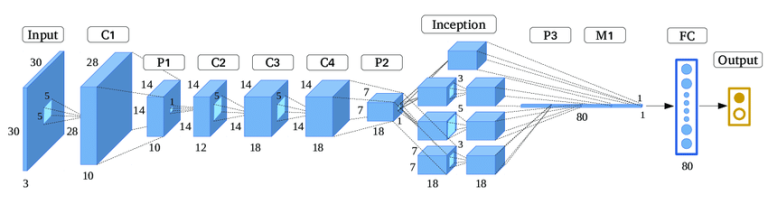

GoogLeNet addressed these challenges by introducing the inception module, a building block that processes its input in parallel at several scales. This lets the network capture features at different scales more efficiently than earlier architectures.

An inception module consists of several convolutional layers with different filter sizes, plus a pooling branch. These branches are arranged in parallel and all receive the same input, so the network looks at it with several receptive field sizes at once. Each branch is padded so that its output keeps the same height and width, which allows the branch outputs to be concatenated along the channel dimension. Between some groups of modules, separate stride-2 max pooling layers reduce the spatial resolution. Later versions of Inception introduced further variations of the module, with different layer arrangements and filter sizes.

This parallel approach has several advantages. First, it lets the network capture features at different scales efficiently: small filters respond to fine, local detail, larger filters cover a wider area, and both are computed from the same input within a single module. Second, because 1×1 convolutions reduce the number of channels before the expensive filters are applied, the cost of each module stays low, so the network can be made deeper and wider within a fixed computational budget. The vanishing gradient problem that comes with this extra depth is addressed mainly by the auxiliary classifiers described later.

The inception module in GoogLeNet is made up of four parallel paths:

- 1×1 convolution: This path applies a 1×1 convolution to the input, mixing information across channels at each spatial position. Because it outputs fewer channels than it receives, it is cheap to compute.

- 3×3 convolution: This path first applies a 1×1 convolution to reduce the number of channels, then a standard 3×3 convolution to extract local features from the input feature map.

- 5×5 convolution: This path also starts with a 1×1 channel reduction, followed by a 5×5 convolution that captures larger-scale features.

- Max pooling: This path applies 3×3 max pooling with stride 1 (padded so that the height and width are unchanged), followed by a 1×1 convolution that projects the pooled output to fewer channels.

The outputs of the four paths are then concatenated along the channel dimension to form the module's output. No further convolution is applied after the concatenation; the 1×1 convolutions placed before the 3×3 and 5×5 convolutions and after the pooling step are what keep the number of channels, and therefore the computational cost, under control.
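The channel bookkeeping of one module can be sketched in plain Python. The branch widths below are those listed for the Inception (3a) module in the GoogLeNet paper (192 input channels at 28×28); the class and function names are hypothetical, and weight counts ignore biases. For comparison, the "naive" variant applies the 3×3 and 5×5 convolutions directly to the input and passes the pooled input through without a 1×1 projection.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InceptionConfig:
    c_in: int          # input channels
    n1x1: int          # 1x1 branch
    n3x3_reduce: int   # 1x1 reduction before the 3x3
    n3x3: int
    n5x5_reduce: int   # 1x1 reduction before the 5x5
    n5x5: int
    pool_proj: int     # 1x1 projection after 3x3 max pooling


def same_padded_size(size: int, kernel: int) -> int:
    """Output height/width of a stride-1 layer padded by kernel // 2."""
    pad = kernel // 2
    return size + 2 * pad - kernel + 1


def conv_weights(kernel: int, c_in: int, c_out: int) -> int:
    return kernel * kernel * c_in * c_out


def output_channels(cfg: InceptionConfig) -> int:
    # Branch outputs are concatenated along the channel axis.
    return cfg.n1x1 + cfg.n3x3 + cfg.n5x5 + cfg.pool_proj


def weights_with_reduction(cfg: InceptionConfig) -> int:
    return (conv_weights(1, cfg.c_in, cfg.n1x1)
            + conv_weights(1, cfg.c_in, cfg.n3x3_reduce) + conv_weights(3, cfg.n3x3_reduce, cfg.n3x3)
            + conv_weights(1, cfg.c_in, cfg.n5x5_reduce) + conv_weights(5, cfg.n5x5_reduce, cfg.n5x5)
            + conv_weights(1, cfg.c_in, cfg.pool_proj))


def naive_output_channels(cfg: InceptionConfig) -> int:
    # Without a projection, the pooling branch keeps all c_in channels.
    return cfg.n1x1 + cfg.n3x3 + cfg.n5x5 + cfg.c_in


def naive_weights(cfg: InceptionConfig) -> int:
    return (conv_weights(1, cfg.c_in, cfg.n1x1)
            + conv_weights(3, cfg.c_in, cfg.n3x3)
            + conv_weights(5, cfg.c_in, cfg.n5x5))


inception_3a = InceptionConfig(c_in=192, n1x1=64, n3x3_reduce=96, n3x3=128,
                               n5x5_reduce=16, n5x5=32, pool_proj=32)

print([same_padded_size(28, k) for k in (1, 3, 5)])  # every branch keeps 28x28
print(output_channels(inception_3a), weights_with_reduction(inception_3a))
print(naive_output_channels(inception_3a), naive_weights(inception_3a))
```

In this sketch the reduced module outputs 256 channels with about 163K weights, while the naive version outputs 416 channels and needs about 387K. The gap grows as modules are stacked, because each naive module hands even more channels to the next one.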

Global average pooling is a technique used in many CNNs to reduce the total number of parameters and minimise overfitting. It is usually placed at the end of the architecture, in place of large fully connected layers.

Global average pooling averages each channel over its full width and height separately. This reduces the feature map to a vector whose length equals the number of channels; in GoogLeNet, for example, a 7×7×1024 feature map becomes a 1×1×1024 vector. Each element of the vector summarises how strongly its channel was activated across the entire feature map.

Average pooling differs from max pooling in that it takes the mean of each window rather than its maximum. To learn more about max pooling, head to this article, which explains the method in more detail: Understanding Convolutional Neural Networks: Part 1

In the GoogLeNet architecture, replacing fully connected layers with global average pooling improved top-1 accuracy by about 0.6%, although dropout remained essential. Global average pooling sits at the end of the network, where it summarises the features learned by the CNN and passes them, through a dropout layer and a single linear layer, to the softmax classifier.
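Here is a minimal sketch of global average pooling on a feature map stored as nested lists (channels × height × width), followed by a weight count comparing a classifier on the flattened 7×7×1024 map with one on the pooled vector. The 1000-class output and the function names are illustrative assumptions, and biases are ignored.

```python
def global_average_pool(feature_map: list[list[list[float]]]) -> list[float]:
    """Average each channel (an H x W grid) down to a single number."""
    pooled = []
    for channel in feature_map:
        total = sum(sum(row) for row in channel)
        count = sum(len(row) for row in channel)
        pooled.append(total / count)
    return pooled


def linear_head_weights(height: int, width: int, channels: int,
                        num_classes: int, pooled: bool) -> int:
    """Weights of one linear layer mapping features to class scores."""
    features = channels if pooled else height * width * channels
    return features * num_classes


fmap = [
    [[1.0, 3.0], [5.0, 7.0]],   # channel 0
    [[0.0, 0.0], [0.0, 8.0]],   # channel 1
]
print(global_average_pool(fmap))  # [4.0, 2.0]

print(linear_head_weights(7, 7, 1024, 1000, pooled=False))  # 50176000
print(linear_head_weights(7, 7, 1024, 1000, pooled=True))   # 1024000
```

The pooling step itself has no parameters, so in this example the classifier on top shrinks by a factor of 49, the number of spatial positions.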

Auxiliary classifiers help overcome a problem commonly faced by deep neural networks, the vanishing gradient problem. They branch off from the side of the network and are used only during training; they are discarded at inference time.

The GoogLeNet architecture contains two auxiliary classifiers, attached to the outputs of the Inception (4a) and (4d) modules. These positions are chosen so that the features are deep enough to support a meaningful prediction, yet still well before the final output classifier. Each auxiliary classifier has the following structure:

- A mean pooling layer with a 5×5 window and stride 3.

- A 1×1 convolution with 128 filters for dimension reduction, followed by a ReLU activation.

- Two fully connected layers: the first has 1024 units (with ReLU) and is followed by a dropout layer that drops 70% of its outputs; the second has one output per class in the task.

- A SoftMax layer to output the prediction possibilities.

These auxiliary classifiers give the gradient a shorter path back to the earlier layers, so it does not shrink too quickly as it propagates backwards. They also act as a regulariser: during training, each auxiliary loss is added to the total loss with a weight of 0.3, which encourages the intermediate layers to learn useful, discriminative features instead of the network relying too heavily on specific features or layers near the output. This reduces the chance of overfitting. Together, these effects make training such a deep network practical.
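The sketch below traces the shapes through one auxiliary head and shows how its loss enters the training objective. It assumes the 14×14×512 output of Inception (4a) and 1000 classes, as in the ImageNet setup; the function names are hypothetical, and the 0.3 weight follows the original paper. At inference time only the main classifier's output is used.

```python
def pool_output_size(size: int, kernel: int, stride: int) -> int:
    """Output size of an unpadded pooling window sliding with the given stride."""
    return (size - kernel) // stride + 1


def aux_head_shapes(size: int, c_in: int, num_classes: int = 1000) -> dict:
    pooled = pool_output_size(size, kernel=5, stride=3)   # 5x5 average pooling, stride 3
    return {
        "after_pool": (pooled, pooled, c_in),
        "after_1x1": (pooled, pooled, 128),               # 1x1 conv with 128 filters
        "flattened": pooled * pooled * 128,
        "fc": 1024,                                       # first fully connected layer
        "logits": num_classes,                            # second fully connected layer
    }


def total_training_loss(main_loss: float, aux_losses: list[float],
                        aux_weight: float = 0.3) -> float:
    """Training objective: main loss plus down-weighted auxiliary losses."""
    return main_loss + aux_weight * sum(aux_losses)


print(aux_head_shapes(14, 512))
print(total_training_loss(2.0, [2.5, 2.2]))
```

For the (4a) head this gives a 4×4×512 pooled map and 2048 features after the 1×1 convolution and flattening, which the fully connected layers then map to class scores.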

GoogLeNet (Inception v1) was very well received by the computer vision community after winning the ILSVRC 2014 classification challenge. As a result, several variants were developed to improve the architecture further, including Inception-v2, v3, v4 and the Inception-ResNet hybrids. Each of these models introduced key improvements and optimisations, addressed various challenges, and pushed the boundaries of what was possible with CNN architectures.

- Inception v2 (2015): The second version added batch normalisation and refined the inception modules by factorising larger convolutions into smaller, more efficient ones, for example replacing a 5×5 convolution with two stacked 3×3 convolutions. These changes improved accuracy and reduced training time.

- Inception v3 (2015): The v3 model further refined Inception v2 by factorising 7×7 convolutions into asymmetric 1×7 and 7×1 pairs, adding label smoothing and batch-normalised auxiliary classifiers, and training with the RMSProp optimiser.

- Inception v4 and Inception-ResNet v2 (2016): Inception v4 simplified and scaled up the Inception design, while the Inception-ResNet variants brought residual connections (inspired by ResNet) into the Inception lineage, which markedly sped up training and gave a further accuracy gain.
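The factorisation idea behind Inception v2 and v3 is easy to quantify. The sketch below counts weights (biases ignored) for layers with equal input and output channels; the channel count of 64 is an arbitrary assumption, since the ratios do not depend on it.

```python
def conv_weights(kernel_h: int, kernel_w: int, c_in: int, c_out: int) -> int:
    return kernel_h * kernel_w * c_in * c_out


channels = 64

# Inception v2 style: one 5x5 convolution vs two stacked 3x3 convolutions
# (both cover a 5x5 receptive field).
full_5x5 = conv_weights(5, 5, channels, channels)
two_3x3 = 2 * conv_weights(3, 3, channels, channels)

# Inception v3 style: one 7x7 convolution vs a 1x7 followed by a 7x1.
full_7x7 = conv_weights(7, 7, channels, channels)
asym_7 = conv_weights(1, 7, channels, channels) + conv_weights(7, 1, channels, channels)

print(f"5x5 -> 2 x 3x3: {1 - two_3x3 / full_5x5:.0%} fewer weights")
print(f"7x7 -> 1x7 + 7x1: {1 - asym_7 / full_7x7:.0%} fewer weights")
```

Both factorisations keep the receptive field the same while cutting weights and computation (by 28% and about 71% here), and when an activation is placed between the two smaller layers, the block also gains an extra non-linearity.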

Key takeaways:

- GoogLeNet introduced inception modules, revolutionising CNN design by enabling parallel processing at multiple scales.

- Its architecture addressed challenges such as vanishing gradients and computational cost, improving both accuracy and efficiency.

- Global average pooling replaced most of the fully connected layers, improving accuracy and reducing overfitting.

- Auxiliary classifiers aided gradient flow and regularisation, making training more effective and preventing over-reliance on specific features.