In today's fast-paced technological landscape, the ability to deploy and manage machine learning models efficiently is crucial for businesses that want to deliver cutting-edge AI-powered applications. Kubernetes has emerged as a leading platform for deploying containerized applications at scale, and KServe, an open-source, Kubernetes-based serverless framework, offers a powerful way to deploy and manage machine learning models. In this blog, we'll explore the concepts behind KServe's inference graphs and inference services, and walk through practical examples using YAML files.

What are Inference Graphs?

An inference graph in KServe is a directed acyclic graph (DAG) that orchestrates the flow of data between multiple machine learning models during inference. Custom inference Docker images acting as models can also take part, but in this blog we'll limit our scope to plain ML models.

- InferenceGraph: It’s made up with an inventory of routing

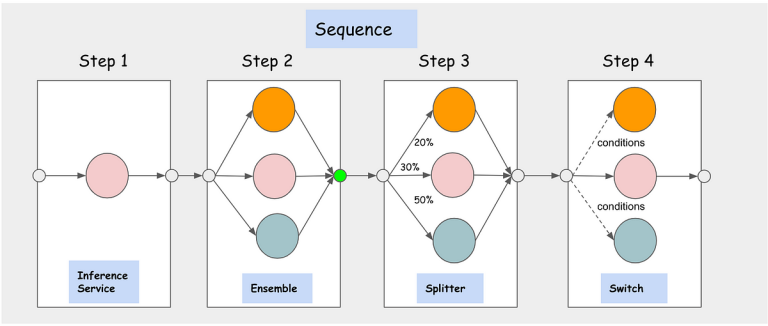

Nodes, everyNodeconsists of a set of routingSteps. EveryStepcan both path to anInferenceService(we’ll see later within the weblog, what’s Inference Service) or one otherNodeoutlined on the graph which makes theInferenceGraphextremely composable. The Inference graph’s router is deployed behind an HTTP endpoint and could be scaled dynamically based mostly on request quantity. TheInferenceGraphhelps 4 various kinds of RoutingNodes: Sequence, Swap, Ensemble, Splitter. - Sequence Node: It permits customers to outline a number of

StepswithInferenceServicesorNodesas routing targets in a sequence. TheStepsare executed in sequence and the request/response from earlier step could be handed to the following step as enter based mostly on configuration. - Swap Node: It permits customers to outline routing situations and choose a step to execute if it matches the situation, the response is returned as quickly it finds step one that matches the situation. If no situation is matched, the graph returns the unique request.

- Ensemble Node: A mannequin ensemble requires scoring every mannequin individually after which combining the outcomes right into a single prediction response. You may then use completely different mixture strategies to provide the ultimate end result. A number of classification timber, for instance, are generally mixed utilizing a “majority vote” technique. A number of regression timber are sometimes mixed utilizing numerous averaging strategies.

- Splitter Node: It permits customers to separate the site visitors to a number of targets utilizing a weighted distribution.
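To make the Ensemble and Splitter ideas concrete, here is a minimal, framework-free Python sketch. It is not KServe's router code (the real router is written in Go); the function names, target names, weights, and sample predictions are hypothetical and only illustrate majority voting, averaging, and weighted traffic splitting.

```python
import random
from collections import Counter
from statistics import mean


def majority_vote(labels):
    """Ensemble of classifiers: return the label predicted by the most models."""
    # most_common(1) returns [(label, count)]; ties go to the label seen first
    return Counter(labels).most_common(1)[0][0]


def average(values):
    """Ensemble of regressors: combine numeric predictions by simple averaging."""
    return mean(values)


def pick_split_target(weights, rng=random):
    """Splitter: choose a target with probability proportional to its weight.

    `weights` maps a (hypothetical) target name to a weight, e.g. a percentage.
    """
    targets = list(weights)
    return rng.choices(targets, weights=[weights[t] for t in targets], k=1)[0]


if __name__ == "__main__":
    print(majority_vote(["cat", "dog", "cat"]))  # cat
    print(average([2.0, 3.0, 4.0]))              # 3.0
    # Roughly 80% of simulated requests go to model-a and 20% to model-b
    rng = random.Random(0)
    picks = Counter(pick_split_target({"model-a": 80, "model-b": 20}, rng)
                    for _ in range(1000))
    print(picks)
```

A seeded `random.Random` is passed in so the split can be reproduced; in a real graph the router makes this choice per request.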

Going forward, we'll look at an example that combines a Sequence Node with Switch-like conditional steps.

Introduction to Inference Services

Inference services in KServe provide a convenient abstraction for deploying and managing machine learning models. Each inference service encapsulates one or more models and exposes an HTTP prediction endpoint for making inference requests. Inference services handle model versioning, scaling, and monitoring, which simplifies the operational side of running machine learning models in production.

How to start: first, you need the models that you want to orchestrate, right? Next, you need to expose these models so that they can be incorporated into an InferenceService. For this, you have the following approaches:

a) Train a model with basic inference code and package it following a specific format. Check this link here.

b) Write a custom inference service with advanced parsing features (which might internally call a model endpoint, assuming you have already deployed your model separately on some cloud provider, for example AWS SageMaker). Containerize the inference service code and push the image to a registry such as Artifactory. This approach comes in handy if you want to deploy inference code that involves custom logic, and also if you want multiple inference services to use the same model code.
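For approach a) with the PyTorch predictor used in this blog, KServe's TorchServe examples lay out the `storageUri` directory as a `config/config.properties` file plus a `model-store/` directory holding one or more `.mar` archives. The sketch below checks that layout locally before you upload it to S3. It assumes that layout; the function name is hypothetical, and the contents of `config.properties` depend on your model and KServe version.

```python
from pathlib import Path


def check_torchserve_layout(root):
    """Return a list of problems found in a local TorchServe model directory.

    Assumed layout (mirroring KServe's TorchServe examples):
        root/config/config.properties
        root/model-store/<model>.mar
    """
    root = Path(root)
    problems = []
    if not (root / "config" / "config.properties").is_file():
        problems.append("missing config/config.properties")
    store = root / "model-store"
    if not store.is_dir():
        problems.append("missing model-store/ directory")
    elif not any(store.glob("*.mar")):
        problems.append("no .mar archive in model-store/")
    return problems


if __name__ == "__main__":
    import sys

    # Pass the local directory you plan to upload, e.g. high_level_classification/
    issues = check_torchserve_layout(sys.argv[1] if len(sys.argv) > 1 else ".")
    print("layout OK" if not issues else "\n".join(issues))
```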

In this blog, we'll only look at the first approach. We will use a two-step example: in the first step, we'll try to identify a given image as either an animal or a plant. If it's an animal, we send it to the fine-grained animal classifier model; if it's a plant, we send it to the plant classifier model. So, overall, we'll use three models.
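Before writing any YAML, the intended routing can be sketched in plain Python. The three classifier callables below are hypothetical stand-ins for the three inference services; the sketch only shows the control flow the graph should implement: classify coarsely first, then forward the original image to exactly one fine-grained model.

```python
from typing import Callable, Dict

# Hypothetical stand-in type for an inference service: raw image bytes -> label
Classifier = Callable[[bytes], str]


def classify_image(image: bytes,
                   high_level: Classifier,
                   fine_grained: Dict[str, Classifier]) -> dict:
    """Two-step classification: coarse label first, then a label-specific model."""
    coarse = high_level(image)             # step 1: e.g. "animal" or "plant"
    specialist = fine_grained.get(coarse)  # pick the model registered for that label
    if specialist is None:
        # No matching branch: return only the coarse result
        return {"coarse": coarse, "fine": None}
    # Step 2: the ORIGINAL image (not step 1's output) goes to the specialist
    return {"coarse": coarse, "fine": specialist(image)}


if __name__ == "__main__":
    fake_image = b"not-a-real-image"  # placeholder bytes
    result = classify_image(
        fake_image,
        high_level=lambda img: "animal",
        fine_grained={
            "animal": lambda img: "tabby cat",
            "plant": lambda img: "fern",
        },
    )
    print(result)  # {'coarse': 'animal', 'fine': 'tabby cat'}
```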

Let's define the inference_services.yaml file.

```yaml
apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "high-level-classification-service"
spec:
  predictor:
    pytorch:
      resources:
        requests:
          cpu: 100m
      storageUri: "s3://sdccoding/high_level_classification"
---
apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "animal-fine-grained-classification-service"
spec:
  predictor:
    pytorch:
      resources:
        requests:
          cpu: 100m
      storageUri: "s3://sdccoding/animal_fine_grained_classification"
---
apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "plant-fine-grained-classification-service"
spec:
  predictor:
    pytorch:
      resources:
        requests:
          cpu: 100m
      storageUri: "s3://sdccoding/plant_fine_grained_classification"
```

Let us deploy the individual inference services first, and then check whether they were deployed.

```shell
kubectl apply -f inference_services.yaml
kubectl get inferenceservice
```

We see the following output:

```
NAME                                         URL                                                 READY   DEFAULT TRAFFIC   AGE
high-level-classification-service            http://high-level-classification-service            True    100               10m
animal-fine-grained-classification-service   http://animal-fine-grained-classification-service   True    100               8m
plant-fine-grained-classification-service    http://plant-fine-grained-classification-service    True    100               8m
```
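If you prefer a scripted check over reading the table, the readiness state can be read from `kubectl get inferenceservice -o json`. This is a minimal sketch, assuming each item reports a `Ready` entry under `status.conditions` (as KServe resources do); `not_ready` is a hypothetical helper name, and the same function works on the JSON of `kubectl get ig`.

```python
import json
import shutil
import subprocess


def not_ready(resources: dict) -> list:
    """Return names of items in a `kubectl get ... -o json` list that are not Ready."""
    pending = []
    for item in resources.get("items", []):
        conditions = item.get("status", {}).get("conditions", [])
        ready = any(c.get("type") == "Ready" and c.get("status") == "True"
                    for c in conditions)
        if not ready:
            pending.append(item["metadata"]["name"])
    return pending


if __name__ == "__main__":
    # Requires kubectl configured against the cluster that runs KServe
    if shutil.which("kubectl") is None:
        print("kubectl not found on PATH")
    else:
        try:
            raw = subprocess.run(
                ["kubectl", "get", "inferenceservice", "-o", "json"],
                check=True, capture_output=True, text=True,
            ).stdout
        except subprocess.CalledProcessError as err:
            print("kubectl failed:", err.stderr)
        else:
            pending = not_ready(json.loads(raw))
            print("all Ready" if not pending else f"not Ready: {pending}")
```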

To quickly check whether the inference services are working as expected, forward a local port to the service's port inside the Kubernetes cluster (EKS here), and then make a POST request with a base64-encoded image to the local port you have forwarded.

```shell
kubectl get pods -l serving.kserve.io/inferenceservice=high-level-classification-service
kubectl port-forward pod/<predictor-pod-name> 8080:8080
```
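With the port-forward running, a test request can be built with the Python standard library. This is a sketch under assumptions: it uses the KServe v1 prediction path `/v1/models/<model-name>:predict` and the `{"instances": [{"data": <base64>}]}` body used in KServe's TorchServe image-classification examples. The model name (assumed here to match the service name), the image path, and the helper name are hypothetical and depend on how your model was packaged.

```python
import base64
import json
import urllib.request


def build_predict_request(image_bytes: bytes, model_name: str,
                          host: str = "http://localhost:8080") -> urllib.request.Request:
    """Build a v1-protocol POST request carrying one base64-encoded image."""
    body = {"instances": [{"data": base64.b64encode(image_bytes).decode("ascii")}]}
    return urllib.request.Request(
        url=f"{host}/v1/models/{model_name}:predict",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    import sys

    # Usage (hypothetical script name): python send_image.py path/to/image.jpg
    if len(sys.argv) > 1:
        with open(sys.argv[1], "rb") as f:
            req = build_predict_request(f.read(), "high-level-classification-service")
        with urllib.request.urlopen(req) as resp:  # needs the port-forward above
            print(json.loads(resp.read()))
```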

Once the individual inference services are deployed, let's define the inference graph in inference_graph.yaml, deploy it, and then check the deployed version.

```yaml
apiVersion: "serving.kserve.io/v1alpha1"
kind: "InferenceGraph"
metadata:
  name: "image-processing-graph"
spec:
  nodes:
    root:
      routerType: Sequence
      steps:
        - serviceName: high-level-classification-service # Must match the name of a declared InferenceService.
          name: high_level_classification_service # step name
        - serviceName: animal-fine-grained-classification-service
          name: animal_fine_grained_classification_service
          data: $request # Send the original request (the image), not the previous step's response.
          condition: "[@this].#(predictions.0==\"animal\")" # Acts like a switch case: runs only if the previous step predicted "animal".
        - serviceName: plant-fine-grained-classification-service
          name: plant_fine_grained_classification
          data: $request
          condition: "[@this].#(predictions.0==\"plant\")"
```
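The `condition` strings are GJSON queries that KServe's router evaluates against the previous step's JSON response; the step is taken only when the query matches. `[@this]` wraps the whole response in an array, and `#(predictions.0=="animal")` selects the element whose first prediction is "animal". The Python function below is a hypothetical stand-in that mimics only this one query shape, to show which responses would match; it is not a GJSON implementation.

```python
import json


def first_prediction_is(response_json: str, label: str) -> bool:
    """Mimic `[@this].#(predictions.0=="<label>")`: does predictions[0] equal label?"""
    try:
        doc = json.loads(response_json)
    except json.JSONDecodeError:
        return False
    preds = doc.get("predictions") if isinstance(doc, dict) else None
    return isinstance(preds, list) and len(preds) > 0 and preds[0] == label


if __name__ == "__main__":
    step1_response = '{"predictions": ["animal"]}'  # hypothetical high-level output
    print(first_prediction_is(step1_response, "animal"))  # True
    print(first_prediction_is(step1_response, "plant"))   # False
```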

```shell
kubectl apply -f inference_graph.yaml
kubectl get ig image-processing-graph
```

We see the following output:

```
NAME                     URL                                                       READY   AGE
image-processing-graph   http://image-processing-graph.default.svc.cluster.local   True    1m
```

To quickly try things out, use port-forwarding, just as we did for the inference services. Keep in mind that port-forwarding exposes the service to your local machine only and should be used for debugging or development, not for production traffic. Alternatively, follow this approach here.

This is just an introductory blog. I will keep adding to it over time.