In process design analysis, we usually have to take into account a number of variables, which may produce conflicting objectives. Nonetheless, we have to make a decision at the end of the day.

It’s widely known that humans struggle to deal with too much information at the same time, so how can we be more “data-driven” in our decisions if the number of important variables keeps increasing?

A good approach is to “focus” on the important parts of the information. From a human perspective, this is equivalent to summarizing texts or selecting the information “manually”. From a computational perspective, this means reducing the dimensionality of the data available for analysis.

One of the most common techniques for reducing the dimensionality of a dataset is Principal Component Analysis (PCA, for short). In this technique, new coordinates are computed for each datapoint by projecting the original values into a lower-dimensional space, in such a way that most of the original data variance is preserved in the first components. We typically end up with a new dataset with fewer columns than the original, but representing (almost) the same amount of information.
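To make the idea concrete, here is a minimal sketch of PCA written with NumPy's SVD. The helper name `pca_explained_variance` and the synthetic data (three columns driven by one hidden factor) are assumptions made for illustration, not part of the study.

```python
import numpy as np

def pca_explained_variance(X):
    """Return (scores, explained_variance_ratio) for a data matrix X (rows = samples)."""
    Xc = X - X.mean(axis=0)                      # center each column
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    scores = Xc @ Vt.T                           # coordinates in the principal-component basis
    var = S**2 / (X.shape[0] - 1)                # variance captured by each component
    return scores, var / var.sum()

rng = np.random.default_rng(0)
t = rng.normal(size=500)
# Synthetic data: three columns that are noisy linear functions of one hidden factor t
X = np.column_stack([t, 2 * t, -t]) + 0.1 * rng.normal(size=(500, 3))

scores, ratio = pca_explained_variance(X)
print(ratio.round(3))   # the first component should carry almost all of the variance
```

Because the three columns are linearly related, a single component is enough to describe them, which is exactly the situation where PCA shines.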

However, PCA only works for linear relationships between variables. And it’s not uncommon for the variables of a process techno-economic analysis to be related to each other in a non-linear way, which may limit PCA’s application. In this article, let’s talk about a neural network alternative called Self-Organizing Maps, or SOMs.

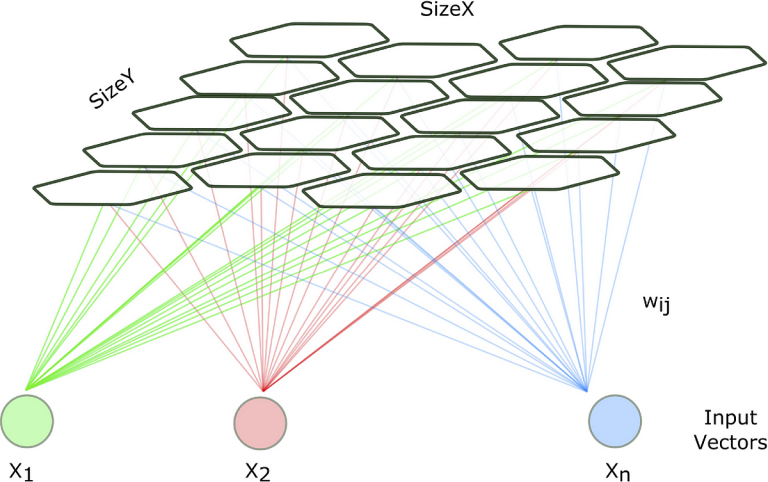

SOMs, also known as Kohonen maps (they were created by Teuvo Kohonen in the 1980s), are a type of neural network used for unsupervised learning. They also act as a dimensionality reduction technique.

The main characteristic of a SOM is its network structure. While some neural networks have an “input-hidden-output” structure, SOMs don’t have an output layer. They simply consist of a grid of nodes (neurons) arranged in a two-dimensional lattice. Each neuron is associated with a weight vector of the same dimensionality as the input data.

Each weight works as a “node feature”. In other words, the weights act as the node’s coordinates in the input data space.

But how does a SOM learn patterns?

Training a SOM essentially means finding which neurons have the weight values that are closest to each input vector. Naturally, if the inputs present certain patterns, they tend to stay close to the same neurons. This is called “winning neuron activation”.

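The distances between the inputs and the weight vectors of each node are calculated using the Euclidean formula:

$$d(x, w_j) = \sqrt{\sum_{i=1}^{n} (x_i - w_{j,i})^2}$$

where $x$ is the input vector, $w_j$ is the weight vector of node $j$, and $n$ is the number of input variables.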

The neuron closest to each input vector (together with a defined neighborhood of neurons around it) gets activated and has its weights updated. The size of this neighborhood is tunable (i.e., it’s a hyperparameter), and the intensity of the update decreases with the distance between each neuron in the neighborhood and the winning neuron.

This is because we want to avoid the same neuron getting activated on every row, never giving the others a chance to be updated. It also allows the creation of a “region” that represents a given pattern in the data.
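The training step described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `som_train_step`, the Gaussian neighborhood, and the values of `learning_rate` and `sigma` are choices made for this example, not the settings used in the study.

```python
import numpy as np

def som_train_step(weights, x, learning_rate=0.5, sigma=1.0):
    """One SOM update for a single input vector x.

    weights: array of shape (rows, cols, n_features); x: array of shape (n_features,).
    Returns the updated weights and the grid position of the winning neuron.
    """
    rows, cols, _ = weights.shape
    # Euclidean distance from x to every neuron's weight vector
    dists = np.linalg.norm(weights - x, axis=2)
    bmu = np.unravel_index(np.argmin(dists), (rows, cols))   # winning neuron
    # Squared distance, measured on the grid, from every neuron to the winner
    r, c = np.indices((rows, cols))
    grid_d2 = (r - bmu[0]) ** 2 + (c - bmu[1]) ** 2
    # Gaussian neighborhood (an assumption): update strength decays with grid distance
    h = np.exp(-grid_d2 / (2 * sigma**2))
    new_weights = weights + learning_rate * h[:, :, None] * (x - weights)
    return new_weights, bmu

rng = np.random.default_rng(42)
weights = rng.random((5, 5, 3))          # 5x5 map, 3 input variables
x = np.array([0.2, 0.8, 0.5])            # one (synthetic) input row
new_weights, bmu = som_train_step(weights, x)
print("winning neuron:", bmu)
```

The winner moves the most towards the input, its neighbors move a little, and distant neurons barely change. Repeating this step over all rows, usually while shrinking `sigma` and `learning_rate`, is what organizes the map.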

More technical details of SOM training can be found at: https://medium.com/machine-learning-researcher/self-organizing-map-som-c296561e2117

When we are dealing with the design of a new process, it’s not uncommon that we do not know in advance the values of some variables and premises needed to evaluate the viability of the project. Nonetheless, we need to calculate some indicators and indexes that will point the project in the right direction.

A common approach is to use the Monte Carlo simulation technique, which consists of sampling several values for the input variables (the premises) and trying to understand how they relate to the resulting distribution of the outputs. But these input variables are usually sampled independently from each other, which may hamper PCA results, as the following analysis shows.
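As a rough illustration of this sampling scheme, the sketch below draws three premises independently and feeds them to a toy output formula. The variable names, distributions, and formula are all hypothetical and are not the model used in this study.

```python
import numpy as np

rng = np.random.default_rng(1)
n_sim = 5000   # number of simulated scenarios

# Hypothetical premises, each sampled independently from its own distribution
feedstock_cost = rng.uniform(20.0, 60.0, n_sim)       # arbitrary units
capex = rng.normal(100.0, 15.0, n_sim)                # arbitrary units
fuel_yield = rng.triangular(0.2, 0.3, 0.4, n_sim)     # fraction of feedstock

# Toy output (NOT the study's model): a cost-per-unit-of-product indicator
indicator = (feedstock_cost + 0.1 * capex) / fuel_yield

inputs = np.column_stack([feedstock_cost, capex, fuel_yield])
corr = np.corrcoef(inputs, rowvar=False)
max_offdiag = np.abs(corr - np.eye(3)).max()
print(round(max_offdiag, 3))   # largest correlation between two different inputs
```

Since each premise is drawn on its own, the correlations between inputs stay close to zero, and that is precisely what makes life hard for PCA.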

The use case of this study will be better explained in part 2, but the data represents a techno-economic analysis of a eucalyptus-based biorefinery to produce jet fuel. The data consists of 5000 simulations (rows). Each row contains the sampled values of several input variables that may affect the feasibility of the project.

The goal is to analyze the data and understand the profiles that lead to a more competitive scenario. The “competitiveness factor” here was studied as the minimum selling price (MSP) of the jet fuel, an important economic parameter to track if we’re going to “attack” the fossil fuel market.

Note how PCA cannot “select” a smaller number of components that accumulate most of the original variance, because the variance appears to be equally distributed across the components. Since the simulation input variables are practically independent from each other, PCA struggles to provide dimensionality reduction.
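This behavior is easy to reproduce on synthetic data. The sketch below assumes eight independent, normally distributed inputs (an arbitrary choice, not the study's variables) and computes the share of variance held by each principal component.

```python
import numpy as np

rng = np.random.default_rng(7)
X = rng.normal(size=(5000, 8))                 # 8 independent, synthetic inputs
Xz = (X - X.mean(axis=0)) / X.std(axis=0)      # standardize each column

# Eigenvalues of the correlation matrix = variance captured by each component
eigvals = np.linalg.eigvalsh(np.corrcoef(Xz, rowvar=False))[::-1]
ratio = eigvals / eigvals.sum()
cumulative = np.cumsum(ratio)

print(ratio.round(3))        # every component holds roughly 1/8 of the variance
print(cumulative.round(3))   # the cumulative curve grows almost linearly
```

With nothing correlated to compress, every component is about as important as any other, so dropping components means dropping information.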

Even if we take the first two components of the projected data, it’s hard to find patterns in them. In addition, we would be retaining only about 30% of the original variance. As shown in the image below, we cannot isolate a region that presents more competitive (i.e., lower) values of MSP, although we can spot a tendency for lower values of PC2 and higher values of PC1 to have lower MSP.

We can also try to apply a clustering algorithm to the already projected data. In this study we applied the KMeans algorithm, and the number of clusters was chosen using the Silhouette score.
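For readers who want to see the mechanics, here is a self-contained NumPy sketch of k-means plus the silhouette score, used to pick the number of clusters. It is a simplified stand-in, not the code used in the study: the helper names are made up, the initialization is a deterministic farthest-point rule, and the data is three well-separated synthetic blobs. In practice a library implementation such as scikit-learn's `KMeans` and `silhouette_score` would normally be used.

```python
import numpy as np

def pairwise_dist(A, B):
    """Euclidean distance between every row of A and every row of B."""
    return np.linalg.norm(A[:, None, :] - B[None, :, :], axis=2)

def kmeans(X, k, n_iter=50):
    """Lloyd's algorithm with farthest-point initialization (a simplification)."""
    centers = [X[0]]
    for _ in range(k - 1):
        d = pairwise_dist(X, np.array(centers)).min(axis=1)
        centers.append(X[np.argmax(d)])        # point farthest from current centers
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = pairwise_dist(X, centers).argmin(axis=1)
        # Recompute each center; keep the old one if a cluster ends up empty
        centers = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                            else centers[j] for j in range(k)])
    return pairwise_dist(X, centers).argmin(axis=1)

def silhouette_score(X, labels):
    """Mean silhouette coefficient over all points."""
    D = pairwise_dist(X, X)
    clusters = np.unique(labels)
    s = np.zeros(len(X))
    for i in range(len(X)):
        own = labels == labels[i]
        if own.sum() == 1:
            continue                            # singleton cluster: score stays 0
        a = D[i, own].sum() / (own.sum() - 1)   # mean distance within own cluster
        b = min(D[i, labels == c].mean() for c in clusters if c != labels[i])
        s[i] = (b - a) / max(a, b)
    return s.mean()

rng = np.random.default_rng(5)
blob_centers = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
X = np.vstack([c + 0.5 * rng.normal(size=(50, 2)) for c in blob_centers])

scores = {k: silhouette_score(X, kmeans(X, k)) for k in range(2, 6)}
best_k = max(scores, key=scores.get)
print(scores, best_k)
```

The number of clusters with the highest score is kept. A score close to 1 means tight, well-separated clusters, while a score close to 0 means the clusters overlap.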

Even though we can spot a maximum silhouette score at 3 clusters, the score itself is low. This means that, even though the data could be split into clusters, the clusters are not very distinct from one another. Let’s take a quick look at the descriptive statistics table for the MSP (minimum selling price of the jet fuel), after grouping the data by the identified clusters:

The distributions were not symmetric, as we can observe from the slight difference between means and medians. Hence, the confidence intervals were calculated using the percentiles of a bootstrapped sample.
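A percentile bootstrap interval can be sketched as follows. The helper `bootstrap_ci`, the choice of the median as the statistic, the number of resamples, and the skewed synthetic sample are assumptions made for this example, so the numbers it prints have nothing to do with the study's results.

```python
import numpy as np

def bootstrap_ci(values, stat=np.median, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for stat(values)."""
    rng = np.random.default_rng(seed)
    values = np.asarray(values)
    # Resample with replacement and recompute the statistic on each resample
    idx = rng.integers(0, len(values), size=(n_boot, len(values)))
    boot_stats = stat(values[idx], axis=1)
    lower, upper = np.percentile(boot_stats, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return lower, upper

rng = np.random.default_rng(3)
sample = rng.lognormal(mean=0.0, sigma=0.5, size=400)   # synthetic, right-skewed data
low, high = bootstrap_ci(sample)
print(low, np.median(sample), high)
```

Because the interval comes straight from the percentiles of the resampled statistic, it does not assume a symmetric distribution, which is why it suits skewed data.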

Although the difference is small, a Kruskal-Wallis test was performed on these samples and it indicated a statistically significant difference between the groups (p < 0.001), which means we were successful in our task of finding a group that presents a lower MSP.

But before we go celebrate, let’s take a step back and do some critical analysis of the approach we’ve taken:

- To find the “best” cluster, we had to implement two sequential models, which increases maintenance effort and complexity. In addition, it may hamper model interpretability.

- Recall that PCA only captures linear relationships. Would the non-linear effects be of any help here?

- The clustering approach was carried out over only the first two components (only 30% of the original variance). What about the remaining 70%?

The answers to these questions will be found in part 2 of this article! See you there!