Introduction

In an era where artificial intelligence is reshaping industries, harnessing the power of Large Language Models (LLMs) has become crucial for innovation and efficiency. Imagine a world where customer service chatbots not only understand but anticipate your needs, or where complex data analysis tools deliver insights instantly. To unlock such potential, businesses must master the art of LLM serving: transforming these models into high-performance, real-time applications. This article delves into the intricacies of efficiently serving and deploying LLMs, providing a comprehensive guide to the best platforms, optimization strategies, and practical examples to ensure your AI solutions are both powerful and responsive.

Learning Objectives

- Understand the concept of LLM deployment and its importance in real-time applications.

- Explore various frameworks for serving LLMs, including their key features and use cases.

- Gain hands-on experience with template code for deploying LLMs using different serving frameworks.

- Learn to compare and benchmark LLM serving frameworks based on latency and throughput.

- Identify the best-case scenarios for using the appropriate LLM serving framework in different applications.

This article was published as a part of the Data Science Blogathon.

What’s Triton Inference Server?

Triton Inference Server is a powerful platform for deploying and scaling machine learning models in production environments. Developed by NVIDIA, it supports multiple frameworks such as TensorFlow, PyTorch, ONNX, and custom backends.

Key Features

- Model Management: Dynamic model loading/unloading, version control.

- Inference Optimization: Multi-model ensembles, batching, and dynamic batching.

- Metrics and Logging: Integration with Prometheus for monitoring.

- Accelerator Support: GPU, CPU, and DLA support.
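Dynamic batching, listed above, means the server holds incoming requests briefly and groups them into one batch, dispatching either when the batch is full or when the oldest waiting request has hit a delay limit. The minimal pure-Python sketch below simulates that policy on made-up arrival times. `dynamic_batches`, `max_batch_size`, and `max_delay_ms` are illustrative names loosely analogous to Triton's `max_batch_size` and `max_queue_delay_microseconds` settings; this is not Triton's actual scheduler.

```python
from typing import List, Tuple


def dynamic_batches(arrivals_ms: List[float], max_batch_size: int,
                    max_delay_ms: float) -> List[Tuple[float, List[int]]]:
    """Group request indices into batches (illustrative sketch).

    A batch is dispatched as soon as it holds max_batch_size requests, or once
    max_delay_ms has passed since its first request arrived, whichever is first.
    Returns (dispatch_time_ms, request_indices) pairs. Arrivals must be sorted.
    """
    batches: List[Tuple[float, List[int]]] = []
    current: List[int] = []
    deadline = 0.0
    for idx, t in enumerate(arrivals_ms):
        # Flush the pending batch if its delay window expired before this arrival
        if current and t > deadline:
            batches.append((deadline, current))
            current = []
        if not current:
            deadline = t + max_delay_ms  # the window opens with the first request
        current.append(idx)
        if len(current) == max_batch_size:
            batches.append((t, current))  # full batch: dispatch immediately
            current = []
    if current:
        batches.append((deadline, current))  # last partial batch waits out its window
    return batches


if __name__ == "__main__":
    arrivals = [0.0, 1.0, 2.0, 10.0, 30.0, 31.0]
    for when, idxs in dynamic_batches(arrivals, max_batch_size=3, max_delay_ms=5.0):
        print(f"dispatch at {when:.1f} ms -> requests {idxs}")
```

The trade-off the two parameters encode: a larger delay window produces fuller batches (better GPU utilization, higher throughput) at the cost of extra queueing latency for the first request in each batch.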

Setup and Configuration

Setting up Triton Inference Server can be complex, requiring familiarity with Docker and Kubernetes for containerized deployments. However, NVIDIA provides extensive documentation and community support to facilitate the process.
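To make the setup step more concrete: Triton loads models from a model repository, in which each model has its own directory containing a `config.pbtxt` file and numbered version subdirectories. The sketch below uses only Python's standard library to lay out such a directory for a hypothetical ONNX model. The `onnxruntime` backend, tensor names, data types, and dims are assumptions (chosen to line up with the `input`/`output` names used by the client code in this section) and must be adapted to your exported model; `create_model_repository` is a hypothetical helper.

```python
from pathlib import Path
import tempfile

# Hypothetical config body in Triton's protobuf text format. The backend, tensor
# names, data types, and dims are assumptions and must match your exported model.
# With max_batch_size > 0, dims exclude the batch dimension.
CONFIG_BODY = """\
backend: "onnxruntime"
max_batch_size: 8
input [
  {
    name: "input"
    data_type: TYPE_FP32
    dims: [ 3 ]
  }
]
output [
  {
    name: "output"
    data_type: TYPE_FP32
    dims: [ 3 ]
  }
]
dynamic_batching {
  max_queue_delay_microseconds: 100
}
"""


def create_model_repository(root: Path, model_name: str, version: int = 1) -> Path:
    """Create <root>/<model_name>/config.pbtxt plus an empty <version>/ directory.

    The model file itself (e.g. model.onnx) still has to be copied into the
    version directory. Returns the model directory.
    """
    model_dir = root / model_name
    (model_dir / str(version)).mkdir(parents=True, exist_ok=True)
    config = f'name: "{model_name}"\n' + CONFIG_BODY
    (model_dir / "config.pbtxt").write_text(config)
    return model_dir


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        model_dir = create_model_repository(Path(tmp), "your_model_name")
        for path in sorted(model_dir.rglob("*")):
            print(path.relative_to(tmp))
```

Once the model file is in place, the server is typically started inside NVIDIA's Triton container with `tritonserver --model-repository=<path to the repository>`.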

Use Case:

Ideal for large-scale deployments where performance, scalability, and multi-framework support are crucial.

Demo Code for Serving and Explanation

```python
# Required libraries
!pip install "tritonclient[all]"

# Triton Inference Server example
import numpy as np
import tritonclient.grpc as grpcclient

# Initialize the Triton Inference Server client (Triton's default gRPC port is 8001)
client = grpcclient.InferenceServerClient(url="localhost:8001")

# Prepare input data
input_data = np.array([[1.0, 2.0, 3.0]], dtype=np.float32)

# Create the inference request; the input name, shape, and datatype must match the model config
inputs = [grpcclient.InferInput("input", input_data.shape, "FP32")]
inputs[0].set_data_from_numpy(input_data)

# Perform inference
results = client.infer(model_name="your_model_name", inputs=inputs)

# Get results
output = results.as_numpy("output")
print("Inference result:", output)
```

The above code snippet establishes a connection to the Triton Inference Server and sends a sample input to perform inference. It prepares the input data as a NumPy array, sets it as the model's input, and retrieves the model's predictions as a NumPy array (`output`). This setup allows for scalable and efficient deployment of machine learning models, ensuring reliable inference handling in production environments.

Text Generation Inference: Optimizing HuggingFace Models for Production

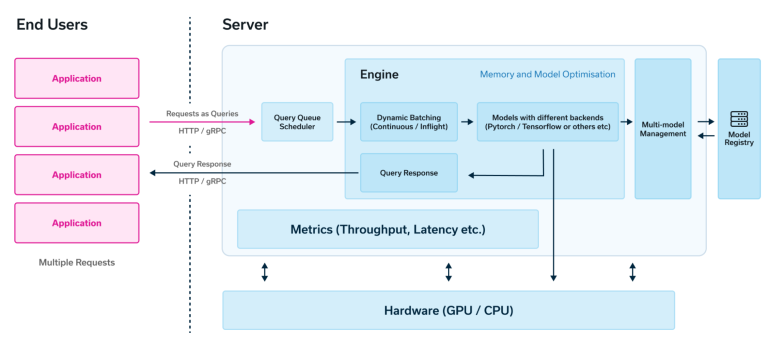

Text Generation Inference (TGI) leverages HuggingFace models for text generation tasks. It emphasizes native support for HuggingFace models without needing multiple adapters for core models. TGI works by dividing the model into smaller shards for parallel processing, using a buffer to manage incoming requests, and using a batcher to group requests for efficient handling. gRPC provides fast and reliable communication between components, ensuring responsive text generation across distributed systems. This setup optimizes resource utilization and enhances throughput, which is crucial for real-time applications like chatbots and content generation tools. The schematic above illustrates this architecture.
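The sharding idea can be shown with a toy example. In tensor-parallel sharding (which TGI's launcher controls through its `--num-shard` option), a layer's weight matrix is split column-wise across devices, each shard computes its slice of the output, and the slices are concatenated. In the pure-Python sketch below, plain lists stand in for GPU tensors, and `shard_columns` and `sharded_matvec` are hypothetical helpers, not TGI code; the point is only that the sharded result equals the unsharded one.

```python
from typing import List

Matrix = List[List[float]]


def matvec(x: List[float], w: Matrix) -> List[float]:
    """Compute y = x @ w for a row vector x and a matrix w (rows = len(x))."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def shard_columns(w: Matrix, num_shards: int) -> List[Matrix]:
    """Split w column-wise into num_shards contiguous, near-equal pieces."""
    n_cols = len(w[0])
    bounds = [n_cols * k // num_shards for k in range(num_shards + 1)]
    return [[row[bounds[k]:bounds[k + 1]] for row in w] for k in range(num_shards)]


def sharded_matvec(x: List[float], shards: List[Matrix]) -> List[float]:
    """Each 'device' multiplies by its own shard; the outputs are concatenated."""
    out: List[float] = []
    for shard in shards:  # in a real server these run in parallel on separate GPUs
        out.extend(matvec(x, shard))
    return out


if __name__ == "__main__":
    x = [1.0, 2.0]
    w = [[1.0, 0.0, 2.0, 1.0],
         [0.0, 1.0, 1.0, 3.0]]
    print(sharded_matvec(x, shard_columns(w, num_shards=2)))  # [1.0, 2.0, 4.0, 7.0]
    print(matvec(x, w))                                       # [1.0, 2.0, 4.0, 7.0]
```

Each shard only stores a fraction of the weights, which is what lets a model too large for one GPU be served across several.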

Key Features

- Ease of Use: Seamless integration with HuggingFace's model hub.

- Customizability: Allows fine-tuning and custom configurations for text generation models.

- Support for Transformers: Leverages the powerful Transformers library.

Use Cases:

Perfect for applications needing direct integration with HuggingFace models, such as chatbots, content generation, and automated summarization.

Demo Code for Serving and Explanation

```python
# Required libraries
!pip install requests

# Text Generation Inference example
# Assumes a TGI server is already running and reachable at localhost:8080,
# e.g. launched from the official TGI Docker image with `--model-id gpt2`
import requests

# Prepare input data and generation parameters
payload = {
    "inputs": "Hello, how are you?",
    "parameters": {"max_new_tokens": 50},
}

# Perform inference through TGI's /generate REST endpoint
response = requests.post("http://localhost:8080/generate", json=payload, timeout=60)
response.raise_for_status()

# Get results: TGI returns a JSON object of the form {"generated_text": "..."}
output_text = response.json()["generated_text"]
print("Generated text:", output_text)
```

This snippet sends a prompt to a running TGI server through its `/generate` endpoint. The server tokenizes the prompt, generates text with the loaded HuggingFace model, and returns the result as a JSON response ({'generated_text': 'Generated text'}). This setup enables seamless integration of advanced natural language generation capabilities into web applications.

vLLM: Revolutionizing Batch Processing for Language Models

vLLM is designed for maximum speed when serving batched prompts, and it optimizes both latency and throughput for large language models. It works by processing multiple input prompts concurrently through vectorized operations and parallel processing. This approach improves performance, reduces latency, and increases throughput for efficient batched text generation. By making effective use of the hardware, vLLM scales to handle large volumes of requests, making it suitable for real-time applications that require fast, responsive text generation.
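One reason batched serving engines such as vLLM sustain high throughput is that they schedule work per decoding step rather than per batch: when one sequence finishes, a waiting request takes its slot immediately (usually called continuous batching). The toy simulation below compares that policy with static batching, in which a batch only frees its slots once its longest sequence finishes. Request lengths are made-up decode-step counts, and both functions are illustrative models, not vLLM's scheduler.

```python
from collections import deque
from typing import List


def static_batching_steps(lengths: List[int], max_batch: int) -> int:
    """Total decode steps when each batch runs until its longest request finishes."""
    steps = 0
    for start in range(0, len(lengths), max_batch):
        steps += max(lengths[start:start + max_batch])
    return steps


def continuous_batching_steps(lengths: List[int], max_batch: int) -> int:
    """Total decode steps when finished requests are replaced before every step."""
    waiting = deque(lengths)
    running: List[int] = []  # remaining decode steps for each active request
    steps = 0
    while waiting or running:
        # Fill any free slots from the queue
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # One decode step advances every running request by one token
        steps += 1
        running = [r - 1 for r in running if r > 1]
    return steps


if __name__ == "__main__":
    lengths = [10, 2, 2, 2, 10, 2]  # decode steps each request needs
    print("static:", static_batching_steps(lengths, max_batch=2))
    print("continuous:", continuous_batching_steps(lengths, max_batch=2))
```

With these inputs static batching needs 22 steps and continuous batching 16, because short requests no longer sit idle behind long ones in the same batch.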

Key Features

- High Performance: Optimized for low-latency and high-throughput inference.

- Batch Processing: Efficient handling of batched requests.

- Scalability: Suitable for large-scale deployments.

Use Cases:

Best for applications where speed is critical, such as real-time translation and interactive AI systems.

Demo Code for Serving and Explanation

```python
# Required libraries
!pip install vllm

# vLLM example
from vllm import LLM, SamplingParams

# Initialize the vLLM engine ("gpt2" is the HuggingFace model id)
llm = LLM(model="gpt2")

# Prepare input prompts and sampling settings
prompts = ["Hello, how are you?", "What is your name?"]
sampling_params = SamplingParams(temperature=0.8, max_tokens=50)

# Perform batched inference: all prompts are submitted in one call
outputs = llm.generate(prompts, sampling_params)

# Get results
for i, output in enumerate(outputs):
    print(f"Prompt {i+1}: {output.prompt}")
    print(f"Generated text: {output.outputs[0].text}")
```

The vLLM code initializes an inference engine for batched prompt handling and text generation with a specified language model. A single `generate` call submits all prompts at once and vLLM batches them internally, enabling efficient batch processing and high-speed responses. This setup is ideal for scenarios requiring rapid generation of text from multiple input prompts in server-side applications.

DeepSpeed-MII: Harnessing DeepSpeed for Efficient LLM Deployment

DeepSpeed-MII caters to users experienced with the DeepSpeed library who want to keep using it to deploy LLMs. DeepSpeed excels at optimizing the training of large models. It enables efficient deployment and scaling of large language models (LLMs) by optimizing model parallelism, memory efficiency, and training speed. It improves performance through techniques like pipeline parallelism and efficient memory management, enabling faster training and inference. DeepSpeed's modular design allows seamless integration with existing machine learning frameworks, supporting accelerated development and deployment of LLMs in diverse applications.
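A back-of-the-envelope calculation shows why model parallelism matters for memory: the weights alone need roughly parameters × bytes per parameter, and splitting the model across `mp_size` GPUs (the argument passed to `deepspeed.init_inference` in the demo code) divides that per device. The helper below is a simplified estimate; `weight_memory_gib` is a hypothetical function that ignores activations, the KV cache, and runtime overhead, and the 7-billion-parameter figure is just an example input.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gib(num_params: float, dtype: str = "fp16", mp_size: int = 1) -> float:
    """Approximate per-GPU memory (GiB) for model weights only.

    Assumes weights are split evenly across mp_size GPUs (model/tensor parallelism)
    and ignores activations, KV cache, optimizer state, and runtime overhead.
    """
    if mp_size < 1:
        raise ValueError("mp_size must be >= 1")
    total_bytes = num_params * BYTES_PER_PARAM[dtype]
    return total_bytes / mp_size / 2**30


if __name__ == "__main__":
    # Example: a 7-billion-parameter model in fp16 on 1 vs. 2 GPUs
    for mp in (1, 2):
        print(f"7B fp16, mp_size={mp}: {weight_memory_gib(7e9, 'fp16', mp):.1f} GiB per GPU")
```

By this estimate a 7B model needs about 13 GiB of fp16 weights on a single GPU and about half that per GPU with `mp_size=2`, before any working memory is counted.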

Key Features

- Efficiency: Memory and computational efficiency through optimizations.

- Scalability: Designed to handle very large models with ease.

- Integration: Seamless with existing DeepSpeed workflows.

Use Cases:

Ideal for researchers and developers already familiar with DeepSpeed who focus on high-performance training and deployment.

Demo Code for Serving and Explanation

```python
# Required libraries
!pip install deepspeed torch transformers

# DeepSpeed example
import deepspeed
import torch
from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Load the model (GPT2LMHeadModel includes the language-modeling head needed for generation)
tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
model = GPT2LMHeadModel.from_pretrained("gpt2")

# Wrap the model with DeepSpeed's inference engine (mp_size = number of GPUs to split across)
ds_engine = deepspeed.init_inference(model, mp_size=1, dtype=torch.float32)
ds_model = ds_engine.module

# Prepare input data
input_ids = tokenizer.encode("Hello, how are you?", return_tensors="pt").to(ds_model.device)

# Perform inference
with torch.no_grad():
    output_ids = ds_model.generate(input_ids, max_new_tokens=30)

# Get results
print("Generated text:", tokenizer.decode(output_ids[0], skip_special_tokens=True))
```

The DeepSpeed code snippet wraps a GPT-2 model with DeepSpeed's inference engine, on which DeepSpeed-MII builds, and generates text from a prompt. The `mp_size` argument sets how many GPUs the model is split across. This setup supports interactive applications and real-time text generation, leveraging efficient model serving capabilities for seamless integration into production environments.

OpenLLM: Flexible Adapter Integration

OpenLLM is tailored for connecting adapters to the core model and using HuggingFace Agents. It supports various frameworks, including PyTorch.
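The article does not say which adapter type is meant, but LoRA is a common one and illustrates what "connecting an adapter to the core model" involves: the frozen base weight W stays untouched, and a small low-rank update B·A, scaled by alpha/r, is added to the layer's output. Swapping adapters then only swaps the small A and B matrices. The pure-Python sketch below assumes LoRA; `lora_forward` is a hypothetical helper and the numbers are toy values.

```python
from typing import List

Matrix = List[List[float]]


def matmul_vec(m: Matrix, x: List[float]) -> List[float]:
    """Return m @ x for a matrix m (rows x cols) and a vector x (len = cols)."""
    return [sum(row[j] * x[j] for j in range(len(x))) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: List[float], alpha: float) -> List[float]:
    """LoRA-style adapted layer: W x + (alpha / r) * B (A x).

    w: frozen base weight (d_out x d_in)
    a: adapter down-projection (r x d_in)
    b: adapter up-projection (d_out x r)
    """
    r = len(a)
    base = matmul_vec(w, x)                 # output of the unchanged core model layer
    delta = matmul_vec(b, matmul_vec(a, x))  # low-rank adapter contribution
    return [h + (alpha / r) * d for h, d in zip(base, delta)]


if __name__ == "__main__":
    w = [[1.0, 0.0], [0.0, 1.0]]  # identity base weight
    a = [[1.0, 1.0]]              # rank r = 1
    b = [[0.5], [0.0]]
    x = [2.0, 4.0]
    print(lora_forward(w, a, b, x, alpha=1.0))  # [5.0, 4.0]
```

Because B is typically initialized to zeros, a freshly attached adapter leaves the core model's output unchanged until it is trained.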

Key Features

- Framework Agnostic: Supports multiple deep learning frameworks.

- Agent Integration: Leverages HuggingFace Agents for enhanced functionality.

- Adapter Support: Flexible integration with model adapters.

Use Cases:

Great for projects needing flexibility in framework choice and extensive use of HuggingFace tools.

Demo Code for Serving and Explanation

```python
# Required libraries
!pip install openllm requests

# OpenLLM example
# Assumes a server was started beforehand in a terminal, e.g. `openllm serve <model>`,
# exposing an OpenAI-compatible API on localhost:3000 (adjust the URL if yours differs)
import requests

BASE_URL = "http://localhost:3000/v1"

# Look up the id of the served model instead of hard-coding it
model_id = requests.get(f"{BASE_URL}/models", timeout=10).json()["data"][0]["id"]

# Prepare input data
payload = {
    "model": model_id,
    "messages": [{"role": "user", "content": "What's the meaning of life? Explain it with a few lines of code."}],
    "max_tokens": 128,
}

# Perform inference
response = requests.post(f"{BASE_URL}/chat/completions", json=payload, timeout=120)
response.raise_for_status()

# Get results
output_text = response.json()["choices"][0]["message"]["content"]
print("Generated text:", output_text)
```

This snippet queries an OpenLLM server that serves a specified HuggingFace model for text generation. The server exposes an endpoint that receives POST requests containing prompts, processes them with the model, and returns the generated text in a JSON response, supporting flexible, high-performance natural language processing applications. Alternatively, the server can also be accessed over a web API, as shown below.

Leveraging Ray Serve for Scalable Model Deployment

Ray Serve offers a stable pipeline and flexible deployment options, making it suitable for more mature projects that need reliable and scalable serving solutions.

Key Features

- Flexibility: Supports multiple deployment architectures.

- Scalability: Designed to handle high-load applications.

- Integration: Works well with Ray's ecosystem for distributed computing.
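Ray Serve scales a deployment by running multiple replicas of it and, when autoscaling is enabled, adjusting the replica count between configured minimum and maximum values based on a target number of ongoing requests per replica. The simplified calculation below illustrates that principle only. `desired_replicas` and its parameters are hypothetical, and Ray's real autoscaler additionally applies delays and smoothing before changing the replica count.

```python
import math


def desired_replicas(ongoing_requests: int, target_per_replica: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Replicas needed so each handles about target_per_replica requests, clamped to bounds."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    needed = math.ceil(ongoing_requests / target_per_replica)
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    for load in (0, 3, 25, 500):
        print(load, "ongoing requests ->", desired_replicas(load, target_per_replica=2), "replicas")
```

The upper bound protects the cluster from unbounded growth under load spikes, while the lower bound keeps at least one warm replica so the first request does not pay model-loading time.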

Use Cases:

Ideal for established projects needing a robust and scalable serving infrastructure.

Demo Code for Serving and Explanation

```python
# Required libraries
!pip install "ray[serve]" transformers torch

# Ray Serve example
from ray import serve
from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Define a deployment for text generation
@serve.deployment
class TextGenerator:
    def __init__(self):
        # GPT2LMHeadModel (not GPT2Model) provides the language-modeling head used by generate()
        self.model = GPT2LMHeadModel.from_pretrained("gpt2")
        self.tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

    def __call__(self, request: dict) -> str:
        input_ids = self.tokenizer.encode(request["text"], return_tensors="pt")
        output = self.model.generate(input_ids, max_new_tokens=30)
        return self.tokenizer.decode(output[0], skip_special_tokens=True)

# Deploy the model; serve.run starts Ray Serve if needed and returns a handle
handle = serve.run(TextGenerator.bind())

# Query the model
response = handle.remote({"text": "Hello, how are you?"})
print("Generated text:", response.result())
```

The Ray Serve deployment code starts a Ray Serve instance and deploys a GPT-2 model for text generation. It defines a deployment class that loads the model and handles incoming requests to generate text from user prompts. This setup demonstrates stable pipeline deployment and flexible request handling, ensuring reliable and scalable model serving in production environments.

Speeding Up Inference with CTranslate2

CTranslate2 focuses on speed, particularly for running inference on CPUs. It is optimized for translation models and supports various neural network architectures.

Key Features

- CPU Optimization: High performance for CPU-based inference.

- Compatibility: Supports popular model architectures like the Transformer.

- Lightweight: Minimal dependencies and resource requirements.

Use Cases:

Suitable for applications prioritizing speed and efficiency on CPU, such as translation services and low-latency text processing.

Demo Code for Serving and Explanation

```python
# Required libraries
!pip install ctranslate2 transformers

# CTranslate2 example
import ctranslate2
from transformers import GPT2Tokenizer

# Load tokenizer and model. GPT-2 is decoder-only, so it runs with ctranslate2.Generator
# (ctranslate2.Translator is for encoder-decoder models). Convert the model first, e.g.:
#   ct2-transformers-converter --model gpt2 --output_dir path/to/model
tokenizer = GPT2Tokenizer.from_pretrained("gpt2")
generator = ctranslate2.Generator("path/to/model", device="cpu")

# Prepare input data: CTranslate2 expects token strings, not tensors
input_text = "Hello, how are you?"
input_tokens = tokenizer.convert_ids_to_tokens(tokenizer.encode(input_text))

# Perform inference
results = generator.generate_batch([input_tokens], max_length=30)

# Get results
output_text = tokenizer.decode(results[0].sequences_ids[0])
print("Generated text:", output_text)
```

The CTranslate2 code loads a converted GPT-2 model and generates a continuation of the input text on the CPU. For encoder-decoder translation models, `ctranslate2.Translator` and its `translate_batch` method play the same role, returning translated tokens for each input in the batch, which showcases CTranslate2's efficient batch translation capabilities for multilingual applications. Below is an example excerpt of CTranslate2 output generated using the LLaMA 2.7b LLM.

Comparison Based on Latency and Throughput

Now that we understand serving with each framework, it is worth comparing and benchmarking them. Benchmarking was carried out using the GPT3 LLM with the prompt "Once upon a time." for text generation. The GPU used was an NVIDIA GeForce RTX 3070 on a workstation with other conditions controlled. However, these values may vary, so use your own discretion and judgment if you cite them for publishing purposes. Below is the comparative framework.

The metrics used for comparison were latency and throughput. Latency is the time a system takes to respond to a request; lower latency means faster response times, which is crucial for real-time applications. Throughput is the rate at which a system processes tasks or requests; higher throughput indicates a better capacity to handle concurrent workloads, which is essential for scaling operations.
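Both metrics can be measured with a small harness like the one below. It times repeated calls to a generation function and reports mean and 95th-percentile latency plus throughput in requests per second. `benchmark` is a hypothetical helper, and `fake_generate` is a stand-in that just sleeps; in practice it would be replaced by a real client call to any of the frameworks above. Because the requests are sent one after another, this measures single-stream throughput only; measuring behavior under concurrent load would need threads or an async client.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def benchmark(generate: Callable[[str], str], prompt: str,
              num_requests: int = 20) -> Dict[str, float]:
    """Send num_requests sequential requests; report mean/p95 latency and throughput."""
    if num_requests < 1:
        raise ValueError("num_requests must be >= 1")
    latencies: List[float] = []
    start = time.perf_counter()
    for _ in range(num_requests):
        t0 = time.perf_counter()
        generate(prompt)  # latency = time taken by this single request
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    latencies.sort()
    p95_index = math.ceil(0.95 * len(latencies)) - 1  # nearest-rank percentile
    return {
        "mean_latency_s": statistics.mean(latencies),
        "p95_latency_s": latencies[p95_index],
        "throughput_rps": num_requests / elapsed,  # completed requests per second
    }


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call: sleeps about 10 ms."""
    time.sleep(0.01)
    return prompt + " ..."


if __name__ == "__main__":
    stats = benchmark(fake_generate, "Once upon a time.", num_requests=20)
    for name, value in stats.items():
        print(f"{name}: {value:.4f}")
```

Reporting a tail percentile alongside the mean is a deliberate choice: real-time applications are usually judged by their slowest responses, which an average can hide.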

Understanding and optimizing latency and throughput are critical for assessing and improving system performance in LLM serving frameworks and other applications.

Conclusion

Efficiently serving large language models (LLMs) is essential for deploying responsive AI applications. In this blog, we explored various platforms such as Triton Inference Server, vLLM, DeepSpeed-MII, OpenLLM, Ray Serve, CTranslate2, and TGI, each offering unique advantages in terms of latency, throughput, and specialized use cases. Choosing the right platform depends on specific requirements like model parallelism, edge computing, and CPU optimization.

Key Takeaways

- Model serving is the process of deploying trained machine learning models for inference, enabling real-time or batch predictions in production environments.

- Different platforms excel in different aspects of performance, from low latency to high throughput.

- A framework should be chosen based on the specific use case, whether that is mobile edge computing, server-side inference, or batched processing.

- Some frameworks are better suited to scalable, flexible deployments in mature projects.

Frequently Asked Questions

A. Model serving is the deployment of trained machine learning models for real-time or batch processing, enabling efficient and reliable prediction or response generation in production environments.

A. The choice of LLM framework depends on application requirements, latency, throughput, scalability, and hardware type. Platforms like Triton Inference Server, vLLM, and MLC LLM are suitable.

A. Large language models present challenges such as latency, performance, resource consumption, and scalability, which call for careful optimization of deployment strategies and efficient use of hardware resources.

A. Multiple serving frameworks can be combined to optimize different parts of an application, such as Triton Inference Server for general model serving, vLLM for fast tasks, and MLC LLM for on-device inference.

A. Techniques like model optimization, distributed computing, parallelism, and hardware acceleration can improve LLM serving efficiency, reduce latency, and improve resource utilization.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.