In this article, I'll walk you through the RAG framework/pipeline for building a customized LLM on top of an up-to-date knowledge base.

Synopsis:

- Introduction to RAG

- Why RAG?

- RAG Architecture

- End-to-End RAG Pipeline

- Advantages of RAG

- Pitfall

- RAG Use Cases

- Conclusion

Introduction to RAG

As we all know, we use open-source and paid LLMs for our custom tasks. These are pre-trained models whose knowledge only extends up to a particular dataset or cutoff date, so if you ask for a domain-specific or personalized answer to your particular need, the LLM may not handle your query well.

Don't worry: it's just a speed bump in your driving lessons, and RAG will get you through!

RAG (Retrieval-Augmented Generation) is a framework, or pipeline, that lets your LLM connect to current, real-world, domain-specific data. In other words, RAG is an architecture that connects the LLM to external sources of knowledge.

Why RAG?

- LLMs are pre-trained models with limited knowledge, and that knowledge is not up to date.

- They lack transparency about where an answer comes from, which can lead to misleading information.

- They can hallucinate.

RAG Architecture

Now that we know what RAG is, let me define the RAG architecture.

RAG is simply defined as Retrieval-Augmented Generation:

- Retrieval

- Augmented

- Generation

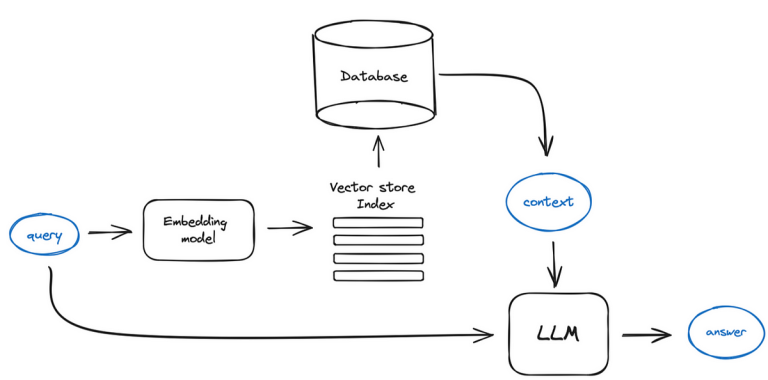

Retrieval means that once the user asks a question, the query is used to fetch the relevant answers from the database. That is the retrieval process.

Augmented means that all the answers fetched from the database are gathered and used to enrich (augment) the prompt.

Generation means that, after gathering all the data from the database, we pass it along with the prompt to the LLM, and the LLM generates an answer based on the prompt and the question given to it.
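To make the three steps concrete, here is a minimal Python sketch. It is an illustration under simplifying assumptions, not a production implementation: the "database" is a plain list of strings, retrieval is naive word overlap instead of vector search, and `fake_llm` is a hypothetical placeholder standing in for a real LLM call.

```python
import re

# Hypothetical toy knowledge base; a real RAG system would query a vector DB.
DOCUMENTS = [
    "The refund window for online orders is 30 days.",
    "Support is available Monday to Friday, 9am to 5pm.",
    "Premium members get free shipping on all orders.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase word set, used for a naive overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Retrieval: rank documents by how many words they share with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def augment(query: str, context: list[str]) -> str:
    """Augmentation: place the retrieved context into the prompt."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}\nAnswer:"
    )


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM; it echoes the first context line."""
    for line in prompt.splitlines():
        if line.startswith("- "):
            return line[2:]
    return "I don't know."


def rag_answer(query: str) -> str:
    context = retrieve(query, DOCUMENTS, k=1)  # Retrieval
    prompt = augment(query, context)           # Augmentation
    return fake_llm(prompt)                    # Generation


print(rag_answer("How many days is the refund window?"))
```

Replacing `retrieve` with a vector search and `fake_llm` with a real model call turns this skeleton into the kind of pipeline described in the rest of the article.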

End-to-End RAG Pipeline

There are three components involved in the RAG architecture:

- Ingestion

- Retrieval

- Generation

Ingestion

Ingestion, or data ingestion, is simply loading the data. Once the data is loaded, we have to split it. After splitting the data, we have to embed it and, if required, we can also build an index. Once this process is done, we store the vectorized data in a vector DB. A vector DB is a database like any other, but specialized for storing embedded data (vectors) and searching them by similarity.

So, ingestion is the combination of loading, splitting, embedding, and storing the data in the DB:

Ingestion => Load + Split + Embed + Store in DB
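The four ingestion steps can be chained as in the sketch below. Everything here is a stand-in chosen to keep the example self-contained: the "document" is an in-memory string rather than a file loader, `embed` is a toy letter-frequency vector rather than a real embedding model, and `VECTOR_DB` is a plain Python list playing the role of a vector database.

```python
import math
import string

# Hypothetical in-memory "vector DB": a list of (chunk_text, vector) records.
VECTOR_DB: list[tuple[str, list[float]]] = []


def load() -> str:
    """Load: stands in for reading a PDF, web page, etc."""
    return ("RAG connects an LLM to external data. "
            "Documents are split into chunks. "
            "Each chunk is embedded and stored in a vector database.")


def split(text: str, chunk_size: int = 40) -> list[str]:
    """Split: fixed-size character chunks (no overlap, for brevity)."""
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]


def embed(chunk: str) -> list[float]:
    """Embed: toy 26-dim letter-frequency vector, L2-normalised.
    A real pipeline would call an embedding model here."""
    counts = [chunk.lower().count(c) for c in string.ascii_lowercase]
    norm = math.sqrt(sum(x * x for x in counts)) or 1.0
    return [x / norm for x in counts]


def store(chunks: list[str], vectors: list[list[float]]) -> None:
    """Store: keep each chunk next to its vector."""
    VECTOR_DB.extend(zip(chunks, vectors))


def ingest() -> int:
    text = load()
    chunks = split(text)
    vectors = [embed(c) for c in chunks]
    store(chunks, vectors)
    return len(chunks)


print(ingest(), "chunks ingested")
```

Keeping the original chunk text next to its vector matters: retrieval searches by vector, but it is the text that is later handed to the LLM.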

Why is data splitting required?

If you look through the documentation of any LLM, you will find a term called the context window: the maximum amount of text, measured in tokens, that the model can take in at once. When you load a document for your domain-specific use case to bring the LLM up to date, that document may be larger than the model's context window. So, to fit a large document into the LLM's smaller context window, we split it into smaller chunks.
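A minimal sketch of splitting with overlap. As a simplifying assumption, whitespace-separated words stand in for tokens (real LLMs count tokens with their own tokenizer), and the `chunk_size` and `overlap` values are illustrative, not recommendations. The overlap repeats a few words across neighbouring chunks, so text near a chunk boundary keeps some of its surrounding context.

```python
def split_into_chunks(text: str, chunk_size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into chunks of at most `chunk_size` words, where consecutive
    chunks share `overlap` words. Words are a rough proxy for tokens."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


doc = " ".join(f"w{i}" for i in range(20))  # a 20-"token" document
for chunk in split_into_chunks(doc):
    print(chunk)
# w0 w1 w2 w3 w4 w5 w6 w7
# w6 w7 w8 w9 w10 w11 w12 w13
# w12 w13 w14 w15 w16 w17 w18 w19
```

Each chunk here is far smaller than any real context window; in practice the chunk size is chosen so that several retrieved chunks plus the prompt still fit.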

Why is embedding required?

After splitting the data into smaller chunks, we have to embed the chunks. As we all probably know, machines understand only numeric representations of data, not text. So, to convert the text we are loading into numbers, we use a text embedding model, which can be either OpenAI embeddings or open-source embeddings.
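Real pipelines call an embedding model here. To keep the sketch runnable offline, the example below swaps in a deliberately simple stand-in, a hashed bag-of-words vector: it captures word overlap, not meaning, so it only illustrates the idea of turning text into fixed-length numbers. The function names and the 64-dimension size are assumptions for illustration.

```python
import hashlib
import math


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hashed bag-of-words embedding (a stand-in, NOT a semantic model).
    Each word is hashed into one of `dim` buckets; the vector is L2-normalised."""
    vec = [0.0] * dim
    for word in text.lower().split():
        # sha256 gives the same bucket on every run, unlike Python's salted hash().
        bucket = int(hashlib.sha256(word.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two L2-normalised vectors is their dot product."""
    return sum(x * y for x, y in zip(a, b))


v1 = toy_embed("vector databases store embeddings")
v2 = toy_embed("embeddings are stored in vector databases")
print(len(v1), round(cosine(v1, v2), 3))
```

Whatever model is used, the same model must embed both the stored chunks and the user's query, otherwise their vectors are not comparable.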

Why should we store it in a vector database?

So, we have converted the entire text of the document into a numeric representation, and this numeric representation has to be stored in a database so the data can be accessed later. Suppose a user asks a domain-specific question: the system has to find the relevant parts of the document and then generate a response according to the question asked. To access the data we have already loaded, we store it in a vector database for efficient and accurate retrieval.

Several cloud and in-memory vector databases are available; you can browse online and pick one according to your requirements.
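A minimal in-memory vector store, to show what storing for efficient retrieval means in practice. Assumptions: vectors are L2-normalised on insert (so cosine similarity is a dot product), search is brute force over every record (real vector databases typically use approximate nearest-neighbour indexes to scale), and `InMemoryVectorStore` is a hypothetical class, not any particular database's API.

```python
import math
from dataclasses import dataclass, field


def normalise(v: list[float]) -> list[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else v


@dataclass
class InMemoryVectorStore:
    """Hypothetical minimal vector store holding (id, text, vector) records."""
    records: list[tuple[str, str, list[float]]] = field(default_factory=list)

    def add(self, doc_id: str, text: str, vector: list[float]) -> None:
        self.records.append((doc_id, text, normalise(vector)))

    def search(self, query_vector: list[float], k: int = 2) -> list[tuple[str, float]]:
        """Brute-force cosine search; returns (doc_id, score), best first."""
        q = normalise(query_vector)
        scored = [
            (doc_id, sum(a * b for a, b in zip(q, vec)))
            for doc_id, _text, vec in self.records
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


store = InMemoryVectorStore()
# Hand-made 3-d vectors stand in for real embeddings.
store.add("refunds", "Refunds are processed within 30 days.", [0.9, 0.1, 0.0])
store.add("shipping", "Shipping takes 3-5 business days.", [0.1, 0.9, 0.1])
store.add("support", "Support is open on weekdays.", [0.0, 0.2, 0.9])
print(store.search([1.0, 0.2, 0.0], k=1))
```

Normalising once at insert time means each query only pays for one normalisation plus dot products.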

Retrieval

As mentioned earlier, ingestion involves Load + Split + Embed + Store in DB. Once this first part is done, next comes retrieving data from the database based on the user query. Retrieval is the process of fetching data from the vector database based on the question asked, and advanced RAG uses several techniques for faster, more accurate information retrieval.
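One example of such an advanced retrieval technique is Maximal Marginal Relevance (MMR), which picks chunks that are relevant to the query but not redundant with chunks already picked. MMR is my choice of illustration here, not a technique this pipeline prescribes; the vectors are hand-made and assumed L2-normalised, and `lambda_ = 0.5` is an arbitrary trade-off value.

```python
def dot(a: list[float], b: list[float]) -> float:
    """Cosine similarity, assuming both vectors are already L2-normalised."""
    return sum(x * y for x, y in zip(a, b))


def mmr(query: list[float], docs: dict[str, list[float]], k: int = 2,
        lambda_: float = 0.5) -> list[str]:
    """Maximal Marginal Relevance: greedily pick docs that score high on
    relevance to the query and low on similarity to already-picked docs."""
    selected: list[str] = []
    candidates = dict(docs)
    while candidates and len(selected) < k:
        def score(doc_id: str) -> float:
            relevance = dot(query, candidates[doc_id])
            redundancy = max((dot(candidates[doc_id], docs[s]) for s in selected),
                             default=0.0)
            return lambda_ * relevance - (1 - lambda_) * redundancy
        best = max(candidates, key=score)
        selected.append(best)
        del candidates[best]
    return selected


query = [0.8, 0.0, 0.6]
chunks = {
    "chunk_a": [1.0, 0.0, 0.0],
    "chunk_a_dup": [0.96, 0.28, 0.0],  # near-duplicate of chunk_a
    "chunk_b": [0.0, 0.0, 1.0],        # different, but still relevant
}
print(mmr(query, chunks, k=2))  # → ['chunk_a', 'chunk_b']
```

Plain top-2 similarity search would return `chunk_a` and its near-duplicate; MMR trades a little relevance for coverage, which tends to make better use of the limited context window. Setting `lambda_=1.0` reduces it to plain relevance ranking.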

Generation

Once the data is retrieved from the database, it is finally passed to the LLM along with the prompt, so the LLM can generate a response tailored to the user's question.
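A sketch of this final step: packing the retrieved chunks into a prompt template without exceeding a size budget, then handing the prompt to the model. The template wording, the character-based `max_context_chars` budget (a stand-in for a real token count), and the `call_llm` placeholder are all assumptions for illustration; in a real application `call_llm` would wrap whichever LLM API you use.

```python
PROMPT_TEMPLATE = (
    "You are a helpful assistant. Answer using only the context.\n"
    "If the answer is not in the context, say you don't know.\n\n"
    "Context:\n{context}\n\nQuestion: {question}\nAnswer:"
)


def build_prompt(question: str, ranked_chunks: list[str],
                 max_context_chars: int = 200) -> str:
    """Add chunks best-first until the next one would exceed the budget."""
    picked: list[str] = []
    used = 0
    for chunk in ranked_chunks:
        if used + len(chunk) > max_context_chars:
            break
        picked.append(chunk)
        used += len(chunk)
    return PROMPT_TEMPLATE.format(context="\n---\n".join(picked), question=question)


def call_llm(prompt: str) -> str:
    """Hypothetical placeholder for a real LLM API call."""
    return f"[model response to a {len(prompt)}-character prompt]"


ranked = [
    "Refunds are processed within 30 days of purchase.",
    "Refund requests need the original order number.",
    "Our office is closed on public holidays. " * 5,  # too long for the budget
]
prompt = build_prompt("How long do refunds take?", ranked)
print(prompt)
print(call_llm(prompt))
```

Stopping at the first chunk that does not fit keeps strict rank order; skipping it and trying the next, smaller chunk is an equally reasonable variant.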

Advantages of RAG

- Connection to external sources of knowledge

- More relevance and accuracy

- Domain-specific answers from open-domain models

- Reduced bias and hallucinations

- RAG doesn't require any model training

Pitfall

The performance of RAG depends heavily on the architecture itself and on its knowledge base. If the architecture is not properly optimized (for example, how documents are split or how chunks are retrieved), the result can be poor performance.

RAG Use Cases

- Document Q&A

- Conversational agents

- Real-time event commentary

- Content generation

- Personalized recommendations

- Virtual assistance

Conclusion

The RAG (Retrieval-Augmented Generation) application stands out as a groundbreaking solution in the realm of AI-driven tools, combining the best of retrieval and generative models to deliver highly accurate and contextually relevant responses. By pairing robust information retrieval with sophisticated language generation, RAG helps users receive precise, well-informed answers to their queries.

This innovative approach not only enhances the quality and reliability of the information provided but also significantly improves the user experience by minimizing response times and increasing the depth of knowledge available at users' fingertips. The RAG application showcases the immense potential of AI to transform how we access and use information, setting a new standard for intelligent, efficient, user-centric AI solutions.

As we continue to refine and expand the capabilities of RAG, we anticipate even greater advancements in various fields, from customer support and content creation to research and education. The future of AI-powered applications is certainly promising, and RAG is at the forefront of this exciting evolution.