4. Other ML Models

I trained other machine learning models, namely KNN, SVM, and Gradient Boosting, to compare them with Logistic Regression and Random Forest.
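The comparison itself can be sketched as a loop that fits each model and collects the same six metrics reported below. This is a minimal sketch, assuming scikit-learn and a binary target. The article's dataset isn't shown, so synthetic, imbalanced data from `make_classification` stands in for it. The "Cross Validation" column is assumed to be mean 5-fold accuracy on the training split, and ROC AUC is assumed to be computed from predicted probabilities.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import (accuracy_score, f1_score, precision_score,
                             recall_score, roc_auc_score)
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Synthetic, imbalanced stand-in for the article's dataset (assumption).
X, y = make_classification(n_samples=600, n_features=10, weights=[0.3, 0.7],
                           random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42)

models = {
    "Random Forest": RandomForestClassifier(random_state=42),
    "LR": LogisticRegression(max_iter=1000),
    "Gradient Boosting": GradientBoostingClassifier(random_state=42),
    "KNN": KNeighborsClassifier(),
    # probability=True enables predict_proba, needed for ROC AUC below
    "SVM": SVC(probability=True, random_state=42),
}

results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    y_pred = model.predict(X_test)                # hard labels for threshold metrics
    y_score = model.predict_proba(X_test)[:, 1]   # positive-class probability for ROC AUC
    results[name] = {
        "Accuracy": accuracy_score(y_test, y_pred),
        "ROC AUC": roc_auc_score(y_test, y_score),
        "F1 Score": f1_score(y_test, y_pred),
        "Recall": recall_score(y_test, y_pred),
        "Precision": precision_score(y_test, y_pred),
        # cross_val_score clones the model, so the fitted instance is untouched
        "Cross Validation": cross_val_score(model, X_train, y_train, cv=5).mean(),
    }

for name, metrics in results.items():
    print(name, {k: round(float(v), 2) for k, v in metrics.items()})
```

The numbers this prints describe the synthetic data only; they are not the article's results.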

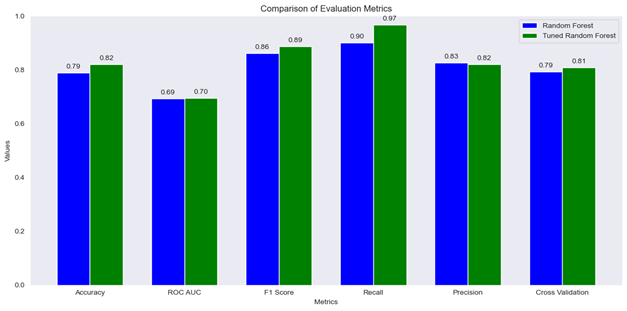

For brevity, I have presented only the evaluation metrics below. Note, however, that I fitted each model both with and without hyperparameter tuning, then selected the best-performing version for each ML technique, as I demonstrated earlier with Random Forest, Decision Tree, and Logistic Regression.
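The "tuned versus untuned" selection can be sketched as follows. This is a minimal sketch, assuming scikit-learn and using cross-validated accuracy as the selection criterion; the helper `best_of_tuned_and_untuned` and the KNN parameter grid are hypothetical, not the grids used in the article.

```python
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=400, n_features=8, random_state=0)

def best_of_tuned_and_untuned(estimator, param_grid, X, y, cv=5):
    """Hypothetical helper: return (label, fitted_model, cv_score) for the better variant."""
    # Untuned: library defaults, scored with k-fold cross-validation.
    untuned_score = cross_val_score(clone(estimator), X, y, cv=cv).mean()
    # Tuned: exhaustive grid search; refit=True (default) refits the best model on all of X.
    search = GridSearchCV(clone(estimator), param_grid, cv=cv)
    search.fit(X, y)
    if search.best_score_ > untuned_score:
        return "tuned", search.best_estimator_, search.best_score_
    # Ties go to the simpler, untuned model.
    untuned = clone(estimator).fit(X, y)
    return "untuned", untuned, untuned_score

label, model, score = best_of_tuned_and_untuned(
    KNeighborsClassifier(), {"n_neighbors": [3, 5, 11, 21]}, X, y)
print(label, model, round(float(score), 3))
```

`best_score_` is slightly optimistic because the same folds both choose and score the parameters; nested cross-validation would give a stricter comparison.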

The output below shows the evaluation metrics for the best-performing version of each ML technique, whether tuned or untuned.

Output 1: Trained on all features in the dataset

| ML Algorithm | Accuracy | ROC AUC | F1 Score | Recall | Precision | Cross Validation |
|---|---|---|---|---|---|---|
| Random Forest | 0.82 | 0.70 | 0.89 | 0.97 | 0.82 | 0.81 |
| LR | 0.84 | 0.72 | 0.90 | 0.98 | 0.83 | 0.75 |
| Gradient Boosting | 0.83 | 0.70 | 0.89 | 0.98 | 0.82 | 0.79 |
| KNN | 0.67 | 0.50 | 0.80 | 0.88 | 0.73 | 0.66 |
| SVM | 0.84 | 0.72 | 0.90 | 0.98 | 0.83 | 0.73 |
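Since F1 is the harmonic mean of precision and recall, the F1 column can be cross-checked against the other two. The short script below uses only the values reported in Output 1 and shows that they agree to within rounding. F1, precision, and recall are computed from hard predictions at a single decision threshold, whereas ROC AUC summarizes ranking quality across all thresholds, which is one reason the two groups of metrics can tell different stories.

```python
# Precision, recall, and F1 as reported in Output 1.
reported = {
    "Random Forest":     {"precision": 0.82, "recall": 0.97, "f1": 0.89},
    "LR":                {"precision": 0.83, "recall": 0.98, "f1": 0.90},
    "Gradient Boosting": {"precision": 0.82, "recall": 0.98, "f1": 0.89},
    "KNN":               {"precision": 0.73, "recall": 0.88, "f1": 0.80},
    "SVM":               {"precision": 0.83, "recall": 0.98, "f1": 0.90},
}

def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

for name, m in reported.items():
    recomputed = f1(m["precision"], m["recall"])
    print(f"{name:<18} reported F1={m['f1']:.2f} recomputed={recomputed:.3f}")
```

For Random Forest, for example, 2 × 0.82 × 0.97 / (0.82 + 0.97) ≈ 0.889, which rounds to the reported 0.89.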

Key question: Which model performs better?

From the output above:

K-Nearest Neighbors (KNN) is clearly not competitive, owing to its markedly lower accuracy (0.67), ROC AUC (0.50), and cross-validation (0.66) scores.

Support Vector Machine (SVM) performs about as well as Logistic Regression. However, its cross-validation score (0.73) is the lowest among the competitive models and, more importantly, SVM took noticeably longer to train. I will therefore exclude SVM from further consideration: the goal is to identify a model with strong performance and a relatively short training time.
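Training time can be compared by timing each `fit` call. This is a minimal sketch, assuming scikit-learn, synthetic data, and wall-clock time from `time.perf_counter`; the article does not report its timings, so none are reproduced here. Per the scikit-learn `SVC` documentation, kernel SVM fit time scales at least quadratically with the number of samples, so the gap tends to widen as the dataset grows.

```python
import time

from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC

# Synthetic data (assumption); the article's dataset is not shown.
X, y = make_classification(n_samples=3000, n_features=20, random_state=0)

def fit_seconds(model, X, y):
    """Wall-clock seconds for a single fit call."""
    start = time.perf_counter()
    model.fit(X, y)
    return time.perf_counter() - start

timings = {
    "LR": fit_seconds(LogisticRegression(max_iter=1000), X, y),
    "SVM": fit_seconds(SVC(), X, y),
}
for name, secs in timings.items():
    print(f"{name}: {secs:.3f} s")
```

A single timing is noisy; repeating each fit a few times and taking the median gives a steadier comparison.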

The three remaining models, namely Logistic Regression, Random Forest, and Gradient Boosting, show similar performance. I will retain these three algorithms and assess how consistent they remain when some features are removed from the dataset and the models are retrained.
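The consistency check can be sketched by scoring each retained model on all features and again on a reduced feature set. This is a minimal sketch, assuming scikit-learn and synthetic data; which features to drop is a hypothetical choice here (the last three columns), not the article's selection.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

X, y = make_classification(n_samples=500, n_features=10, n_informative=4,
                           random_state=1)
# Hypothetical feature removal: keep every column except the last three.
kept_columns = np.arange(X.shape[1] - 3)

models = {
    "LR": LogisticRegression(max_iter=1000),
    "Random Forest": RandomForestClassifier(random_state=1),
    "Gradient Boosting": GradientBoostingClassifier(random_state=1),
}

comparison = {}
for name, model in models.items():
    # Mean 5-fold accuracy before and after dropping features.
    full = cross_val_score(model, X, y, cv=5).mean()
    reduced = cross_val_score(model, X[:, kept_columns], y, cv=5).mean()
    comparison[name] = (full, reduced)
    print(f"{name:<18} all={full:.3f} reduced={reduced:.3f} change={reduced - full:+.3f}")
```

A model whose score changes little after retraining on fewer features is the more consistent candidate.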