Introduction

Large Language Models have revolutionized productivity by enabling tasks like Q&A, dynamic code generation, and agentic systems. However, pre-trained vanilla models are often biased and can produce harmful content. To improve their behavior, algorithms like Reinforcement Learning with Human Feedback (RLHF) and Direct Preference Optimization (DPO) can be used. This article covers RLHF techniques and the implementation of DPO using Unsloth, highlighting the importance of these techniques in improving the quality and effectiveness of models across tasks.

Learning Objectives

- Understand the significance of fine-tuning large language models (LLMs) for specific tasks and applications.

- Differentiate between RLHF (Reinforcement Learning with Human Feedback) and DPO (Direct Preference Optimization) as fine-tuning approaches for LLMs.

- Identify the pros and cons of both RLHF and DPO techniques in the context of LLM fine-tuning.

- Explore open-source tools available for implementing DPO fine-tuning, such as the TRL (Transformer Reinforcement Learning) library, Axolotl, and Unsloth.

- Learn the steps involved in fine-tuning a Llama 3 8B model using DPO with Unsloth, including data preparation, model installation, training, and inference.

- Understand the key parameters and hyperparameters involved in training a model using DPO.

- Gain insights into the benefits of using Llama 3 models, such as reduced memory footprint and improved inference speed.

This article was published as a part of the Data Science Blogathon.

What is Llama 3?

Llama 3 is a family of open-source models recently released by Meta. The model family consists of pre-trained and instruction-tuned chat models with 8B and 70B parameters. Since its release, it has been well received by the OSS community. The models have performed well on various benchmarks such as MMLU, HumanEval, and MATH. The small 8B model in particular has outperformed many bigger models, which makes it ideal for personal use and edge deployment. However, many use cases require the models to be fine-tuned on a custom dataset to perform well. So, let’s understand what RLHF and DPO are, and then implement DPO.

What is RLHF?

RLHF is an alignment technique usually applied after the supervised fine-tuning process to instill certain types of behavior in a base model. For example, the model can be trained to refuse to respond to harmful prompts or to avoid hate speech. This is an important step before releasing models to the public. Big companies like Google, Meta, and OpenAI spend enormous resources aligning their models before releasing them into the wild.

How Does RLHF Work?

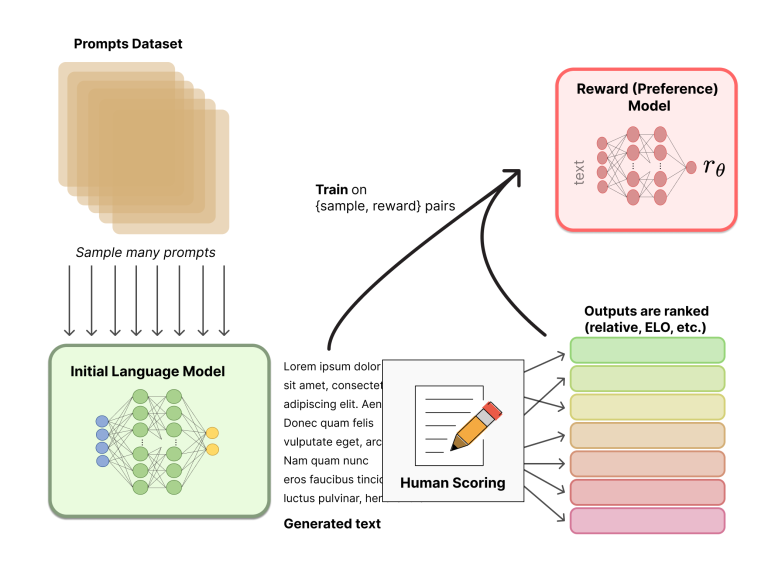

The RLHF technique is a two-step process that involves training a reward model on preference data and then fine-tuning the base model with reinforcement learning. The preference dataset is a highly curated dataset of accepted and rejected responses from foundational language models. A human data annotator ranks each response to add variability. The reward model is then trained or fine-tuned on the preference data; it can be the same model or a different language model. It is also possible to use a traditional classification model.
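To make the reward-model step more concrete, here is a minimal sketch of a pairwise ranking loss that is commonly used for this purpose. It is an illustration, not the method of any specific lab: the function name and the example scores are hypothetical, and a real reward model would produce the scores with a neural network.

```python
import math

def pairwise_reward_loss(score_chosen: float, score_rejected: float) -> float:
    """Pairwise ranking loss: -log(sigmoid(score_chosen - score_rejected))."""
    margin = score_chosen - score_rejected
    # log1p(exp(-margin)) is algebraically equal to -log(sigmoid(margin))
    return math.log1p(math.exp(-margin))

# Hypothetical reward-model scores for one (accepted, rejected) pair
loss_correct = pairwise_reward_loss(2.0, 0.5)  # accepted response scored higher
loss_wrong = pairwise_reward_loss(0.5, 2.0)    # accepted response scored lower
print(loss_correct, loss_wrong)
```

The loss shrinks as the reward model scores the accepted response further above the rejected one, which is what pushes it to reproduce the annotators’ ranking.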

The next step is to fine-tune the base model using RL. Traditionally in RLHF, the PPO (Proximal Policy Optimization) algorithm is used to update the parameters of the base model based on a reward function. In PPO, we have an initial language model, a policy model that will be fine-tuned, and the reward model from the previous step.

Prompts from the preference dataset are given to the RL policy model to generate responses, which are then also fed to the initial base model to calculate the relative KL penalty. The KL penalty measures how far one probability distribution diverges from another, ensuring the policy model does not drift far from the base model. In practice it is computed from the difference between the log-probabilities that the two models assign to the generated tokens.

In the next step, the reward model assigns preference scores to the responses from the RL policy model. After this, the parameters of the RL policy model are updated by maximizing the reward function. The reward function combines the preference score with the KL penalty: the penalty is scaled by a coefficient and subtracted, so drifting away from the base model lowers the reward.
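The reward computation described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the function names, the `kl_coef` value, and the per-token log-probabilities are all hypothetical, and the KL term is a sample-based estimate over the generated tokens rather than an exact divergence.

```python
def kl_penalty(policy_logprobs, base_logprobs):
    """Sample-based KL estimate: sum of per-token log-probability differences."""
    return sum(p - b for p, b in zip(policy_logprobs, base_logprobs))

def rlhf_reward(preference_score, policy_logprobs, base_logprobs, kl_coef=0.1):
    """Preference score minus the scaled KL penalty."""
    return preference_score - kl_coef * kl_penalty(policy_logprobs, base_logprobs)

# Hypothetical log-probs that each model assigns to the same three generated tokens
policy_lp = [-0.2, -0.5, -0.1]
base_lp = [-0.4, -0.9, -0.3]

reward = rlhf_reward(preference_score=1.0, policy_logprobs=policy_lp, base_logprobs=base_lp)
print(reward)
```

A larger `kl_coef` keeps the policy closer to the base model, at the cost of slower movement toward high-scoring responses.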

From here onwards, the policy model can be updated iteratively.

While RLHF using PPO has upsides, such as greater flexibility to incorporate various types of feedback, the implementation can be unstable. Here are some of the pros and cons of the RLHF fine-tuning method.

Pros of RLHF

- RLHF provides greater control over fine-tuning because it allows for designing nuanced reward models.

- RLHF can also accommodate diverse reward formats such as numbers, implicit feedback, and textual corrections.

- RLHF can be useful when the model needs to be trained over vast data.

Cons of RLHF

- Training and fitting a reward model can be challenging both technically and computationally.

- While it allows diverse feedback to guide LLMs, it is often unstable and less reliable than DPO.

What is Direct Preference Optimization?

Direct Preference Optimization is a fine-tuning technique that aims to improve on the shortcomings of PPO. DPO simplifies RLHF by eliminating the need for reward modeling and for training the model through RL-based optimization. Instead, it directly optimizes the language model on human preference data. This is achieved by using pairwise comparisons of model outputs, where human evaluators choose which of two responses they prefer for a given prompt. The feedback from these comparisons is used directly to guide the training of the language model. We can also use responses from better models as preferred and responses from weaker models as rejected to fine-tune base models.

Direct Preference Optimization uses a reference model instead of a reward model, aiming to assign a higher probability to preferred responses and a lower probability to rejected responses. This approach is more stable and efficient than PPO-based RLHF, as it bypasses the extensive reward model training and fitting processes.
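As a rough illustration of how the reference model enters the objective, the sketch below computes the DPO loss for a single preference pair from sequence-level log-probabilities. The function name and the numbers are hypothetical. In a real run, the log-probabilities come from the policy and the frozen reference model, and a library such as TRL handles batching and masking.

```python
import math

def dpo_loss(policy_chosen_lp, policy_rejected_lp,
             ref_chosen_lp, ref_rejected_lp, beta=0.1):
    """DPO loss for one pair: -log(sigmoid(beta * (chosen_ratio - rejected_ratio)))."""
    # How much more (or less) likely each response is under the policy vs. the reference
    chosen_logratio = policy_chosen_lp - ref_chosen_lp
    rejected_logratio = policy_rejected_lp - ref_rejected_lp
    logits = beta * (chosen_logratio - rejected_logratio)
    return math.log1p(math.exp(-logits))  # equals -log(sigmoid(logits))

# Hypothetical summed log-probs of the preferred and rejected responses
start = dpo_loss(-12.0, -15.0, -12.0, -15.0)     # policy identical to reference
improved = dpo_loss(-10.0, -18.0, -12.0, -15.0)  # policy now favors the preferred response
print(start, improved)
```

When the policy equals the reference, the loss is log(2). It falls as the policy raises the preferred response and lowers the rejected one relative to the reference, and `beta` controls how strongly that margin is rewarded.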

Pros of Direct Preference Optimization

- DPO is straightforward to implement. There is no need to train a separate reward model.

- Besides being easy to implement, DPO is also more stable and predictable. The models can be reliably guided toward a specific goal.

- DPO is more resource-efficient because it operates directly on the LLM.

Cons of Direct Preference Optimization

- DPO does not provide the flexibility of a complex reward mechanism design.

- It cannot work with diverse feedback the way RLHF can. DPO relies on a binary feedback format.

Now, let’s explore the open-source tools for implementing DPO. There are several ways to implement DPO using different open-source tools.

- TRL: The most popular route is Huggingface’s TRL library. It has all the bells and whistles for efficient DPO fine-tuning. As it is from Huggingface, you can integrate other Huggingface libraries seamlessly.

- Axolotl: If you do not want to bother with Python code, there is another open-source tool called Axolotl. Instead of writing code in Python, it lets us define all the parameters and hyperparameters in a YAML file. This makes it much easier to manage the fine-tuning process. It wraps the TRL library underneath, so we can use all of its functionality but in a cleaner way.

- Unsloth: Another open-source tool that lets you fine-tune LLMs efficiently. The library implements CUDA-optimized custom Triton kernels for faster training and inference. It also leaves a smaller memory footprint during model training. We will use Unsloth for DPO fine-tuning of the Llama 3 8B model.

So, let’s implement Direct Preference Optimization fine-tuning on the Llama 3 model using Unsloth.

DPO Fine-tuning with Unsloth

Let us now explore DPO fine-tuning with Unsloth. We need to go through the following steps.

Step1: Install Dependencies

Before moving ahead, install the dependencies. We will install Unsloth from its git repository, along with flash-attention, trl, and Wandb for logging. Optionally, you can install DeepSpeed for distributed training across GPUs.

import torch
major_version, minor_version = torch.cuda.get_device_capability()

# Must install separately since Colab has torch 2.2.1, which breaks packages
!pip install "unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git"

if major_version >= 8:
    # Use this for new GPUs like Ampere, Hopper GPUs (RTX 30xx, RTX 40xx, A100, H100, L40)
    !pip install --no-deps packaging ninja einops flash-attn xformers trl peft accelerate bitsandbytes
else:
    # Use this for older GPUs (V100, Tesla T4, RTX 20xx)
    !pip install --no-deps xformers trl peft accelerate bitsandbytes

Step2: Set Key in Local Environment

Now, set WANDB_API_KEY in your local environment.

import os
os.environ['WANDB_API_KEY'] = "your_api_key"

Step4: Data Preparation

We will use the Orca DPO dataset from Intel for alignment through DPO. As we learned before, a DPO dataset has a prompt column, a column for chosen answers, and a column for rejected answers.

This is a small dataset; you can use other DPO datasets like Argilla’s UltraFeedback preference data.

The data is well suited to DPO tuning. We can load it using Huggingface’s datasets library. Rename the column question to prompt, as TRL’s DPOTrainer requires it. We will also need to split the data into train and test sets.
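To show the record shape that the trainer expects, here is a small pure-Python sketch that renames question to prompt and checks that the three required columns are present. The helper name and the example row are made up for illustration and are not taken from the real dataset.

```python
REQUIRED_COLUMNS = ("prompt", "chosen", "rejected")

def to_dpo_record(row: dict) -> dict:
    """Rename 'question' to 'prompt' and keep only the columns DPO needs."""
    record = dict(row)
    if "question" in record:
        record["prompt"] = record.pop("question")
    missing = [col for col in REQUIRED_COLUMNS if col not in record]
    if missing:
        raise KeyError(f"missing columns: {missing}")
    return {col: record[col] for col in REQUIRED_COLUMNS}

# Hypothetical row, invented for this example
row = {
    "system": "You are a helpful assistant.",
    "question": "What is 2 + 2?",
    "chosen": "2 + 2 = 4.",
    "rejected": "5",
}
record = to_dpo_record(row)
print(record)
```

With the datasets library, the same renaming is done in one call on the whole dataset, so a helper like this is mainly useful for checking custom data before training.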

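from datasets import load_dataset

# Load the training split of the preference dataset
dataset = load_dataset("Intel/orca_dpo_pairs", split = "train")
# DPOTrainer expects the prompt column to be named "prompt"
dataset = dataset.rename_column('question','prompt')
# Hold out 4% of the rows for evaluation
dataset_dict = dataset.train_test_split(test_size=0.04)

Step5: Install Llama 3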

We will now download the Llama 3 instruct quantized model from Unsloth. This will take a few moments. The 4-bit quantized model is around 5.76 GB. The script below downloads the model and loads it onto the GPU.

from unsloth import FastLanguageModel
import torch

max_seq_length = 4096
dtype = None  # None lets Unsloth pick the dtype automatically
load_in_4bit = True  # Use 4-bit quantization to reduce memory usage. Can be False.

model, tokenizer = FastLanguageModel.from_pretrained(
    model_name = "unsloth/llama-3-8B-instruct-bnb-4bit",
    max_seq_length = max_seq_length,
    dtype = dtype,
    load_in_4bit = load_in_4bit,
)

Step6: Load LoRA Adapters

We can now add the required LoRA adapters to the Llama model. We will only update roughly 1-10% of the total parameters. According to Unsloth, setting gradient checkpointing to “unsloth” uses 30% less memory and accommodates 2x larger batch sizes.

model = FastLanguageModel.get_peft_model(
    model,
    r = 64, # Choose any number > 0! Suggested 8, 16, 32, 64, 128
    target_modules = ["q_proj", "k_proj", "v_proj", "o_proj",
                      "gate_proj", "up_proj", "down_proj",],
    lora_alpha = 64,
    lora_dropout = 0,
    bias = "none",
    use_gradient_checkpointing = "unsloth", # True or "unsloth" for very long context
    random_state = 3407,
    use_rslora = False,
    loftq_config = None,
)

- r: The “r” stands for the rank of the low-rank adapters. A higher rank increases the number of trainable parameters, which improves the model’s adaptability to the data. At the same time, it increases computing requirements.

- lora_alpha: A scaling factor that behaves somewhat like a learning rate. It modulates the effect of the trained update matrices on the original weights of the model; with standard LoRA, the update is scaled by lora_alpha / r.

- target_modules: The target modules are the layers of the model architecture to which the updates will be applied.
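To get a feel for how r and lora_alpha interact, the following back-of-the-envelope sketch counts the parameters that one adapter adds and computes the standard LoRA scaling factor. The helper names are hypothetical, and the 4096 x 4096 layer size is an assumption chosen for illustration rather than a value read from the model.

```python
def lora_param_count(d_out: int, d_in: int, r: int) -> int:
    """Parameters added by one adapter: B is d_out x r and A is r x d_in."""
    return r * (d_out + d_in)

def lora_scaling(lora_alpha: float, r: int) -> float:
    """Standard LoRA scaling applied to the update B @ A (rsLoRA disabled)."""
    return lora_alpha / r

# Assumed square projection layer; r and lora_alpha match the values used above
d_out, d_in = 4096, 4096
full_params = d_out * d_in
added_params = lora_param_count(d_out, d_in, r=64)
fraction = added_params / full_params
scaling = lora_scaling(lora_alpha=64, r=64)

print(added_params, fraction, scaling)
```

Doubling r doubles the adapter size, while keeping lora_alpha equal to r leaves the update scale at 1, which is one reason the two values are often changed together.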

Step7: Define LoRA Hyper-parameters

Now, define all the training arguments and hyperparameters for model training. But before that, patch the DPOTrainer. This is only needed if you are working in a notebook, where it improves the training logs shown in Jupyter. Skip this step if you are not in an IPython notebook.

from unsloth import PatchDPOTrainer
PatchDPOTrainer()

Log in to your Weights and Biases profile.

import wandb
wandb.login()

Now define the LoRA hyper-parameters.

import torch
import wandb
from transformers import TrainingArguments
from trl import DPOTrainer

project_name = "llama3"
entity = "wandb"
# os.environ["WANDB_LOG_MODEL"] = "checkpoint"
wandb.init(project=project_name, name = "llama-3-8b-instruct-DPO-1")  # run name shown in W&B

# Note: recent TRL releases move beta, max_length and max_prompt_length into DPOConfig.
dpo_trainer = DPOTrainer(
    model = model,
    args = TrainingArguments(
        per_device_train_batch_size = 2,
        gradient_accumulation_steps = 3,
        warmup_ratio = 0.1,
        num_train_epochs = 1,
        learning_rate = 5e-6,
        fp16 = not torch.cuda.is_bf16_supported(),
        bf16 = torch.cuda.is_bf16_supported(),
        logging_steps = 1,
        #max_steps=20,
        optim = "adamw_8bit",
        weight_decay = 0.0,
        lr_scheduler_type = "linear",
        seed = 42,
        report_to="wandb", # enable logging to W&B
        output_dir = "outputs",
    ),
    beta = 0.1,  # strength of the preference margin in the DPO loss
    train_dataset = dataset_dict["train"],
    eval_dataset = dataset_dict["test"],
    tokenizer = tokenizer,
    max_length = 1024,
    max_prompt_length = 512,
)

Here’s a quick breakdown of all the key training arguments used above.

- per_device_train_batch_size: The batch size per device during training.

- gradient_accumulation_steps: The number of steps over which gradients are accumulated before performing an optimizer update.

- warmup_ratio: The fraction of the total training steps over which the learning rate linearly ramps up to its maximum value.

- num_train_epochs: The number of full passes through the training dataset.

- optim: The type of optimizer used. Here, it is 8-bit AdamW.

- lr_scheduler_type: This parameter controls how the learning rate changes during training. A linear scheduler decreases the learning rate linearly after warmup.
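The arithmetic behind these arguments can be sketched as follows. This is an approximation with hypothetical helper names and a made-up dataset size, and the Trainer’s exact rounding of steps may differ slightly. The learning-rate function mimics a linear warmup followed by a linear decay to zero.

```python
import math

def training_schedule(num_examples, per_device_batch, grad_accum,
                      epochs, warmup_ratio, num_devices=1):
    """Return (effective batch size, total optimizer steps, warmup steps)."""
    effective_batch = per_device_batch * grad_accum * num_devices
    steps_per_epoch = math.ceil(num_examples / effective_batch)
    total_steps = steps_per_epoch * epochs
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    return effective_batch, total_steps, warmup_steps

def linear_lr(step, total_steps, warmup_steps, peak_lr):
    """Linear warmup to peak_lr, then linear decay to zero."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)

# Hypothetical dataset size; the other values match the arguments above
effective_batch, total_steps, warmup_steps = training_schedule(
    num_examples=12000, per_device_batch=2, grad_accum=3,
    epochs=1, warmup_ratio=0.1,
)
print(effective_batch, total_steps, warmup_steps)
print(linear_lr(100, total_steps, warmup_steps, peak_lr=5e-6))
```

With a batch size of 2 and 3 accumulation steps, each optimizer update sees 6 examples per device, so halving the batch size and doubling the accumulation steps keeps the effective batch size unchanged while lowering peak memory.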

Now, start training.

dpo_trainer.train()

This will kick-start model fine-tuning. If you encounter an out-of-memory (OOM) error, try reducing the per-device training batch size; you can raise gradient_accumulation_steps to keep the effective batch size unchanged. You can visualize the training run in the notebook or monitor it from your Wandb profile.

Step8: Inference

Once the training is done, save the LoRA model.

model.save_pretrained("lora_model")

Now you can load the LoRA model and start asking questions.

from unsloth import FastLanguageModel

model, tokenizer = FastLanguageModel.from_pretrained(
    model_name = "lora_model",
    max_seq_length = 512,
    # dtype = dtype,
    load_in_4bit = True,
)
FastLanguageModel.for_inference(model)  # switch the model to inference mode

We can define a transformers pipeline for inference.

import transformers

message = [
    {"role": "system", "content": "You are a helpful assistant chatbot."},
    {"role": "user", "content": "What is a Large Language Model?"}
]
# Render the chat messages into a single prompt string
prompt = tokenizer.apply_chat_template(message, add_generation_prompt=True, tokenize=False)

# Create pipeline
pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer
)

# Stop generation at either end-of-sequence token
terminators = [
    pipeline.tokenizer.eos_token_id,
    pipeline.tokenizer.convert_tokens_to_ids("<|eot_id|>")
]

# Generate text
sequences = pipeline(
    prompt,
    do_sample=True,
    temperature=0.7,
    top_p=0.9,
    eos_token_id=terminators,
    num_return_sequences=1,
    max_length=200,
)
# Print only the newly generated text, without the prompt
print(sequences[0]['generated_text'][len(prompt):])

You can also wrap it in a Gradio chat interface using the script below.

import gradio as gr

messages = []

def add_text(history, text):
    global messages  # the messages list is defined globally
    # Use a list (not a tuple) so the reply can be filled in later
    history = history + [[text, ""]]
    messages = messages + [{"role": "user", "content": text}]
    return history, ""

def generate(history):
    global messages
    prompt = pipeline.tokenizer.apply_chat_template(
        messages,
        tokenize=False,
        add_generation_prompt=True
    )
    terminators = [
        pipeline.tokenizer.eos_token_id,
        pipeline.tokenizer.convert_tokens_to_ids("<|eot_id|>")
    ]
    outputs = pipeline(
        prompt,
        max_new_tokens=256,
        eos_token_id=terminators,
        do_sample=True,
        temperature=0.6,
        top_p=0.9,
    )
    response_msg = outputs[0]["generated_text"][len(prompt):]
    # Stream the reply into the last chat turn one character at a time
    for char in response_msg:
        history[-1][1] += char
        yield history
    # Remember the reply so that follow-up questions have context
    messages = messages + [{"role": "assistant", "content": response_msg}]

with gr.Blocks() as demo:
    chatbot = gr.Chatbot(value=[], elem_id="chatbot")
    with gr.Row():
        txt = gr.Textbox(
            show_label=False,
            placeholder="Enter text and press enter",
        )
    txt.submit(add_text, [chatbot, txt], [chatbot, txt], queue=False).then(
        generate, inputs=[chatbot], outputs=chatbot)

demo.queue()
demo.launch(debug=True)

Conclusions

Llama 3 from Meta has proven to be very capable, especially the small 8B model. It can be run on cheaper hardware and fine-tuned to suit particular use cases. To make such models commercially viable, however, we may need to fine-tune them for custom use cases. This article discussed fine-tuning techniques like RLHF and DPO, and the implementation of DPO using Unsloth. Here are the key takeaways from the article.

Key Takeaways

- RLHF is an alignment technique usually applied to models post-training. PPO is the most widely used RLHF method and allows for model alignment with greater control.

- DPO has emerged as an effective alternative to PPO training. It bypasses PPO’s complicated and unreliable workflow by using a copy of the model itself as a reference, so no separate reward model has to be trained.

- DPO can be implemented using open-source tools like Unsloth, HuggingFace TRL and Transformers, Axolotl, etc.

- Unsloth has custom-optimized implementations of popular language models, which help reduce training time and memory footprint and improve model inference.

Frequently Asked Questions

A. Fine-tuning, in the context of machine learning, especially deep learning, is a technique where you take a pre-trained model and adapt it to a new, specific task.

A. Yes, it is possible to fine-tune small LLMs using Colab’s T4 GPU and QLoRA.

A. Fine-tuning greatly improves the capabilities of pre-trained base models on multiple tasks like coding, reasoning, and math.

A. DPO, or Direct Preference Optimization, is an alignment technique for adapting models to new data. It uses a reference model and a preference dataset to fine-tune the base model.

A. Llama 3 is a family of models from Meta. There are four models in total: 8B and 70B models, each in pre-trained and instruction-tuned variants.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.