- Fundamental Machine Learning Concepts: Before diving into specific algorithms, it’s essential to understand fundamental concepts like supervised learning, unsupervised learning, regression, classification, overfitting, the bias-variance tradeoff, and model evaluation techniques (like cross-validation and performance metrics).

- Decision Trees: Understanding decision trees is foundational for more advanced techniques like Random Forest. Decision trees are intuitive and easy to interpret, making them a great starting point. Learn how decision trees are built, along with split criteria (like Gini impurity and entropy), pruning, and tree visualization.

- Ensemble Learning: Once you’re comfortable with decision trees, you can move on to ensemble learning. Ensemble methods combine multiple models to improve predictive performance. Understand the intuition behind ensemble methods and how they address the limitations of individual models.

- Random Forest: Random Forest is a popular ensemble learning technique based on decision trees. Learn how Random Forest builds multiple decision trees and combines their predictions through averaging or voting. Dive into topics like bagging, feature sampling, and out-of-bag evaluation.
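To make the split criteria mentioned above concrete, here is a minimal sketch of Gini impurity and entropy using only the Python standard library. The function names (`gini_impurity`, `entropy`, `weighted_impurity`) are illustrative choices for this post, not part of any particular library.

```python
from collections import Counter
from math import log2


def gini_impurity(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def entropy(labels):
    """Shannon entropy in bits: sum of -p * log2(p) over class proportions p."""
    n = len(labels)
    if n == 0:
        return 0.0
    return sum(-(count / n) * log2(count / n) for count in Counter(labels).values())


def weighted_impurity(left, right, criterion=gini_impurity):
    """Impurity of a candidate split: child impurities weighted by child size."""
    n = len(left) + len(right)
    return (len(left) / n) * criterion(left) + (len(right) / n) * criterion(right)
```

A perfectly mixed two-class node such as `["a", "a", "b", "b"]` has a Gini impurity of 0.5 and an entropy of 1 bit, while a pure node scores 0 on both. A tree builder would typically prefer the candidate split with the lowest `weighted_impurity`.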

Now, let’s delve into Random Forest and Ensemble Learning:

Ensemble learning involves combining multiple models to improve the overall performance of the system. The basic idea is that by aggregating the predictions of multiple models, the ensemble can often achieve better predictive accuracy than any individual model. There are several techniques for ensemble learning, including:

- Bagging (Bootstrap Aggregating): Bagging involves training multiple models independently on different subsets of the training data, sampled with replacement. Each model has an equal vote in the final prediction.

- Boosting: Boosting works by sequentially training models, where each subsequent model corrects the errors of the previous ones. Examples include AdaBoost (Adaptive Boosting) and Gradient Boosting.

- Stacking: Stacking combines the predictions of multiple models using another model, often called a meta-learner or blender. The base models’ predictions serve as features for the meta-learner.

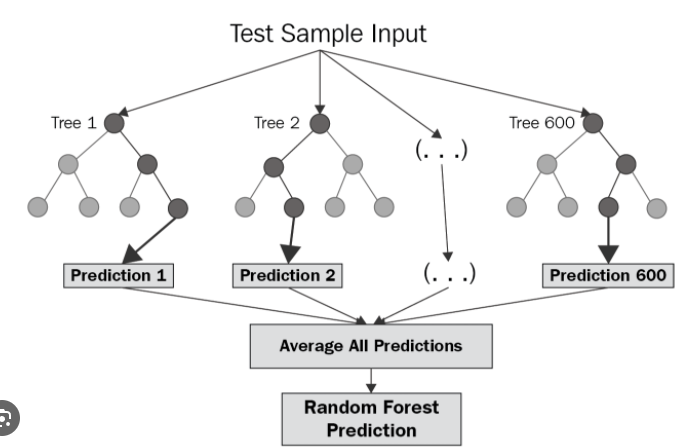
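As a rough illustration of bagging and of why aggregation can help, the sketch below draws a bootstrap sample, takes a majority vote, and computes how accurate a majority vote would be if every model were right with the same probability and made errors independently. That independence assumption is a simplification made here for illustration (real models trained on overlapping data are correlated), and all function names are hypothetical.

```python
import random
from collections import Counter
from math import comb


def bootstrap_sample(rows, rng):
    """Draw len(rows) items from rows with replacement."""
    return [rng.choice(rows) for _ in rows]


def majority_vote(predictions):
    """Return the most common prediction (ties go to the label seen first)."""
    return Counter(predictions).most_common(1)[0][0]


def majority_vote_accuracy(p, n_models):
    """Probability that a strict majority of n_models is correct, assuming
    each model is correct with probability p and errors are independent."""
    k_min = n_models // 2 + 1
    return sum(
        comb(n_models, k) * p**k * (1 - p) ** (n_models - k)
        for k in range(k_min, n_models + 1)
    )
```

Under these assumptions, three models that are each 60% accurate give a majority vote that is correct 64.8% of the time (`majority_vote_accuracy(0.6, 3)`), and the figure keeps rising as more such models are added. This is the intuition behind giving each bagged model an equal vote.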

Random Forest is a specific type of ensemble learning method that builds a collection of decision trees and aggregates their predictions. Here’s how it works:

- Random Sampling of Data: For each tree in the forest, a random sample of the training data is taken with replacement (bagging).

- Random Feature Selection: At each node of the decision tree, instead of considering all features for splitting, a random subset of features is selected. This helps decorrelate the trees and increases diversity.

- Building Decision Trees: Multiple decision trees are built independently using the sampled data and features.

- Aggregation of Predictions: When making predictions, the predictions from all trees are aggregated. For regression tasks, this typically involves averaging the predictions, while for classification tasks, it typically involves majority voting.
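The four steps above can be sketched in a few dozen lines of plain Python. To keep the example short, each "tree" here is a one-split decision stump chosen by training error rather than Gini impurity or entropy, so selecting a random feature subset per tree is the same as selecting it at the stump's only node. This is a toy sketch under those assumptions, not how any production library implements Random Forest, and all names (`fit_stump`, `fit_forest`, `predict_forest`) are made up for illustration.

```python
import random
from collections import Counter


def majority(labels):
    """Most common label in a list."""
    return Counter(labels).most_common(1)[0][0]


def fit_stump(X, y, feature_indices):
    """Pick the (feature, threshold) split with the fewest training errors,
    searching only the given subset of features."""
    best = None  # (errors, feature, threshold, left_label, right_label)
    for f in feature_indices:
        for threshold in sorted({row[f] for row in X}):
            left = [label for row, label in zip(X, y) if row[f] <= threshold]
            right = [label for row, label in zip(X, y) if row[f] > threshold]
            if not left or not right:
                continue  # not a real split
            left_label, right_label = majority(left), majority(right)
            errors = sum(l != left_label for l in left)
            errors += sum(l != right_label for l in right)
            if best is None or errors < best[0]:
                best = (errors, f, threshold, left_label, right_label)
    if best is None:  # no valid split found: always predict the majority class
        label = majority(y)
        return (0, float("inf"), label, label)
    return best[1:]


def predict_stump(stump, row):
    feature, threshold, left_label, right_label = stump
    return left_label if row[feature] <= threshold else right_label


def fit_forest(X, y, n_trees=25, max_features=1, seed=0):
    """Train n_trees stumps, each on a bootstrap sample and a random feature subset."""
    rng = random.Random(seed)
    n_rows, n_features = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        # Step 1: random sampling of data with replacement (bagging).
        idx = [rng.randrange(n_rows) for _ in range(n_rows)]
        X_boot = [X[i] for i in idx]
        y_boot = [y[i] for i in idx]
        # Step 2: random feature selection for this stump's single node.
        features = rng.sample(range(n_features), max_features)
        # Step 3: build the tree independently of the others.
        forest.append(fit_stump(X_boot, y_boot, features))
    return forest


def predict_forest(forest, row):
    """Step 4: aggregate the trees' predictions by majority vote."""
    return majority([predict_stump(stump, row) for stump in forest])
```

A small usage example on made-up data:

```python
X = [[0, 0], [1, 1], [2, 2], [8, 8], [9, 9], [10, 10]]
y = [0, 0, 0, 1, 1, 1]
forest = fit_forest(X, y, n_trees=25, max_features=1, seed=0)
print(predict_forest(forest, [0, 0]), predict_forest(forest, [10, 10]))
```

For a regression task, the vote in `predict_forest` would be replaced by an average of the trees' numeric predictions.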

Random Forests are robust, scalable, and less prone to overfitting than individual decision trees. They excel at handling high-dimensional data with complex relationships and are widely used in various domains, including finance, healthcare, and natural language processing.

Understanding ensemble learning and Random Forests after mastering decision trees provides a solid foundation for building more sophisticated predictive models.