Variance Threshold is a simple baseline feature selection technique. It removes all features whose variance doesn’t meet a specified threshold. Features with zero variance are constant: they take the same value in every sample, carry no information that distinguishes one sample from another, and can therefore be removed.

Removing low-variance features can help in several ways:

- Remove Uninformative Features: Features with low variance are often not very informative. Removing them can help reduce the dimensionality of the data without losing much information.

- Reduce Overfitting: Removing features that barely vary reduces the complexity of the model, which can help avoid overfitting.

- Improve Efficiency: Fewer features can lead to faster training and prediction times.

The technique works in three steps:

- Calculate Variance: Compute the variance of each feature in the dataset.

- Threshold Comparison: Compare the variance of each feature against a predefined threshold.

- Feature Selection: Retain the features whose variance is above the threshold.
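The three steps above can be sketched directly in NumPy. This is a minimal illustration, not scikit-learn’s implementation: it assumes population variance (ddof=0) and a strict “greater than” comparison, which is how we understand `VarianceThreshold` to behave. The function name `variance_threshold_mask` is hypothetical.

```python
import numpy as np


def variance_threshold_mask(X, threshold=0.0):
    """Return (mask, variances) for the columns of X.

    Hypothetical helper. Assumes population variance (ddof=0) and keeps
    columns whose variance is strictly greater than `threshold`.
    """
    X = np.asarray(X, dtype=float)
    # Step 1: compute the variance of each feature (column)
    variances = X.var(axis=0)
    # Step 2: compare each variance against the threshold
    mask = variances > threshold
    return mask, variances


# Toy matrix: two constant columns followed by two varying ones
X = np.array([
    [1, 2, 1, 2],
    [1, 2, 2, 3],
    [1, 2, 3, 4],
    [1, 2, 4, 5],
    [1, 2, 5, 6],
])

mask, variances = variance_threshold_mask(X, threshold=0.0)

# Step 3: retain only the columns that pass the threshold
X_selected = X[:, mask]

print(variances)
print(mask)
print(X_selected)
```

On this toy matrix the column variances come out as 0, 0, 2 and 2, so with a threshold of 0.0 only the last two columns are retained.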

Let’s go through an example to illustrate the use of the `VarianceThreshold` class from the sklearn library.

Sample Code:

```python
import pandas as pd
from sklearn.feature_selection import VarianceThreshold

# Sample dataset
data = {
    'Feature_1': [1, 1, 1, 1, 1],
    'Feature_2': [2, 2, 2, 2, 2],
    'Feature_3': [1, 2, 3, 4, 5],
    'Feature_4': [2, 3, 4, 5, 6]
}
df = pd.DataFrame(data)

# Define the VarianceThreshold object with a threshold
threshold = 0.0  # This threshold removes only features with zero variance
vt = VarianceThreshold(threshold)

# Fit the VarianceThreshold model and transform the data
vt.fit(df)
transformed_data = vt.transform(df)

# Get the names of the features that are kept
features_kept = df.columns[vt.get_support()]

print(f'Features kept: {features_kept.tolist()}')
print(f'Transformed Data:\n{pd.DataFrame(transformed_data, columns=features_kept)}')
```

1. Import Libraries:

- Import the necessary libraries, including `pandas` and `VarianceThreshold` from `sklearn`.

2. Create Sample Data:

- A sample dataset is created with four features.

- `Feature_1` and `Feature_2` have zero variance, while `Feature_3` and `Feature_4` have non-zero variance.

3. Define the VarianceThreshold Object:

- The `VarianceThreshold` object is initialized with a threshold of 0.0, meaning only features with zero variance will be removed.

4. Fit and Transform Data:

- The `fit` method computes the variance of each feature, and the `transform` method removes the features that don’t meet the threshold.

5. Get Features Kept:

- `vt.get_support()` returns a boolean array indicating which features are retained.

- The names of the retained features are printed along with the transformed data.

```
Features kept: ['Feature_3', 'Feature_4']
Transformed Data:
   Feature_3  Feature_4
0          1          2
1          2          3
2          3          4
3          4          5
4          5          6
```

You can specify a higher threshold to remove features with low, but not necessarily zero, variance:

```python
higher_threshold = 0.5
vt_high = VarianceThreshold(higher_threshold)
vt_high.fit(df)
transformed_data_high = vt_high.transform(df)

features_kept_high = df.columns[vt_high.get_support()]

print(f'Features kept with higher threshold: {features_kept_high.tolist()}')
print(f'Transformed DataFrame with higher threshold:\n{pd.DataFrame(transformed_data_high, columns=features_kept_high)}')
```

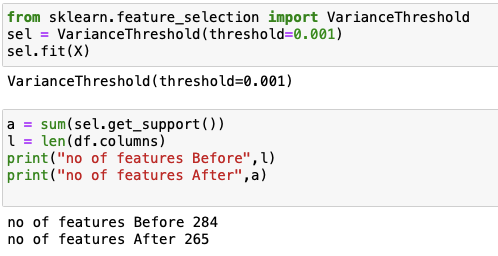
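In this toy dataset `Feature_3` and `Feature_4` each have a variance of 2.0 (squared deviations 4, 1, 0, 1, 4, divided by 5), so raising the threshold to 0.5 still keeps both of them. The sketch below checks this by reading the fitted `variances_` attribute. It assumes, as we understand scikit-learn’s behaviour, that this is the population variance (ddof=0), which is why it is compared with pandas’ `var(ddof=0)` rather than the default `var()`, which uses ddof=1 and would give 2.5.

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import VarianceThreshold

# Same toy dataset as in the sample code
data = {
    'Feature_1': [1, 1, 1, 1, 1],
    'Feature_2': [2, 2, 2, 2, 2],
    'Feature_3': [1, 2, 3, 4, 5],
    'Feature_4': [2, 3, 4, 5, 6],
}
df = pd.DataFrame(data)

vt_high = VarianceThreshold(threshold=0.5).fit(df)

# Per-feature variances computed during fit
print(dict(zip(df.columns, vt_high.variances_)))

# Population variance (ddof=0) from pandas, for comparison
print(df.var(ddof=0).to_dict())

# Features whose variance is above the threshold are kept
print(df.columns[vt_high.get_support()].tolist())
```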

Let’s consider a more detailed example with a larger dataset:

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import VarianceThreshold

# Creating a larger sample dataset
np.random.seed(0)
data_large = {
    'Feature_1': np.random.randint(0, 2, size=100),   # Low variance (binary 0/1, at most 0.25)
    'Feature_2': np.random.randint(0, 10, size=100),  # Higher variance
    'Feature_3': np.random.normal(0, 1, size=100),    # Higher variance
    'Feature_4': np.random.normal(0, 0.01, size=100)  # Very low variance
}
df_large = pd.DataFrame(data_large)

# Applying Variance Threshold
vt_large = VarianceThreshold(threshold=0.1)
vt_large.fit(df_large)
transformed_data_large = vt_large.transform(df_large)

features_kept_large = df_large.columns[vt_large.get_support()]

print(f'Features kept in large dataset: {features_kept_large.tolist()}')
print(f'Transformed DataFrame with large dataset:\n{pd.DataFrame(transformed_data_large, columns=features_kept_large)}')
```

- Choosing the Threshold: The choice of threshold depends on the specific problem and dataset. A higher threshold removes more features, potentially including some that carry useful information. It’s important to experiment with different thresholds.

- Scaling Data: Variance depends on the scale (units) of each feature, so a threshold that suits one feature can be meaningless for another. Rescaling the data before applying Variance Threshold, for example with min-max scaling, can make the variances comparable. Note that standardizing to unit variance (e.g. with `StandardScaler`) would give every non-constant feature a variance of 1, which defeats the purpose of the threshold.

- Type of Data: Variance Threshold is usually more useful for continuous features. For categorical data, other methods such as the chi-square test or mutual information may be more appropriate.
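The scaling point can be illustrated with a small pipeline. This is a sketch under stated assumptions: the dataset and its column names (`price_usd`, `ratio`, `constant`) are hypothetical, and min-max scaling is just one reasonable choice of rescaling. Without scaling, `ratio` falls below the threshold only because its values live in [0, 0.1], so its variance can be at most 0.0025. After mapping every column to [0, 1], both varying features survive while the constant one is still removed.

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import VarianceThreshold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler

# Hypothetical dataset: two varying features on very different scales
np.random.seed(0)
df_scales = pd.DataFrame({
    'price_usd': np.random.uniform(0, 1000, size=100),  # large raw variance
    'ratio': np.random.uniform(0, 0.1, size=100),       # raw variance at most 0.0025
    'constant': np.ones(100),                           # zero variance
})

threshold = 0.01

# Without scaling, 'ratio' is dropped purely because of its units
raw = VarianceThreshold(threshold).fit(df_scales)
print('Kept without scaling:', df_scales.columns[raw.get_support()].tolist())

# MinMaxScaler maps each non-constant column to [0, 1] before the variance
# check; a constant column becomes all zeros and is still removed
pipe = make_pipeline(MinMaxScaler(), VarianceThreshold(threshold))
pipe.fit(df_scales)
selector = pipe[-1]  # the fitted VarianceThreshold step
print('Kept after min-max scaling:', df_scales.columns[selector.get_support()].tolist())
```

Min-max scaling is used here instead of standardization because, as noted above, standardizing would set every non-constant variance to 1 and leave the threshold nothing to distinguish.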

Variance Threshold is a simple and effective method for feature selection, especially when you need to remove features with little to no variance. It’s a good first step in the feature selection process, helping to simplify your dataset and potentially improve model performance. However, it’s important to choose the threshold carefully and consider the nature of your data to get the most out of this technique.