Attention-based neural machine translation (NMT) has emerged as a powerful technique for improving the performance of machine translation systems.

The attention mechanisms discussed here were introduced in 2015 in the research paper “Effective Approaches to Attention-based Neural Machine Translation” by Minh-Thang Luong, Hieu Pham, and Christopher D. Manning.

History:

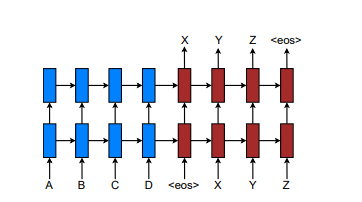

Before attention-based neural machine translation (NMT), the encoder would generate a single fixed-length representation for each source sentence and the decoder would generate one target word at a time. The main problem with this traditional encoder-decoder translation was that it couldn’t handle long sentences.

To overcome this problem, attention-based neural machine translation (NMT) was introduced. This technique allows the decoder to access the entire encoded input sentence, since certain parts of the input are more important than others for producing the next word in the translation.

The paper covers the following topics, and we’ll discuss them one by one:

1. Attention-based models

2. Global Attention

3. Local Attention

4. Results

5. Analysis

6. Conclusion

1. Attention-based models:

In the history section of this article, we discussed why an attention-based model was needed. In the attention-based model, to predict the next word in the translation, the decoder accesses the entire encoded input sentence and gives attention to words based on their relevance to the word being translated.

The core idea is to compute attention weights α over the input sentence, assigning priority to the words that carry the information needed to produce the next output token.

The attention weights are calculated in an attention block, and the goal is then to derive a context vector c_t that captures relevant source-side information to help predict the current target word.

This particular architecture of the attention model can take any input representation and reduce it to a single fixed-length context vector.

2. Global Attention:

In global attention, to translate the current target word, the model goes over all the words in the input sentence and assigns priority to the words that are relevant to the target word.

Let’s say that we have X1, X2, and X3 as our inputs and have already calculated the first output token. How do we calculate the second target hidden state using global attention? The approach is to take the current target hidden state and every source hidden state and feed them into a score function, which measures the similarity between two vectors, as shown in the diagram below.

The paper suggests four ways of calculating the global attention score: three content-based functions (dot, general, and concat) and one location-based function.
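To make the score functions concrete, here is a minimal pure-Python sketch of the three content-based variants (dot, general, and concat) as they are usually written: h_t·h_s, h_t·(W_a h_s), and v_a·tanh(W_a [h_t; h_s]). The fourth, location-based variant computes the weights from the target state alone and is not shown. The function names, the list-of-rows matrix layout, and the toy values are illustrative assumptions, not the authors' code.

```python
import math

def dot_score(h_t, h_s):
    """dot: inner product of the target and source hidden states."""
    return sum(a * b for a, b in zip(h_t, h_s))

def general_score(h_t, h_s, W_a):
    """general: h_t^T (W_a h_s); W_a is a list of rows (assumed layout)."""
    W_h_s = [sum(w * x for w, x in zip(row, h_s)) for row in W_a]
    return dot_score(h_t, W_h_s)

def concat_score(h_t, h_s, W_a, v_a):
    """concat: v_a^T tanh(W_a [h_t; h_s])."""
    joined = list(h_t) + list(h_s)
    hidden = [math.tanh(sum(w * x for w, x in zip(row, joined))) for row in W_a]
    return dot_score(v_a, hidden)

# Toy vectors, chosen only for illustration.
h_t = [1.0, 0.0]
h_s = [0.5, 2.0]
identity = [[1.0, 0.0], [0.0, 1.0]]

print(dot_score(h_t, h_s))                # 0.5
print(general_score(h_t, h_s, identity))  # 0.5 (identity W_a reduces to dot)
```

With an identity matrix for W_a, the general score reduces to the dot score, which is a handy sanity check when experimenting.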

To calculate the alignment vector, as the formula in the diagram below shows, we simply apply the softmax function to convert our score values into probabilities, which gives the alignment vector.
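The softmax step can be sketched in a few lines of pure Python. This is only an illustration: the scores are made-up numbers, and subtracting the maximum before exponentiating is a common numerical-stability trick rather than something the paper prescribes.

```python
import math

def softmax(scores):
    """Convert raw attention scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for a three-word source sentence.
scores = [2.0, 1.0, 0.1]
a_t = softmax(scores)
print(a_t)  # the largest score receives the largest weight
```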

The context vector c_t is computed as the weighted average over all the source hidden states. Given the target hidden state h_t and the source-side context vector c_t, we employ a simple concatenation layer to combine the information from both vectors and produce an attentional hidden state. The attentional vector is then fed through the softmax layer to predict the next output token.
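The two steps in this paragraph can be sketched as follows: a weighted average of the source states, then a concatenation layer of the form tanh(W_c [c_t; h_t]). The weight matrix W_c, the function names, and all numbers are toy assumptions for illustration; the final softmax over the vocabulary is omitted.

```python
import math

def context_vector(a_t, source_states):
    """Weighted average of the source hidden states using alignment weights a_t."""
    dim = len(source_states[0])
    return [sum(a * h[i] for a, h in zip(a_t, source_states)) for i in range(dim)]

def attentional_state(c_t, h_t, W_c):
    """Concatenate c_t and h_t, apply a linear map W_c, then tanh."""
    joined = list(c_t) + list(h_t)
    return [math.tanh(sum(w * x for w, x in zip(row, joined))) for row in W_c]

# Toy values: three source states of size 2 and their alignment weights.
source_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
a_t = [0.5, 0.25, 0.25]
c_t = context_vector(a_t, source_states)
print(c_t)  # [0.75, 0.5]

h_t = [0.2, -0.1]
W_c = [[0.1, 0.2, 0.3, 0.4],   # hypothetical 2x4 weight matrix
       [0.0, -0.5, 0.5, 0.0]]
h_tilde = attentional_state(c_t, h_t, W_c)
print(h_tilde)
```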

3. Local Attention:

Local attention is similar to global attention, but instead of going over all the input words in the sentence, we create a window around the source position aligned with the current target word and compute the context vector from that window only.

The context vector c_t is then derived as a weighted average over the set of source hidden states within the window [p_t - D, p_t + D], where D is chosen empirically. The aligned position p_t can be chosen in two ways:

- Monotonic (local-m):

We simply set p_t = t, assuming that the source and target sequences are roughly monotonically aligned. This means that the translation model focuses on a small group of words in the source sentence, moving forward step by step. It looks at a fixed, nearby part of the sentence for each word it translates, assuming the order of words mostly stays the same.

The alignment vector a_t is defined by the same softmax formula we saw in global attention, except that it only covers the window, so it is shorter and has a fixed length of 2D + 1.
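A minimal sketch of the monotonic variant: set p_t = t, keep only the scores inside the window, and apply softmax to them. Positions are 0-indexed here, and clamping p_t to the last source position and clipping the window at the sentence boundaries are assumptions made so the toy example always has a non-empty window.

```python
import math

def local_m_alignment(scores, t, D):
    """Softmax over scores inside the window [p_t - D, p_t + D] with p_t = t.

    Returns the index of the first source position in the window and the
    weights for the positions inside it.
    """
    p_t = min(t, len(scores) - 1)        # assumption: clamp to the sentence
    lo = max(0, p_t - D)                 # clip the window at the left edge
    hi = min(len(scores) - 1, p_t + D)   # clip the window at the right edge
    window = scores[lo:hi + 1]
    m = max(window)
    exps = [math.exp(s - m) for s in window]
    total = sum(exps)
    return lo, [e / total for e in exps]

# Ten source positions with equal scores, target step t = 5, half-width D = 2.
start, weights = local_m_alignment([0.0] * 10, t=5, D=2)
print(start, len(weights))  # 3 5
```

Away from the sentence boundaries the window always has 2D + 1 entries, which is why the alignment vector has a fixed length.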

- Predictive (local-p):

Predictive alignment is more flexible. The translation model predicts which part of the source sentence to focus on for each word it translates. Instead of assuming a fixed position, it spreads its attention smoothly around the predicted position.

The position is predicted as p_t = S · sigmoid(v_p^T tanh(W_p h_t)). W_p and v_p are the model parameters that are learned to predict positions, and S is the source sentence length. Because of the sigmoid, p_t always lies between 0 and S.
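The position prediction and the smooth weighting around it can be sketched as below. The Gaussian term exp(-(s - p_t)^2 / (2σ^2)) with σ = D/2 follows the paper's description as I understand it; the parameter values, shapes, and function names are toy assumptions, and in a real model W_p and v_p would be learned.

```python
import math

def predict_position(h_t, W_p, v_p, S):
    """p_t = S * sigmoid(v_p^T tanh(W_p h_t)); W_p is a list of rows."""
    hidden = [math.tanh(sum(w * x for w, x in zip(row, h_t))) for row in W_p]
    z = sum(v * h for v, h in zip(v_p, hidden))
    return S / (1.0 + math.exp(-z))  # sigmoid keeps the result within [0, S]

def gaussian_weight(s, p_t, D):
    """Down-weight source position s by its distance from p_t (sigma = D / 2)."""
    sigma = D / 2.0
    return math.exp(-((s - p_t) ** 2) / (2.0 * sigma ** 2))

# Toy parameters; these are made up for the example.
h_t = [0.3, -0.2]
W_p = [[0.5, -0.5], [0.1, 0.2]]
v_p = [1.0, -1.0]
S = 10

p_t = predict_position(h_t, W_p, v_p, S)
print(p_t)  # a real-valued position between 0 and S

# Each alignment score inside the window would be multiplied by this factor.
print([round(gaussian_weight(s, p_t, D=2), 3) for s in range(S)])
```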

4. Results:

- With the source sentence reversed, the model reaches a BLEU score of 16.9.

- Adding global attention boosted the BLEU score by 2.2 points, indicating the importance of the attention mechanism.

- In another attempt to improve their model, the authors used an input-feeding approach that raised the score by 1.0. If attentional decisions are made without considering earlier alignment choices, new alignments will be less than optimal. Input feeding addresses this by passing the previous attentional vector, together with the next input, to the following decoding step.

- Using dropout improved the score by 2.7.

- Incorporating the UNK token in the model allows it to handle more varied input text, thus improving the BLEU score. Neural machine translation models have a limited vocabulary because of computational and memory constraints. Words that don’t appear frequently enough in the training data, or that fall outside the predefined vocabulary, are replaced with the UNK token.

Suppose the source sentence contains the rare word “chiffonier”, which isn’t in the model’s vocabulary:

- Without UNK: The model might fail to translate the sentence correctly because it doesn’t recognize “chiffonier”.

- With UNK: The model replaces “chiffonier” with UNK, allowing it to focus on translating the rest of the sentence correctly.

- The model finally reached state-of-the-art status by ensembling eight models, achieving a BLEU score of 23.
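The vocabulary handling described in the UNK bullet can be sketched as a simple preprocessing step. This is a minimal illustration: the helper name `replace_oov`, the `<unk>` spelling, and the tiny vocabulary are all assumptions made for the example.

```python
def replace_oov(tokens, vocab, unk="<unk>"):
    """Replace every token that is not in the vocabulary with the UNK token."""
    return [tok if tok in vocab else unk for tok in tokens]

# Toy vocabulary that does not contain the rare word "chiffonier".
vocab = {"the", "is", "old"}
sentence = "the chiffonier is old".split()

print(replace_oov(sentence, vocab))  # ['the', '<unk>', 'is', 'old']
```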

5. Analysis:

The diagram above shows the learning curve of this model. The test costs are reported as perplexity. In NLP, perplexity is a way to capture the degree of uncertainty a model has when predicting, or assigning probabilities to, some text.
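As a rough sketch of the metric, perplexity is the exponential of the average negative log-probability that the model assigns to each token, so lower values mean the model is less uncertain. The function name and the toy probabilities below are illustrative.

```python
import math

def perplexity(token_probs):
    """exp of the average negative log-probability over the tokens."""
    n = len(token_probs)
    return math.exp(-sum(math.log(p) for p in token_probs) / n)

# A model that assigns probability 0.25 to every token is as uncertain
# as a uniform choice among four options.
print(perplexity([0.25, 0.25, 0.25, 0.25]))  # approximately 4.0
```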

The attention model outperforms the non-attention model when it comes to long sentences.

6. Conclusion:

In this article, we have examined two simple yet effective classes of attentional mechanisms for neural machine translation (NMT): local and global attention. We discussed the effectiveness of both the global and local attention approaches on English-German translation tasks. The contributions of the paper highlight the importance of attention mechanisms in improving the performance of neural machine translation systems.