I'll be using my Kidney Stone Detection with YOLOv10 and YOLOv8 as a reference for this demo.

!pip install ultralytics

!pip install -q git+https://github.com/THU-MIG/yolov10.git

!pip install supervision

!mkdir -p /kaggle/working/yolov10/weights

!wget -P /kaggle/working/yolov10/weights -q https://github.com/THU-MIG/yolov10/releases/download/v1.1/yolov10m.pt

The process begins by pip installing the ultralytics package and the YOLOv10 repository to get the YOLOv10 class, then downloading the YOLOv10 weights from the GitHub repo. The supervision module is there to help us visualize the bounding boxes on the images we're running predictions on.
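Before moving on, it can help to confirm that the installs actually landed in the notebook kernel. The following is a minimal sketch using only the standard library; the helper name `installed_versions` is hypothetical and not part of the original notebook.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    # Distribution names taken from the pip commands above.
    for name, ver in installed_versions(["ultralytics", "supervision"]).items():
        print(f"{name}: {ver or 'not installed'}")
```

A `None` entry suggests the corresponding pip command failed or ran against a different environment than the kernel.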

import supervision

import ultralytics

from ultralytics import YOLO, YOLOv10

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

import PIL

from PIL import Image

from IPython.display import display

from glob import glob

import random

import cv2

import warnings

import os

warnings.simplefilter('ignore')

os.environ['WANDB_DISABLED'] = 'true'

class CFG:

    EPOCHS = 50

    BATCH_SIZE = 32

    SEED = 6

    LEARNING_RATE = 0.001

    NUM_SAMPLES = 16

    OPTIMIZER = 'Adam'

    DATA_PATH = '/kaggle/input/kidney-stone-images/data.yaml'

    SAMPLE_PATH = '/kaggle/input/kidney-stone-images/test/images/*'

What I like to do before importing all the images and starting training is to set up a CFG class, which lets me adjust hyperparameters easily without going back and forth.
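One way to get more out of a config object like this is to translate it into training keyword arguments in a single place. The sketch below is an assumption about how that could look, not the notebook's own code: `TrainConfig` and `to_train_kwargs` are hypothetical names, and `batch` is included even though the original training call does not pass it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical stand-in for the CFG class, holding the same values."""
    epochs: int = 50
    batch_size: int = 32
    seed: int = 6
    learning_rate: float = 0.001
    optimizer: str = "Adam"
    data_path: str = "/kaggle/input/kidney-stone-images/data.yaml"


def to_train_kwargs(cfg):
    """Translate config fields into the keyword names used by train()."""
    return {
        "data": cfg.data_path,
        "seed": cfg.seed,
        "epochs": cfg.epochs,
        "lr0": cfg.learning_rate,
        "optimizer": cfg.optimizer,
        "batch": cfg.batch_size,  # assumption: not passed in the original call
    }
```

With this in place, a quick smoke run could be written as `model.train(**to_train_kwargs(TrainConfig(epochs=1)))`, changing one value without touching the rest.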

images_data = glob(CFG.SAMPLE_PATH)

random_image = random.sample(images_data, CFG.NUM_SAMPLES)

plt.figure(figsize=(12,10))

for i in range(CFG.NUM_SAMPLES):

    plt.subplot(4,4,i+1)

    # cv2.imread returns BGR, so convert to RGB before handing it to matplotlib

    plt.imshow(cv2.cvtColor(cv2.imread(random_image[i]), cv2.COLOR_BGR2RGB))

    plt.axis('off')

Once the images have been imported, I like taking a look at them and displaying a batch so the reader has some context about the dataset I'm using.
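The 4x4 grid works because exactly 16 samples are requested and the folder holds at least that many images. As a hedged sketch of a more forgiving version, the hypothetical helper below caps the sample at the number of available paths, uses a local seeded RNG so the same batch shows up on every run, and reports how many rows the grid needs.

```python
import math
import random


def sample_for_grid(paths, num_samples, ncols=4, seed=None):
    """Pick up to num_samples paths and report the grid size needed to show them."""
    rng = random.Random(seed)          # local RNG so global random state is untouched
    k = min(num_samples, len(paths))   # random.sample raises if k > len(paths)
    chosen = rng.sample(list(paths), k)
    nrows = math.ceil(k / ncols) if k else 0
    return chosen, nrows, ncols
```

The returned `nrows` and `ncols` can be passed straight to `plt.subplot(nrows, ncols, i + 1)` in place of the hard-coded `4, 4`.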

yolo_v10 = YOLOv10('/kaggle/working/yolov10/weights/yolov10m.pt')

v10_model = yolo_v10.train(data=CFG.DATA_PATH, seed=CFG.SEED, epochs=CFG.EPOCHS,

                           lr0=CFG.LEARNING_RATE, optimizer=CFG.OPTIMIZER, verbose=True,

                           project='ft_models', name='yolo_v10')

Then training is as easy as calling an API. Running 50 epochs with around 1,000 images on YOLOv10 took around 30 minutes for me with GPU T4 x2 on Kaggle.
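One way to check how a run like this went is to read the per-epoch log that the trainer typically writes into the run folder. The sketch below assumes a CSV log (for example a `results.csv` under `ft_models/yolo_v10`) and takes the column names as parameters, since the exact names logged by the YOLOv10 fork may differ; the helper name and the demo numbers are made up for illustration.

```python
import csv
import io


def loss_gap_per_epoch(csv_text, train_col, val_col):
    """Return (row_index, val - train) pairs from a per-epoch results log."""
    reader = csv.DictReader(io.StringIO(csv_text))
    gaps = []
    for i, row in enumerate(reader):
        # Some versions pad header names with spaces, so strip keys and values first.
        clean = {k.strip(): v.strip() for k, v in row.items()}
        gaps.append((i, float(clean[val_col]) - float(clean[train_col])))
    return gaps


if __name__ == "__main__":
    # Made-up numbers purely for illustration, not results from this run.
    demo = "epoch, train/box_loss, val/box_loss\n1, 2.0, 2.5\n2, 1.5, 2.5\n"
    print(loss_gap_per_epoch(demo, "train/box_loss", "val/box_loss"))
```

A gap that keeps widening while the training loss falls is the usual sign that the model is starting to overfit.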

# Function to perform stone detections

def stone_detection(img_path, model):

    # Read the image

    img = cv2.imread(img_path)

    # Pass the image through the detection model and get the result

    detect_result = model(img)

    # Plot the detections

    detect_img = detect_result[0].plot()

    # Convert the image to RGB format

    detect_img = cv2.cvtColor(detect_img, cv2.COLOR_BGR2RGB)

    return detect_img

# Define the directory where the custom images are stored

custom_image_dir = '/kaggle/input/kidney-stone-images/test/images'

# Get the list of image files in the directory

image_files = os.listdir(custom_image_dir)

# Select 16 random images from the list

selected_images = random.sample(image_files, 16)

v10_trained = YOLOv10('/kaggle/working/ft_models/yolo_v10/weights/best.pt')

# Create a figure with subplots for each image

fig, axes = plt.subplots(nrows=4, ncols=4, figsize=(15, 15))

for i, img_file in enumerate(selected_images):

    # Compute the row and column index of the current subplot

    row_idx = i // 4

    col_idx = i % 4

    # Load the current image and run object detection

    img_path = os.path.join(custom_image_dir, img_file)

    detect_img = stone_detection(img_path, v10_trained)

    # Plot the current image on the appropriate subplot

    axes[row_idx, col_idx].imshow(detect_img)

    axes[row_idx, col_idx].axis('off')

# Adjust the spacing between the subplots

plt.subplots_adjust(wspace=0.05, hspace=0.05)

plt.show()

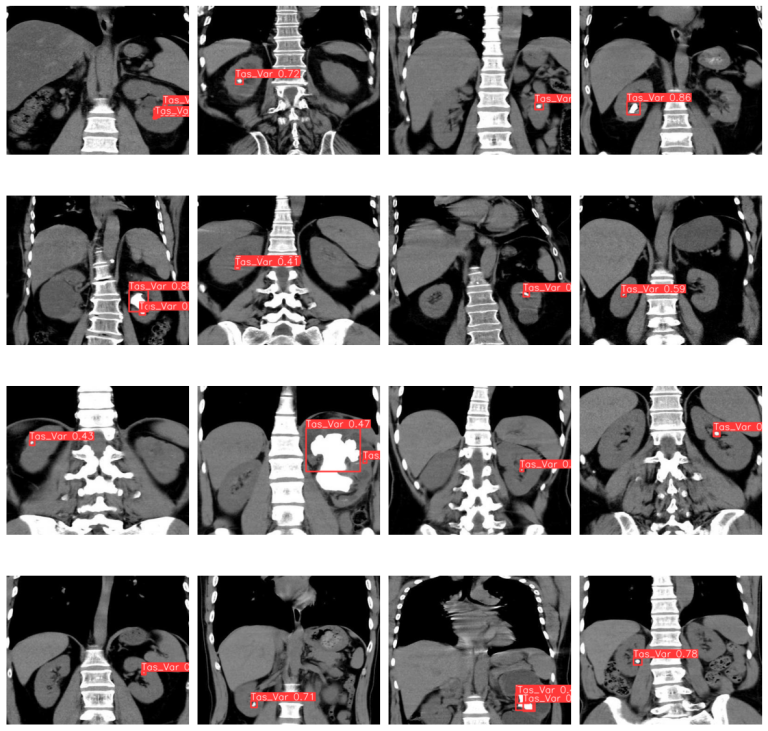

After training, I created a function called stone_detection which takes in the image path and the model. It basically runs inference on the test set and plots the bounding box predictions on the image. The result for 16 samples is shown above.
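Because `os.listdir` returns every entry in the folder, a stray non-image file would make `cv2.imread` return `None` and break inference. A minimal sketch of a safer listing step follows; `list_image_paths` and the suffix set are assumptions for illustration and should be adjusted to the dataset.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}  # assumption: adjust to the dataset


def list_image_paths(directory, suffixes=IMAGE_SUFFIXES):
    """Return sorted paths of files in `directory` whose suffix looks like an image."""
    return sorted(
        str(p) for p in Path(directory).iterdir()
        if p.is_file() and p.suffix.lower() in suffixes
    )
```

Since the helper returns full paths, each one can be passed directly to `stone_detection` without an extra `os.path.join`.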

YOLOv10 uses the following metrics:

- Box Loss measures the error in predicting the coordinates of bounding boxes. In this case, we saw a large amount of overfitting, as the validation loss did not converge with the training loss.

- Class Loss measures the error in predicting the correct class for each detected object. Class loss performed well: within 50 epochs it managed to converge quite nicely.

- Distribution Focal Loss measures the precision of predicted bounding box coordinates, with a focus on improving accuracy by emphasizing harder examples. Basically, in cases of class imbalance, the network focuses more on less frequent classes to improve generalization and localization.

- Mean Average Precision (mAP) is a metric used to evaluate the performance of object detection algorithms in identifying and locating objects within images. It integrates both precision (the proportion of correctly predicted positive instances) and recall (the proportion of actual positive instances correctly identified) across object categories. The mAP is calculated by averaging the Average Precision (AP) values across all categories, providing an overall measure of the algorithm's effectiveness. It can be improved through more epochs and hyperparameter tuning (batch size, initial learning rate lr0, final learning rate factor lrf, optimizers). Shown below, YOLOv10 achieved 78.3% mAP on the dataset.
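To make the mAP description concrete, here is a small worked sketch of averaging per-class AP values. It uses all-point interpolation over a precision-recall curve, which is a simplified textbook variant and not necessarily the exact procedure the library applies; the function names are hypothetical, and recall values are assumed to be sorted in ascending order.

```python
def average_precision(recalls, precisions):
    """All-point interpolated AP: area under the precision-recall envelope."""
    # Pad the curve so it spans recall 0 to 1.
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Sweep right to left so precision never increases as recall grows.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """mAP is the plain mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)
```

For a single-class dataset, mAP reduces to that one class's AP, so the averaging step only matters once several categories are involved.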

Primarily as a developer, my experience with YOLOv10 was smooth, and I had no issues experimenting with it in a notebook environment. Though it might be fast, there are still tradeoffs in mAP when latency is not a focus. Overall, YOLOv10 still ranks around the #10 to #60 spots on Papers With Code across different benchmarks. Just remember, going fast doesn't always mean going well.