Analogy from classical machine learning: LLM (Large Language Model) = optimizer; code = parameters; LangProp = PyTorch Lightning

You have probably used ChatGPT to write your emails, summarize documents, find out information, or help you debug your code. But can we take a step further and make ChatGPT drive a car?

This was the question we wanted to answer when I started my internship at Wayve in March last year. Wayve is an autonomous driving startup in London, applying end-to-end learning to the challenging problem of urban driving. At the time, the company was just about to launch its LLM research team, which has since successfully developed LINGO-1 and LINGO-2. AutoGPT had just come out, and Voyager had not come out yet. And yet, the disruption caused by LLMs was palpable. The question was, how can we apply this new technology to driving, a domain where language isn’t the main modality?

In this blog post, I would like to give an overview of our paper LangProp, which we presented at the LLM Agents workshop at ICLR (the International Conference on Learning Representations) last month (May 2024).

The challenge of applying LLMs to driving is twofold. Firstly, LLMs are, as the name says, very large models that require a lot of compute and can be slow to run, which makes them not-so-ideal for safety-critical real-time applications such as autonomous driving. Secondly, while language is good at high-level descriptions and serves as a sophisticated tool for logic, reasoning and planning, it doesn’t have the granularity and detail needed to describe observations and give spatial control actions.

We realised, however, that we don’t necessarily have to use LLMs for inferring driving actions. What we could do instead is to make LLMs write the code for driving itself.

If you have ever used ChatGPT to write code, then this may sound like a terrible idea. The code that it writes seldom works out of the box, and often contains some errors. But what if we use LLMs to also detect bugs and automatically fix them, thereby iteratively improving the code quality?

We took this idea a step further: instead of just fixing bugs, we designed a training framework that allows us to improve the code that the LLM generates towards an objective function of your choice. You can “train” your code to improve on a training dataset and try to reduce the losses. The code improvements can be quantified by running it on a validation dataset.

Does this start to sound like Machine Learning? Because it essentially is! But are we fine-tuning the LLM? No. In fact, there is no neural network that is being fine-tuned. Instead, we are fine-tuning the code itself!

In LangProp, the “code” is the parameters of the model, and the LLM is the optimizer that guides the parameters to improve in the direction that reduces the loss. Why is this cool? Because by applying this thinking, we can now automate the optimization of software itself in a data-driven way! With deep learning, we witnessed the power of data-driven approaches to solving hard-to-describe problems. But so far, the application domain of machine learning has been constrained to models parametrized by numeric values. Now, it can deal with systems described in code, too.

If you have followed the history of Artificial Intelligence, this is an elegant way to unify the once popular approach of Symbolic AI with the more modern and successful Machine Learning approach. Symbolic AI was about having human experts describe a perfect model of the world in the form of logic and code. This had its limitations, as many complex tasks (e.g. object recognition) were beyond what human experts can feasibly describe with logic alone. Machine Learning, on the other hand, lets the data speak for itself and fits a model that best describes it in an automated way. This approach has been very successful in a wide range of disciplines, including pattern recognition, compression and function approximation. However, logic, reasoning, and long-term planning are fields where naively fitting a neural network on data often fails. This is because it is challenging to learn such complex operations in the modality of neural network parameter space. With LLMs and LangProp, we can finally apply the data-driven learning method to learn symbolic systems and automate their improvement.

Disclaimer

Before we dive in further, I feel that some disclaimers are in order.

- This work on LangProp was conducted as an internship project at Wayve, and does not directly reflect the company’s Research & Development priorities or strategies. The purpose of this blog post is to describe LangProp as a paper, and everything in this blog post is written in the capacity of myself as an individual.

- While we demonstrated LangProp primarily for the case of autonomous driving, we also want to stress its limitations, such as (a) it requires perfect observation of the environment, (b) we only made it work in a simulated environment and it is far from real-world deployment, (c) the generated driving code is neither perfect nor sophisticated, and has many issues that make it unsuitable for real-world deployment. We see LangProp as a research prototype that showcases the potential of LLMs applied to data-driven software optimization, not as a product for deployment.

If you need further information on the limitations of LangProp, please check out the limitation section in the appendix of our paper.

With that said, let’s take a look at how LangProp works!

…we bring back Symbolic AI and Evolutionary Algorithms

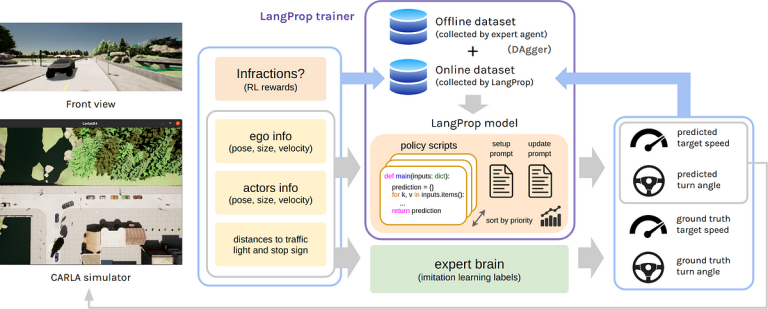

LangProp is designed like PyTorch Lightning: a LangProp module keeps track of the parameters (a collection of scripts) that are trained and used for inference. In the training mode, a policy tracker keeps a record of the inputs, outputs, and any exceptions during the forward pass. The performance of the code is evaluated by an objective function. Based on the scores, the policy tracker re-ranks the scripts that currently exist, and passes the top k scripts to the LLM for refinement. At inference time, making a prediction is as simple as calling the code with the best score.
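To make the role of the policy tracker more concrete, here is a minimal sketch of the idea. It is not LangProp’s actual API: the names `ScriptRecord`, `PolicyTracker`, `EXCEPTION_SCORE` and the convention that every script defines a `predict` function are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List

EXCEPTION_SCORE = -100.0  # hypothetical penalty for a script that raises


@dataclass
class ScriptRecord:
    """A candidate script and the statistics used to rank it."""
    source: str
    scores: List[float] = field(default_factory=list)
    exceptions: List[str] = field(default_factory=list)

    def mean_score(self) -> float:
        # Scripts that were never evaluated rank last.
        if not self.scores:
            return float("-inf")
        return sum(self.scores) / len(self.scores)


class PolicyTracker:
    """Tracks candidate scripts, scores them, and ranks them (illustrative only)."""

    def __init__(self, sources: List[str], objective: Callable[[Any, Any], float]):
        self.records = [ScriptRecord(src) for src in sources]
        self.objective = objective

    @staticmethod
    def _run(source: str, inputs: Any) -> Any:
        namespace: dict = {}
        exec(source, namespace)  # only safe for trusted or sandboxed code
        return namespace["predict"](inputs)

    def forward(self, inputs: Any, label: Any) -> None:
        """Run every script on one sample, recording its score or its exception."""
        for record in self.records:
            try:
                output = self._run(record.source, inputs)
                record.scores.append(self.objective(output, label))
            except Exception as exc:
                record.exceptions.append(repr(exc))
                record.scores.append(EXCEPTION_SCORE)

    def top_k(self, k: int) -> List[ScriptRecord]:
        """Re-rank by mean score; these would be passed to the LLM for refinement."""
        return sorted(self.records, key=lambda r: r.mean_score(), reverse=True)[:k]

    def predict(self, inputs: Any) -> Any:
        """Inference: call the best-scoring script."""
        return self._run(self.top_k(1)[0].source, inputs)


tracker = PolicyTracker(
    sources=[
        "def predict(x):\n    return x + 1",
        "def predict(x):\n    return x * 2",
        "def predict(x):\n    return x / 0",  # always raises ZeroDivisionError
    ],
    objective=lambda output, label: -abs(output - label),
)
for x, y in [(1, 2), (2, 4), (3, 6)]:  # toy dataset for the target y = 2x
    tracker.forward(x, y)

best_two = tracker.top_k(2)
prediction = tracker.predict(5)
```

In this toy run the script that doubles its input ranks first, and the script that divides by zero is kept in the records with its exceptions logged, which is the kind of feedback that could be handed back to the LLM.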

The LangProp trainer takes a LangProp module to be trained, a training dataset, and a validation dataset. The dataset can be any iterable object, including a PyTorch Dataset object, which makes applying LangProp to existing tasks easier. Once the training is finished, we can save a checkpoint, which is the collection of refined code along with some statistics for ranking the code.
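As a rough picture of what such a checkpoint could contain, the sketch below stores each script together with its ranking statistics as JSON. The file format, the field names (`source`, `mean_score`, `num_evaluations`) and both helper functions are assumptions for illustration, not LangProp’s real checkpoint format.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


def save_checkpoint(path: Path, records: List[Dict]) -> None:
    """Write the scripts and their ranking statistics to disk."""
    path.write_text(json.dumps(records, indent=2))


def load_checkpoint(path: Path) -> List[Dict]:
    """Read the scripts back, best-scoring first."""
    records = json.loads(path.read_text())
    return sorted(records, key=lambda r: r["mean_score"], reverse=True)


records = [
    {"source": "def predict(x):\n    return x + 1", "mean_score": -1.0, "num_evaluations": 3},
    {"source": "def predict(x):\n    return x * 2", "mean_score": 0.0, "num_evaluations": 3},
]

with tempfile.TemporaryDirectory() as tmp_dir:
    checkpoint_path = Path(tmp_dir) / "checkpoint.json"
    save_checkpoint(checkpoint_path, records)
    restored = load_checkpoint(checkpoint_path)
```

Because the “parameters” are plain source code, a checkpoint like this stays human-readable and can be inspected or diffed like any other file.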

The mechanism we use to choose the best code and improve it is similar to evolutionary algorithms, in which samples are initially chosen randomly, but then the ones that are high-performing are kept and perturbed to spawn a new generation of fitter samples.
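The select-and-perturb loop can be sketched on a toy problem. Here the candidates are plain numbers rather than scripts, and the `perturb` function is a random nudge standing in for the LLM rewrite; `evolve` and all of its arguments are hypothetical names, not part of LangProp.

```python
import random
from typing import Callable, List, Tuple


def evolve(
    population: List[float],
    fitness: Callable[[float], float],
    perturb: Callable[[float], float],
    top_k: int,
    generations: int,
) -> Tuple[List[float], List[float]]:
    """Keep the top_k candidates each generation and spawn one child per survivor."""
    best_per_generation = []
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        elites = ranked[:top_k]  # high performers are kept unchanged
        best_per_generation.append(fitness(elites[0]))
        children = [perturb(parent) for parent in elites]  # perturbed copies
        population = elites + children
    return sorted(population, key=fitness, reverse=True), best_per_generation


rng = random.Random(0)  # seeded so the run is repeatable
target = 3.0
initial = [rng.uniform(-10.0, 10.0) for _ in range(8)]  # random initial samples

final_population, best_history = evolve(
    population=initial,
    fitness=lambda x: -(x - target) ** 2,
    perturb=lambda x: x + rng.uniform(-1.0, 1.0),
    top_k=4,
    generations=20,
)
```

Since the survivors are carried over unchanged, the best fitness can never get worse from one generation to the next. The same property holds when the candidates are scripts and the perturbation is an LLM rewrite.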

Now let’s try using LangProp to drive in CARLA!

CARLA is an open-source driving simulator used for autonomous driving research. There is a leaderboard challenge to benchmark your self-driving car agent. We tested LangProp on the standard routes and towns in this challenge.

The advantage of formulating LangProp as a Machine Learning framework is that we can now apply not just classic supervised learning but also imitation learning and reinforcement learning techniques.

Specifically, we start our training on an offline dataset (driving demonstrations from an expert, consisting of state and action pairs), and then perform online rollouts. During online rollouts, we employ DAgger [1], a dataset aggregation technique in which samples collected by online rollouts are labelled with expert labels and aggregated with the current dataset.
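The aggregation step of DAgger can be illustrated with a toy one-dimensional environment. Everything in this sketch (the dynamics, the `expert` and `naive_policy` functions, and the helper names) is made up for illustration and has nothing to do with the CARLA setup itself.

```python
from typing import Callable, List, Tuple

Sample = Tuple[int, int]  # (state, expert action)


def rollout(policy: Callable[[int], int], start_state: int, steps: int) -> List[int]:
    """Run a policy and return the states it visits (toy dynamics: state += action)."""
    states, state = [], start_state
    for _ in range(steps):
        states.append(state)
        state = state + policy(state)
    return states


def dagger_round(
    dataset: List[Sample],
    policy: Callable[[int], int],
    expert: Callable[[int], int],
    start_state: int,
    steps: int,
) -> List[Sample]:
    """Label the states visited by the learner with expert actions and aggregate."""
    visited = rollout(policy, start_state, steps)
    new_samples = [(state, expert(state)) for state in visited]
    return dataset + new_samples


def expert(state: int) -> int:
    """The expert always steps towards state 0."""
    return -1 if state > 0 else (1 if state < 0 else 0)


def naive_policy(state: int) -> int:
    """A faulty learner that always steps away from the goal."""
    return 1


# Offline dataset: the expert's own demonstration only covers states 3, 2, 1, 0.
offline_dataset = [(s, expert(s)) for s in rollout(expert, start_state=3, steps=4)]

# Online rollout: the learner drifts to states 4 and 5, which now get expert labels.
aggregated = dagger_round(offline_dataset, naive_policy, expert, start_state=3, steps=3)
```

The point of the aggregation is that the learner’s own mistakes take it to states the expert never visited, and those states end up in the training set with the correct labels.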

The input to the model (the code) is a Python dictionary of the state of the environment, including the poses and velocities of the vehicle and surrounding actors, and the distances to the traffic light / stop sign. The output is the driving action, which is the speed and steering angle at which the vehicle should drive.
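To give a feel for this interface, here is a hand-written toy policy with the same shape of input and output. The dictionary keys, the `STOP_DISTANCE` threshold and the `drive` function are all hypothetical; the actual schema used in the paper is different and richer.

```python
import math
from typing import Any, Dict, Tuple

STOP_DISTANCE = 10.0  # metres; hypothetical threshold for braking at a red light


def drive(state: Dict[str, Any]) -> Tuple[float, float]:
    """Map an environment state to (target speed in m/s, steering angle in radians)."""
    ego = state["ego"]

    # Drive at the speed limit unless a red light is close ahead.
    speed = state["speed_limit"]
    distance_to_red_light = state["distance_to_red_light"]
    if distance_to_red_light is not None and distance_to_red_light < STOP_DISTANCE:
        speed = 0.0

    # Steer towards the next waypoint, wrapping the heading error into [-pi, pi].
    wp_x, wp_y = state["next_waypoint"]
    ego_x, ego_y = ego["position"]
    heading_error = math.atan2(wp_y - ego_y, wp_x - ego_x) - ego["yaw"]
    steering = math.atan2(math.sin(heading_error), math.cos(heading_error))
    return speed, steering


example_state = {
    "ego": {"position": (0.0, 0.0), "yaw": 0.0, "velocity": (5.0, 0.0)},
    "actors": [{"type": "vehicle", "position": (30.0, 0.0), "velocity": (4.0, 0.0)}],
    "next_waypoint": (10.0, 0.0),
    "speed_limit": 8.0,
    "distance_to_red_light": None,  # None means no red light in range
}

speed, steering = drive(example_state)
speed_at_red_light, _ = drive(dict(example_state, distance_to_red_light=5.0))
```

A policy in this form is cheap to run at inference time, since it is ordinary Python with no LLM call in the loop.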

Whenever there is an infraction, e.g. ignoring a traffic light or stop sign, colliding with another vehicle, pedestrian or cyclist, or being stationary for too long, there is a penalty to the performance scores. The training objective is to maximize the combined score of the imitation learning scores (how well the agent matches the ground truth action labels) and the reinforcement learning scores (reducing the infraction penalty).
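One simple way to combine the two kinds of score is to subtract infraction penalties from an imitation term. The sketch below assumes an absolute-error imitation score and made-up penalty values; the actual scoring and weights used in the paper may differ.

```python
from typing import Dict, List, Tuple

Action = Tuple[float, float]  # (speed, steering angle)

# Hypothetical penalty values, chosen only for illustration.
INFRACTION_PENALTY: Dict[str, float] = {
    "red_light": 10.0,
    "stop_sign": 10.0,
    "collision": 20.0,
    "stationary_too_long": 5.0,
}


def imitation_score(predicted: Action, expert: Action) -> float:
    """Higher is better; 0 means the prediction matches the expert label exactly."""
    speed_error = abs(predicted[0] - expert[0])
    steering_error = abs(predicted[1] - expert[1])
    return -(speed_error + steering_error)


def combined_score(predicted: Action, expert: Action, infractions: List[str]) -> float:
    """Imitation score minus the penalty for every infraction in this step."""
    penalty = sum(INFRACTION_PENALTY[name] for name in infractions)
    return imitation_score(predicted, expert) - penalty


perfect = combined_score((5.0, 0.0), (5.0, 0.0), [])
too_slow = combined_score((4.0, 0.0), (5.0, 0.0), [])
ran_red_light = combined_score((5.0, 0.0), (5.0, 0.0), ["red_light"])
```

With a score like this, a policy that copies the expert’s speed but runs a red light still ranks below one that is slightly off on speed, so the infraction term can overrule a good imitation match.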

Now watch the LangProp agent drive!

We saw during training that the initial driving policy that ChatGPT generates is very faulty. In particular, it often learns a naive policy that copies the previous velocity. This is a well-known phenomenon called causal confusion [2] in the field of imitation learning. If we just train with behavioral cloning on the offline dataset, such naive but simple policies obtain a high score compared to other more complex policies. This is why we need to use techniques such as DAgger and reinforcement learning to make sure that the policy performs well in online rollouts.

After an iteration or two, the model stops copying the previous velocity and starts moving forward, but is either overly cautious (i.e. stops whenever there is an actor nearby, even if they are not on a collision course), or reckless (driving forward until it collides with an actor). After a couple more iterations, the model learns to keep a distance from the vehicle in front and even calculates this distance dynamically based on the relative velocities of the vehicles. It also predicts whether other actors (e.g. jaywalking pedestrians) are on a collision course with the vehicle by looking at the velocity and position vectors.
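The two behaviours described above can be written down in a few lines. This is my own hand-written sketch of the kind of logic involved, not code generated by LangProp: the function names, the time-headway rule and the closest-approach test with its default `radius` and `horizon` are all assumptions.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def safe_following_distance(
    ego_speed: float, lead_speed: float, base_gap: float = 5.0, time_headway: float = 1.5
) -> float:
    """Gap to keep from the vehicle in front; grows with how fast we are closing in."""
    closing_speed = max(0.0, ego_speed - lead_speed)
    return base_gap + time_headway * closing_speed


def on_collision_course(
    rel_pos: Vec2, rel_vel: Vec2, radius: float = 2.0, horizon: float = 5.0
) -> bool:
    """Check whether an actor comes within `radius` metres in the next `horizon` seconds.

    rel_pos and rel_vel are the actor's position and velocity relative to the ego
    vehicle, and both are assumed to move at constant velocity.
    """
    px, py = rel_pos
    vx, vy = rel_vel
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        t_closest = 0.0  # no relative motion, so the distance never changes
    else:
        # Time that minimises |rel_pos + rel_vel * t|, clamped to [0, horizon].
        t_closest = -(px * vx + py * vy) / speed_sq
        t_closest = min(max(t_closest, 0.0), horizon)
    closest_x = px + vx * t_closest
    closest_y = py + vy * t_closest
    return math.hypot(closest_x, closest_y) < radius


gap_when_closing = safe_following_distance(ego_speed=10.0, lead_speed=4.0)
gap_when_falling_behind = safe_following_distance(ego_speed=4.0, lead_speed=10.0)

# Ego drives at 5 m/s along x; a pedestrian 10 m ahead and 2 m to the side crosses at 1 m/s.
pedestrian_crossing = on_collision_course(rel_pos=(10.0, -2.0), rel_vel=(-5.0, 1.0))
# A vehicle 10 m ahead that is pulling away at 5 m/s.
vehicle_pulling_away = on_collision_course(rel_pos=(10.0, 0.0), rel_vel=(5.0, 0.0))
```

In this example the crossing pedestrian is flagged because the two paths meet after two seconds, while the vehicle that is pulling away is not, even though it is currently just as close.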

In the experiments in our paper, we show that the LangProp driving agent outperforms many of the previously implemented driving agents. We compare against both a PPO expert agent (Carla-Roach [3], TCP [4]) and researcher-implemented expert agents (TransFuser [5], InterFuser [6], TF++ [7]), and LangProp outperformed all expert agents except for TF++. All the expert agents were published after the GPT 3.5 training cutoff of September 2021, so this result is both surprising and exciting!

Thank you for joining me on the ride! While in this work we primarily explored the application of LangProp to autonomous driving in CARLA, we also showed that LangProp can be easily applied to more general problems, such as the typical RL environment of CartPole-v1. LangProp works best in environments or problems where feedback on the performance can be obtained in the form of text or code, giving the model a richer semantic signal that is more than just numerical scores.

There are endless possible applications of LangProp-like training to iteratively improve software based on data, and we are excited to see what will happen in this space!

If you liked our work, please consider building on top of it and citing our paper:

@inproceedings{
ishida2024langprop,
title={LangProp: A code optimization framework using Large Language Models applied to driving},
author={Shu Ishida and Gianluca Corrado and George Fedoseev and Hudson Yeo and Lloyd Russell and Jamie Shotton and Joao F. Henriques and Anthony Hu},
booktitle={ICLR 2024 Workshop on Large Language Model (LLM) Agents},
year={2024},
url={https://openreview.net/forum?id=JQJJ9PkdYC}
}

[1] Stéphane Ross, Geoffrey Gordon, and Drew Bagnell. “A reduction of imitation learning and structured prediction to no-regret online learning.” In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, JMLR Workshop and Conference Proceedings, 2011.

[2] Pim De Haan, Dinesh Jayaraman, and Sergey Levine. “Causal confusion in imitation learning.” Advances in Neural Information Processing Systems, 2019.

[3] Zhejun Zhang, Alexander Liniger, Dengxin Dai, Fisher Yu, and Luc Van Gool. “End-to-end urban driving by imitating a reinforcement learning coach.” In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 15222–15232, 2021.

[4] Penghao Wu, Xiaosong Jia, Li Chen, Junchi Yan, Hongyang Li, and Yu Qiao. “Trajectory-guided control prediction for end-to-end autonomous driving: A simple yet strong baseline.” Advances in Neural Information Processing Systems, 35:6119–6132, 2022.

[5] Kashyap Chitta, Aditya Prakash, Bernhard Jaeger, Zehao Yu, Katrin Renz, and Andreas Geiger. “Transfuser: Imitation with transformer-based sensor fusion for autonomous driving.” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

[6] Hao Shao, Letian Wang, Ruobing Chen, Hongsheng Li, and Yu Liu. “Safety-enhanced autonomous driving using interpretable sensor fusion transformer.” In Conference on Robot Learning, pp. 726–737. PMLR, 2023.

[7] Bernhard Jaeger, Kashyap Chitta, and Andreas Geiger. “Hidden biases of end-to-end driving models.” In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023.