RAG & API Retrieval, Partitioning & Extraction

FlowMind aims to solve for hallucination by providing contextual reference data at inference, analogous to RAG. The API also seeks to retrieve, partition and extract relevant XML-like blocks. Blocks, again, are very similar to chunks.

FlowMind also faces the familiar problems of selecting the top retrieved blocks/chunks and truncating blocks that are too long.

Embeddings are also used in FlowMind to search according to semantic similarity.
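To make the retrieval step concrete, here is a minimal sketch of ranking blocks by cosine similarity, keeping the top k and truncating long ones. It is not FlowMind's actual code: the function names, the `k` and `max_chars` parameters, the sample blocks and the hand-written three-dimensional vectors are all assumptions for illustration. In practice the vectors would come from an embedding model.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_top_blocks(query_vec, blocks, k=2, max_chars=200):
    """Rank blocks by similarity to the query, keep the top k, truncate each."""
    ranked = sorted(
        blocks,
        key=lambda block: cosine_similarity(query_vec, block["vector"]),
        reverse=True,
    )
    # Naive character truncation; a real system would more likely count tokens.
    return [block["text"][:max_chars] for block in ranked[:k]]


# Toy data: the texts and vectors are made up for illustration.
blocks = [
    {"text": "<block>Fund A holds mostly equities.</block>", "vector": [0.9, 0.1, 0.0]},
    {"text": "<block>Fund B holds mostly bonds.</block>", "vector": [0.1, 0.9, 0.0]},
    {"text": "<block>Office opening hours.</block>", "vector": [0.0, 0.1, 0.9]},
]
query_vec = [1.0, 0.2, 0.0]

top = select_top_blocks(query_vec, blocks, k=2, max_chars=30)
print(top)
```

Character-based truncation is the simplest possible choice here; it can cut a block mid-tag, which is one reason truncation is a real problem rather than a detail.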

So FlowMind can be considered JPMorganChase’s proprietary RAG solution, and presumably it meets their data privacy and governance requirements. What I find curious is that the market in general has settled on certain terminology, and a shared understanding has developed around it.

JPMorganChase breaks from these terms and introduces its own lexicon. However, FlowMind is very much comparable to RAG in general.

It’s evident that through this implementation, JPMorganChase has full control over its stack at a very granular level. The process and flow Python functions created by FlowMind most probably fit into their current ecosystem.

FlowMind can also be leveraged by skilled users to generate flows from a description, and those flows can be re-used.

The aim of FlowMind is to remedy hallucination in Large Language Models (LLMs) while ensuring there is no direct link between the LLM and proprietary code or data.

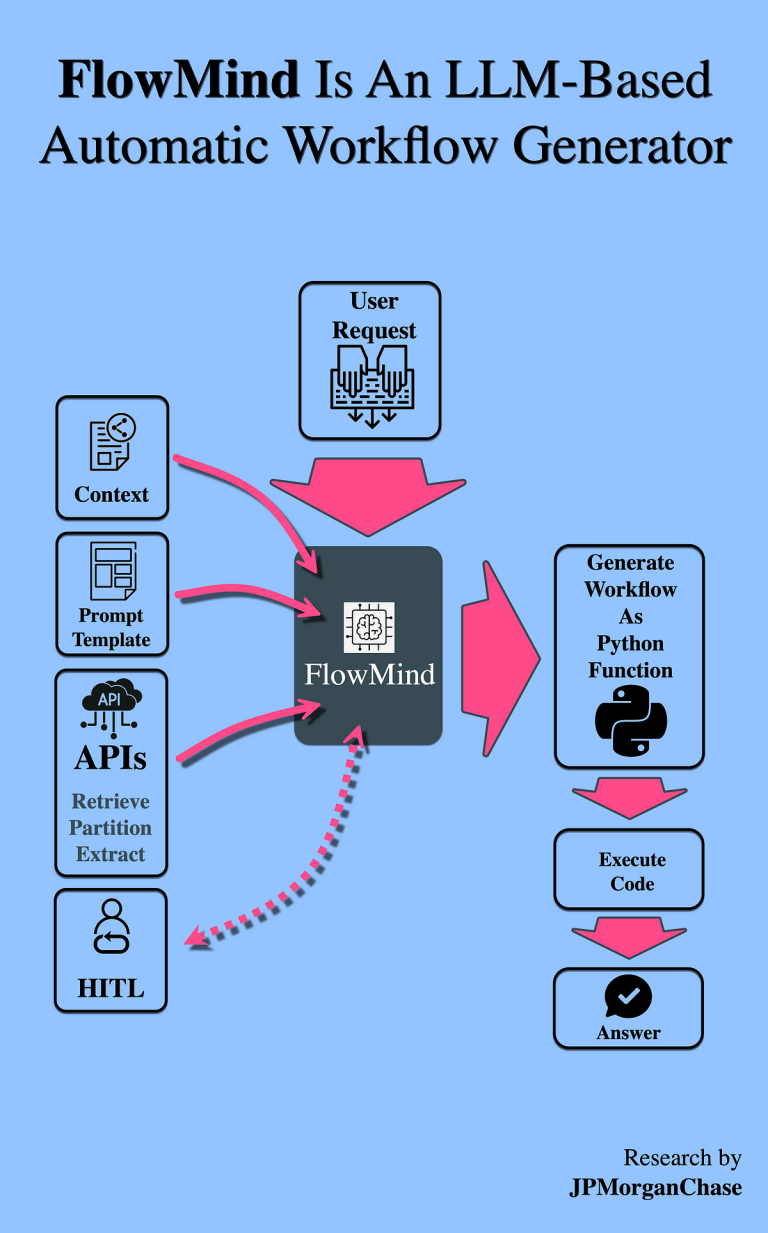

FlowMind creates flows or pipelines on the fly, a process the paper refers to as robotic process automation. There is a human-in-the-loop element, which can also be seen as a single dialog turn, allowing users to interact with and refine the generated workflows.

Application Programming Interfaces (APIs) are used for grounding the LLMs, serving as a contextual reference to guide their reasoning. This is followed by code generation, code execution, and ultimately delivering the final answer.
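The generate-then-execute sequence can be sketched roughly as follows. This is an illustration under stated assumptions, not the paper's implementation: `get_fund_names`, `fake_llm`, `run_generated_code` and the `workflow` function name are all hypothetical, and the LLM call is replaced by a stub that returns fixed code. The point it shows is that the model only ever sees a description of the API, while the data stays in the local process that runs the code.

```python
def get_fund_names():
    """Hypothetical proprietary API; its data never leaves this process."""
    return ["Fund A", "Fund B"]


def fake_llm(prompt):
    """Stand-in for a real LLM call; returns generated code as plain text."""
    return (
        "def workflow():\n"
        "    names = get_fund_names()\n"
        "    return len(names)\n"
    )


def run_generated_code(code, apis):
    """Execute generated code in a namespace that exposes only the given APIs."""
    namespace = dict(apis)
    # Note: exec is NOT a security sandbox; this only illustrates the flow.
    exec(code, namespace)
    return namespace["workflow"]()


# The prompt describes the API signature only, not the underlying data.
prompt = "APIs: get_fund_names() -> list[str]. Question: how many funds are there?"
code = fake_llm(prompt)
answer = run_generated_code(code, {"get_fund_names": get_fund_names})
print(answer)
```

A production system would need proper isolation and review of generated code before running it, which is where the human-in-the-loop step fits naturally.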

Stage 1: It begins by following a structured lecture plan (the prompt template seen above) to create a lecture prompt. This prompt educates the Large Language Model (LLM) about the context and the available APIs, preparing it to write code.
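A lecture prompt of this kind could be assembled along the following lines. This is a minimal sketch, assuming the template has three parts (context, API descriptions, and an instruction to write code); the exact wording, the `build_lecture_prompt` helper and the two example APIs are hypothetical and not taken from the paper.

```python
def build_lecture_prompt(context, apis, question):
    """Fill a simple three-part template: context, APIs, coding task."""
    api_lines = "\n".join(
        f"- {api['signature']}: {api['description']}" for api in apis
    )
    return (
        f"Context:\n{context}\n\n"
        f"Available APIs:\n{api_lines}\n\n"
        "Task:\n"
        "Write Python code that uses only the APIs above to answer:\n"
        f"{question}\n"
    )


# Hypothetical API descriptions; only signatures and descriptions are shared
# with the model, never the data behind them.
apis = [
    {
        "signature": "get_fund_names() -> list[str]",
        "description": "Returns the names of all funds.",
    },
    {
        "signature": "get_holdings(fund_name: str) -> dict",
        "description": "Returns the holdings of one fund.",
    },
]

lecture_prompt = build_lecture_prompt(
    context="You are assisting with questions about fund documents.",
    apis=apis,
    question="Which fund has the most holdings?",
)
print(lecture_prompt)
```

Keeping the API list as structured data makes it straightforward to swap in a different set of functions without touching the template.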