Like everybody else, I took a look at the KAN paper by Liu et al. to see what all of the hype is about. It’s not every day that somebody takes a swipe at MLPs, an architecture that has been around in one form or another since the late 60s, and still lies at the heart of most neural network architectures.

After reading it a couple of times, my main issue with the paper is that it treats splines essentially like a black box. They are spoken of as ‘trainable activation functions’, a (mild) abuse of the term in my opinion. An activation function is usually a fixed non-linear function that is applied element-wise, whereas the ‘trainable’ parts of a network are implemented via linear ops. This allows us to use highly optimized linear algebra code for the dynamic part of the network, while any non-linearities can be compiled down to static bits of code that can be applied uniformly.

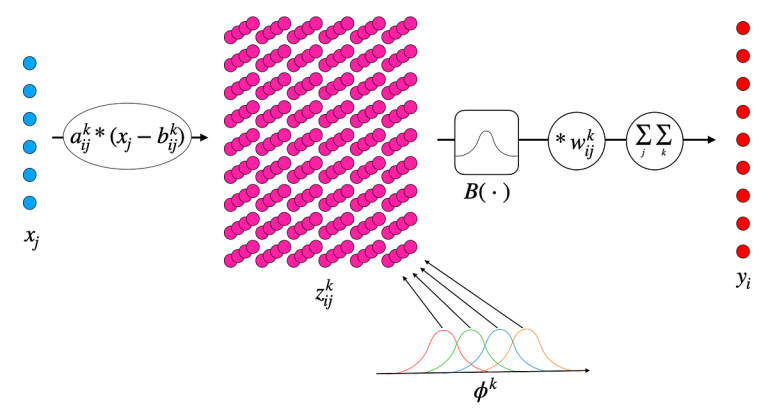

What about KANs then? Are they innovating away from this tried-and-tested model? It sure looks like it, based on the text and the formulas in the paper. There is one place in the paper, though, where we can peek inside the black box: in Figure 2.2 the authors zoom inside one of these trainable activation functions. The picture is familiar to anyone with a computer graphics background: a cubic b-spline generated by summing shifted-squeezed-and-scaled copies of a master basis spline.

What did we find inside the box? A fixed non-linear function (the master basis spline), plus trainable linear operations (the shift-squeeze-scale)!

Let’s see where the math takes us. A KAN layer, with n_in inputs x and n_out outputs y, calculates its output by feeding the input forward through a matrix of scalar b-splines:
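In index notation, with i labelling outputs, j inputs and k spline nodes (a reconstruction that follows the index comments in the PyTorch forward pass later in this post; the exact index placement is an assumption):

$$
y_i = \sum_{j=1}^{n_\mathrm{in}} \phi_{ij}(x_j), \qquad \phi_{ij}(x) = \sum_{k=1}^{n_\mathrm{node}} w_{ij}^{k}\, B\!\left(a_{ij}^{k}\,\bigl(x - b_{ij}^{k}\bigr)\right)
$$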

where the basis function B is an appropriate piecewise-cubic polynomial with no free parameters. It is the number n_node of spline nodes and the parameters a (‘squeeze’ factors), b (‘shift’ factors) and w (‘scale’ factors) that determine the shape of the activation function phi. In the paper it is only the weights w that are directly trainable (via backpropagation). The number, shape and location (n_node, a, b) of the basis splines are initialised so that the basis splines a) interlock with each other correctly and b) cover the desired output range (see the figure above). They are generally fixed, but the authors suggest that they can be adjusted periodically via (non-differentiable) ‘grid extensions’.
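To make the ‘no free parameters’ point concrete, here is a minimal sketch of one possible master basis function: the cardinal cubic b-spline centred at zero. The name `cubic_bspline` and the choice of a uniform, unit-spaced spline are assumptions made for illustration; the paper’s implementation may build its basis differently.

```python
import torch

def cubic_bspline(t: torch.Tensor) -> torch.Tensor:
    """Cardinal cubic b-spline centred at 0, supported on [-2, 2]."""
    a = t.abs()
    inner = 2.0 / 3.0 - a**2 + 0.5 * a**3         # piece used for |t| < 1
    outer = (2.0 - a).clamp(min=0.0) ** 3 / 6.0   # piece for 1 <= |t| < 2, zero beyond
    return torch.where(a < 1.0, inner, outer)

# Unit-shifted copies "interlock": at every point they sum to one.
x = torch.linspace(-1.0, 1.0, 5)
shifts = torch.arange(-4, 5, dtype=x.dtype)
total = cubic_bspline(x[:, None] - shifts[None, :]).sum(dim=1)
```

The two polynomial pieces agree at |t| = 1, which is what makes the function usable as a fixed, element-wise non-linearity.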

How do KANs compare to MLPs? The equivalent MLP layer feeds its input forward through a linear transformation followed by a non-linear function (such as a Sigmoid or a ReLU):
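In the same index notation, assuming a weight matrix w, an optional bias vector β and an element-wise non-linearity σ (these symbols are chosen here for illustration):

$$
y_i = \sigma\!\left(\sum_{j=1}^{n_\mathrm{in}} w_{ij}\, x_j + \beta_i\right)
$$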

We find the following differences:

- The KAN layer applies two linear transformations: one inside the non-linearity (to shift-and-squeeze) and one outside. The MLP only transforms once.

- Moreover, the KAN linear transformations are higher-dimensional: there is an extra index that loops over the basis splines. There is a price to pay for increasing the resolution/flexibility of our activation functions: we have to scale that extra dimension up!

- The output range of the KAN layer is not directly controllable: by placing the non-linearity last, the MLP layer allows us fine control over the range of its output; a sigmoid’s output is always in (0,1), the output of ReLU is never negative. The KAN layer sandwiches the non-linearity between two linear transforms, losing this useful property. We need to be extra careful with the initialization of the weights w as a result.
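The cost of the extra spline index can be made tangible with a little arithmetic. The sketch below simply counts stored numbers per layer under the formulation used in this post (three tensors with one entry per input, node and output); the layer sizes are arbitrary, the MLP bias is an assumption, and the counts say nothing about how the paper’s own implementation stores its grid.

```python
def mlp_param_count(n_in: int, n_out: int) -> int:
    # One weight matrix plus one bias vector (the bias is an assumption).
    return n_in * n_out + n_out

def kan_param_count(n_in: int, n_node: int, n_out: int, trainable_only: bool = False) -> int:
    # weights, shift and squeeze each hold n_in * n_node * n_out entries.
    per_tensor = n_in * n_node * n_out
    return per_tensor if trainable_only else 3 * per_tensor

n_in, n_out, n_node = 64, 64, 8                                     # arbitrary example sizes
mlp = mlp_param_count(n_in, n_out)                                   # 4160
kan_total = kan_param_count(n_in, n_node, n_out)                     # 98304
kan_trainable = kan_param_count(n_in, n_node, n_out, trainable_only=True)  # 32768
```

Even when only the weights w are trained, the count grows linearly with the number of spline nodes.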

Let us translate this mathematical (re)formulation of the KAN layer into PyTorch code. Assuming that our `nn.Module` holds

- three `(n_in, n_node, n_out)`-shaped tensors, `weights`, `shift`, `squeeze`, and

- the b-spline basis function `B_func`,

we can implement the forward pass of the layer as

```python
def forward(self, X):
    # X has shape (..., n_in); the parameters have shape (n_in, n_node, n_out)
    X = X.unsqueeze(-1).unsqueeze(-1)  # broadcast x_j to x_{ij}^k
    Z = self.B_func(self.squeeze * (X - self.shift))  # apply the master spline
    Y = torch.sum(self.weights * Z, (-3, -2))  # reduce over the j,k indices
    return Y
```

This setup is quite general; to implement Liu et al.’s KAN, one sets `requires_grad=False` for the squeeze and shift tensors, and of course sets `B_func` to calculate the master basis spline.
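Put together, a complete layer might look like the sketch below. Everything beyond the forward pass is an assumption made for illustration: the class name `KANLayer`, the uniform grid of spline centres on a hypothetical interval `[x_min, x_max]`, the small random initialisation of the weights, and the cardinal cubic b-spline used as `B_func`. It is not the reference implementation from the paper.

```python
import torch
from torch import nn

def cubic_bspline(t):
    # Cardinal cubic b-spline centred at 0, supported on [-2, 2] (assumed basis).
    a = t.abs()
    inner = 2.0 / 3.0 - a**2 + 0.5 * a**3
    outer = (2.0 - a).clamp(min=0.0) ** 3 / 6.0
    return torch.where(a < 1.0, inner, outer)

class KANLayer(nn.Module):
    def __init__(self, n_in, n_node, n_out, x_min=-1.0, x_max=1.0):
        super().__init__()
        shape = (n_in, n_node, n_out)
        # Uniformly spaced spline centres; requires n_node >= 2.
        centres = torch.linspace(x_min, x_max, n_node)
        spacing = (x_max - x_min) / (n_node - 1)
        shift = centres.view(1, n_node, 1).expand(shape).clone()
        squeeze = torch.full(shape, 1.0 / spacing)
        # Fixed grid: shift and squeeze are stored but not trained.
        self.shift = nn.Parameter(shift, requires_grad=False)
        self.squeeze = nn.Parameter(squeeze, requires_grad=False)
        # Only the scale factors are trained by backpropagation.
        self.weights = nn.Parameter(0.1 * torch.randn(shape))
        self.B_func = cubic_bspline

    def forward(self, X):
        X = X.unsqueeze(-1).unsqueeze(-1)                   # (..., n_in, 1, 1)
        Z = self.B_func(self.squeeze * (X - self.shift))    # (..., n_in, n_node, n_out)
        return torch.sum(self.weights * Z, (-3, -2))        # (..., n_out)

layer = KANLayer(n_in=3, n_node=6, n_out=2)
Y = layer(torch.rand(5, 3))   # a batch of 5 inputs gives an output of shape (5, 2)
```

Storing the grid as non-trainable parameters keeps it in the module’s `state_dict`, so it moves with the model across devices while staying out of the optimizer’s reach.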

At this point, and given the extra computational cost compared to an equivalent MLP layer, we might as well go for the full flexibility that this version of the architecture, let us call it the basis- or B-KAN, allows:

- let us train all the parameters of the model, including the shift b and squeeze parameters a, and

- let us consider other possibilities for the non-linear function B.

One could bring back the ReLU for a true one-for-one comparison with the MLPs, or try even more exotic options such as the sin function (see Sitzmann et al.’s SIREN networks). This would be very interesting when it comes to the interpretability of KANs (section 2.5 of the paper), as it allows us to test for a specific function ansatz directly.
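As a rough sketch of what this generalisation looks like in practice, the function below runs the same forward pass with an arbitrary non-linearity and with all three tensors trainable. The helper name `bkan_forward`, the tensor sizes and the random initialisation are placeholders chosen for illustration, not a recommended setup.

```python
import torch

def bkan_forward(X, weights, shift, squeeze, B_func):
    # Same computation as the KAN forward pass, with the non-linearity passed in.
    X = X.unsqueeze(-1).unsqueeze(-1)
    Z = B_func(squeeze * (X - shift))
    return torch.sum(weights * Z, (-3, -2))

torch.manual_seed(0)
shape = (3, 4, 2)  # (n_in, n_node, n_out), arbitrary sizes
weights = torch.randn(shape, requires_grad=True)
shift = torch.randn(shape, requires_grad=True)     # trainable, unlike the fixed grid
squeeze = torch.randn(shape, requires_grad=True)   # trainable, unlike the fixed grid
X = torch.rand(5, 3)

# Swap the master basis spline for other fixed non-linearities.
outputs = {}
for name, B_func in {"relu": torch.relu, "sin": torch.sin}.items():
    outputs[name] = bkan_forward(X, weights, shift, squeeze, B_func)

# Gradients now reach the shift and squeeze tensors as well as the weights.
outputs["sin"].sum().backward()
```

Because the shift and squeeze enter through ordinary differentiable arithmetic, no special handling is needed to train them; whether doing so helps in practice is an open question.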

In short, we disassembled the b-splines at the heart of the KAN layer, rendering its true cost explicit, and then reassembled it into the B-KAN. The generalized B-KAN architecture is

- directly comparable to the MLP,

- easy to implement and train, and

- not limited to spline interpolation.

Personally, I enjoyed this exercise and I’m curious to put the B-KAN through its paces. Is the hype justified? Will KANs (or B-KANs) replace MLPs? I’m not so sure about that, to be honest. Let me know what you think in the comments.